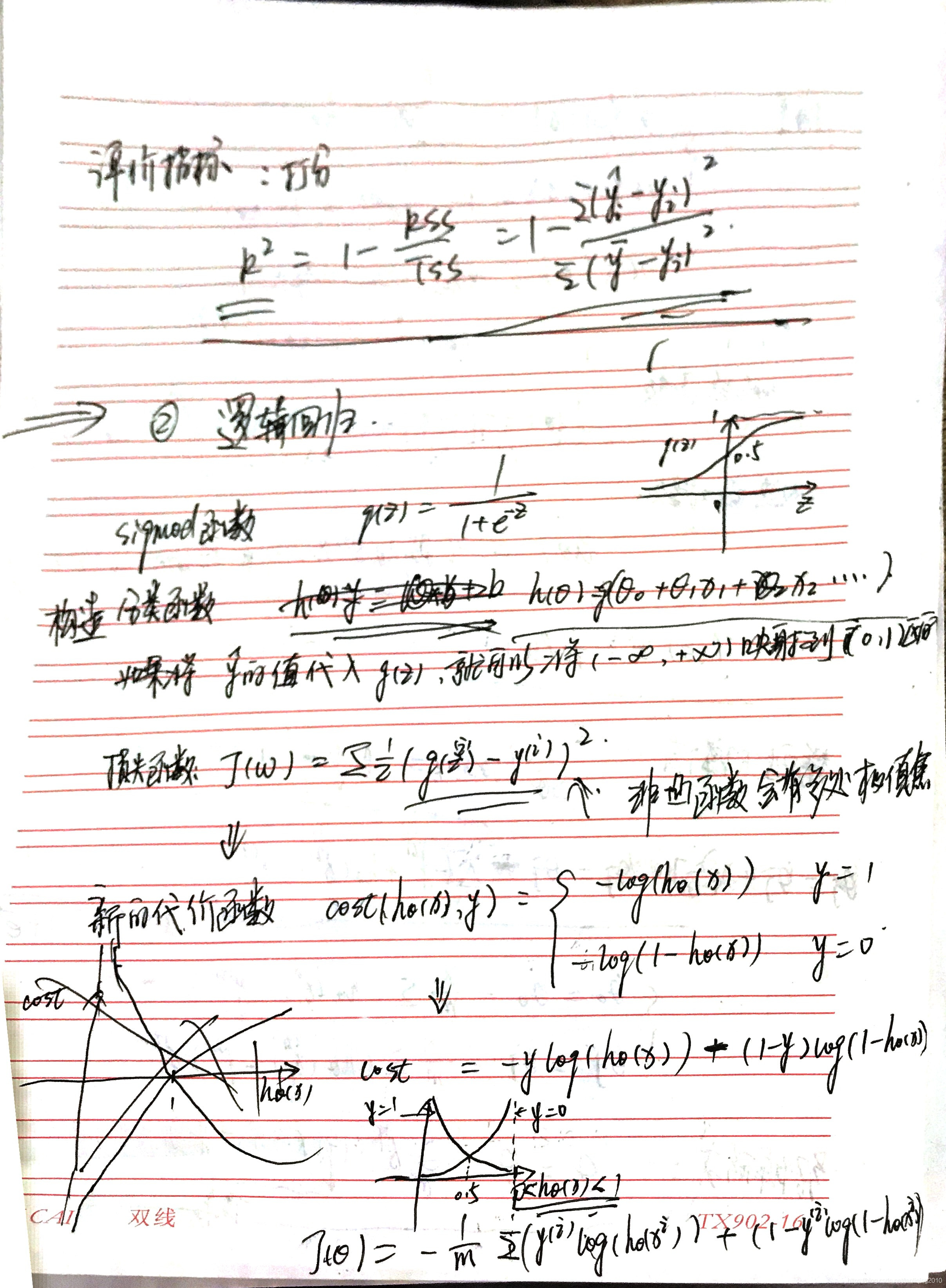

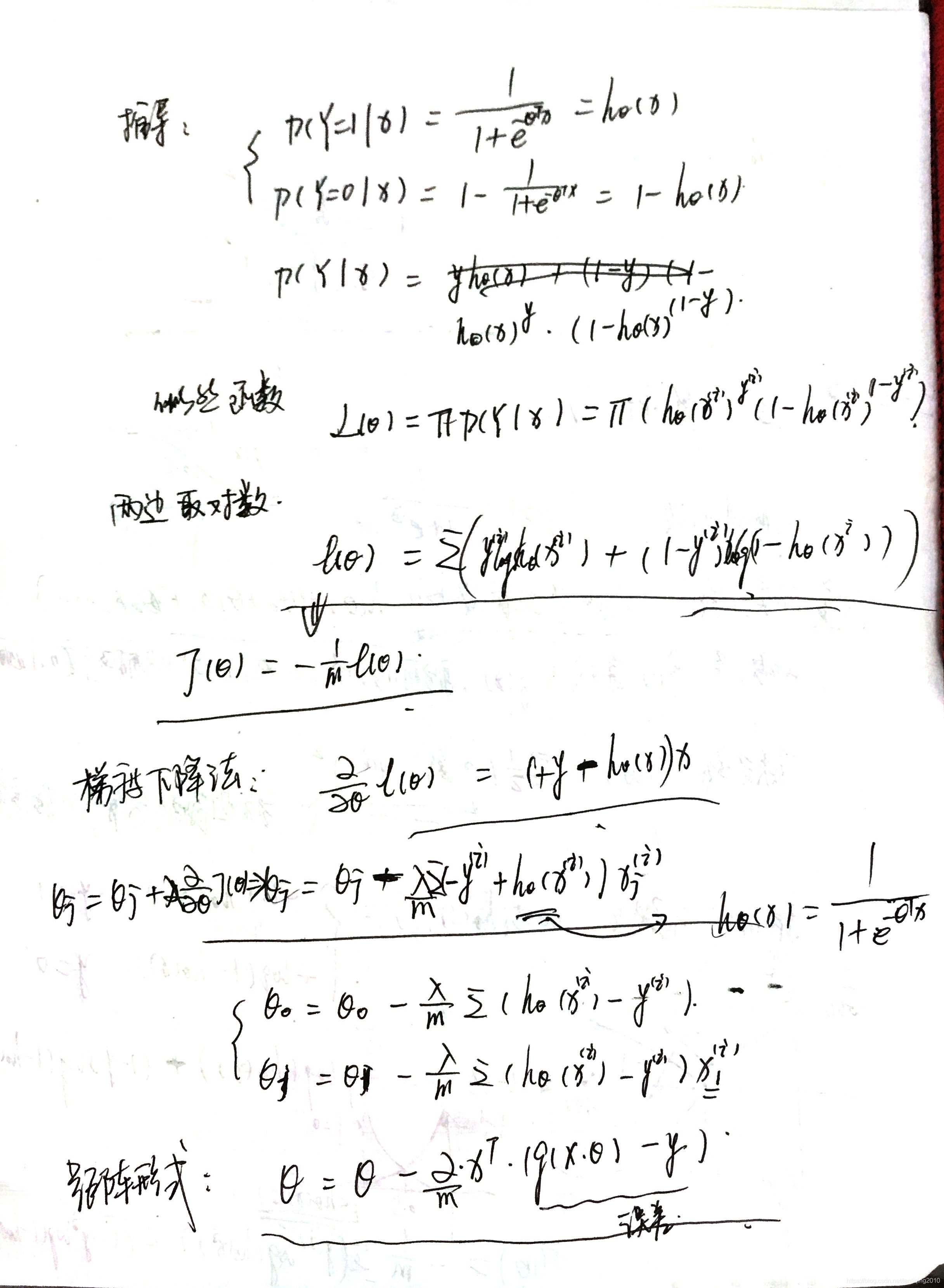

1. 原理推导

相关博客

https://blog.youkuaiyun.com/ligang_csdn/article/details/53838743

https://blog.youkuaiyun.com/t46414704152abc/article/details/79574003

https://www.cnblogs.com/ModifyRong/p/7739955.html

2. 代码实现

def sigmod(x):

return 1/(1 + np.exp(-x))

def compute_loss_theta(data , target , theta):

m,n = data.shape

h = sigmod(np.dot(data , theta))

## 这里是位置相乘,不是矩阵乘法

cost1 = -1 * np.sum(target * np.log(h) + (1-target) * np.log(1 - h)) / m

dW = np.dot(data.T , (h - target) ) / m

return dW , cost1

def train(X, y , alpha =0.01 , repeat=5000):

lost = []

theta = np.ones(X.shape[1]).reshape((-1, 1))

for i in range(0 , repeat):

dW , cost = compute_loss_theta(X , y , theta)

# 梯度下降法

theta = theta - alpha * dW

lost.append(cost)

if i %100 == 0:

print 'i=%d , cost=%f' %(i,cost)

return lost , theta

def predict(x_test , theta):

return x_test.dot(theta)

3. sklearn实现

from sklearn.linear_model.logistic import LogisticRegression

import logic_regression

from sklearn.cross_validation import train_test_split

X , y = logic_regression.loadData()

clss = LogisticRegression()

X_train , X_test , y_train , y_test = train_test_split(X , y , train_size = 0.7)

clss.fit(X_train,y_train)

print clss.coef_

print clss.intercept_

print clss.score(X_test , y_test)

本文深入探讨了逻辑回归的原理,包括sigmoid函数的作用及其损失函数的推导过程,并提供了从零开始的代码实现,最后对比了使用sklearn库进行逻辑回归的简便方法,适合初学者理解和实践。

本文深入探讨了逻辑回归的原理,包括sigmoid函数的作用及其损失函数的推导过程,并提供了从零开始的代码实现,最后对比了使用sklearn库进行逻辑回归的简便方法,适合初学者理解和实践。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?