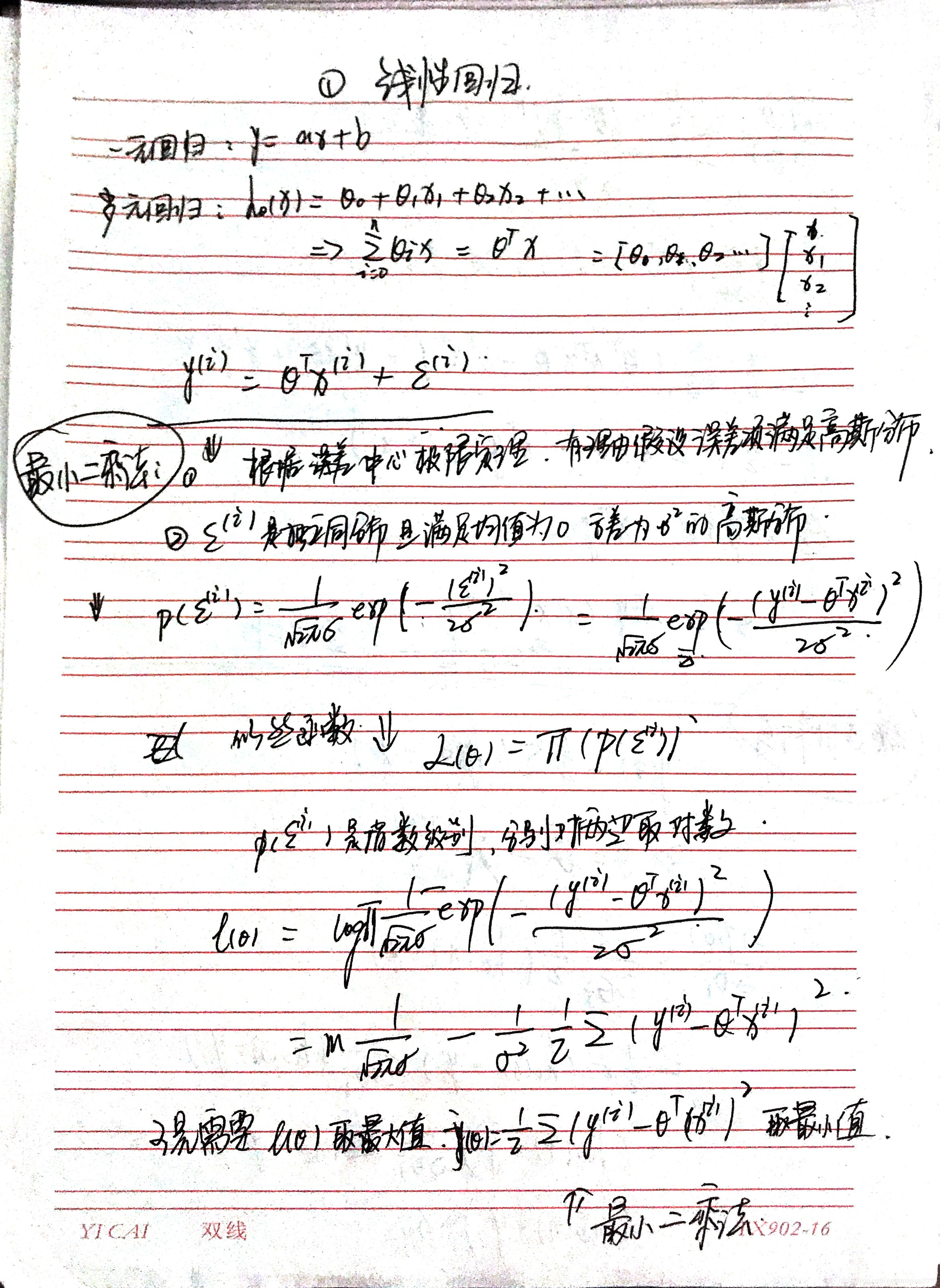

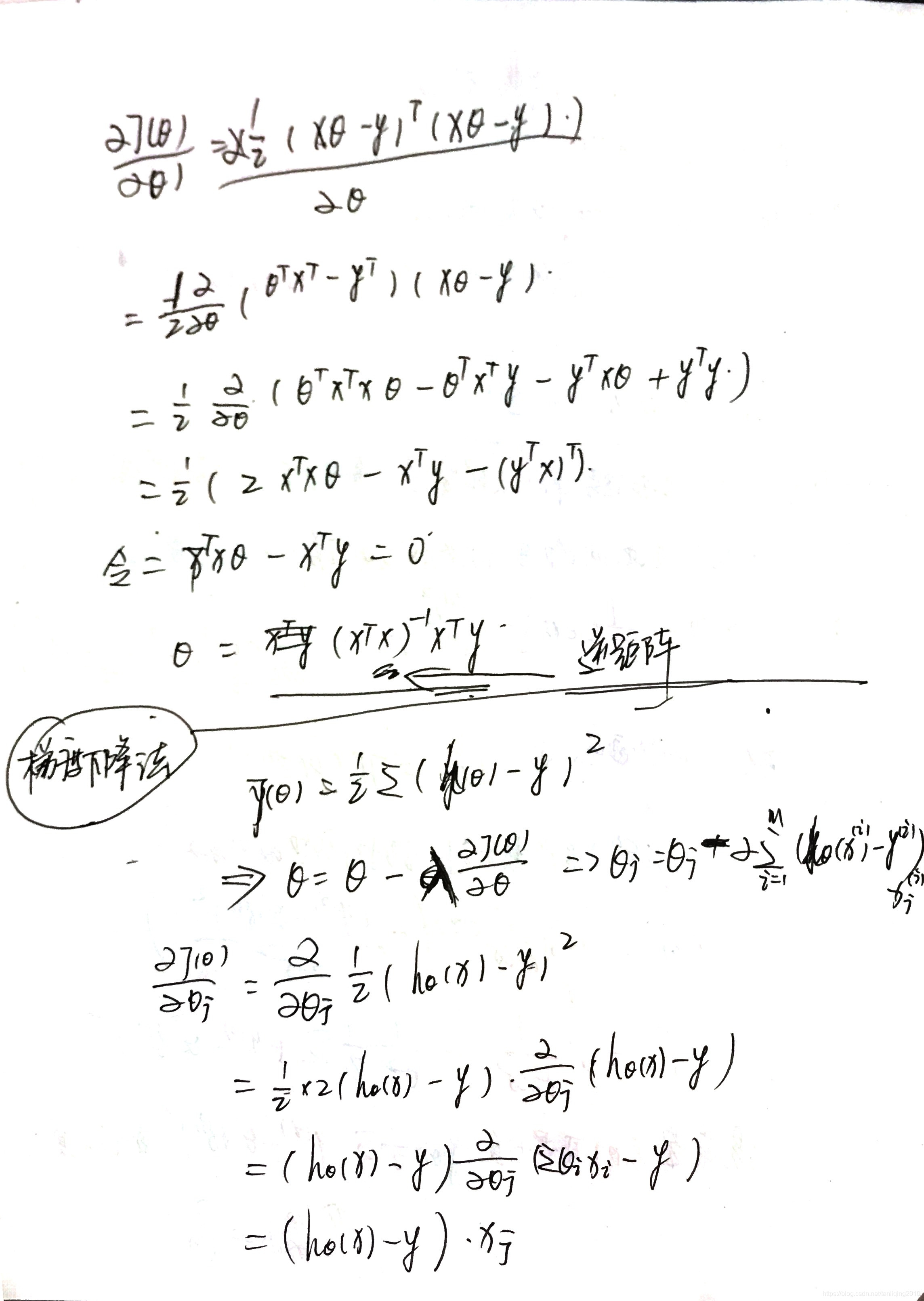

1. Algorithm derivation
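For reference, here is a standard least-squares derivation in the notation the code below uses. The notation is assumed: X is the m×n design matrix with one example per row, y is the target vector, θ is the parameter vector and α is the learning rate. This is a summary under those assumptions, not a transcription of the original derivation.

$$
\begin{aligned}
h_\theta(x) &= \theta^T x \\
J(\theta) &= \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2 = \frac{1}{2m}\lVert X\theta - y\rVert^2 \\
\nabla_\theta J(\theta) &= \frac{1}{m}X^T(X\theta - y) \\
\theta &:= \theta - \alpha\,\frac{1}{m}X^T(X\theta - y) \\
\nabla_\theta J(\theta) = 0 &\;\Longrightarrow\; X^T X\,\theta = X^T y \;\Longrightarrow\; \theta = (X^T X)^{-1}X^T y
\end{aligned}
$$

Gradient descent applies the update rule (fourth line) repeatedly. The matrix implementation solves the last line directly, which requires X^T X to be invertible.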

2. Gradient descent implementation

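```python
import numpy as np

def batchGradientDescent(X, y, theta, alpha, repeat):
    # X: (m, n) design matrix (include a column of ones for the intercept)
    # y: (m,) targets; theta: (n,) initial parameters
    # alpha: learning rate; repeat: number of iterations
    m = len(X)
    cost = []
    for i in range(repeat):
        hyp = np.dot(X, theta)            # predictions X @ theta
        loss = hyp - y                    # residuals
        grad = np.dot(X.T, loss) / m      # gradient of J(theta)
        theta = theta - alpha * grad      # update all parameters at once
        # cost J(theta) = 1/(2m) * sum of squared residuals
        cost1 = 0.5 / m * np.sum(np.square(np.dot(X, theta) - y))
        cost.append(cost1)
    return theta, cost
```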
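`batchGradientDescent` has no separate bias term, so X is expected to already contain a column of ones, and y and theta are treated as 1-D arrays. The sketch below runs the same update rule on hypothetical synthetic data (y = 4 + 3x plus small noise, chosen only for illustration). It then checks the result against NumPy's least-squares solver `np.linalg.lstsq`.

```python
import numpy as np

# Hypothetical synthetic data: y = 4 + 3*x plus a little Gaussian noise
rng = np.random.default_rng(0)
x = rng.uniform(0, 2, size=100)
y = 4 + 3 * x + rng.normal(0, 0.1, size=100)

# The intercept is learned as theta[0], so prepend a column of ones
X = np.column_stack([np.ones_like(x), x])

theta = np.zeros(2)
alpha, repeat = 0.1, 2000   # illustrative learning rate and iteration count
m = len(X)
cost = []
for _ in range(repeat):
    grad = X.T @ (X @ theta - y) / m          # same gradient as batchGradientDescent
    theta = theta - alpha * grad
    cost.append(0.5 / m * np.sum((X @ theta - y) ** 2))

print(theta)  # roughly [4, 3]
# Compare with the closed-form least-squares solution
print(np.linalg.lstsq(X, y, rcond=None)[0])
```

The learning rate and iteration count suit this particular data. With unscaled features or a larger `alpha`, the cost can diverge instead of decreasing, so it is worth plotting or inspecting the returned `cost` list.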

3. Matrix implementation (normal equation)

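```python
import numpy as np

def linear_regression_mat(data, target):
    # Normal equation: theta = (X^T X)^-1 X^T y
    X = np.asarray(data, dtype=float)
    y = np.asarray(target, dtype=float)
    X1 = X.T.dot(X)
    if np.linalg.matrix_rank(X1) < X1.shape[0]:
        # X^T X is singular (e.g. collinear features) and has no inverse;
        # the pseudo-inverse gives the minimum-norm least-squares solution
        return np.linalg.pinv(X).dot(y)
    # Solve (X^T X) theta = X^T y; same result as inv(X1).dot(X.T).dot(y), but more stable
    theta = np.linalg.solve(X1, X.T.dot(y))
    return theta
```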
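When two features are linearly dependent, X^T X is singular and (X^T X)^{-1} does not exist. In floating point, `np.linalg.det` may also return a tiny nonzero value instead of exactly 0 for such a matrix. A rank check or the pseudo-inverse is therefore a more reliable guard than `det == 0`. The sketch below uses a hypothetical, deliberately collinear design matrix, with columns for the intercept, x and 2x. It shows that `np.linalg.pinv` still returns an exact least-squares fit.

```python
import numpy as np

# Hypothetical data: the third column is exactly twice the second,
# so X has rank 2 and X^T X is singular
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
X = np.column_stack([np.ones_like(x), x, 2 * x])
y = 1.0 + 2.0 * x

print(np.linalg.matrix_rank(X))   # 2: three columns, only two independent

# Minimum-norm least-squares solution via the pseudo-inverse
theta = np.linalg.pinv(X) @ y
print(theta)
print(np.allclose(X @ theta, y))  # True: the fit is still exact
```

Any theta with theta[0] = 1 and theta[1] + 2*theta[2] = 2 fits this data equally well. The pseudo-inverse picks the one with the smallest norm.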

4. sklearn implementation

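```python
from sklearn.linear_model import LinearRegression

# X_train, y_train and X_test are assumed to be prepared beforehand
lr = LinearRegression()
lr.fit(X_train, y_train)
y_pred = lr.predict(X_test)

print(lr.coef_)       # learned feature weights
print(lr.intercept_)  # learned bias term
```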
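With its default `fit_intercept=True`, `LinearRegression` fits an ordinary least-squares model with an intercept. It reports the intercept separately in `intercept_`, and `coef_` holds only the feature weights. The NumPy-only sketch below uses hypothetical data and reproduces those two quantities. It centers X and y, solves least squares on the centered data, and then recovers the intercept from the means. It illustrates the math and is not sklearn's internal code.

```python
import numpy as np

# Hypothetical data: two features, y = 0.5 + 1.5*x1 - 2*x2 exactly
X_train = np.array([[1.0, 2.0], [2.0, 0.0], [3.0, 1.0], [4.0, 3.0], [5.0, 5.0]])
y_train = 0.5 + 1.5 * X_train[:, 0] - 2.0 * X_train[:, 1]

# Centering removes the intercept from the least-squares problem
X_mean = X_train.mean(axis=0)
y_mean = y_train.mean()
coef, *_ = np.linalg.lstsq(X_train - X_mean, y_train - y_mean, rcond=None)

# The intercept makes the fitted plane pass through the data means
intercept = y_mean - X_mean @ coef

print(coef)       # analogous to lr.coef_
print(intercept)  # analogous to lr.intercept_
```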

https://github.com/lmm915815/my_ML_python/tree/master/linear_regression

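This post covers three ways to implement linear regression:

- batch gradient descent written from scratch;
- a closed-form solution using matrix operations (the normal equation);
- the ready-made `LinearRegression` model in sklearn.

Comparing them shows how the same least-squares model can be fitted either iteratively or directly, and how much of the work a library can take over.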
