Elasticsearch

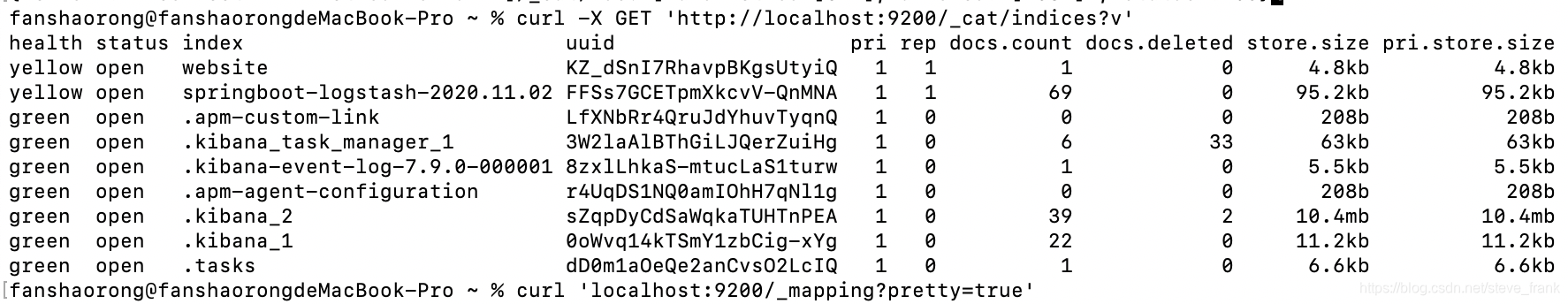

List all indices on the current node

curl -X GET 'http://localhost:9200/_cat/indices?v'

List the mappings of every index, including the types each index contains

curl 'localhost:9200/_mapping?pretty=true'

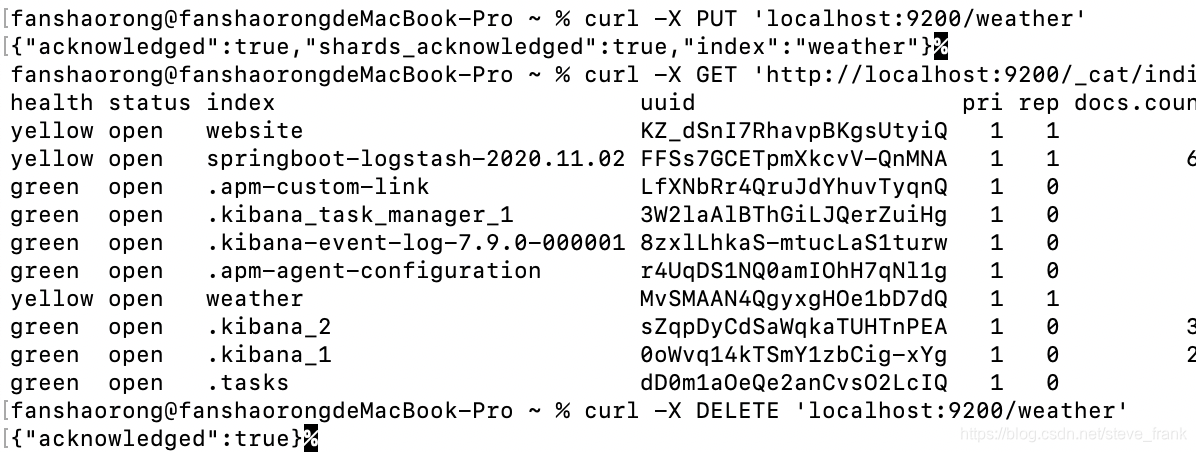

Create an index named weather

curl -X PUT 'localhost:9200/weather'

Delete this index

curl -X DELETE 'localhost:9200/weather'

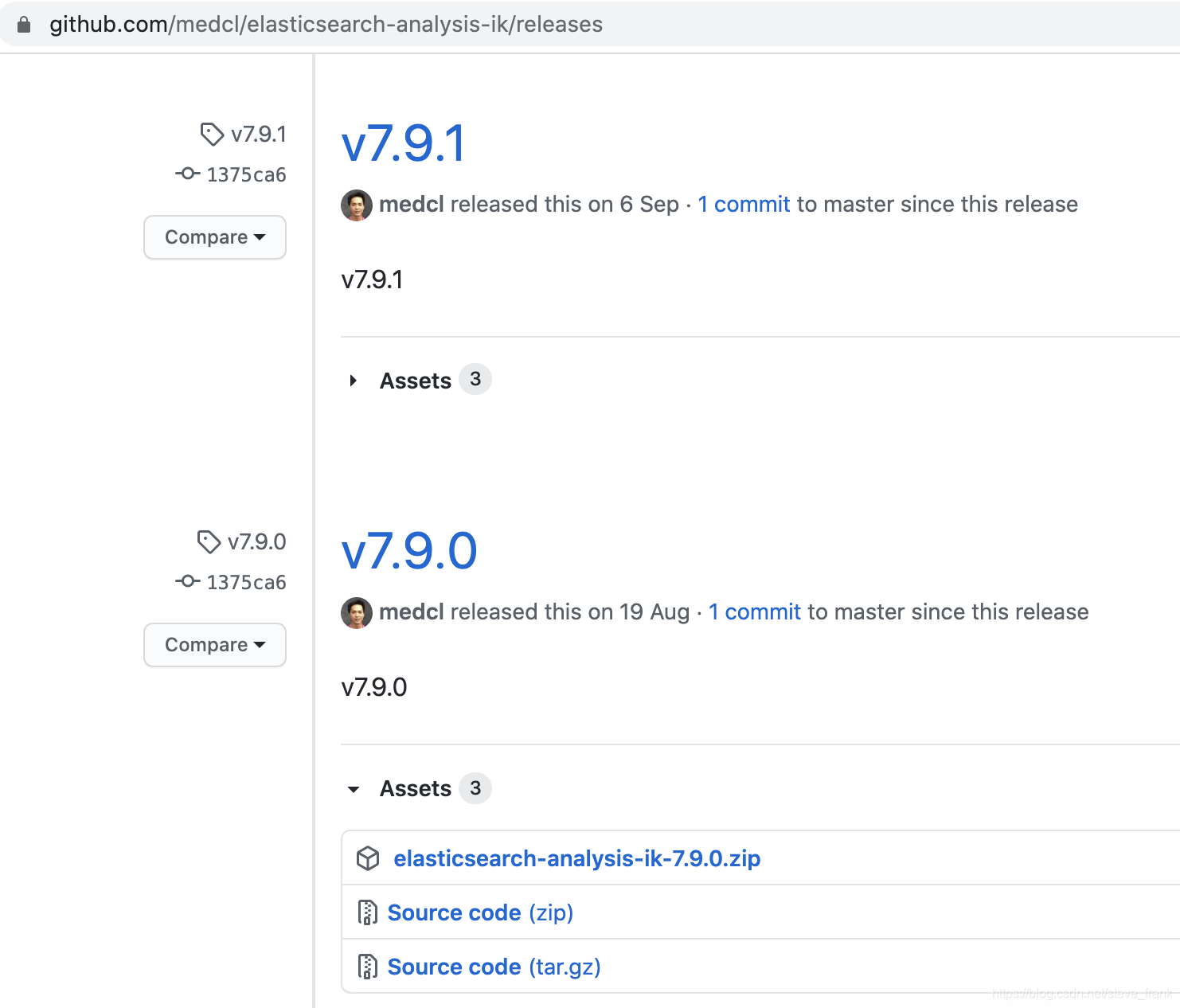

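Chinese word segmentation setup

Download the IK analysis plugin release that matches your Elasticsearch version:

https://github.com/medcl/elasticsearch-analysis-ik/releases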

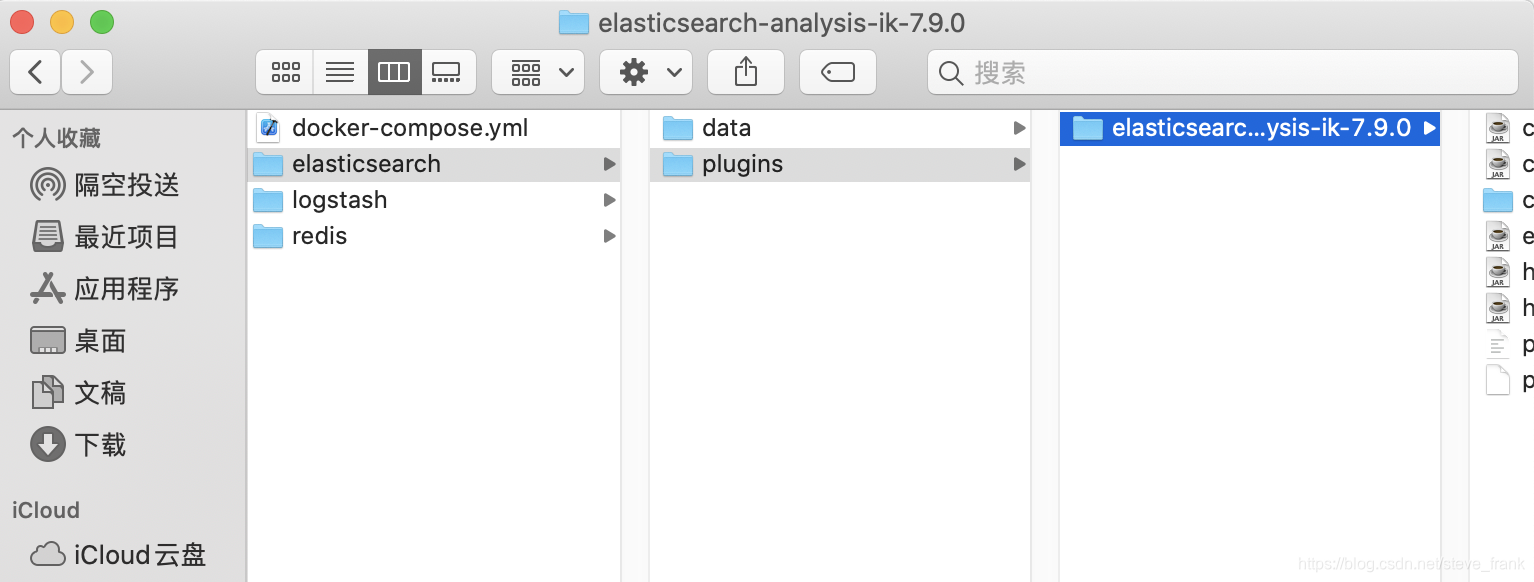

Unzip it into the Elasticsearch plugins directory

Restart Elasticsearch

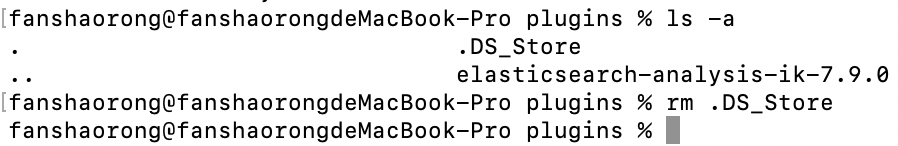

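If startup fails with the error below, a stray macOS .DS_Store file is sitting in the plugins directory; delete it and restart:

Could not load plugin descriptor for plugin directory [.DS_Store]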

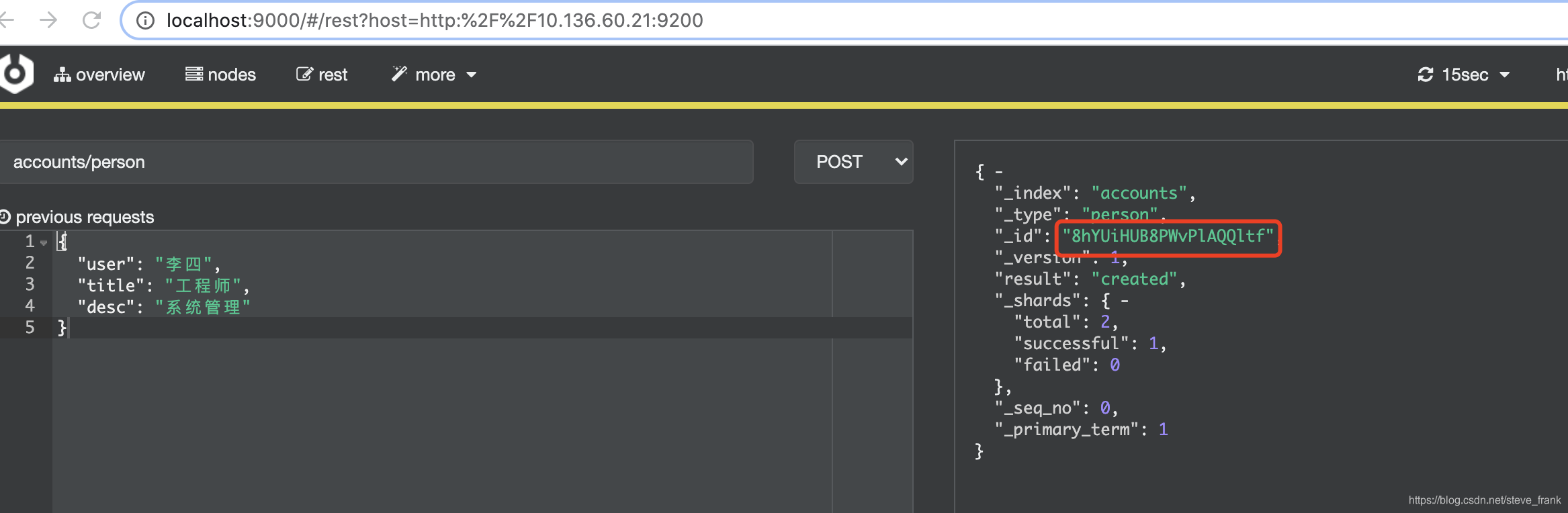
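Elasticsearch treats every entry in its plugins directory as a plugin, so a hidden .DS_Store file left there by macOS Finder produces the error above. The following is a minimal Python sketch for clearing such files before a restart. The function name `remove_ds_store` is an illustrative assumption, not part of the original setup.

```python
from pathlib import Path


def remove_ds_store(plugins_dir):
    """Delete .DS_Store files located directly under plugins_dir.

    Returns the list of paths that were removed. Real plugin
    directories (for example the unzipped IK plugin) are left untouched.
    """
    removed = []
    for entry in Path(plugins_dir).iterdir():
        # Only the stray Finder metadata file is a problem, never a directory.
        if entry.is_file() and entry.name == ".DS_Store":
            entry.unlink()
            removed.append(str(entry))
    return removed
```

Call it with the path of your own installation's plugins directory, then restart Elasticsearch.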

Add a record

curl -H 'Content-type: application/json' -XPOST 'http://10.136.60.21:9200/accounts/person' -d '{
  "user": "李四",
  "title": "工程师",
  "desc": "系统管理"
}'

Or:

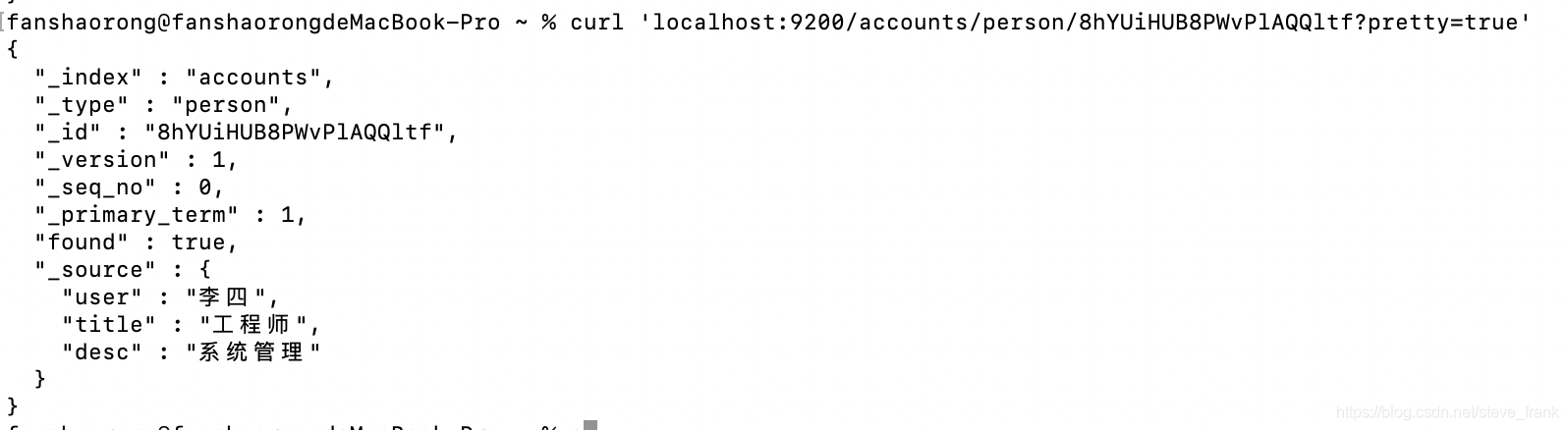

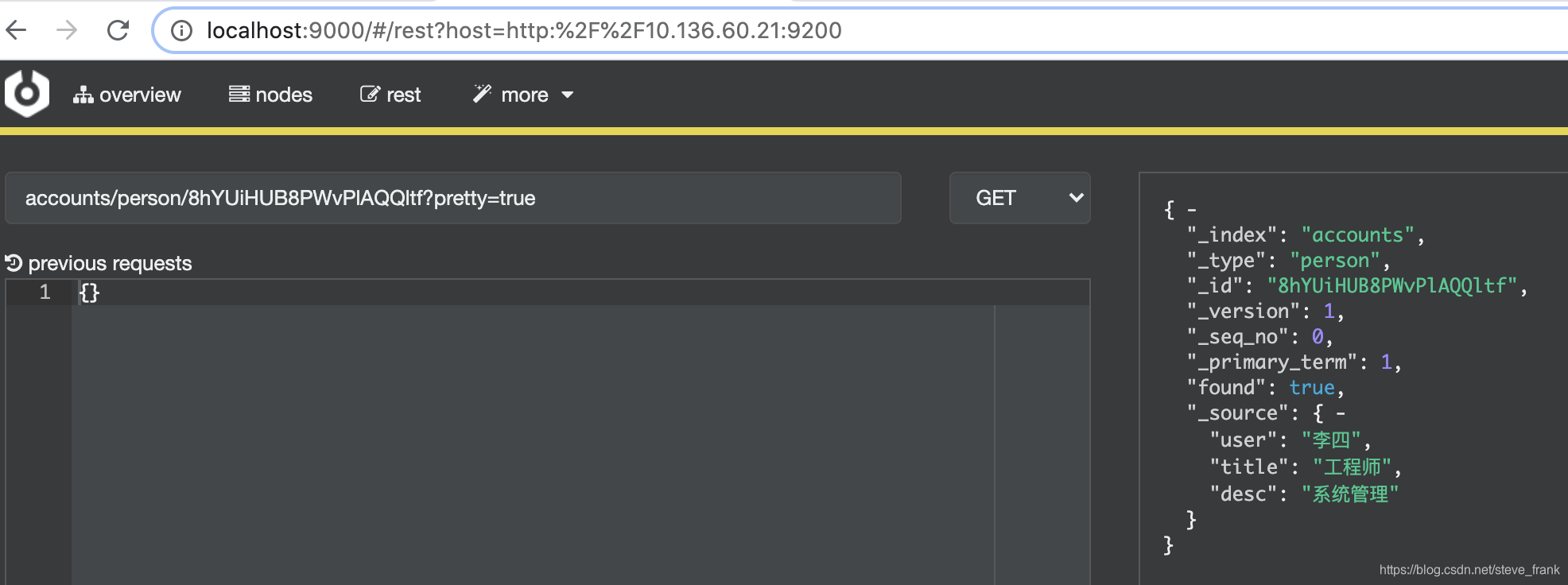

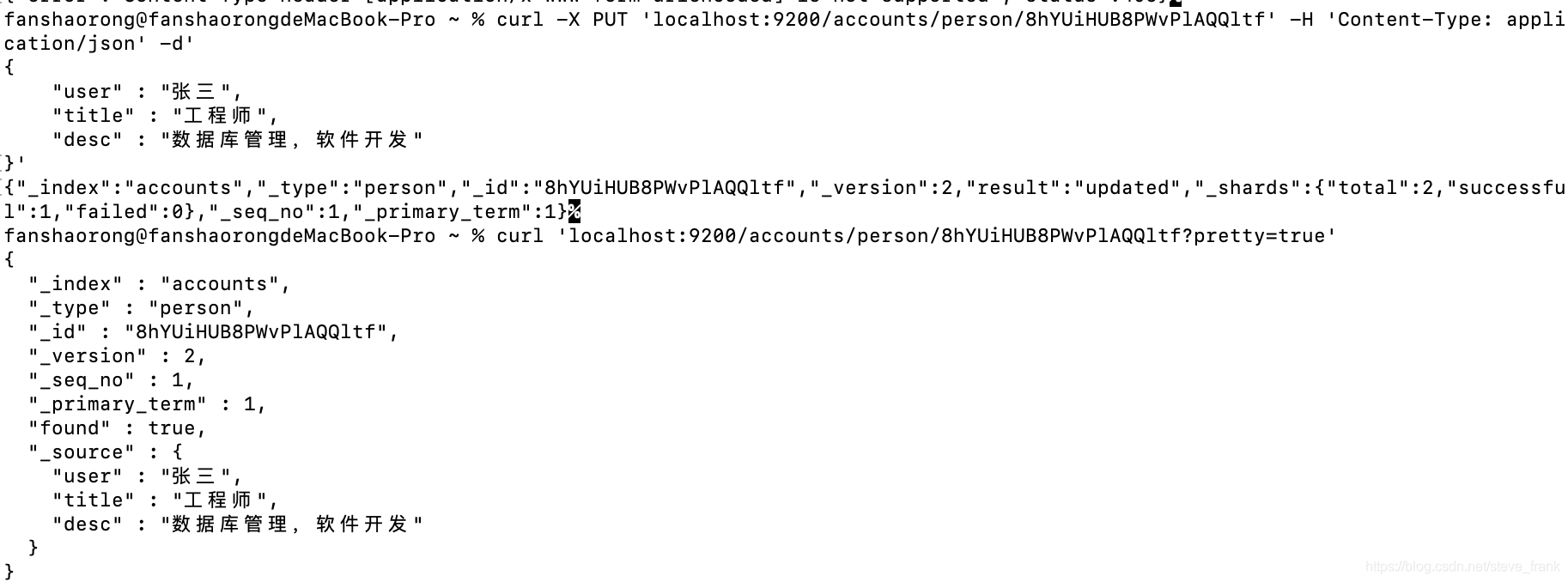
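The same request can also be issued from a script. The sketch below uses only the Python standard library to build the POST from the curl command above. The helper names `build_index_request` and `send` are hypothetical, and `send` assumes the node at 10.136.60.21:9200 is reachable.

```python
import json
import urllib.request


def build_index_request(base_url, index, doc_type, document):
    """Build a POST request that adds `document` and lets Elasticsearch pick the id."""
    url = f"{base_url}/{index}/{doc_type}"
    # ensure_ascii=False keeps the Chinese text readable in the payload.
    body = json.dumps(document, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def send(request):
    """Send a prepared request and decode the JSON response."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_index_request(
    "http://10.136.60.21:9200",
    "accounts",
    "person",
    {"user": "李四", "title": "工程师", "desc": "系统管理"},
)
# result = send(request)  # uncomment when the cluster is reachable
```

The generated document id is returned in the `_id` field of the response; it is the value used in the URLs for viewing, updating, and deleting a record.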

View a record

curl 'localhost:9200/accounts/person/8hYUiHUB8PWvPlAQQltf?pretty=true'

Update a record

curl -X PUT 'localhost:9200/accounts/person/8hYUiHUB8PWvPlAQQltf' -H 'Content-Type: application/json' -d '
{
  "user": "张三",
  "title": "工程师",
  "desc": "数据库管理,软件开发"
}'

Delete a record

curl -X DELETE 'localhost:9200/accounts/person/8hYUiHUB8PWvPlAQQltf'

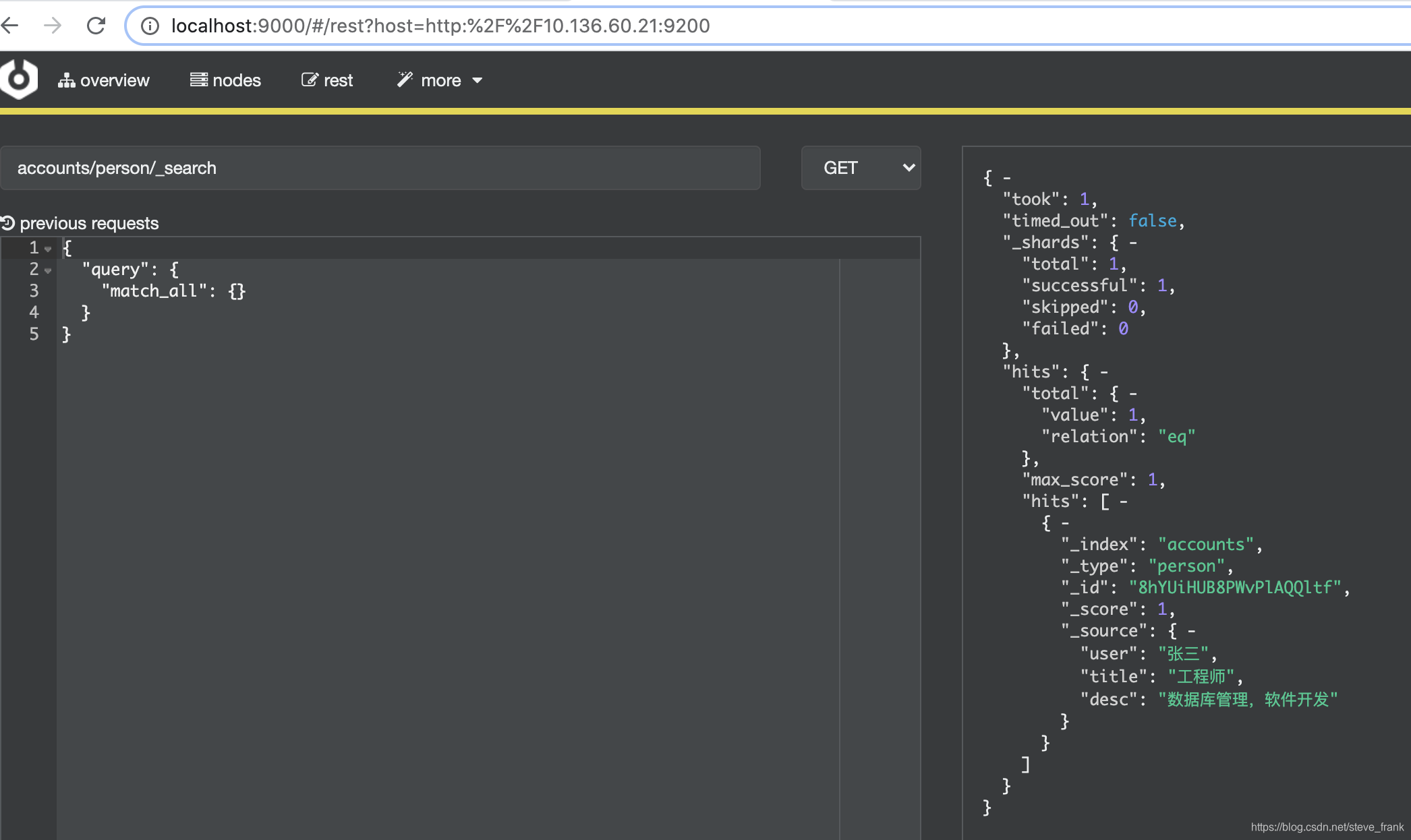

Querying data

Match all documents

The search endpoint has the form /Index/Type/_search:

curl -H 'Content-type: application/json' -XGET 'http://10.136.60.21:9200/accounts/person/_search'

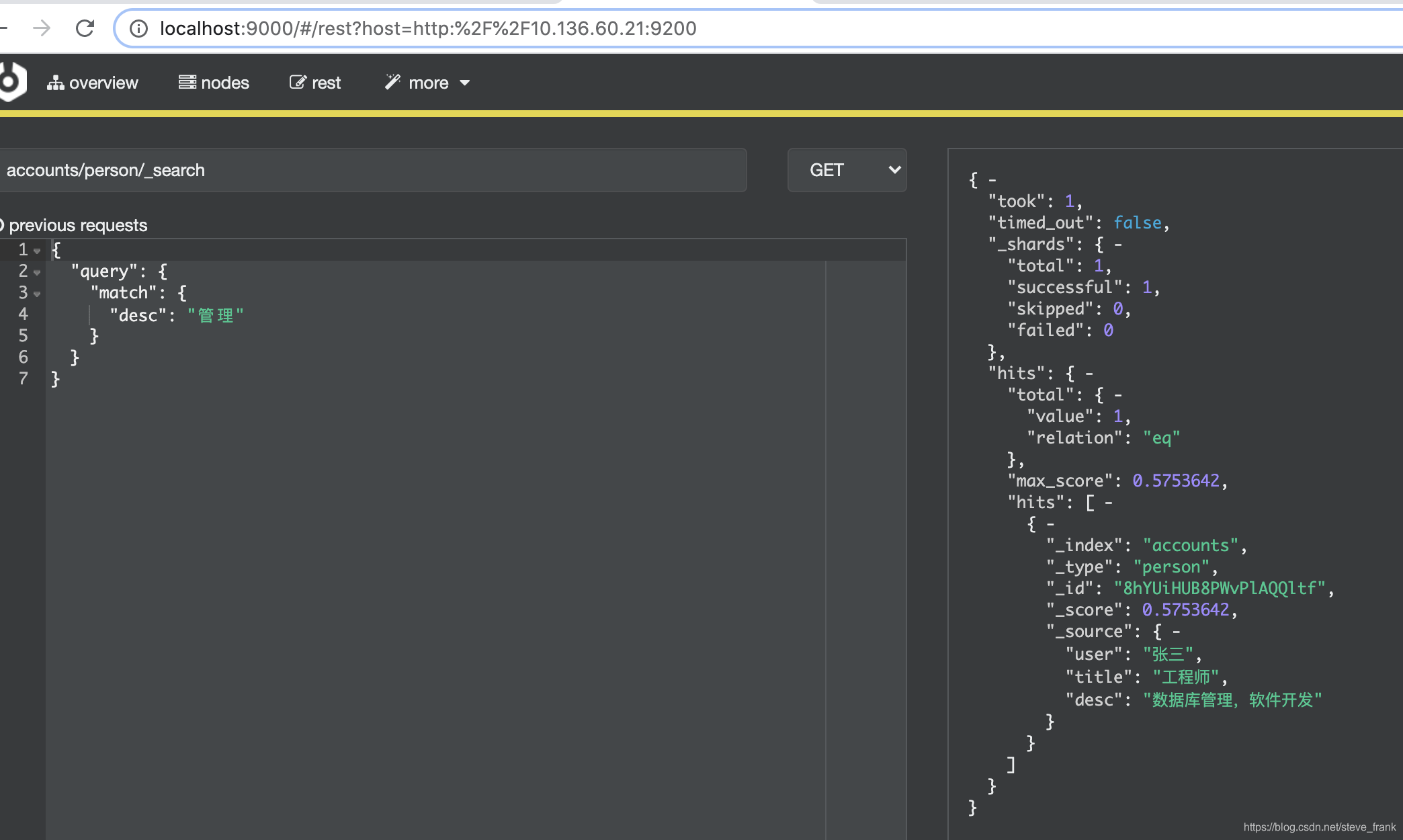

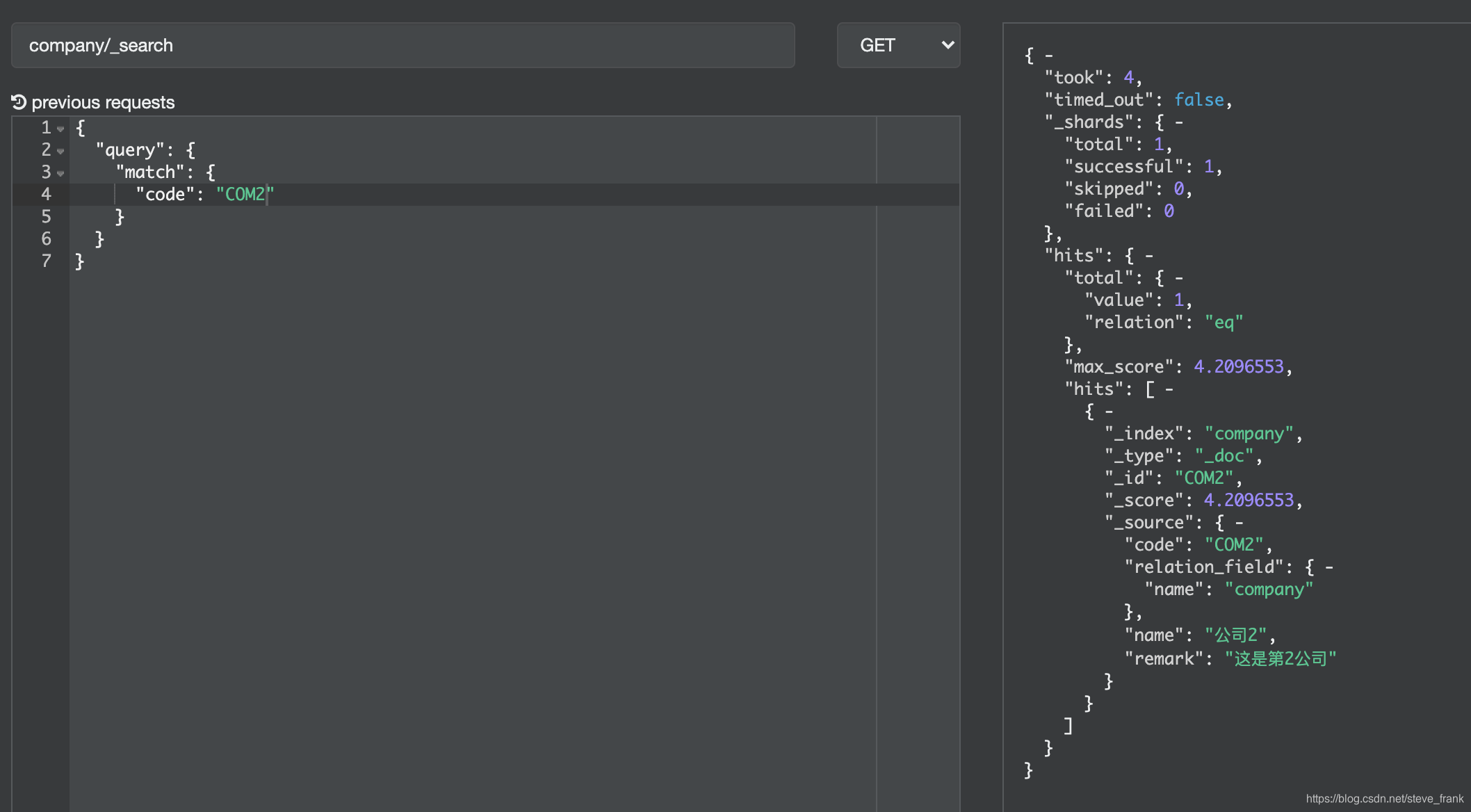

Match query

curl -H 'Content-type: application/json' -XPOST 'http://10.136.60.21:9200/accounts/person/_search' -d '{
  "query": {
    "match": {
      "desc": "管理"
    }
  }
}'

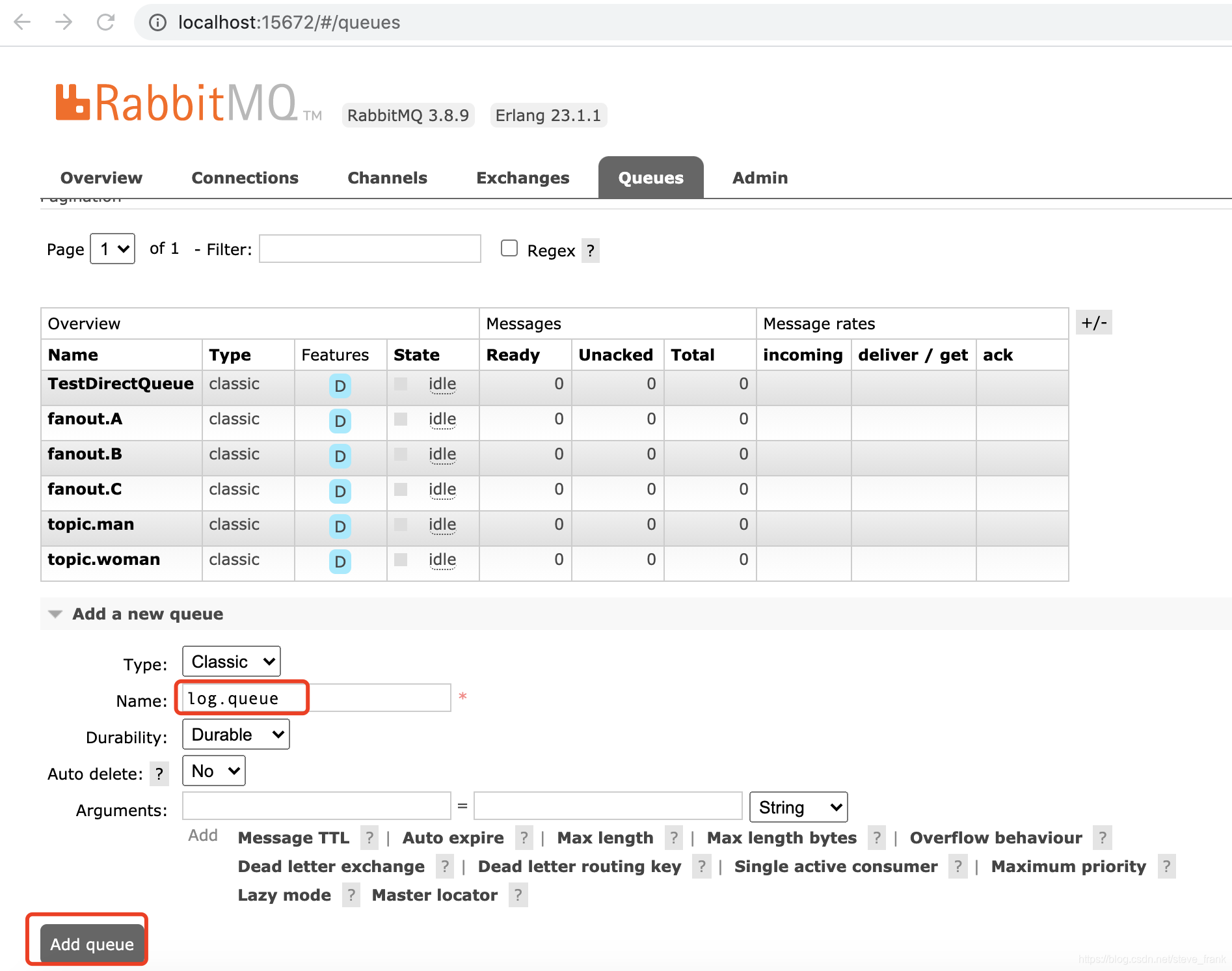

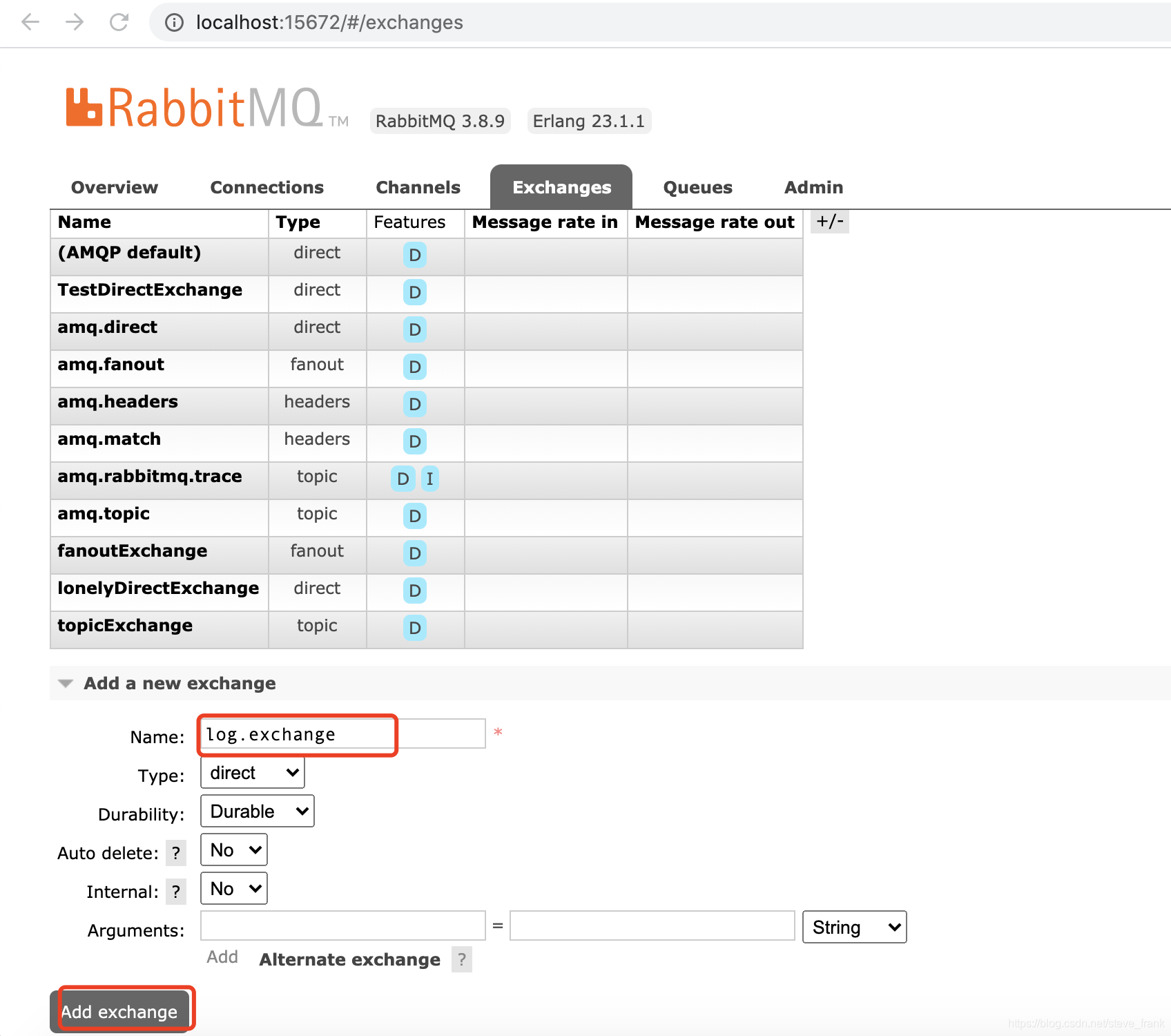

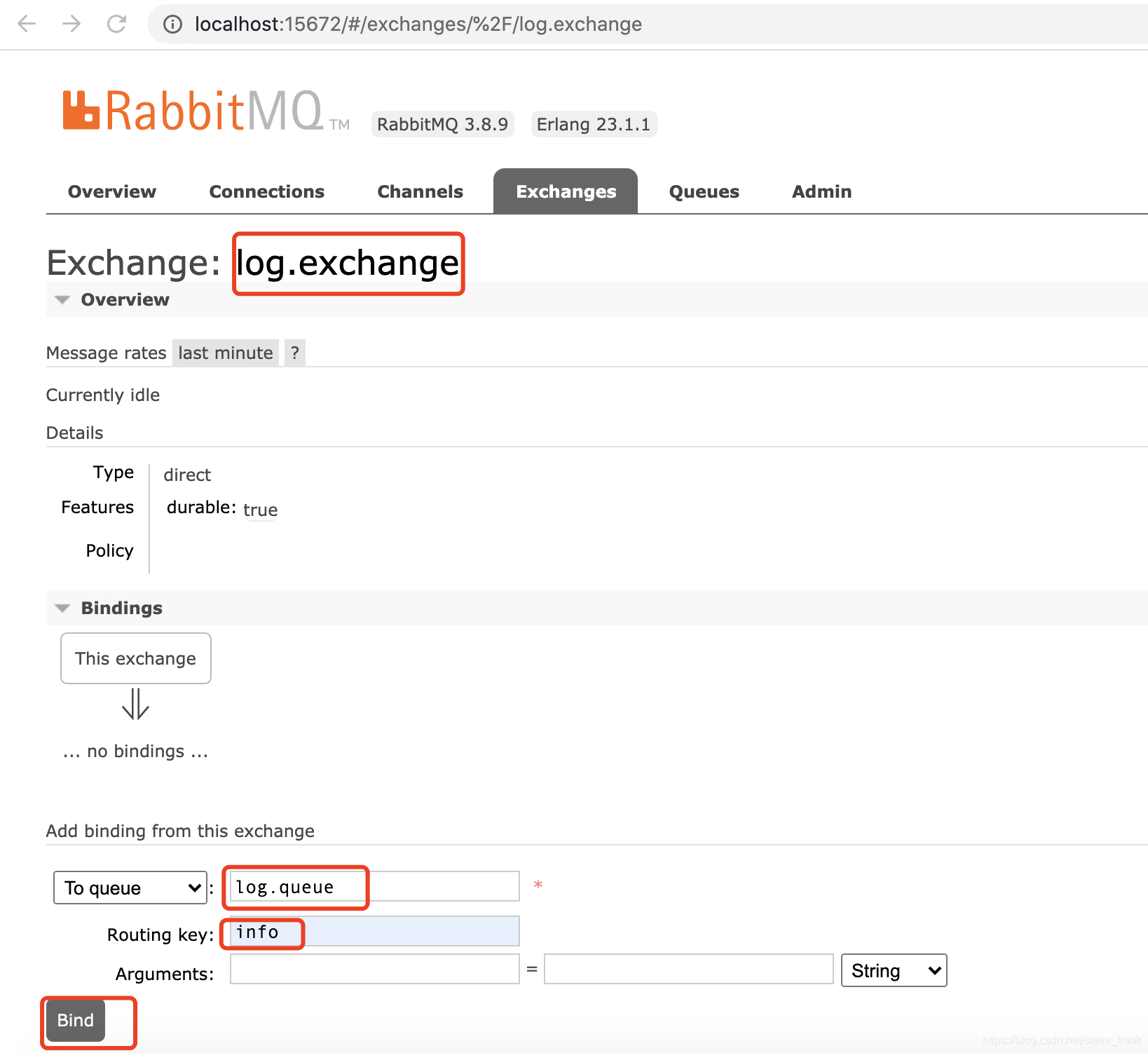
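To make the shape of the request and response explicit, here is a small Python sketch that builds the match query body used above and pulls the stored documents out of a search response. The function names are hypothetical, and the sample response is hand-written for illustration; it is not real output from the cluster.

```python
import json


def match_query(field, text):
    """Build the body of a full-text match query on a single field."""
    return {"query": {"match": {field: text}}}


def extract_sources(response):
    """Return the _source of every hit in a search response."""
    return [hit["_source"] for hit in response.get("hits", {}).get("hits", [])]


body = match_query("desc", "管理")
print(json.dumps(body, ensure_ascii=False))

# Hand-written response with the structure Elasticsearch uses for hits.
sample_response = {
    "hits": {
        "hits": [
            {
                "_index": "accounts",
                "_id": "example-id",
                "_source": {"user": "李四", "title": "工程师", "desc": "系统管理"},
            }
        ]
    }
}
for doc in extract_sources(sample_response):
    print(doc["user"], doc["desc"])
```

Because the match query analyzes the search text, which documents match "管理" depends on the analyzer configured for the desc field.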

Integrating ELK with RabbitMQ

In RabbitMQ, add a queue named log.queue

Add an exchange named log.exchange

Bind log.exchange to log.queue

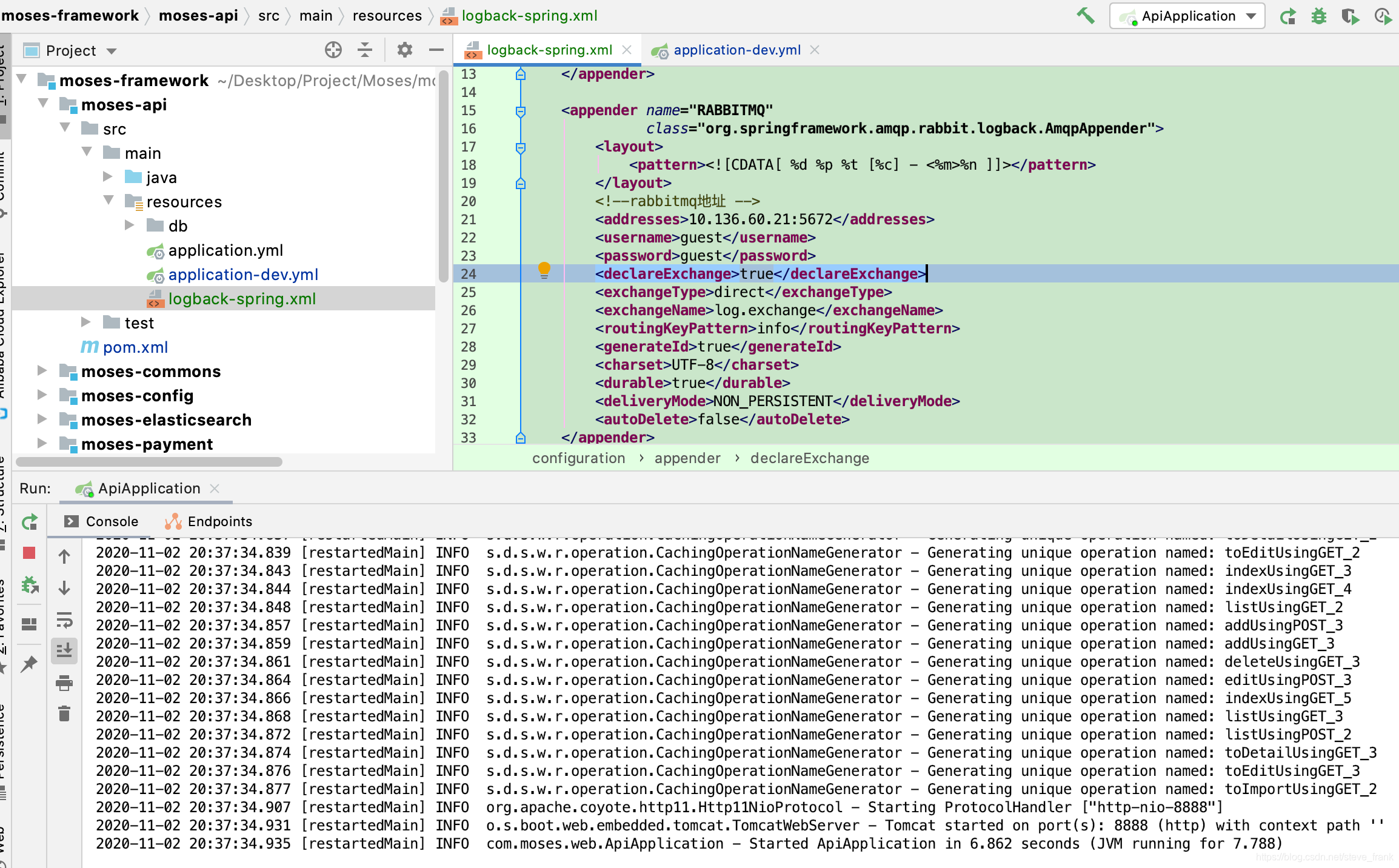
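The three steps above can also be scripted against the RabbitMQ management HTTP API instead of being clicked through in the web console. The sketch below only builds the requests. It rests on several assumptions that the original does not state: the management plugin is enabled on port 15672, the default virtual host "/" is used, the exchange is a durable direct exchange, and the binding key is "info" (taken from the routingKeyPattern in the logback.xml that follows). The helper names are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request


def _api_request(method, base_url, path, body, user, password):
    """Build an authenticated JSON request for the management API."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        base_url + path,
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token,
        },
    )


def build_topology_requests(base_url, vhost="/", queue="log.queue",
                            exchange="log.exchange", routing_key="info",
                            user="guest", password="guest"):
    """Return the requests that declare the queue, the exchange and the binding."""
    v = urllib.parse.quote(vhost, safe="")      # "/" becomes "%2F"
    q = urllib.parse.quote(queue, safe="")
    e = urllib.parse.quote(exchange, safe="")
    return [
        _api_request("PUT", base_url, f"/api/queues/{v}/{q}",
                     {"durable": True, "auto_delete": False}, user, password),
        _api_request("PUT", base_url, f"/api/exchanges/{v}/{e}",
                     {"type": "direct", "durable": True, "auto_delete": False},
                     user, password),
        _api_request("POST", base_url, f"/api/bindings/{v}/e/{e}/q/{q}",
                     {"routing_key": routing_key, "arguments": {}}, user, password),
    ]


requests_to_send = build_topology_requests("http://10.136.60.21:15672")
# for req in requests_to_send:
#     urllib.request.urlopen(req).close()  # uncomment to apply the changes
```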

logback.xml: configure an appender that sends log output to RabbitMQ

<?xml version="1.0" encoding="UTF-8"?>
<configuration debug="false">
    <!-- Directory where log files are stored; do not use relative paths in Logback configuration -->
    <property name="LOG_HOME" value="d:/"/>
    <!-- Console output -->
    <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <!-- Output format: %d date; %thread thread name; %-5level level, left-aligned to 5 characters; %msg log message; %n newline -->
            <pattern>%d{yyyy-MM-dd HH:mm:ss.SSS} [%thread] %-5level %logger{50} - %msg%n</pattern>
        </encoder>
    </appender>
    <appender name="RABBITMQ"
              class="org.springframework.amqp.rabbit.logback.AmqpAppender">
        <layout>
            <pattern><![CDATA[ %d %p %t [%c] - <%m>%n ]]></pattern>
        </layout>
        <!-- RabbitMQ address -->
        <addresses>10.136.60.21:5672</addresses>
        <username>guest</username>
        <password>guest</password>
        <declareExchange>true</declareExchange>
        <exchangeType>direct</exchangeType>
        <exchangeName>log.exchange</exchangeName>
        <routingKeyPattern>info</routingKeyPattern>
        <generateId>true</generateId>
        <charset>UTF-8</charset>
        <durable>true</durable>
        <deliveryMode>NON_PERSISTENT</deliveryMode>
        <autoDelete>false</autoDelete>
    </appender>
    <logger name="com.light.rabbitmq" level="info" additivity="false">
        <appender-ref ref="STDOUT"/>
        <appender-ref ref="RABBITMQ"/>
    </logger>
    <!-- Log output level; level defaults to DEBUG. root is itself a logger, the root of the logger hierarchy -->
    <root level="INFO">
        <appender-ref ref="STDOUT"/>
        <appender-ref ref="RABBITMQ"/>
    </root>
</configuration>

logstash.conf: read the data from RabbitMQ and output it to Elasticsearch

input {
  rabbitmq {
    host => "10.136.60.21"
    port => 5672
    user => "guest"
    password => "guest"
    queue => "log.queue"
    durable => true
    codec => json
    type => "result"
  }
}

output {
  elasticsearch {
    hosts => "es:9200"
    index => "springboot-logstash-%{+YYYY.MM.dd}"
  }
}

Start the project

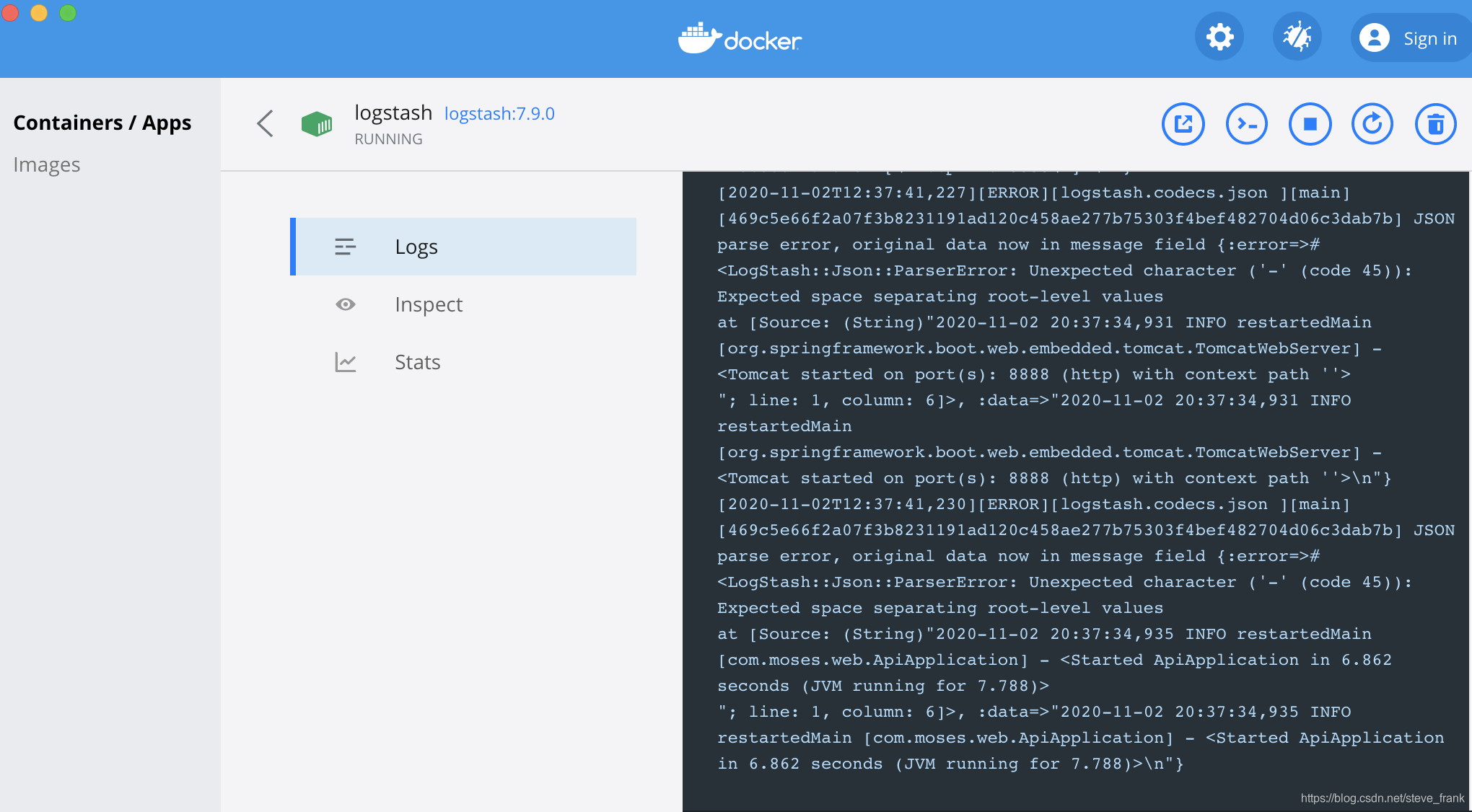

Logstash log

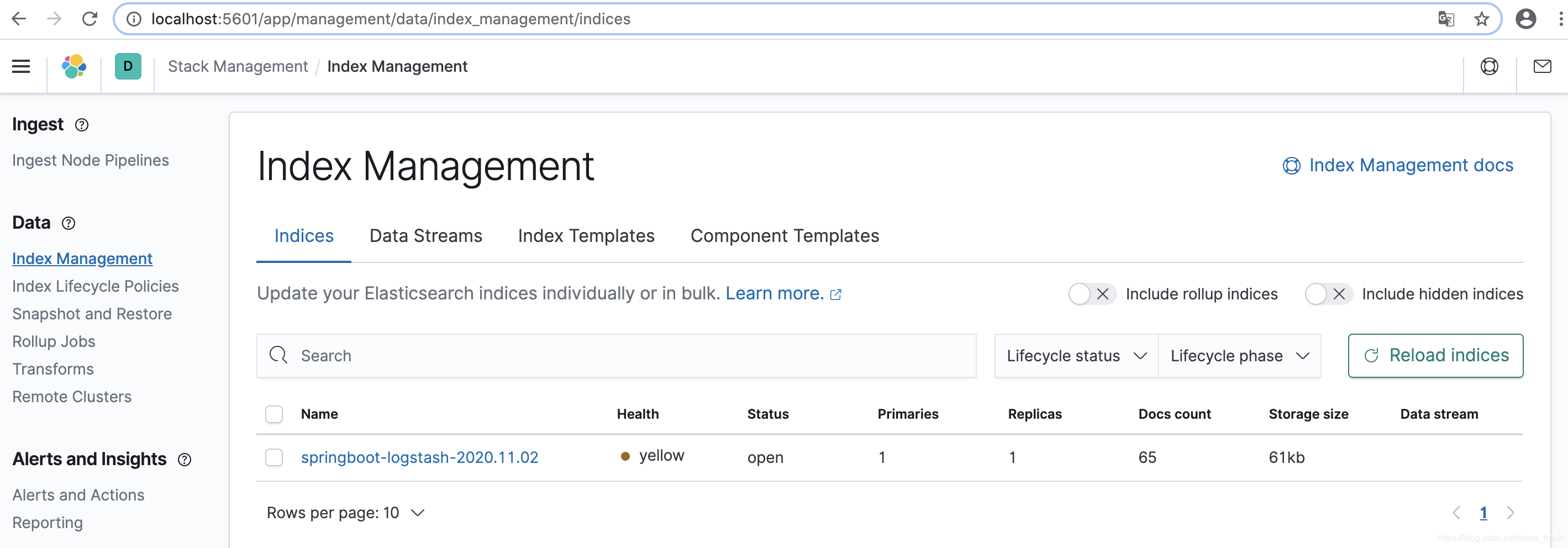

Viewing the data in Kibana

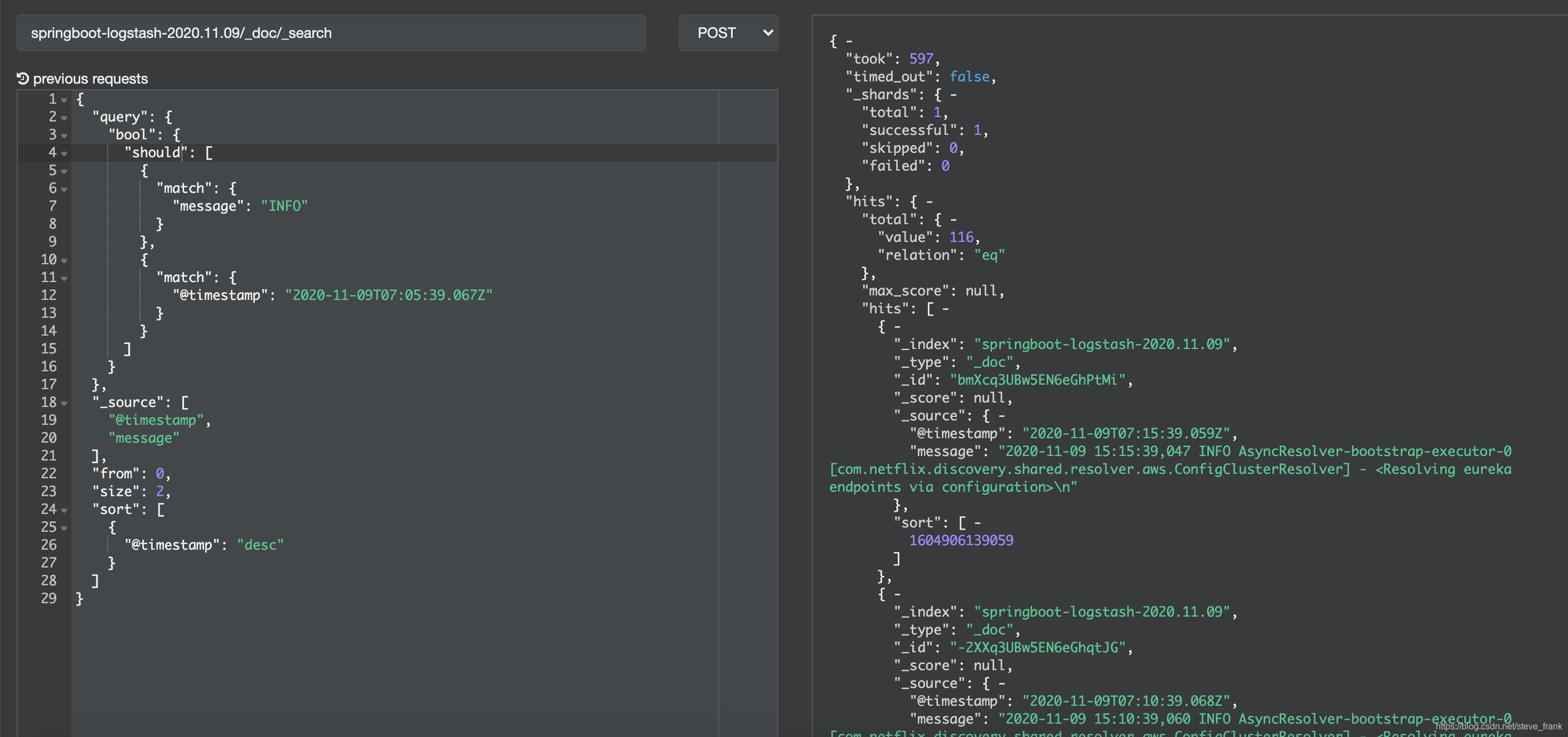
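The index option in the Logstash output creates one index per day, named from each event's @timestamp in UTC. That is why the queries that follow target springboot-logstash-2020.11.09. A short Python sketch of the naming rule is given here; the function name `daily_index` is hypothetical, and it expects a timezone-aware datetime.

```python
from datetime import datetime, timedelta, timezone


def daily_index(timestamp, prefix="springboot-logstash-"):
    """Return the daily index name for a timezone-aware event timestamp.

    Mirrors the "%{+YYYY.MM.dd}" date pattern, which Logstash evaluates
    against the event's @timestamp in UTC.
    """
    return prefix + timestamp.astimezone(timezone.utc).strftime("%Y.%m.%d")


event_time = datetime(2020, 11, 9, 7, 5, 39, tzinfo=timezone.utc)
print(daily_index(event_time))

# An event logged early on 10 November in UTC+8 still belongs to the
# 9 November index, because the date is taken in UTC.
local_time = datetime(2020, 11, 10, 7, 0, tzinfo=timezone(timedelta(hours=8)))
print(daily_index(local_time))
```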

Querying with Cerebro

OR (should)

curl -H 'Content-type: application/json' -XPOST 'http://172.20.10.2:9200/springboot-logstash-2020.11.09/_doc/_search' -d '{
  "query": {
    "bool": {
      "should": [
        {
          "match": {
            "message": "INFO"
          }
        },
        {
          "match": {
            "@timestamp": "2020-11-09T07:05:39.067Z"
          }
        }
      ]
    }
  },
  "_source": [
    "@timestamp",
    "message"
  ],
  "from": 0,
  "size": 2,
  "sort": [
    {
      "@timestamp": "desc"
    }
  ]
}'

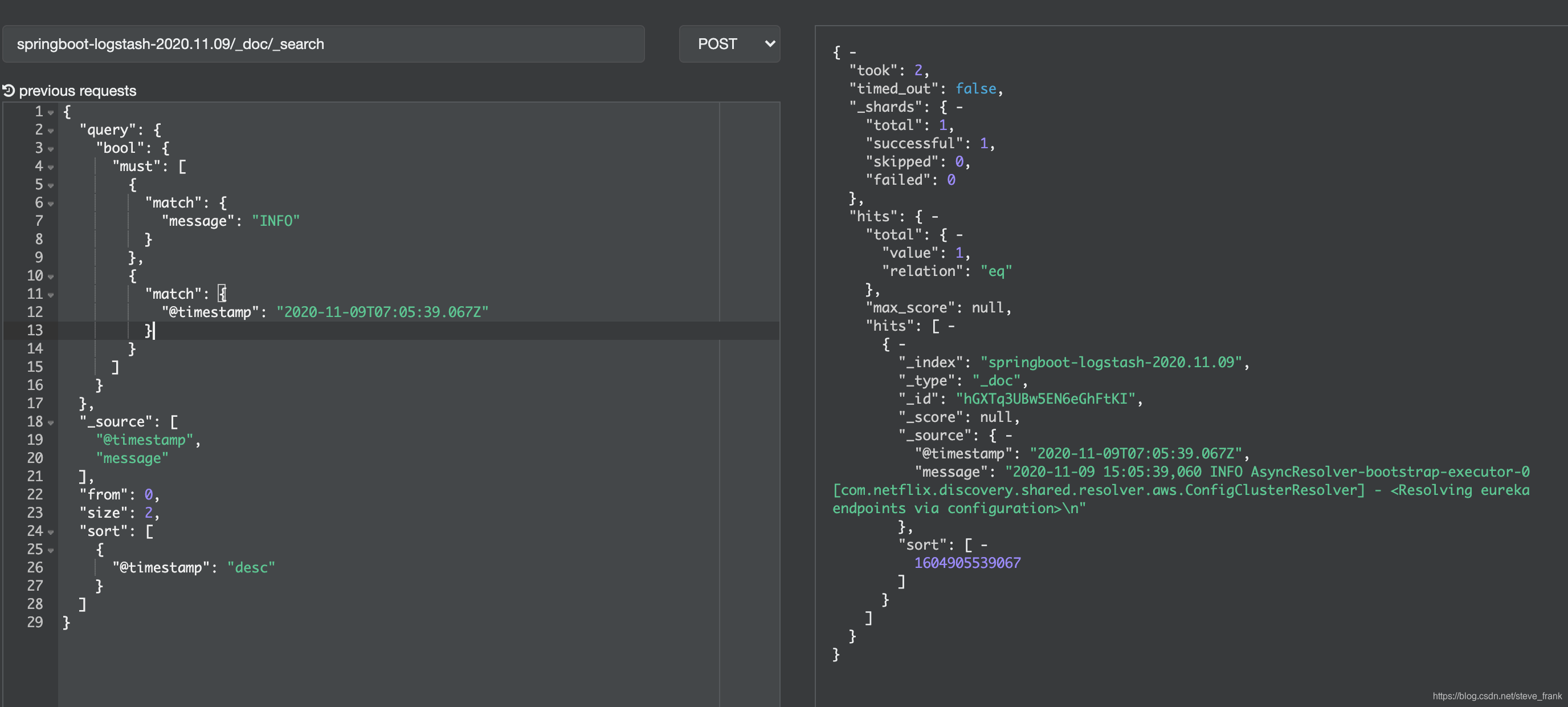

AND (must)

curl -H 'Content-type: application/json' -XPOST 'http://172.20.10.2:9200/springboot-logstash-2020.11.09/_doc/_search' -d '{
  "query": {
    "bool": {
      "must": [
        {
          "match": {
            "message": "INFO"
          }
        },
        {
          "match": {
            "@timestamp": "2020-11-09T07:05:39.067Z"
          }
        }
      ]
    }
  },
  "_source": [
    "@timestamp",
    "message"
  ],
  "from": 0,
  "size": 2,
  "sort": [
    {
      "@timestamp": "desc"
    }
  ]
}'

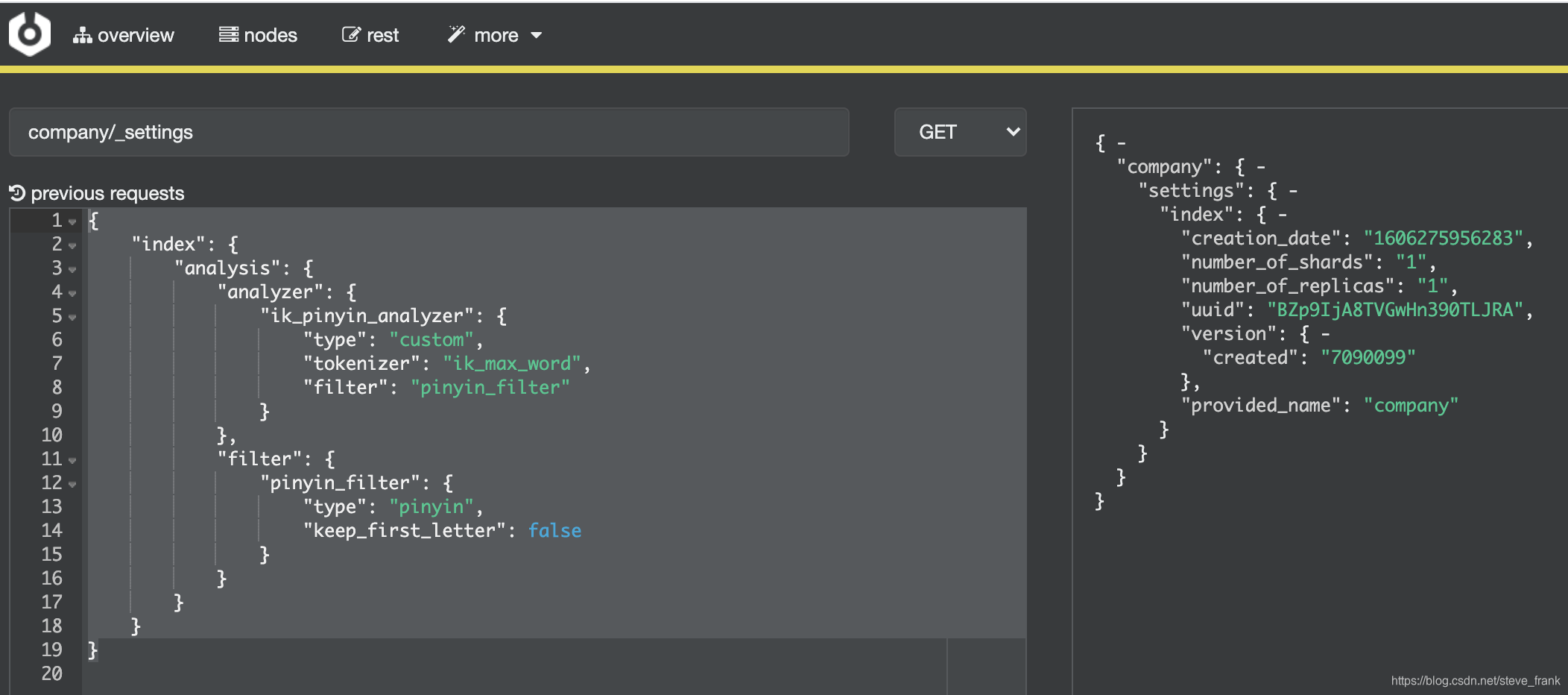
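The two requests above differ only in the bool clause: with should, a document qualifies when at least one match succeeds (OR); with must, every match has to succeed (AND). The Python sketch below builds both bodies from one hypothetical helper, `bool_query`, so the difference is easy to see. It only constructs the JSON and sends nothing.

```python
import json


def bool_query(occur, clauses, source=None, start=0, size=10, sort=None):
    """Build a bool search body.

    occur   -- "should" for OR semantics, "must" for AND semantics
    clauses -- list of (field, value) pairs, each turned into a match query
    """
    if occur not in ("must", "should", "must_not", "filter"):
        raise ValueError(f"unsupported bool clause: {occur}")
    body = {
        "query": {"bool": {occur: [{"match": {f: v}} for f, v in clauses]}},
        "from": start,
        "size": size,
    }
    if source:
        body["_source"] = list(source)  # restrict the returned fields
    if sort:
        body["sort"] = sort
    return body


clauses = [("message", "INFO"), ("@timestamp", "2020-11-09T07:05:39.067Z")]
common = dict(source=["@timestamp", "message"], size=2,
              sort=[{"@timestamp": "desc"}])

or_body = bool_query("should", clauses, **common)
and_body = bool_query("must", clauses, **common)
print(json.dumps(or_body, indent=2))
```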

Analyzer settings

{
  "index": {
    "analysis": {
      "analyzer": {
        "ik_pinyin_analyzer": {
          "type": "custom",
          "tokenizer": "ik_max_word",
          "filter": "pinyin_filter"
        }
      },
      "filter": {
        "pinyin_filter": {
          "type": "pinyin",
          "keep_first_letter": false
        }
      }
    }
  }
}
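These settings combine the ik_max_word tokenizer from the IK plugin with a pinyin token filter, which additionally requires the elasticsearch-analysis-pinyin plugin to be installed. As a sketch of how they might be applied and checked, the Python snippet below wraps them into an index-creation body and builds a body for the _analyze API. The function names and the sample text are hypothetical, and nothing is sent to the cluster.

```python
import json

# The analysis settings from above, as a Python dict.
ANALYSIS_SETTINGS = {
    "index": {
        "analysis": {
            "analyzer": {
                "ik_pinyin_analyzer": {
                    "type": "custom",
                    "tokenizer": "ik_max_word",
                    "filter": "pinyin_filter",
                }
            },
            "filter": {
                "pinyin_filter": {"type": "pinyin", "keep_first_letter": False}
            },
        }
    }
}


def create_index_body():
    """Body for PUT /<index>: the settings go under the "settings" key."""
    return {"settings": ANALYSIS_SETTINGS}


def analyze_body(text, analyzer="ik_pinyin_analyzer"):
    """Body for POST /<index>/_analyze, to inspect the tokens the analyzer emits."""
    return {"analyzer": analyzer, "text": text}


print(json.dumps(create_index_body(), ensure_ascii=False, indent=2))
print(json.dumps(analyze_body("系统管理"), ensure_ascii=False))
```

Sending the second body to the _analyze endpoint of the new index is a quick way to confirm that both plugins are loaded before indexing real documents.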
