ECCV18

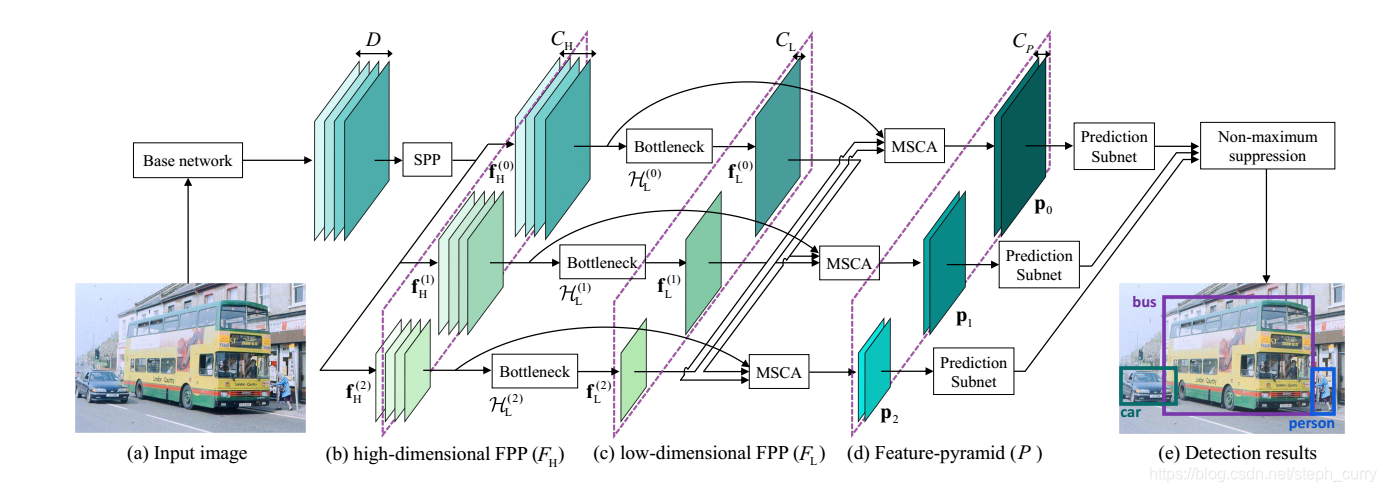

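Mainstream detectors such as SSD typically use a single network to generate features whose channel count increases layer by layer. The semantic gap between layers is then large, which limits detection accuracy, especially for small objects. The authors argue that increasing the width of the network is more effective than increasing its depth.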

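First, SPP (spatial pyramid pooling) is used to generate features at different resolutions. These features are generated in parallel, so features of different sizes can be regarded as carrying similar semantic information. The features are then resized to a common size and fused to obtain each level of the feature pyramid.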
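The parallel pooling step can be illustrated with a minimal pure-Python sketch. It is not the paper's implementation: the choice of average pooling, the window sizes of 1, 2, 4, and the helper names `avg_pool` and `spp_pool` are all assumptions made for illustration. The point it shows is that every level is pooled directly from the same input rather than from the previous level.

```python
def avg_pool(fmap, k):
    """Average-pool a 2D map (list of rows) with a k x k window and stride k."""
    h, w = len(fmap), len(fmap[0])
    out = []
    for i in range(0, h - k + 1, k):
        row = []
        for j in range(0, w - k + 1, k):
            window = [fmap[i + di][j + dj] for di in range(k) for dj in range(k)]
            row.append(sum(window) / (k * k))
        out.append(row)
    return out


def spp_pool(fmap, num_levels):
    """Pool one map into num_levels resolutions.

    Assumption: level n uses a window of size 2**n. Each level is computed
    from the same input, so the levels are independent of one another.
    """
    return [avg_pool(fmap, 2 ** n) for n in range(num_levels)]


# A toy 4x4 single-channel map with values 0..15.
fmap = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
levels = spp_pool(fmap, 3)
sizes = [(len(m), len(m[0])) for m in levels]
print(sizes)  # [(4, 4), (2, 2), (1, 1)]
```

Because no level depends on another, the three pooled maps all sit at the same depth in the network, which is the intuition behind saying they carry similar semantic information.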

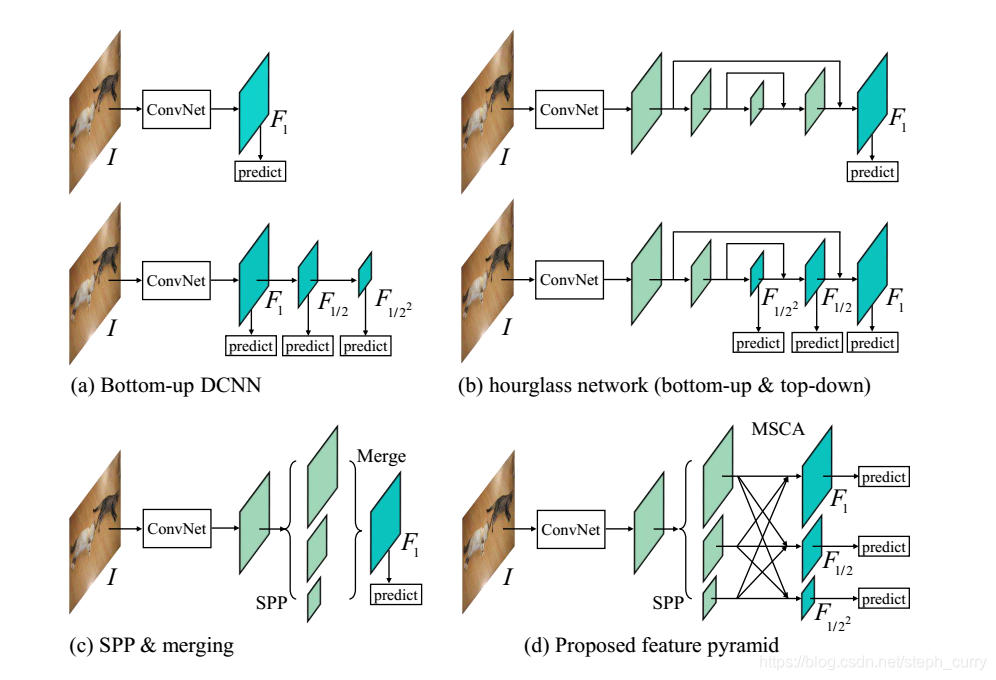

This figure compares how different methods use features: the bottom of (a) is the SSD approach, and (d) is the method proposed in the paper.

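Details:

Let the feature extracted from the input image by the base network have size D×W×H. SPP then produces features of different sizes with the same number of channels: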

![]()

where the n-th feature has D channels and resolution ![]().

Bottleneck layers are then applied to further extract features and reduce the number of channels, producing:

![]()

Each of these has D/(N-1) channels; the resolution is unchanged.
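The shapes described so far can be tracked with a short sketch. Only the channel counts (D after SPP, D/(N-1) after the bottleneck) come from the text above; the rule that level n has resolution W/2^n × H/2^n is an assumption made here for illustration, and `pyramid_shapes` is a hypothetical helper name.

```python
def pyramid_shapes(D, W, H, N):
    """Return (spp_shapes, bottleneck_shapes) as lists of (channels, width, height)."""
    if N < 2:
        raise ValueError("need at least two pyramid levels")
    if D % (N - 1) != 0:
        raise ValueError("D must be divisible by N - 1")
    # Assumption: the resolution halves at every level.
    spp = [(D, W // 2 ** n, H // 2 ** n) for n in range(N)]
    # The bottleneck reduces channels to D / (N - 1) and keeps the resolution.
    bottleneck = [(D // (N - 1), w, h) for (_, w, h) in spp]
    return spp, bottleneck


spp, bottleneck = pyramid_shapes(D=256, W=64, H=64, N=3)
print(spp)         # [(256, 64, 64), (256, 32, 32), (256, 16, 16)]
print(bottleneck)  # [(128, 64, 64), (128, 32, 32), (128, 16, 16)]
```

With N = 3, each bottleneck output has 128 channels, so the N-1 = 2 features from the other levels together contribute D = 256 channels.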

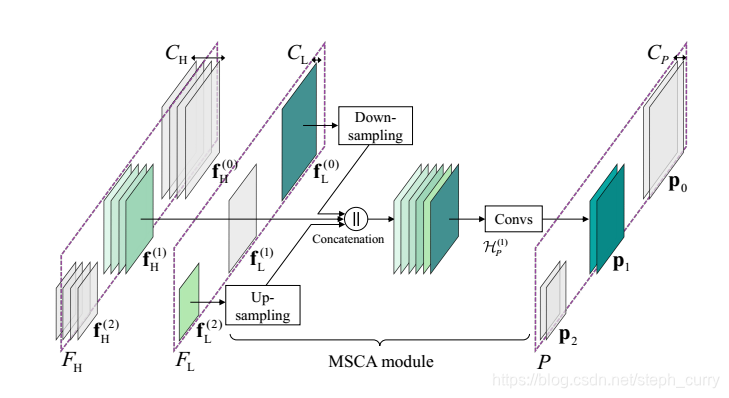

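The MSCA module then fuses the features produced in the two previous steps. Specifically, taking the generation of one pyramid level as an example: the features at larger resolutions are downsampled and those at smaller resolutions are upsampled to the resolution of the target level, and the feature already at that resolution is concatenated with them through a skip connection.

A further stage of convolution then produces that pyramid level.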
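The resize-and-concatenate step can be sketched in pure Python as follows. Several things here are assumptions rather than the paper's method: nearest-neighbour resizing is used for both upsampling and downsampling, the skip feature is taken to be the D-channel feature at the target level while the others have D/(N-1) channels, and the final convolution is omitted. The names `resize_nearest`, `fuse_level`, and `constant_feature` are hypothetical.

```python
def resize_nearest(channel, out_h, out_w):
    """Nearest-neighbour resize of one 2D channel (works for up- and downsampling)."""
    in_h, in_w = len(channel), len(channel[0])
    return [
        [channel[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def fuse_level(skip_feature, other_features):
    """Resize the other features to the skip feature's size and concat along channels.

    A feature is a list of channels; each channel is a 2D list of rows.
    """
    out_h, out_w = len(skip_feature[0]), len(skip_feature[0][0])
    fused = list(skip_feature)  # the skip connection contributes its channels first
    for feat in other_features:
        fused.extend(resize_nearest(c, out_h, out_w) for c in feat)
    return fused


def constant_feature(channels, size, value):
    """Build a toy feature of shape (channels, size, size) filled with one value."""
    return [[[value] * size for _ in range(size)] for _ in range(channels)]


# Toy setting with D = 4 and N = 3, so the other levels have 4 / (3 - 1) = 2 channels.
skip = constant_feature(4, 2, 0.0)     # target level, 2x2
larger = constant_feature(2, 4, 1.0)   # 4x4, will be downsampled to 2x2
smaller = constant_feature(2, 1, 2.0)  # 1x1, will be upsampled to 2x2
fused = fuse_level(skip, [larger, smaller])
print(len(fused), len(fused[0]), len(fused[0][0]))  # 8 2 2
```

Under these assumptions the concatenated feature has 2D channels: D from the skip connection plus (N-1) × D/(N-1) from the resized features.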

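Summary:

The paper proposes a new way to build a feature pyramid: combined with SPP, the pyramid levels are generated in parallel.

The CVPR19 deep high-resolution paper on human pose estimation probably drew on this work in adopting a parallel architecture.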
