from sklearn import datasets

from sklearn import cross_validation

from sklearn.naive_bayes import GaussianNB

from sklearn.svm import SVC

from sklearn.ensemble import RandomForestClassifier

from sklearn import metrics

# Datasets

dataset = datasets.make_classification(n_samples=1000, n_features=10)

# Inspect the data structures

print("dataset.data: \n", dataset[0])

print("dataset.target: \n", dataset[1])

# Cross-validation

kf = cross_validation.KFold(1000, n_folds=10, shuffle=True)

for train_index, test_index in kf:

X_train, y_train = dataset[0][train_index], dataset[1][train_index]

X_test, y_test = dataset[0][test_index], dataset[1][test_index]

# Inspect the data structures

print("X_train: \n", X_train)

print("y_train: \n", y_train)

print("X_test: \n", X_test)

print("y_test: \n", y_test)

# Navie Bayes

GaussianNB_clf = GaussianNB()

GaussianNB_clf.fit(X_train, y_train)

GaussianNB_pred = GaussianNB_clf.predict(X_test)

# Inspect the data structures

print("GaussianNB_pred: \n", GaussianNB_pred)

print("y_test: \n", y_test)

# SVM

SVC_clf = SVC(C=1e-01, kernel='rbf', gamma=0.1)

SVC_clf.fit(X_train, y_train)

SVC_pred = SVC_clf.predict(X_test)

# Inspect the data structures

print("SVC_pred: \n", SVC_pred)

print("y_test: \n", y_test)

# Random Forest

Random_Forest_clf = RandomForestClassifier(n_estimators=6)

Random_Forest_clf.fit(X_train, y_train)

Random_Forest_pred = Random_Forest_clf.predict(X_test)

# Inspect the data structures

print("Random_Forest_pred: \n", Random_Forest_pred)

print("y_test: \n", y_test)

# Performance evaluation

print()

GaussianNB_acc = metrics.accuracy_score(y_test, GaussianNB_pred)

print(" GaussianNB_acc: ", GaussianNB_acc)

GaussianNB_f1 = metrics.f1_score(y_test, GaussianNB_pred)

print(" GaussianNB_f1: ", GaussianNB_f1)

GaussianNB_auc = metrics.roc_auc_score(y_test, GaussianNB_pred)

print(" GaussianNB_auc: ", GaussianNB_auc)

print()

SVC_acc = metrics.accuracy_score(y_test, SVC_pred)

print(" SVC_acc: ", SVC_acc)

SVC_f1 = metrics.f1_score(y_test, SVC_pred)

print(" SVC_f1: ", SVC_f1)

SVC_auc = metrics.roc_auc_score(y_test, SVC_pred)

print(" SVC_auc: ",SVC_auc)

print()

Random_Forest_acc = metrics.accuracy_score(y_test, Random_Forest_pred)

print(" Random_Forest_acc: ", Random_Forest_acc)

Random_Forest_f1 = metrics.f1_score(y_test, Random_Forest_pred)

print(" Random_Forest_f1: ", Random_Forest_f1)

Random_Forest_auc = metrics.roc_auc_score(y_test, Random_Forest_pred)

print(" Random_Forest_auc: ", Random_Forest_auc)运行结果:

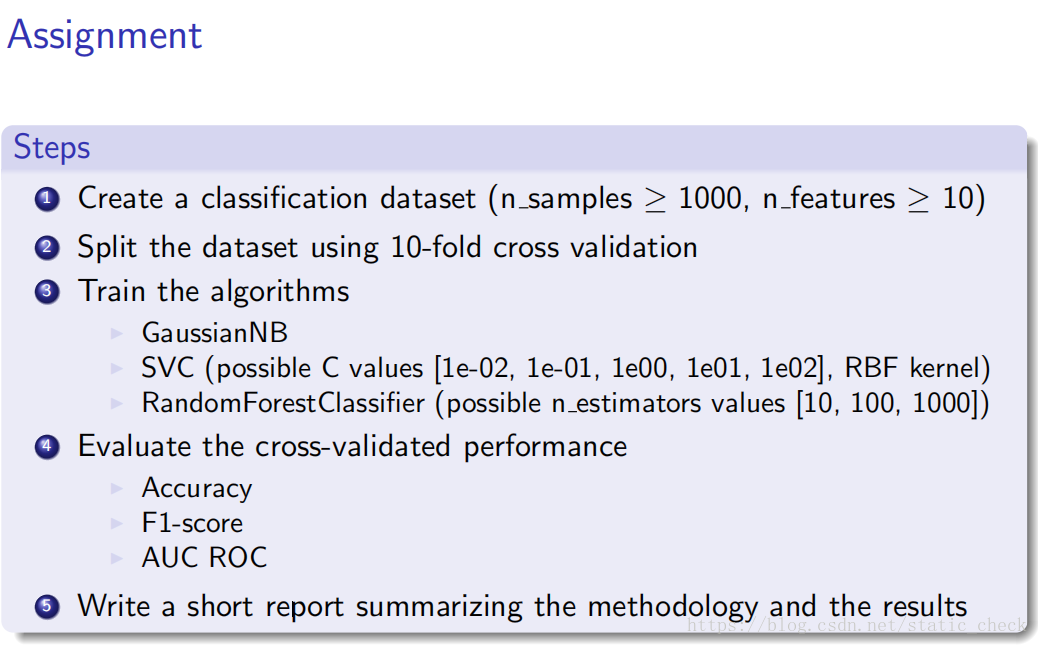

步骤如下:

1、 生成数据集

dataset = datasets.make_classification(n_samples=1000, n_features=10);

2、 将数据集划分为训练集和测试集;

kf = cross_validation.KFold(1000, n_folds=10, shuffle=True) for train_index, test_index in kf: X_train, y_train = dataset[0][train_index], dataset[1][train_index] X_test, y_test = dataset[0][test_index], dataset[1][test_index]

对于Naive Bayes( 天真的港湾 朴素贝叶斯),SVM, Random Forest三种算法,接下来的套路都是相同的,不妨以朴素贝叶斯为例。

3、设定训练方式;

GaussianNB_clf = GaussianNB()

4、用训练集训练;

GaussianNB_clf.fit(X_train, y_train)

5、生成预测集;

GaussianNB_pred = GaussianNB_clf.predict(X_test)

6、比较预测集和测试集,给出正确性评估,有三种评估方式。

GaussianNB_acc = metrics.accuracy_score(y_test, GaussianNB_pred) print(" GaussianNB_acc: ", GaussianNB_acc) GaussianNB_f1 = metrics.f1_score(y_test, GaussianNB_pred) print(" GaussianNB_f1: ", GaussianNB_f1) GaussianNB_auc = metrics.roc_auc_score(y_test, GaussianNB_pred) print(" GaussianNB_auc: ", GaussianNB_auc)

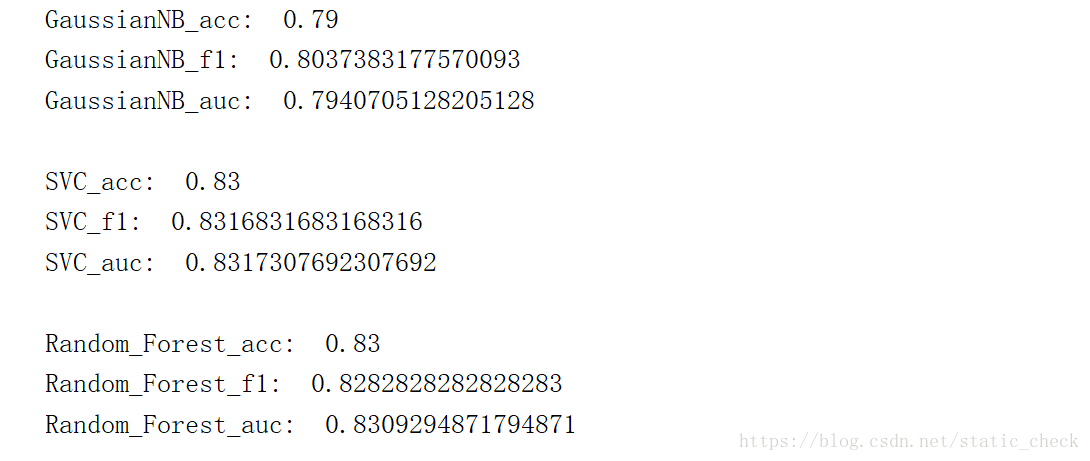

得分评估:

跑了几次程序,结果都不太一样,三种算法孰优孰劣很难讲,既然他们能被作为库函数,肯定有自己的独特优点。

890

890

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?