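After training Faster R-CNN I wanted to compute its mAP, and it took me quite a while to get it working, so I'm writing the process down here so I don't forget it next time.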

First I describe how to run the computation and the errors you may run into, then I walk through the code in detail.

Computation flow

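First, the flow of the mAP computation in Faster R-CNN. The main steps are:

1. Run test_net.py under /tools.

2. test_net.py calls the test_net function in /lib/fast_rcnn/test.py to run detection.

3. When detection finishes, test_net calls the evaluate_detections function in /lib/datasets/pascal_voc.py to compute the mAP.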

The test command is as follows (--net is the trained model, --imdb is the dataset name):

```shell
./tools/test_net.py --gpu 0 \
    --def PATH/TO/test.prototxt \
    --net PATH/TO/vgg16_faster_rcnn_iter_40000.caffemodel \
    --imdb voc_2007_test
```

Error 1

```
'Selective search data not found at: {}'.format(filename)
AssertionError: Selective search data not found at: /home/xuwh/Detect-model/py-faster-rcnn/data/xiaoding-data/selective_search_data/voc_2007_test.mat
```

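This happens because TEST.HAS_RPN is False by default: test_net.py then calls imdb.set_proposal_method(cfg.TEST.PROPOSAL_METHOD) and looks for precomputed selective-search proposals in a .mat file. Faster R-CNN generates its own proposals with the RPN, so open the config file (lib/fast_rcnn/config.py) and change __C.TEST.HAS_RPN = False to True.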

Error 2

```
  File "/home/xuwh/Detect-model/py-faster-rcnn/tools/../lib/datasets/pascal_voc.py", line 250, in _write_voc_results_file
    with open(filename, 'wt') as f:
IOError: [Errno 2] No such file or directory: '/home/xuwh/Detect-model/py-faster-rcnn/data/xiaoding-data/results/VOC2007/Main/comp4_d721c71f-dcb0-46ad-a93d-d7e6738d6cbf_det_test_type_none.txt'
```

Following the error message, open pascal_voc.py and change filename in the function below as shown; the reason for the change is explained later.

```python
def _get_voc_results_file_template(self):
    # VOCdevkit/results/VOC2007/Main/<comp_id>_det_test_aeroplane.txt
    # filename = self._get_comp_id() + '_det_' + self._image_set + '_{:s}.txt'
    filename = self._image_set + '_{:s}'
    path = os.path.join(
        self._devkit_path,
        'results',
        'VOC' + self._year,
        'Main',
        filename)
    return path
```

Then manually create the directory that the error message reports as missing (here /home/xuwh/Detect-model/py-faster-rcnn/data/xiaoding-data/results/VOC2007/Main).
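Instead of creating the directory by hand, it can also be created right before the results files are opened. The sketch below assumes only the directory layout visible in the error message, devkit_path/results/VOC<year>/Main; the helper name ensure_results_dir is hypothetical and is not part of py-faster-rcnn.

```python
import os


def ensure_results_dir(devkit_path, year):
    """Create <devkit_path>/results/VOC<year>/Main if it does not exist yet.

    Hypothetical helper: it mirrors the directory part of
    _get_voc_results_file_template() so that open(filename, 'wt') can succeed.
    """
    results_dir = os.path.join(devkit_path, 'results', 'VOC' + year, 'Main')
    if not os.path.isdir(results_dir):
        os.makedirs(results_dir)  # also creates any missing parent directories
    return results_dir
```

Calling it, for example, at the top of _write_voc_results_file with self._devkit_path and self._year would make the manual mkdir unnecessary; that placement is my suggestion, not something the original code does.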

Then run the command again and it works. My results are shown below.

Code walkthrough

The entry point is test_net.py under /tools. Part of its code is shown below; it is mostly initialization.

```python
if __name__ == '__main__':
    args = parse_args()  # parse command-line arguments; see parse_args in this file for their meaning

    print('Called with args:')
    print(args)

    if args.cfg_file is not None:
        cfg_from_file(args.cfg_file)
    if args.set_cfgs is not None:
        cfg_from_list(args.set_cfgs)

    cfg.GPU_ID = args.gpu_id

    print('Using config:')
    pprint.pprint(cfg)

    while not os.path.exists(args.caffemodel) and args.wait:  # wait until the model to test exists
        print('Waiting for {} to exist...'.format(args.caffemodel))
        time.sleep(10)

    caffe.set_mode_gpu()  # initialization
    caffe.set_device(args.gpu_id)
    net = caffe.Net(args.prototxt, args.caffemodel, caffe.TEST)
    net.name = os.path.splitext(os.path.basename(args.caffemodel))[0]

    imdb = get_imdb(args.imdb_name)
    imdb.competition_mode(args.comp_mode)
    if not cfg.TEST.HAS_RPN:
        imdb.set_proposal_method(cfg.TEST.PROPOSAL_METHOD)

    # run detection with test_net from /lib/fast_rcnn/test.py
    test_net(net, imdb, max_per_image=args.max_per_image, vis=args.vis)
```

From here, execution moves into /lib/fast_rcnn/test.py.
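The attributes read above (args.gpu_id, args.prototxt, args.caffemodel, args.imdb_name) are filled from the flags in the test command. A minimal argparse sketch of that mapping, covering only the four flags the command uses; the function name parse_test_args and the default values are my assumptions, and the real parse_args in test_net.py defines further options (for example the ones behind args.cfg_file, args.set_cfgs and args.wait).

```python
import argparse


def parse_test_args(argv=None):
    """Hypothetical, reduced version of parse_args: flag name -> args attribute."""
    parser = argparse.ArgumentParser(description='Test a Fast R-CNN network')
    parser.add_argument('--gpu', dest='gpu_id', default=0, type=int)         # -> args.gpu_id
    parser.add_argument('--def', dest='prototxt', default=None, type=str)    # -> args.prototxt
    parser.add_argument('--net', dest='caffemodel', default=None, type=str)  # -> args.caffemodel
    parser.add_argument('--imdb', dest='imdb_name', default='voc_2007_test',
                        type=str)                                             # -> args.imdb_name
    return parser.parse_args(argv)


args = parse_test_args(['--gpu', '0',
                        '--def', 'PATH/TO/test.prototxt',
                        '--net', 'PATH/TO/vgg16_faster_rcnn_iter_40000.caffemodel',
                        '--imdb', 'voc_2007_test'])
print(args.prototxt, args.caffemodel, args.imdb_name)
```

The dest names matter because Python code cannot use def as an attribute name, which is why --def ends up as args.prototxt.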

The relevant part of /lib/fast_rcnn/test.py:

```python
def test_net(net, imdb, max_per_image=100, thresh=0.05, vis=False):
    """Test a Fast R-CNN network on an image database."""
    num_images = len(imdb.image_index)  # number of test images
    # all detections are collected into:
    #    all_boxes[cls][image] = N x 5 array of detections in
    #    (x1, y1, x2, y2, score)
    all_boxes = [[[] for _ in xrange(num_images)]
                 for _ in xrange(imdb.num_classes)]

    output_dir = get_output_dir(imdb, net)

    # timers
    _t = {'im_detect': Timer(), 'misc': Timer(), 'total': Timer()}

    if not cfg.TEST.HAS_RPN:
        roidb = imdb.roidb

    _t['total'].tic()
    total_time_consume = 0
    for i in xrange(num_images):
        # filter out any ground truth boxes
        if cfg.TEST.HAS_RPN:
            box_proposals = None
        else:
            # The roidb may contain ground-truth rois (for example, if the roidb
            # comes from the training or val split). We only want to evaluate
            # detection on the *non*-ground-truth rois. We select those the rois
            # that have the gt_classes field set to 0, which means there's no
            # ground truth.
            box_proposals = roidb[i]['boxes'][roidb[i]['gt_classes'] == 0]

        im = cv2.imread(imdb.image_path_at(i))
        _t['im_detect'].tic()
        scores, boxes = im_detect(net, im, box_proposals)  # detect on this image and time it
        _t['im_detect'].toc()
        # diff is the time of this image only; summing average_time would add up running averages
        total_time_consume += _t['im_detect'].diff

        _t['misc'].tic()
        # skip j = 0, because it's the background class
        for j in xrange(1, imdb.num_classes):  # keep boxes whose score for class j exceeds thresh
            inds = np.where(scores[:, j] > thresh)[0]
            cls_scores = scores[inds, j]
            cls_boxes = boxes[inds, j*4:(j+1)*4]
            cls_dets = np.hstack((cls_boxes, cls_scores[:, np.newaxis])) \
                .astype(np.float32, copy=False)
            keep = nms(cls_dets, cfg.TEST.NMS)
            cls_dets = cls_dets[keep, :]
            if vis:
                vis_detections(im, imdb.classes[j], cls_dets)  # draw the boxes on the image
            all_boxes[j][i] = cls_dets

        # Limit to max_per_image detections *over all classes*
        if max_per_image > 0:
            image_scores = np.hstack([all_boxes[j][i][:, -1]
                                      for j in xrange(1, imdb.num_classes)])
            if len(image_scores) > max_per_image:
                image_thresh = np.sort(image_scores)[-max_per_image]
                for j in xrange(1, imdb.num_classes):
                    keep = np.where(all_boxes[j][i][:, -1] >= image_thresh)[0]
                    all_boxes[j][i] = all_boxes[j][i][keep, :]
        _t['misc'].toc()

        print 'im_detect: {:d}/{:d} {:.3f}s {:.3f}s' \
              .format(i + 1, num_images, _t['im_detect'].average_time,
                      _t['misc'].average_time)
    _t['total'].toc()
    print 'total detect time: {:.6f}s'.format(total_time_consume)

    det_file = os.path.join(output_dir, 'detections.pkl')
    with open(det_file, 'wb') as f:
        cPickle.dump(all_boxes, f, cPickle.HIGHEST_PROTOCOL)

    print 'Evaluating detections'
    imdb.evaluate_detections(all_boxes, output_dir)  # evaluate the detection results
```

Once detection is finished, test_net calls evaluate_detections in /lib/datasets/pascal_voc.py to evaluate the results.
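The per-class post-processing in the loop above (score threshold, then NMS with cfg.TEST.NMS, then a cap of max_per_image boxes over all classes) can be reproduced in plain NumPy for experimenting. This is a minimal sketch, not the repository's implementation: the nms called above goes through a compiled routine, and the names greedy_nms and cap_detections are hypothetical. The +1 in width and height follows the inclusive-pixel convention also used by the VOC evaluation code.

```python
import numpy as np


def greedy_nms(dets, thresh):
    """Greedy NMS on an (N, 5) array of [x1, y1, x2, y2, score].

    Returns the indices of the kept boxes, highest score first.
    """
    x1, y1, x2, y2, scores = dets[:, 0], dets[:, 1], dets[:, 2], dets[:, 3], dets[:, 4]
    areas = (x2 - x1 + 1) * (y2 - y1 + 1)
    order = scores.argsort()[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # intersection of the current best box with every remaining box
        xx1 = np.maximum(x1[i], x1[rest])
        yy1 = np.maximum(y1[i], y1[rest])
        xx2 = np.minimum(x2[i], x2[rest])
        yy2 = np.minimum(y2[i], y2[rest])
        w = np.maximum(0.0, xx2 - xx1 + 1)
        h = np.maximum(0.0, yy2 - yy1 + 1)
        inter = w * h
        iou = inter / (areas[i] + areas[rest] - inter)
        # keep only boxes that do not overlap the chosen box by more than thresh
        order = rest[iou <= thresh]
    return keep


def cap_detections(per_class_dets, max_per_image):
    """Keep the highest-scoring max_per_image boxes of one image over all classes.

    per_class_dets: list of (N_j, 5) arrays, one per foreground class.
    """
    scores = np.hstack([d[:, -1] for d in per_class_dets])
    if max_per_image <= 0 or len(scores) <= max_per_image:
        return per_class_dets
    image_thresh = np.sort(scores)[-max_per_image]
    return [d[d[:, -1] >= image_thresh] for d in per_class_dets]
```

As in the original loop, ties at image_thresh can leave slightly more than max_per_image boxes.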

def evaluate_detections(self, all_boxes, output_dir):

self._write_voc_results_file(all_boxes) #保存各类被检测的目标框的坐标,一个类别一个文件

self._do_python_eval(output_dir) #进行评估

if self.config['matlab_eval']:

self._do_matlab_eval(output_dir)

# if self.config['cleanup']: #注释部分的功能是计算完成后自动删除计算过程中生成的各类别目标框文件,如果不需要可以取消注释

# for cls in self._classes:

# if cls == '__background__':

# continue

# filename = self._get_voc_results_file_template().format(cls)

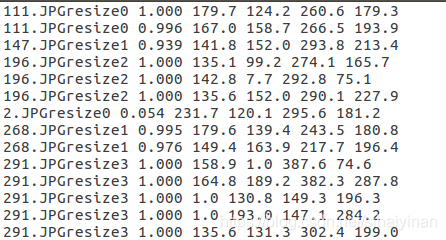

# os.remove(filename)其中,_write_voc_results_file(self, all_boxes)函数的功能是每一类创建一个文本文件,保存属于该类的目标框的信息,具体形式如下

I have six classes, so six files were generated. Each line in a file is one detection, with the columns: image name, confidence, xmin, ymin, xmax, ymax.
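One line of such a file can be produced and read back as sketched below. The formatting copies the format string used in _write_voc_results_file (0-based boxes shifted to the 1-based coordinates the VOCdevkit expects), and the parsing splits on spaces the way voc_eval reads these files. The function names and the image id '000001' are made-up examples.

```python
def format_det_line(image_id, det):
    """Format one detection [x1, y1, x2, y2, score] (0-based) as a results-file line."""
    x1, y1, x2, y2, score = det
    return '{:s} {:.3f} {:.1f} {:.1f} {:.1f} {:.1f}'.format(
        image_id, score, x1 + 1, y1 + 1, x2 + 1, y2 + 1)  # +1: 1-based pixel indices


def parse_det_line(line):
    """Split a results-file line into (image_id, score, [xmin, ymin, xmax, ymax])."""
    parts = line.strip().split(' ')
    return parts[0], float(parts[1]), [float(v) for v in parts[2:6]]


line = format_det_line('000001', [47.0, 239.0, 194.0, 370.0, 0.75])
print(line)
print(parse_det_line(line))
```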

The code of _write_voc_results_file and the helpers it calls:

```python
def _get_comp_id(self):
    comp_id = (self._comp_id + '_' + self._salt if self.config['use_salt']
               else self._comp_id)
    return comp_id

def _get_voc_results_file_template(self):
    # VOCdevkit/results/VOC2007/Main/<comp_id>_det_test_aeroplane.txt
    # filename = self._get_comp_id() + '_det_' + self._image_set + '_{:s}.txt'
    filename = self._image_set + '_{:s}'
    path = os.path.join(
        self._devkit_path,
        'results',
        'VOC' + self._year,
        'Main',
        filename)
    return path

def _write_voc_results_file(self, all_boxes):
    for cls_ind, cls in enumerate(self.classes):
        if cls == '__background__':
            continue
        print 'Writing {} VOC results file'.format(cls)
        filename = self._get_voc_results_file_template().format(cls)
        with open(filename, 'wt') as f:
            for im_ind, index in enumerate(self.image_index):
                dets = all_boxes[cls_ind][im_ind]
                # original: `if dets == []:`; comparing a NumPy array with [] is unreliable
                if len(dets) == 0:
                    continue
                # the VOCdevkit expects 1-based indices
                for k in xrange(dets.shape[0]):
                    f.write('{:s} {:.3f} {:.1f} {:.1f} {:.1f} {:.1f}\n'.
                            format(index, dets[k, -1],
                                   dets[k, 0] + 1, dets[k, 1] + 1,
                                   dets[k, 2] + 1, dets[k, 3] + 1))
```

As the code shows, each file name comes from filename = self._get_voc_results_file_template().format(cls). By default that name contains a random string: when config['use_salt'] is enabled, _get_comp_id() appends self._salt, a random UUID, so the name changes on every run (the comp4_d721c71f-... part of the error message). I therefore changed the template to a fixed name. Note that the IOError itself is raised because the directory results/VOC2007/Main under the devkit path does not exist; open() does not create missing directories, which is why the directory also had to be created by hand.
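The difference between the two naming schemes can be checked outside the repository with a small re-implementation of the template logic. This is a sketch with a hypothetical function name; 'comp4' is taken from the error message above, and 'VOCdevkit' is a placeholder devkit path.

```python
import os
import uuid


def results_template(devkit_path, year, image_set, comp_id=None, salt=None):
    """Return the per-class results path template; '{:s}' is later filled with a class name.

    comp_id given -> original scheme: <comp_id>[_<salt>]_det_<image_set>_<cls>.txt
    comp_id None  -> fixed scheme from the modified template: <image_set>_<cls>
    """
    if comp_id is None:
        filename = image_set + '_{:s}'
    else:
        prefix = comp_id + '_' + salt if salt else comp_id
        filename = prefix + '_det_' + image_set + '_{:s}.txt'
    return os.path.join(devkit_path, 'results', 'VOC' + year, 'Main', filename)


# Two salted templates differ from run to run; the fixed one never changes.
salted_a = results_template('VOCdevkit', '2007', 'test', 'comp4', str(uuid.uuid4()))
salted_b = results_template('VOCdevkit', '2007', 'test', 'comp4', str(uuid.uuid4()))
fixed = results_template('VOCdevkit', '2007', 'test')
print(salted_a.format('car'))
print(fixed.format('car'))
```

Either way the files land in the same results/VOC<year>/Main directory, so the fixed name only makes the files easier to find; it does not by itself remove the need for that directory to exist.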

Once this step finishes, the files shown in the figure above are generated, and _do_python_eval(output_dir) then computes the mAP. Its code:

```python
def _do_python_eval(self, output_dir='output'):
    annopath = os.path.join(  # path template of the annotation XML files
        self._devkit_path,
        'VOC' + self._year,
        'Annotations',
        '{:s}.xml')
    imagesetfile = os.path.join(  # text file listing the test image IDs
        self._devkit_path,
        'VOC' + self._year,
        'ImageSets',
        'Main',
        self._image_set + '.txt')
    cachedir = os.path.join(self._devkit_path, 'annotations_cache')
    aps = []    # AP of each class
    recs = []   # final recall of each class
    precs = []  # final precision of each class
    # The PASCAL VOC metric changed in 2010: choose the AP formula by dataset year
    use_07_metric = True if int(self._year) < 2010 else False
    print 'VOC07 metric? ' + ('Yes' if use_07_metric else 'No')
    if not os.path.isdir(output_dir):
        os.mkdir(output_dir)
    for i, cls in enumerate(self._classes):
        if cls == '__background__':
            continue
        filename = self._get_voc_results_file_template().format(cls)
        rec, prec, ap = voc_eval(  # compute this class's AP with voc_eval
            filename, annopath, imagesetfile, cls, cachedir, ovthresh=0.5,
            use_07_metric=use_07_metric)
        aps += [ap]
        recs += [rec[-1]]
        precs += [prec[-1]]
        print('AP for {} = {:.4f}'.format(cls, ap))
        print('recall for {} = {:.4f}'.format(cls, rec[-1]))
        print('precision for {} = {:.4f}'.format(cls, prec[-1]))
        with open(os.path.join(output_dir, cls + '_pr.pkl'), 'w') as f:
            cPickle.dump({'rec': rec, 'prec': prec, 'ap': ap}, f)
    print('Mean AP = {:.4f}'.format(np.mean(aps)))
    print('~~~~~~~~')
    print('Results:')
    for ap in aps:
        print('{:.3f}'.format(ap))
    print('{:.3f}'.format(np.mean(aps)))
    print('~~~~~~~~')
    print('')
    print('--------------------------------------------------------------')
    print('Results computed with the **unofficial** Python eval code.')
    print('Results should be very close to the official MATLAB eval code.')
    print('Recompute with `./tools/reval.py --matlab ...` for your paper.')
    print('-- Thanks, The Management')
    print('--------------------------------------------------------------')
```
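voc_eval itself (lib/datasets/voc_eval.py) is not shown above. To make the printed numbers less of a black box, here is a simplified sketch of what it computes for one class: detections sorted by confidence are matched greedily to ground truth with IoU > ovthresh, cumulative TP/FP counts give recall and precision, and AP is either the VOC2007 11-point average or the area under the monotone precision envelope (VOC2010 and later). The function names are illustrative, and unlike voc_eval this version ignores the "difficult" flag, so treat it as an approximation rather than a replacement.

```python
import numpy as np


def iou(box, gts):
    """IoU of one box [x1, y1, x2, y2] with each row of gts (M x 4), inclusive pixels."""
    ixmin = np.maximum(gts[:, 0], box[0])
    iymin = np.maximum(gts[:, 1], box[1])
    ixmax = np.minimum(gts[:, 2], box[2])
    iymax = np.minimum(gts[:, 3], box[3])
    iw = np.maximum(ixmax - ixmin + 1.0, 0.0)
    ih = np.maximum(iymax - iymin + 1.0, 0.0)
    inters = iw * ih
    area_box = (box[2] - box[0] + 1.0) * (box[3] - box[1] + 1.0)
    area_gts = (gts[:, 2] - gts[:, 0] + 1.0) * (gts[:, 3] - gts[:, 1] + 1.0)
    return inters / (area_box + area_gts - inters)


def precision_recall(dets, gts, ovthresh=0.5):
    """Cumulative recall/precision for one class.

    dets: list of (image_id, score, [x1, y1, x2, y2]) read from a results file.
    gts:  dict image_id -> (M, 4) array of ground-truth boxes of this class.
    """
    npos = sum(len(g) for g in gts.values())
    used = {k: np.zeros(len(v), dtype=bool) for k, v in gts.items()}
    dets = sorted(dets, key=lambda d: -d[1])  # highest confidence first
    tp = np.zeros(len(dets))
    fp = np.zeros(len(dets))
    for d, (image_id, _, box) in enumerate(dets):
        g = gts.get(image_id)
        if g is not None and len(g) > 0:
            ov = iou(np.asarray(box, dtype=float), g)
            j = int(np.argmax(ov))
            if ov[j] > ovthresh and not used[image_id][j]:
                tp[d] = 1.0  # first detection matching this ground-truth box
                used[image_id][j] = True
                continue
        fp[d] = 1.0  # no match above ovthresh, or a duplicate detection
    tp = np.cumsum(tp)
    fp = np.cumsum(fp)
    rec = tp / float(npos)
    prec = tp / np.maximum(tp + fp, np.finfo(np.float64).eps)
    return rec, prec


def voc_ap(rec, prec, use_07_metric=False):
    """AP from recall/precision: VOC2007 11-point, or area under the precision envelope."""
    if use_07_metric:
        ap = 0.0
        for t in np.arange(0.0, 1.1, 0.1):  # recall thresholds 0, 0.1, ..., 1.0
            p = np.max(prec[rec >= t]) if np.sum(rec >= t) > 0 else 0.0
            ap += p / 11.0
        return ap
    mrec = np.concatenate(([0.0], rec, [1.0]))
    mpre = np.concatenate(([0.0], prec, [0.0]))
    for i in range(mpre.size - 1, 0, -1):  # make precision non-increasing
        mpre[i - 1] = max(mpre[i - 1], mpre[i])
    i = np.where(mrec[1:] != mrec[:-1])[0]  # points where recall changes
    return np.sum((mrec[i + 1] - mrec[i]) * mpre[i + 1])
```

In this picture, the rec[-1] and prec[-1] that _do_python_eval prints are the last entries of the cumulative arrays, i.e. recall and precision over all detections that survived the 0.05 score threshold in test_net.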

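This post records the detailed steps for computing the mAP of a Faster R-CNN model and how to handle common errors, covering the config change, the computation flow, and a walkthrough of the code, including the key steps of running test_net.py and the evaluation function evaluate_detections.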
