CNN: Convolutional Neural Networks

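Why CNNs are commonly used for image processing:

- Locality: a meaningful pattern usually occupies only a small region of the image

- Translation invariance: the same pattern can appear anywhere in the image

- Scalability: downsampling an image does not change what it depicts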

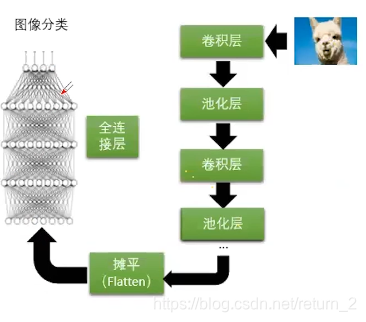

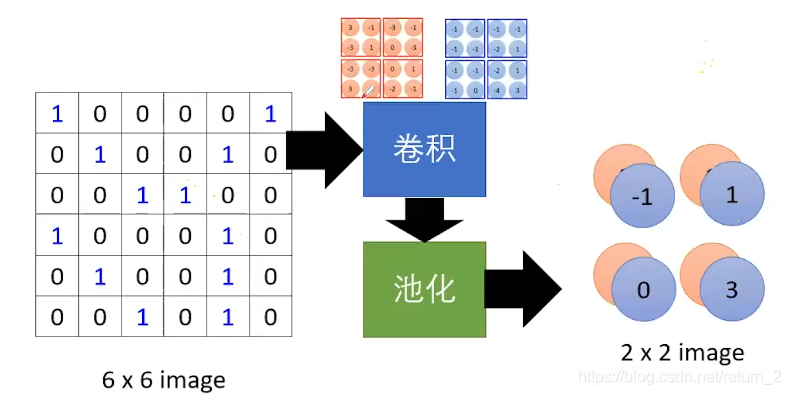

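A simplified diagram of a CNN

Locality and translation invariance are reflected in the convolutional layers.

Scalability is reflected in the pooling layers.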
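To make the first two properties concrete, here is a minimal sketch in plain Python (no PyTorch) of the sliding-window sum that a convolutional layer computes. The helper names `conv2d_valid` and `image_with_bar`, and the kernel weights, are made up for illustration. Each output value depends only on a small patch (locality), and moving the pattern in the input moves the response by the same amount.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def image_with_bar(col, size=6):
    """A size x size image of zeros with a vertical bar of ones in column `col`."""
    return [[1 if j == col else 0 for j in range(size)] for _ in range(size)]


# Hypothetical 2x2 kernel: positive when the bar is in the right column of the
# window, negative when it is in the left column.
edge_kernel = [[-1, 1],
               [-1, 1]]

response_a = conv2d_valid(image_with_bar(2), edge_kernel)
response_b = conv2d_valid(image_with_bar(3), edge_kernel)  # bar moved right by 1

print(response_a[0])  # [0, 2, -2, 0, 0]
print(response_b[0])  # [0, 0, 2, -2, 0]
```

The same kernel weights are reused at every position, which is why one small kernel can detect the bar wherever it appears.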

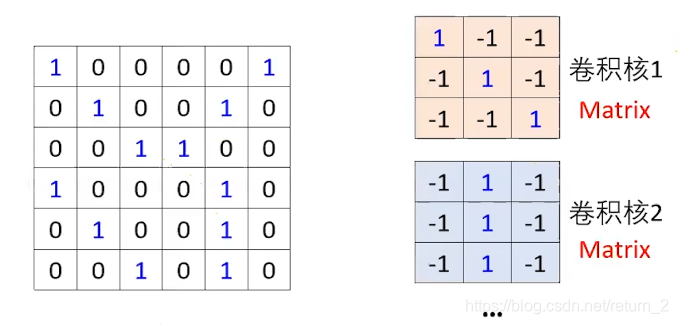

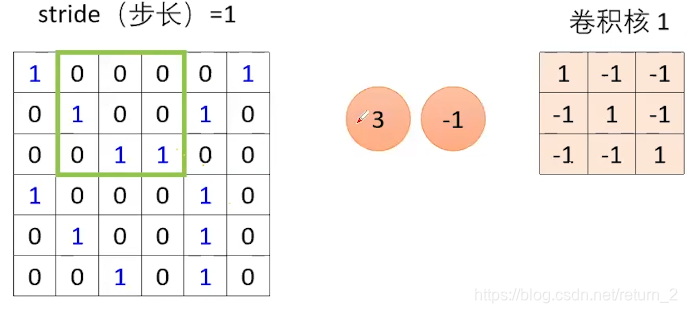

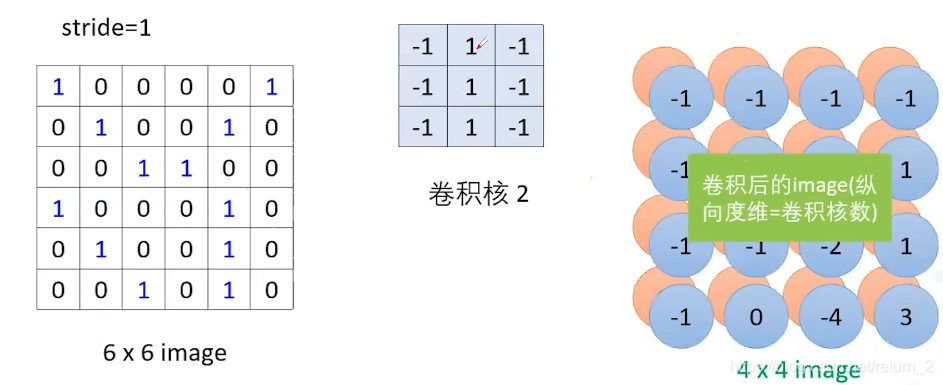

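CNN: convolutional layer (parameters, feature extraction at different dimensions)

Each kernel has as many channels as the input data, and the number of kernels equals the number of output channels.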
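The channel rule can be checked with a small plain-Python sketch. The function name `conv2d_multichannel` and the toy sizes are assumptions for illustration, and the bias term that a real layer normally adds is left out. Each kernel spans all input channels and produces exactly one output channel.

```python
def conv2d_multichannel(x, kernels):
    """x: [C][H][W]; kernels: [K][C][kh][kw] -> output: [K][H-kh+1][W-kw+1]."""
    in_channels = len(x)
    height, width = len(x[0]), len(x[0][0])
    output = []
    for kernel in kernels:
        # Each kernel must carry one 2-D slice per input channel.
        assert len(kernel) == in_channels, "kernel channels must match input channels"
        kh, kw = len(kernel[0]), len(kernel[0][0])
        feature_map = [
            [
                sum(x[c][i + di][j + dj] * kernel[c][di][dj]
                    for c in range(in_channels)
                    for di in range(kh)
                    for dj in range(kw))
                for j in range(width - kw + 1)
            ]
            for i in range(height - kh + 1)
        ]
        output.append(feature_map)  # one output channel per kernel
    return output


# Toy sizes (assumed): 3 input channels, a 4x4 image of ones, 2 kernels of 3x3 ones.
x = [[[1] * 4 for _ in range(4)] for _ in range(3)]
kernels = [[[[1] * 3 for _ in range(3)] for _ in range(3)] for _ in range(2)]

y = conv2d_multichannel(x, kernels)
shape = (len(y), len(y[0]), len(y[0][0]))
print(shape)  # (2, 2, 2): 2 kernels -> 2 output channels
```

In PyTorch terms this corresponds to the weight of `nn.Conv2d(in_channels, out_channels, k)` having shape `(out_channels, in_channels, k, k)`.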

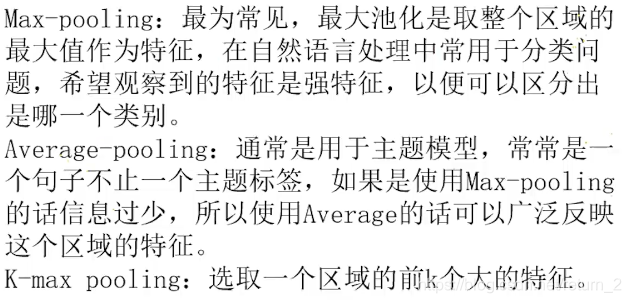

CNN: pooling layer

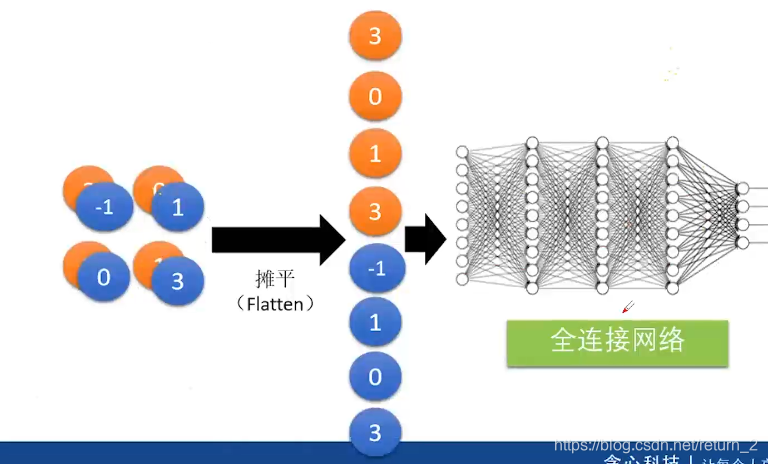
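As a rough illustration of what a 2x2 max-pooling layer does, the sketch below keeps the largest value in each non-overlapping window, halving both height and width. The function name `max_pool2d` and the sample values are invented for this example; it is plain Python, not the PyTorch layer itself.

```python
def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    out_h = len(feature_map) // size
    out_w = len(feature_map[0]) // size
    return [
        [
            max(feature_map[i * size + di][j * size + dj]
                for di in range(size) for dj in range(size))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]
pooled = max_pool2d(feature_map)
print(pooled)  # [[4, 2], [2, 8]]
```

Pooling has no learnable parameters; it only shrinks the feature map while keeping the strongest response in each window.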

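Flatten

- In text-CNN, the kernel width equals the dimension of the word vectors

- Convolving with such a kernel takes into account not only word meaning but also word order and context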
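A minimal sketch of the text-CNN idea, assuming toy 3-dimensional embeddings and a hand-picked kernel (all values and the name `text_conv` are hypothetical). Because the kernel is as wide as the embedding, it can only slide along the word axis, and each output covers a window of consecutive words.

```python
def text_conv(sentence, kernel):
    """sentence: [seq_len][embed_dim]; kernel: [window][embed_dim] -> [seq_len - window + 1]."""
    window, embed_dim = len(kernel), len(kernel[0])
    assert all(len(word) == embed_dim for word in sentence), \
        "kernel width must equal the embedding dimension"
    return [
        sum(sentence[t + k][d] * kernel[k][d]
            for k in range(window) for d in range(embed_dim))
        for t in range(len(sentence) - window + 1)
    ]


# Hypothetical embeddings, chosen only to keep the arithmetic readable.
embeddings = {
    "not":  [1, 0, 0],
    "good": [0, 0, 1],
    "film": [1, 1, 0],
}

# Window of 2 words: row 0 matches "not", row 1 matches "good".
kernel = [[1, 0, 0],
          [0, 0, 1]]

features_a = text_conv([embeddings[w] for w in ["not", "good", "film"]], kernel)
features_b = text_conv([embeddings[w] for w in ["good", "not", "film"]], kernel)

print(features_a)  # [2, 0]
print(features_b)  # [0, 1]
```

The two sentences contain the same words, yet the features differ, which is the sense in which the convolution is sensitive to word order and local context.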

Implementing MNIST classification with a CNN

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import optim
from torch.utils.data import DataLoader
from torchvision import transforms
from torchvision import datasets
from matplotlib import pyplot as plt

# Tensor layout: (batch, channel, height, width)

# 1. Prepare the dataset
# Convert images to tensors, then normalize with the MNIST mean and std
transform = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.1307,), (0.3081,)),
])

# Load the data (download=True fetches MNIST if it is not already under root)
train_data = datasets.MNIST(root='./data/mnist/', train=True, download=True, transform=transform)
test_data = datasets.MNIST(root='./data/mnist/', train=False, download=True, transform=transform)

# Mini-batches
train_loader = DataLoader(dataset=train_data, shuffle=True, batch_size=64, num_workers=2)
test_loader = DataLoader(dataset=test_data, shuffle=False, batch_size=64, num_workers=2)

# 2. Design the model as a class
class MNISTModel(nn.Module):
    def __init__(self):
        super(MNISTModel, self).__init__()
        # Shape comments give (channels, height, width) after conv + pooling
        self.conv1 = nn.Conv2d(1, 10, 5)   # 1x28x28 -> 10x12x12
        self.conv2 = nn.Conv2d(10, 20, 5)  # -> 20x4x4
        self.conv3 = nn.Conv2d(20, 40, 1)  # -> 40x2x2
        self.pool = nn.MaxPool2d(2)
        self.linear = nn.Linear(160, 10)   # 40 * 2 * 2 = 160 flattened features
        self.relu = nn.ReLU()

    def forward(self, x):
        batch_size = x.size(0)
        x = self.relu(self.pool(self.conv1(x)))
        x = self.relu(self.pool(self.conv2(x)))
        x = self.relu(self.pool(self.conv3(x)))
        x = x.view(batch_size, -1)  # flatten to (batch, 160)
        x = self.linear(x)
        return x

model = MNISTModel()

# Use the GPU when available, otherwise fall back to the CPU
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model.to(device)

# 3. Loss function and optimizer
loss_f = nn.CrossEntropyLoss()
opti = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)

# 4. Training cycle
def train(epoch):
    loss_num = 0.0
    for index, data in enumerate(train_loader):
        inputs, target = data
        inputs, target = inputs.to(device), target.to(device)
        outputs = model(inputs)
        loss = loss_f(outputs, target)
        opti.zero_grad()
        loss.backward()
        opti.step()

        loss_num += loss.item()
        # Report the average loss over the last 100 mini-batches
        if index % 100 == 99:
            print(f'epoch:{epoch+1},index:{index+1},loss:{loss_num / 100}')
            loss_num = 0.0

def test():
    correct = 0
    total = 0
    with torch.no_grad():
        for data in test_loader:
            images, labels = data
            images, labels = images.to(device), labels.to(device)
            outputs = model(images)
            # torch.max returns (max values, indices); the index is the predicted class
            _, predicted = torch.max(outputs, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print(f'accuracy on test set: {100 * correct / total} % ')
    return correct / total

if __name__ == '__main__':
    epoch_list = []
    acc_list = []
    for epoch in range(10):
        train(epoch)
        acc = test()
        epoch_list.append(epoch)
        acc_list.append(acc)

    plt.plot(epoch_list, acc_list)
    plt.ylabel('accuracy')
    plt.xlabel('epoch')
    plt.show()
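The shape comments beside the three convolution layers, and the 160 input features of the linear layer, can be checked by hand. The sketch below assumes 28x28 MNIST inputs, stride 1 and no padding for the convolutions (the `nn.Conv2d` defaults), and 2x2 pooling that halves each side; `conv_out` and `pool_out` are helper names introduced here.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2):
    """Output side length of non-overlapping pooling along one spatial dimension."""
    return size // window


size = 28  # MNIST images are 28x28 with a single channel
trace = []
# (out_channels, kernel_size) for conv1, conv2, conv3 in MNISTModel
for out_channels, kernel in [(10, 5), (20, 5), (40, 1)]:
    size = pool_out(conv_out(size, kernel))
    trace.append((out_channels, size, size))

flattened = trace[-1][0] * trace[-1][1] * trace[-1][2]
print(trace)      # [(10, 12, 12), (20, 4, 4), (40, 2, 2)]
print(flattened)  # 160
```

If the kernel sizes or the number of pooling steps change, rerunning this arithmetic gives the new value needed for the first argument of `nn.Linear`.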

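This post introduces how to use a convolutional neural network (CNN) to process image data, focusing on the MNIST handwritten-digit recognition task. It builds the CNN model with PyTorch, covering convolutional, pooling, and fully connected layers, and provides code for training and testing the model. The resulting model reaches a fairly high accuracy on the test set.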
