Algorithm Overview

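Low-dimensional embedding is a technique for reducing the dimensionality of high-dimensional data. It aims to reduce the complexity of the data while preserving its essential characteristics, and it is commonly used for visualization, feature learning, and data compression. The goal is to map high-dimensional data into a low-dimensional space so that the data can be better understood and visualized.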

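In $k$-nearest-neighbor (kNN) learning, samples become increasingly sparse as the data dimensionality grows, and kNN performance degrades. The reason is that in high-dimensional space, distances between samples become hard to compute and less meaningful as a measure of similarity, so the neighbors kNN finds are less reliable.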

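Low-dimensional embedding maps high-dimensional data into a lower-dimensional subspace. If the new low-dimensional representation preserves the structure and relationships of the data, kNN can be run in the low-dimensional space instead of the original one, which can improve its performance.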

A schematic of low-dimensional embedding is shown below:

Suppose the distance matrix of $m$ samples in the original $d$-dimensional space is $D\in \mathbb{R}^{m\times m}$, whose entry $dist_{ij}$ in row $i$ and column $j$ is the distance from sample $x_i$ to sample $x_j$. Our goal is to obtain a representation of the samples in a $d^\prime$-dimensional space, $Z\in \mathbb{R}^{d^\prime \times m}$ with $d^\prime \leq d$, such that the Euclidean distance between any two samples in the $d^\prime$-dimensional space equals their distance in the original space, i.e. $||z_i-z_j||=dist_{ij}$.
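A small numeric sketch of this goal, under illustrative assumptions: 5 random points in a plane stand in for $Z$ with $d^\prime=2$, and they are lifted into $d=3$ by appending a zero coordinate and rotating about the x-axis. Because a rotation preserves lengths, the 3-D distance matrix equals the 2-D one, so an exact lower-dimensional representation exists here. The helper `pairwise_distances`, the seed, and the angle are hypothetical; note that the code stores one sample per row, while the text stores samples as columns of $Z$.

```python
import numpy as np

def pairwise_distances(X):
    # X has one sample per row; returns the (m, m) Euclidean distance matrix
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

rng = np.random.default_rng(0)
Z2 = rng.normal(size=(5, 2))                      # hypothetical 2-D representation (d' = 2)

# Lift into 3-D: append a zero coordinate, then rotate about the x-axis
theta = 0.7
R = np.array([[1.0, 0.0, 0.0],
              [0.0, np.cos(theta), -np.sin(theta)],
              [0.0, np.sin(theta), np.cos(theta)]])
X3 = np.hstack([Z2, np.zeros((5, 1))]) @ R.T      # original-space data (d = 3)

D_original = pairwise_distances(X3)
D_embedded = pairwise_distances(Z2)
print(np.allclose(D_original, D_embedded))        # the 2-D points reproduce every 3-D distance
```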

Let $B=Z^TZ\in \mathbb{R}^{m\times m}$ be the inner-product matrix of the reduced samples, with entries $b_{ij}=z_i^Tz_j$. Then:

$$
\begin{aligned} dist_{ij}^2&=||z_i||^2+||z_j||^2-2z_i^Tz_j\\ &=b_{ii}+b_{jj}-2b_{ij} \end{aligned} \tag{3}
$$
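Eq. (3) can be checked numerically. The sketch below draws a random $Z$ (one sample per column, as in the text; the sizes and seed are arbitrary), forms $B=Z^TZ$, and compares squared distances computed from the coordinates with $b_{ii}+b_{jj}-2b_{ij}$. No centering is needed for this identity.

```python
import numpy as np

rng = np.random.default_rng(1)
d_prime, m = 3, 6
Z = rng.normal(size=(d_prime, m))   # columns are the embedded samples z_i
B = Z.T @ Z                          # inner-product matrix, b_ij = z_i^T z_j

# Squared distances computed directly from the coordinates
diff = Z[:, :, None] - Z[:, None, :]          # shape (d', m, m): z_i - z_j for every pair
dist_sq = (diff ** 2).sum(axis=0)

# Squared distances recovered from B via Eq. (3)
diag = np.diag(B)
dist_sq_from_B = diag[:, None] + diag[None, :] - 2 * B
print(np.allclose(dist_sq, dist_sq_from_B))
```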

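For convenience, we assume the reduced samples $Z$ are centered, i.e. $\sum_{i=1}^mz_i=0$. This loses no generality, because translating all samples by the same vector leaves every pairwise distance unchanged. Every row and every column of $B$ then sums to zero, since $\sum_{i=1}^mb_{ij}=(\sum_{i=1}^mz_i)^Tz_j=0$ and likewise for the sum over $j$, i.e. $\sum_{i=1}^mb_{ij}=\sum_{j=1}^mb_{ij}=0$. It follows that: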

$$
\begin{aligned} \sum_{i=1}^mdist_{ij}^2&=\sum_{i=1}^mb_{ii}+\sum_{i=1}^mb_{jj}-2\sum_{i=1}^mb_{ij}\\ &=tr(B)+mb_{jj} \end{aligned} \tag{4}
$$

$$
\begin{aligned} \sum_{j=1}^mdist_{ij}^2&=\sum_{j=1}^mb_{ii}+\sum_{j=1}^mb_{jj}-2\sum_{j=1}^mb_{ij}\\ &=mb_{ii}+tr(B) \end{aligned} \tag{5}
$$

$$
\begin{aligned} \sum_{i=1}^m\sum_{j=1}^mdist_{ij}^2&=\sum_{i=1}^m\sum_{j=1}^mb_{ii}+\sum_{i=1}^m\sum_{j=1}^mb_{jj}-2\sum_{i=1}^m\sum_{j=1}^mb_{ij}\\ &=2m\cdot tr(B) \end{aligned} \tag{6}
$$

where $tr(B)=\sum_{i=1}^m b_{ii}=\sum_{i=1}^m z_i^Tz_i=\sum_{i=1}^m||z_i||^2$ is the trace of $B$. Define:

$$
dist_{i\cdot}^2=\frac{1}{m}\sum_{j=1}^mdist_{ij}^2 \tag{7}
$$

$$
dist_{\cdot j}^2=\frac{1}{m}\sum_{i=1}^mdist_{ij}^2 \tag{8}
$$

$$
dist_{\cdot \cdot}^2=\frac{1}{m^2}\sum_{i=1}^m\sum_{j=1}^mdist_{ij}^2 \tag{9}
$$

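- $dist_{i\cdot}^2$ is the mean squared distance from sample $i$ to all $m$ samples (the zero self-distance $dist_{ii}$ included). A larger value means sample $i$ lies farther from the rest of the data on average.

- $dist_{\cdot j}^2$ is the mean squared distance from all samples to sample $j$. Because $D$ is symmetric, it equals $dist_{j\cdot}^2$.

- $dist_{\cdot \cdot}^2$ is the mean squared distance over all $m^2$ sample pairs, which measures the overall spread of the sample set.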
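As a hypothetical example, take three samples whose pairwise distances form a 3-4-5 triangle. The sketch below computes the three averages of Eqs. (7)-(9); each row or column average divides by $m$ and includes the zero self-distance.

```python
import numpy as np

# Hypothetical symmetric distance matrix with a zero diagonal (a 3-4-5 triangle)
D = np.array([[0.0, 3.0, 4.0],
              [3.0, 0.0, 5.0],
              [4.0, 5.0, 0.0]])
D2 = D ** 2
dist_i_dot_sq = D2.mean(axis=1)    # Eq. (7): average over j for each row i
dist_dot_j_sq = D2.mean(axis=0)    # Eq. (8): average over i for each column j
dist_dot_dot_sq = D2.mean()        # Eq. (9): average over all m^2 entries
print(dist_i_dot_sq, dist_dot_j_sq, dist_dot_dot_sq)
```

Because this $D$ is symmetric, the row and column averages coincide.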

From Eq. (3):

$$
b_{ij}=-\frac{1}{2}(dist_{ij}^2-b_{ii}-b_{jj}) \tag{10}
$$

Substituting Eq. (6) into Eq. (9) gives:

$$
\begin{aligned} dist_{\cdot \cdot}^2&=\frac{1}{m^2}\cdot 2m\cdot tr(B)\\ &=\frac{2}{m}\cdot tr(B) \end{aligned} \tag{11}
$$

Hence:

$$
tr(B)=\frac{m}{2}dist_{\cdot \cdot}^2 \tag{12}
$$
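The identities derived so far can be sanity-checked on a randomly generated, centered $Z$ (the sizes, seed, and the shift of 2.0 are arbitrary choices): the zero row and column sums of $B$, Eqs. (4)-(6), and the trace recovery of Eqs. (11)-(12). This sketch is a check, not part of the algorithm.

```python
import numpy as np

rng = np.random.default_rng(3)
m = 7
Z = rng.normal(size=(3, m)) + 2.0            # columns are samples, shifted away from the origin
Z -= Z.mean(axis=1, keepdims=True)           # center so that sum_i z_i = 0
B = Z.T @ Z
diag = np.diag(B)
trB = np.trace(B)
dist_sq = diag[:, None] + diag[None, :] - 2 * B   # Eq. (3)

# Centering makes every row and column of B sum to zero
row_sums_B = B.sum(axis=1)
col_sums_B = B.sum(axis=0)

# Eqs. (4)-(6)
sum_over_i = dist_sq.sum(axis=0)             # predicted: tr(B) + m * b_jj
sum_over_j = dist_sq.sum(axis=1)             # predicted: m * b_ii + tr(B)
total = dist_sq.sum()                        # predicted: 2m * tr(B)

# Eqs. (11)-(12): recover tr(B) from the mean squared distance
dist_dot_dot_sq = dist_sq.mean()
trB_from_distances = m / 2 * dist_dot_dot_sq
print(trB, trB_from_distances)
```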

Substituting Eq. (4) into Eq. (8) gives:

$$
dist_{\cdot j}^2=\frac{1}{m}(tr(B)+mb_{jj}) \tag{13}
$$

Substituting Eq. (12) into Eq. (13) gives:

$$
\begin{aligned} dist_{\cdot j}^2&=\frac{1}{m}(tr(B)+mb_{jj})\\ &=\frac{1}{m}(\frac{m}{2}dist_{\cdot \cdot}^2+mb_{jj})\\ &=\frac{1}{2}dist_{\cdot \cdot}^2+b_{jj} \end{aligned} \tag{14}
$$

Hence:

$$
b_{jj}=dist_{\cdot j}^2-\frac{1}{2}dist_{\cdot \cdot}^2 \tag{15}
$$

Substituting Eq. (5) into Eq. (7) gives:

$$
dist_{i\cdot}^2=\frac{1}{m}(mb_{ii}+tr(B)) \tag{16}
$$

Substituting Eq. (12) into Eq. (16) gives:

$$
\begin{aligned} dist_{i\cdot}^2&=\frac{1}{m}(mb_{ii}+tr(B))\\ &=\frac{1}{m}(mb_{ii}+\frac{m}{2}dist_{\cdot \cdot}^2)\\ &=b_{ii}+\frac{1}{2}dist_{\cdot \cdot}^2 \end{aligned} \tag{17}
$$

Hence:

$$
b_{ii}=dist_{i\cdot}^2-\frac{1}{2}dist_{\cdot \cdot}^2 \tag{18}
$$
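Eqs. (15) and (18) say that the diagonal of $B$ can be recovered from squared distances alone. The sketch below computes $b_{ii}$ and $b_{jj}$ from the averages of Eqs. (7)-(9) and compares them with the diagonal of $Z^TZ$ for a random centered $Z$ (an illustrative assumption).

```python
import numpy as np

rng = np.random.default_rng(8)
Z = rng.normal(size=(3, 5))
Z -= Z.mean(axis=1, keepdims=True)   # centered, as assumed in the derivation
B = Z.T @ Z

# Squared distances: the only input the derivation relies on
D2 = ((Z[:, :, None] - Z[:, None, :]) ** 2).sum(axis=0)
dist_i_dot_sq = D2.mean(axis=1)      # Eq. (7)
dist_dot_j_sq = D2.mean(axis=0)      # Eq. (8)
dist_dot_dot_sq = D2.mean()          # Eq. (9)

b_ii = dist_i_dot_sq - 0.5 * dist_dot_dot_sq   # Eq. (18)
b_jj = dist_dot_j_sq - 0.5 * dist_dot_dot_sq   # Eq. (15)
print(np.allclose(b_ii, np.diag(B)), np.allclose(b_jj, np.diag(B)))
```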

Finally, substituting Eqs. (15) and (18) into Eq. (10) gives:

$$
b_{ij}=-\frac{1}{2}(dist_{ij}^2-dist_{i\cdot}^2-dist_{\cdot j}^2+dist_{\cdot \cdot}^2) \tag{19}
$$

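Thus the inner-product matrix $B$ can be computed from the distance matrix $D$ alone, since $D$ is the same before and after dimensionality reduction.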
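Eq. (19) translates directly into NumPy broadcasting. The sketch below implements it in a hypothetical helper `inner_product_from_distances`, checks it against $Z^TZ$ for a random centered $Z$, and compares it with the equivalent matrix form $B=-\frac{1}{2}JD^{(2)}J$, where $D^{(2)}$ is the matrix of squared distances and $J=I-\frac{1}{m}\mathbf{1}\mathbf{1}^T$ is the centering matrix (a standard identity, not derived in the text).

```python
import numpy as np

def inner_product_from_distances(D):
    """Eq. (19): recover B from the distance matrix D alone."""
    D2 = D ** 2
    row_mean = D2.mean(axis=1, keepdims=True)   # dist_{i.}^2 as a column vector
    col_mean = D2.mean(axis=0, keepdims=True)   # dist_{.j}^2 as a row vector
    all_mean = D2.mean()                        # dist_{..}^2
    return -0.5 * (D2 - row_mean - col_mean + all_mean)

rng = np.random.default_rng(4)
Z = rng.normal(size=(2, 6))
Z -= Z.mean(axis=1, keepdims=True)              # centered embedding
D = np.sqrt(((Z[:, :, None] - Z[:, None, :]) ** 2).sum(axis=0))

B = inner_product_from_distances(D)
m = D.shape[0]
J = np.eye(m) - np.ones((m, m)) / m             # centering matrix
B_double_centered = -0.5 * J @ (D ** 2) @ J
print(np.allclose(B, Z.T @ Z), np.allclose(B, B_double_centered))
```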

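Finally, we perform an eigendecomposition of $B$, i.e. $B=V\Lambda V^T$, where $\Lambda=\text{diag}(\lambda_1,\lambda_2,...,\lambda_m)$ is the diagonal matrix of eigenvalues with $\lambda_1\geq\lambda_2\geq...\geq\lambda_m$, and $V$ is the matrix of corresponding eigenvectors. Since $B=Z^TZ$ is an $m\times m$ symmetric positive semidefinite matrix, all its eigenvalues are non-negative and at most $d^\prime$ of them are nonzero.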

Suppose $B$ has $d^\star$ nonzero eigenvalues, which form the diagonal matrix $\Lambda_{\star}=\text{diag}(\lambda_1,\lambda_2,...,\lambda_{d^\star})$, and let $V_\star$ be the matrix of the corresponding eigenvectors. Then $B=Z^TZ=V_\star\Lambda_\star V_\star^T$, so $Z$ can be expressed as:

$$
Z=\Lambda_\star^{1/2}V_\star^T \in \mathbb{R}^{d^\star \times m} \tag{20}
$$
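The reconstruction in Eq. (20) can be checked on a random centered $Z$ with $d^\prime=2$ (an illustrative assumption). `np.linalg.eigh` returns eigenvalues in ascending order, so they are re-sorted to match $\lambda_1\geq\lambda_2\geq\dots$. The recovered $\hat Z$ reproduces $B$ and every pairwise distance, but in general it equals the original $Z$ only up to a rotation or reflection, because $B$ fixes inner products and not the coordinate axes.

```python
import numpy as np

rng = np.random.default_rng(9)
Z = rng.normal(size=(2, 6))
Z -= Z.mean(axis=1, keepdims=True)
B = Z.T @ Z                                   # rank 2, so d* = 2

eigvals, eigvecs = np.linalg.eigh(B)          # ascending order for a symmetric matrix
order = np.argsort(eigvals)[::-1]             # re-sort in descending order
eigvals, eigvecs = eigvals[order], eigvecs[:, order]
keep = eigvals > 1e-10                        # treat tiny eigenvalues as zero
Lambda_star = np.diag(eigvals[keep])
V_star = eigvecs[:, keep]

Z_hat = np.sqrt(Lambda_star) @ V_star.T       # Eq. (20), shape (d*, m)

def sq_dists(Y):
    # Squared pairwise distances between the columns of Y
    return ((Y[:, :, None] - Y[:, None, :]) ** 2).sum(axis=0)

print(Z_hat.shape, np.allclose(sq_dists(Z_hat), sq_dists(Z)))
```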

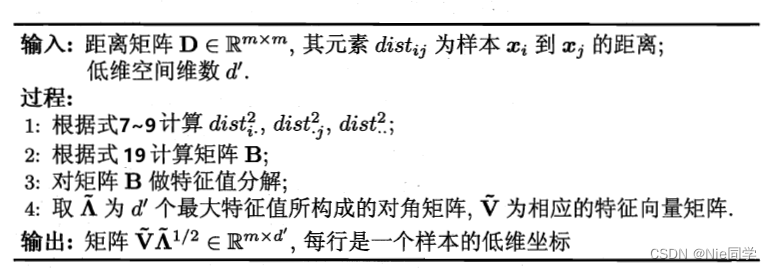

The algorithm flowchart is shown below:

Experimental Analysis

The dataset is shown in the figure below:

Load the dataset:

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt

# Configure a font that can display Chinese characters (SimHei) and render minus signs correctly
plt.rcParams['font.sans-serif'] = ['SimHei']
plt.rcParams['axes.unicode_minus'] = False

# Read the dataset
data = pd.read_csv('data/correlated_dataset.csv')

Function to compute the distance matrix:

def calculate_distance_matrix(data):
    # Compute the pairwise Euclidean distance matrix (one sample per row of data)
    num_samples = len(data)
    distances = np.zeros((num_samples, num_samples))
    for i in range(num_samples):
        for j in range(num_samples):
            distances[i, j] = np.linalg.norm(data[i] - data[j])
    return distances
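The double loop above is easy to read but slow in pure Python for large $m$. A broadcasting-based alternative, sketched below under the hypothetical name `calculate_distance_matrix_vectorized`, should produce the same matrix; it trades memory (an $m\times m\times d$ temporary array) for speed.

```python
import numpy as np

def calculate_distance_matrix_vectorized(data):
    # data: (m, d) array with one sample per row
    diff = data[:, None, :] - data[None, :, :]      # (m, m, d) pairwise differences
    return np.linalg.norm(diff, axis=-1)            # Euclidean norm over the feature axis

rng = np.random.default_rng(6)
sample = rng.normal(size=(5, 4))
fast = calculate_distance_matrix_vectorized(sample)
# Reference values from the same per-pair computation as calculate_distance_matrix
slow = np.array([[np.linalg.norm(sample[i] - sample[j]) for j in range(5)] for i in range(5)])
print(np.allclose(fast, slow))
```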

The MDS algorithm:

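def calculate_mds_diagonal_matrix(distances, d_star):
    m = len(distances)

    # Averages of squared distances, Eqs. (7)-(9); they depend only on D, which the embedding preserves
    dist_i_dot_sq = np.mean(distances**2, axis=1)
    dist_dot_j_sq = np.mean(distances**2, axis=0)
    dist_dot_dot_sq = np.mean(distances**2)

    # Inner-product matrix B, Eq. (19)
    B = -0.5 * (distances**2 - dist_i_dot_sq.reshape(-1, 1) - dist_dot_j_sq + dist_dot_dot_sq)

    # Eigendecomposition of the symmetric matrix B (eigh returns eigenvalues in ascending order)
    eigenvalues, eigenvectors = np.linalg.eigh(B)

    # Keep eigenvalues above a small tolerance (the rest are treated as zero) and their eigenvectors
    nonzero_eigenvalues = eigenvalues[eigenvalues > 1e-10]
    nonzero_eigenvectors = eigenvectors[:, eigenvalues > 1e-10]

    # Indices of the d_star largest nonzero eigenvalues
    top_indices = np.argsort(nonzero_eigenvalues)[::-1][:d_star]
    # Corresponding eigenvectors (not used below; with the eigenvalues they would give Z in Eq. (20))
    selected_eigenvectors = nonzero_eigenvectors[:, top_indices]

    # Diagonal matrix of the selected eigenvalues
    diagonal_matrix = np.diag(nonzero_eigenvalues[top_indices])
    return diagonal_matrix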
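`calculate_mds_diagonal_matrix` returns only the eigenvalues: `selected_eigenvectors` is computed but not used, so the embedding $Z$ itself is never formed. The sketch below is a hypothetical extension, `calculate_mds_embedding` (not part of the original code), that applies Eq. (19) and then Eq. (20) to the $d^\prime$ largest eigenvalues. When $B$ has more than $d^\prime$ nonzero eigenvalues, this truncation preserves the distances only approximately.

```python
import numpy as np

def calculate_mds_embedding(distances, d_prime):
    # Hypothetical extension of calculate_mds_diagonal_matrix that also returns coordinates
    D2 = distances ** 2
    B = -0.5 * (D2 - D2.mean(axis=1, keepdims=True)
                - D2.mean(axis=0, keepdims=True) + D2.mean())   # Eq. (19)
    eigenvalues, eigenvectors = np.linalg.eigh(B)
    order = np.argsort(eigenvalues)[::-1][:d_prime]       # indices of the d' largest eigenvalues
    # Clip negative eigenvalues (round-off, or a non-Euclidean D) to 0 before taking square roots
    top_values = np.clip(eigenvalues[order], 0.0, None)
    top_vectors = eigenvectors[:, order]
    return np.diag(np.sqrt(top_values)) @ top_vectors.T   # Z with shape (d', m), cf. Eq. (20)

# Usage on hypothetical data: 3-D points lying in a plane, so d' = 2 should suffice
rng = np.random.default_rng(10)
planar = rng.normal(size=(7, 2)) @ np.array([[1.0, 0.0, 2.0],
                                              [0.0, 1.0, -1.0]])
dist = np.linalg.norm(planar[:, None, :] - planar[None, :, :], axis=-1)
Z = calculate_mds_embedding(dist, d_prime=2)
dist_Z = np.linalg.norm(Z.T[:, None, :] - Z.T[None, :, :], axis=-1)
print(Z.shape, np.allclose(dist_Z, dist))
```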

Define the plotting function:

def plot_diagonal_matrix(diagonal_matrix):
    plt.imshow(diagonal_matrix, cmap='viridis')
    # Print each value on the main diagonal
    for i in range(diagonal_matrix.shape[0]):
        plt.text(i, i, f'{diagonal_matrix[i, i]:.2f}', color='red', ha='center', va='center')
    plt.colorbar(label='Eigenvalue')
    plt.title('Diagonal matrix of eigenvalues')
    plt.show()

Run the MDS algorithm:

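# Extract the feature columns (every column except the last)
features = data.iloc[:, :-1].values
# Compute the distance matrix
distances = calculate_distance_matrix(features)
# Number of largest eigenvalues to keep (target dimensionality)
d_star = 6
# Use MDS to compute the diagonal matrix of eigenvalues
diagonal_matrix = calculate_mds_diagonal_matrix(distances, d_star)
# Visualize the diagonal matrix
plot_diagonal_matrix(diagonal_matrix)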
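Since `data/correlated_dataset.csv` is not included here, the following sketch runs the same pipeline on a hypothetical synthetic stand-in: 6 features that are exact linear combinations of 2 latent variables, plus a placeholder label column that is dropped just as `data.iloc[:, :-1]` does. For such data, $B$ should have only 2 eigenvalues above the 1e-10 tolerance used in `calculate_mds_diagonal_matrix`, which is the kind of pattern the eigenvalue plot is meant to reveal. No results for the real dataset are implied.

```python
import numpy as np

rng = np.random.default_rng(11)
m = 50
latent = rng.normal(size=(m, 2))                  # 2 hidden factors (hypothetical)
mixing = rng.normal(size=(2, 6))
features = latent @ mixing                        # 6 perfectly correlated features
labels = rng.integers(0, 2, size=(m, 1))          # placeholder label column
table = np.hstack([features, labels])

X = table[:, :-1]                                 # drop the last column, as the original code does
D2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
B = -0.5 * (D2 - D2.mean(axis=1, keepdims=True)
            - D2.mean(axis=0, keepdims=True) + D2.mean())   # Eq. (19)
eigenvalues = np.linalg.eigvalsh(B)[::-1]         # eigenvalues in descending order
n_nonzero = int((eigenvalues > 1e-10).sum())
print(n_nonzero)
```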

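This post introduces low-dimensional embedding, a technique that reduces the dimensionality of high-dimensional data while preserving its characteristics and reducing its complexity; it is commonly used for visualization and related tasks. In k-nearest-neighbor learning, high-dimensional data degrades kNN performance, and low-dimensional embedding can map the data into a low-dimensional subspace to improve it. The post also gives the related formula derivations and an experimental analysis.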
