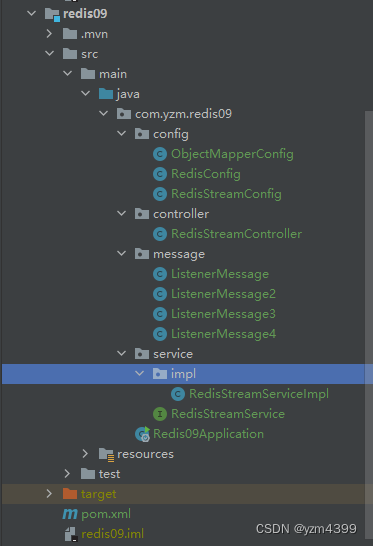

1. Environment

```xml
<!-- RedisTemplate -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
</dependency>
```

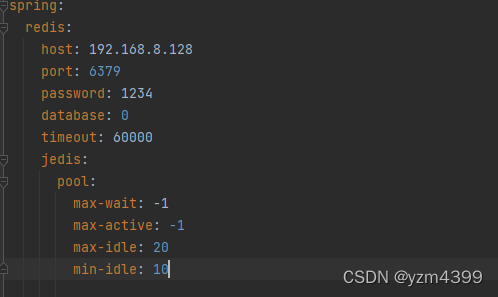

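```yaml
spring:
  redis:
    host: 192.168.8.128
    port: 6379
    password: 1234
    database: 0
    # milliseconds; must be longer than any BLOCK time used when reading streams
    timeout: 60000
    # only applies when the Jedis client is on the classpath (the starter defaults to Lettuce)
    jedis:
      pool:
        max-wait: -1
        max-active: -1
        max-idle: 20
        min-idle: 10
```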

2. Redis configuration

```java
package com.yzm.redis09.config;

import com.fasterxml.jackson.annotation.JsonAutoDetect;
import com.fasterxml.jackson.annotation.JsonInclude;
import com.fasterxml.jackson.annotation.JsonTypeInfo;
import com.fasterxml.jackson.annotation.PropertyAccessor;
import com.fasterxml.jackson.core.JsonGenerator;
import com.fasterxml.jackson.core.JsonParser;
import com.fasterxml.jackson.databind.*;
import com.fasterxml.jackson.datatype.jsr310.JavaTimeModule;

import java.io.IOException;
import java.text.SimpleDateFormat;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

public class ObjectMapperConfig {

    public static final ObjectMapper objectMapper;

    private static final String PATTERN = "yyyy-MM-dd HH:mm:ss";

    static {
        JavaTimeModule javaTimeModule = new JavaTimeModule();
        javaTimeModule.addSerializer(LocalDateTime.class, new LocalDateTimeSerializer());
        javaTimeModule.addDeserializer(LocalDateTime.class, new LocalDateTimeDeserializer());

        objectMapper = new ObjectMapper()
                // Pretty-print the JSON (handy when printing to the console)
                //.enable(SerializationFeature.INDENT_OUTPUT)
                // Include.ALWAYS      serialize every property (default)
                // Include.NON_NULL    skip properties whose value is null
                // Include.NON_EMPTY   also skip empty strings and empty collections
                // Include.NON_DEFAULT skip properties that hold their default value
                .setSerializationInclusion(JsonInclude.Include.NON_NULL)
                // Do not throw when serializing an object that has no properties
                .configure(SerializationFeature.FAIL_ON_EMPTY_BEANS, false)
                // Do not throw when the JSON contains properties the target class does not have
                .configure(DeserializationFeature.FAIL_ON_UNKNOWN_PROPERTIES, false)
                // Write dates as formatted strings instead of timestamps (the default);
                // the format itself is set on the next line
                .configure(SerializationFeature.WRITE_DATES_AS_TIMESTAMPS, false)
                .setDateFormat(new SimpleDateFormat(PATTERN))
                // Custom serialization and deserialization for LocalDateTime
                .registerModule(javaTimeModule)
                .setVisibility(PropertyAccessor.ALL, JsonAutoDetect.Visibility.ANY)
                // Required: without type information, reading values back fails with
                // java.lang.ClassCastException: java.util.LinkedHashMap cannot be cast to XXX
                // (deprecated since Jackson 2.10 in favor of activateDefaultTyping)
                .enableDefaultTyping(ObjectMapper.DefaultTyping.NON_FINAL, JsonTypeInfo.As.PROPERTY);
    }

    static class LocalDateTimeSerializer extends JsonSerializer<LocalDateTime> {
        @Override
        public void serialize(LocalDateTime value, JsonGenerator gen, SerializerProvider serializers) throws IOException {
            gen.writeString(value.format(DateTimeFormatter.ofPattern(PATTERN)));
        }
    }

    static class LocalDateTimeDeserializer extends JsonDeserializer<LocalDateTime> {
        @Override
        public LocalDateTime deserialize(JsonParser p, DeserializationContext deserializationContext) throws IOException {
            return LocalDateTime.parse(p.getValueAsString(), DateTimeFormatter.ofPattern(PATTERN));
        }
    }
}
```
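At their core, the custom `LocalDateTime` serializer and deserializer above format and parse with the same `yyyy-MM-dd HH:mm:ss` pattern. The following stdlib-only sketch shows that round trip without Jackson. `LocalDateTimeFormatDemo` is a hypothetical class name used only for illustration. The sketch shows what gets written to Redis and that reading it back restores the value, but only to second precision.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical demo: mirrors LocalDateTimeSerializer/Deserializer without Jackson
class LocalDateTimeFormatDemo {
    // DateTimeFormatter is immutable and thread-safe, so one shared instance is fine
    static final DateTimeFormatter FORMATTER = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // What the serializer writes with gen.writeString(...)
    static String serialize(LocalDateTime value) {
        return value.format(FORMATTER);
    }

    // What the deserializer does with p.getValueAsString()
    static LocalDateTime deserialize(String text) {
        return LocalDateTime.parse(text, FORMATTER);
    }

    public static void main(String[] args) {
        LocalDateTime t = LocalDateTime.of(2021, 12, 21, 9, 34, 41, 582_000_000);
        String text = serialize(t);
        System.out.println(text); // 2021-12-21 09:34:41
        // The pattern has no fraction-of-second field, so milliseconds are lost
        System.out.println(deserialize(text).equals(t.withNano(0))); // true
    }
}
```

If sub-second precision matters, the pattern would need a fraction field (for example `.SSS`) on both sides.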

```java
package com.yzm.redis09.config;

import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;

@Configuration
public class RedisConfig {

    /**
     * RedisTemplate configuration
     */
    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory factory) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        // Connection factory
        template.setConnectionFactory(factory);

        // Serialize and deserialize values with Jackson2JsonRedisSerializer
        // (RedisTemplate uses JDK serialization by default)
        Jackson2JsonRedisSerializer<Object> jacksonSerializer = new Jackson2JsonRedisSerializer<>(Object.class);
        jacksonSerializer.setObjectMapper(ObjectMapperConfig.objectMapper);
        StringRedisSerializer stringRedisSerializer = new StringRedisSerializer();

        // Keys are plain strings (StringRedisSerializer); values are JSON
        template.setKeySerializer(stringRedisSerializer);
        template.setValueSerializer(jacksonSerializer);
        // Same scheme for hash keys and hash values
        template.setHashKeySerializer(stringRedisSerializer);
        template.setHashValueSerializer(jacksonSerializer);
        template.afterPropertiesSet();
        return template;
    }
}
```

3. Stream commands

Command reference: https://www.lanmper.cn/redis/t9475.html

```java
package com.yzm.redis09.service;

import org.springframework.data.domain.Range;
import org.springframework.data.redis.connection.RedisZSetCommands;
import org.springframework.data.redis.connection.stream.*;

import java.util.List;
import java.util.Map;

public interface RedisStreamService {

    /**
     * Produce a message
     * XADD key * hkey1 hval1 [hkey2 hval2 ...]
     * If key does not exist, a stream named key is created and the message is appended to it;
     * if key exists, the message is appended to the existing stream.
     */
    RecordId xAdd(String key, Map<String, Object> map);

    /**
     * Show stream details
     * XINFO STREAM key
     */
    StreamInfo.XInfoStream xInfo(String key);

    /**
     * Number of messages in the stream
     * XLEN key
     */
    Long xLen(String key);

    /**
     * Query messages
     * XRANGE key start end [COUNT count]
     * range: the ID interval to query, e.g. (ID1, ID2) returns the messages between ID1 and ID2;
     *        the special values "-" and "+" stand for the smallest and largest possible IDs
     *   Range.unbounded(): everything
     *   Range.closed(ID1, ID2): [ID1, ID2]
     *   Range.open(ID1, ID2): (ID1, ID2)
     * limit: maximum number of messages returned
     *   Limit.limit().count(n)
     */
    List<MapRecord<String, Object, Object>> xRange(String key, Range<String> range, RedisZSetCommands.Limit limit);

    default List<MapRecord<String, Object, Object>> xRange(String key, Range<String> range) {
        return this.xRange(key, range, RedisZSetCommands.Limit.unlimited());
    }

    /**
     * Query messages in reverse order
     * XREVRANGE key end start [COUNT count]
     * Used exactly like xRange, but results come back in reverse order (largest ID first)
     */
    List<MapRecord<String, Object, Object>> xReverseRange(String key, Range<String> range, RedisZSetCommands.Limit limit);

    default List<MapRecord<String, Object, Object>> xReverseRange(String key, Range<String> range) {
        return this.xReverseRange(key, range, RedisZSetCommands.Limit.unlimited());
    }

    /**
     * Delete one or more messages
     * XDEL key ID [ID ...]
     */
    Long xDel(String key, String... recordIds);

    /**
     * Trim the stream (keep only the newest messages)
     * XTRIM key MAXLEN | MINID [~] threshold
     * count: number of messages to keep (MAXLEN). With MINID the threshold is a message ID and
     *        every message with a smaller ID is removed; this method only exposes MAXLEN.
     * approximateTrimming:
     *   false: exact trimming, exactly count messages remain
     *   true:  approximate trimming (~), at least count messages remain, possibly a few more
     *          (in my tests it only took effect once the stream held more than about 200 messages)
     */
    default Long xTrim(String key, long count) {
        return this.xTrim(key, count, false);
    }

    Long xTrim(String key, long count, boolean approximateTrimming);

    /**
     * Create a consumer group
     * XGROUP CREATE key groupname id-or-$
     * XGROUP SETID key groupname id-or-$  (group already exists: reset where it reads from)
     * id 0: the group starts from the first message in the stream
     * id $: the group only receives messages added from now on (default)
     */
    default String xGroupCreate(String key, String group) {
        return xGroupCreate(key, ReadOffset.latest(), group);
    }

    String xGroupCreate(String key, ReadOffset offset, String group);

    /**
     * Destroy a consumer group
     * XGROUP DESTROY key groupname
     */
    Boolean xGroupDestroy(String key, String group);

    /**
     * Show consumer group details
     * XINFO GROUPS key
     */
    StreamInfo.XInfoGroups xInfoGroups(String key);

    /**
     * Read messages
     * XREAD [COUNT count] [BLOCK milliseconds] STREAMS key [key ...] id [id ...]
     * Reads from one or more streams.
     * Special ID 0-0: read from the first message in the stream
     * Special ID $:   only receive messages added with XADD after the call starts blocking,
     *                 ignoring the existing history
     * $ (the latest ID) is only useful in blocking mode; without BLOCK it is meaningless.
     */
    List<MapRecord<String, Object, Object>> xRead(StreamReadOptions options, StreamOffset<String>... offsets);

    /**
     * Read messages as a member of a consumer group (group and consumer are mandatory)
     * XREADGROUP GROUP group consumer [COUNT count] [BLOCK milliseconds] [NOACK] STREAMS key [key ...] ID [ID ...]
     * Special ID 0-0: re-read this consumer's pending list (PEL); BLOCK and NOACK are ignored,
     *                 so nothing is auto-acknowledged
     * Special ID > :  only messages never delivered to any consumer of this group
     *                 (newer than the group's last-delivered ID)
     * Special ID $ :  meaningless for XREADGROUP; Redis replies:
     *                 ERR The $ ID is meaningless in the context of XREADGROUP
     */
    List<MapRecord<String, Object, Object>> xReadGroup(Consumer consumer, StreamReadOptions options, StreamOffset<String>... offsets);

    /**
     * Consumer details
     * XINFO CONSUMERS key group
     */
    StreamInfo.XInfoConsumers xInfoConsumers(String key, String group);

    /**
     * Delete a consumer
     * XGROUP DELCONSUMER key groupname consumername
     */
    Boolean xGroupDelConsumer(String key, Consumer consumer);

    /**
     * Pending Entries List (PEL)
     * XPENDING key group [consumer] [start end count]
     * Summary of a consumer group's pending list
     */
    PendingMessagesSummary xPending(String key, String group);

    /**
     * Pending list of a single consumer
     */
    default PendingMessages xPending(String key, Consumer consumer) {
        return this.xPending(key, consumer, Range.unbounded(), -1L);
    }

    PendingMessages xPending(String key, Consumer consumer, Range<?> range, long count);

    /**
     * Acknowledge messages (removes one or more entries from the PEL)
     * XACK key group ID [ID ...]
     */
    Long xAck(String key, String group, String... recordIds);

    /**
     * Transfer (claim) messages
     * XCLAIM key group consumer min-idle-time ID [ID ...]
     * idleTime: transfer condition in seconds; a message is only claimed if it has been idle
     *           in the PEL for at least this long
     */
    default List<ByteRecord> xClaim(String key, String group, String consumer, long idleTime, String recordId) {
        return xClaim(key, group, consumer, idleTime, RecordId.of(recordId));
    }

    List<ByteRecord> xClaim(String key, String group, String consumer, long idleTime, RecordId... recordIds);
}
```

```java
package com.yzm.redis09.service.impl;

import com.yzm.redis09.service.RedisStreamService;
import org.springframework.dao.DataAccessException;
import org.springframework.data.domain.Range;
import org.springframework.data.redis.connection.RedisConnection;
import org.springframework.data.redis.connection.RedisZSetCommands;
import org.springframework.data.redis.connection.stream.*;
import org.springframework.data.redis.core.RedisCallback;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.core.StreamOperations;
import org.springframework.stereotype.Service;

import java.nio.charset.StandardCharsets;
import java.time.Duration;
import java.util.List;
import java.util.Map;

@Service
public class RedisStreamServiceImpl implements RedisStreamService {

    private final RedisTemplate<String, Object> redisTemplate;
    private final StreamOperations<String, Object, Object> streamOperations;

    public RedisStreamServiceImpl(RedisTemplate<String, Object> redisTemplate) {
        this.redisTemplate = redisTemplate;
        this.streamOperations = redisTemplate.opsForStream();
    }

    @Override
    public RecordId xAdd(String key, Map<String, Object> map) {
        return streamOperations.add(key, map);
    }

    @Override
    public StreamInfo.XInfoStream xInfo(String key) {
        return streamOperations.info(key);
    }

    @Override
    public Long xLen(String key) {
        return streamOperations.size(key);
    }

    @Override
    public List<MapRecord<String, Object, Object>> xRange(String key, Range<String> range, RedisZSetCommands.Limit limit) {
        return streamOperations.range(key, range, limit);
    }

    @Override
    public List<MapRecord<String, Object, Object>> xReverseRange(String key, Range<String> range, RedisZSetCommands.Limit limit) {
        return streamOperations.reverseRange(key, range, limit);
    }

    @Override
    public Long xDel(String key, String... recordIds) {
        return streamOperations.delete(key, recordIds);
    }

    @Override
    public Long xTrim(String key, long count, boolean approximateTrimming) {
        return streamOperations.trim(key, count, approximateTrimming);
    }

    @Override
    public String xGroupCreate(String key, ReadOffset offset, String group) {
        return streamOperations.createGroup(key, offset, group);
    }

    @Override
    public Boolean xGroupDestroy(String key, String group) {
        return streamOperations.destroyGroup(key, group);
    }

    @Override
    public StreamInfo.XInfoGroups xInfoGroups(String key) {
        return streamOperations.groups(key);
    }

    @SafeVarargs
    @Override
    public final List<MapRecord<String, Object, Object>> xRead(StreamReadOptions options, StreamOffset<String>... offsets) {
        return streamOperations.read(options, offsets);
    }

    @SafeVarargs
    @Override
    public final List<MapRecord<String, Object, Object>> xReadGroup(Consumer consumer, StreamReadOptions options, StreamOffset<String>... offsets) {
        return streamOperations.read(consumer, options, offsets);
    }

    @Override
    public StreamInfo.XInfoConsumers xInfoConsumers(String key, String group) {
        return streamOperations.consumers(key, group);
    }

    @Override
    public Boolean xGroupDelConsumer(String key, Consumer consumer) {
        return streamOperations.deleteConsumer(key, consumer);
    }

    @Override
    public PendingMessagesSummary xPending(String key, String group) {
        return streamOperations.pending(key, group);
    }

    @Override
    public PendingMessages xPending(String key, Consumer consumer, Range<?> range, long count) {
        return streamOperations.pending(key, consumer, range, count);
    }

    @Override
    public Long xAck(String key, String group, String... recordIds) {
        return streamOperations.acknowledge(key, group, recordIds);
    }

    @Override
    public List<ByteRecord> xClaim(String key, String group, String consumer, long idleTime, RecordId... recordIds) {
        // XCLAIM through the low-level connection; idleTime is interpreted as seconds
        return redisTemplate.execute(new RedisCallback<List<ByteRecord>>() {
            @Override
            public List<ByteRecord> doInRedis(RedisConnection redisConnection) throws DataAccessException {
                // Encode the key as UTF-8 (as StringRedisSerializer does), not with the platform charset
                return redisConnection.streamCommands().xClaim(key.getBytes(StandardCharsets.UTF_8), group, consumer, Duration.ofSeconds(idleTime), recordIds);
            }
        });
    }
}
```

4. Calling the commands

```java
package com.yzm.redis09.controller;

import com.fasterxml.jackson.core.JsonProcessingException;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.yzm.redis09.config.ObjectMapperConfig;
import com.yzm.redis09.service.RedisStreamService;
import lombok.extern.slf4j.Slf4j;
import org.springframework.data.domain.Range;
import org.springframework.data.redis.connection.RedisZSetCommands;
import org.springframework.data.redis.connection.stream.*;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;

import java.time.Duration;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

@Slf4j
@RestController
public class RedisStreamController {

    private final ObjectMapper objectMapper = ObjectMapperConfig.objectMapper;

    private final RedisStreamService redisStreamService;

    public RedisStreamController(RedisStreamService redisStreamService) {
        this.redisStreamService = redisStreamService;
    }

    // XADD: append one message {id, name}
    @GetMapping("/add")
    public void add(@RequestParam("key") String key,
                    @RequestParam("id") Integer id,
                    @RequestParam("name") String name) {
        Map<String, Object> map = new HashMap<>();
        map.put("id", id);
        map.put("name", name);
        System.out.println("recordId = " + redisStreamService.xAdd(key, map));
    }

    // Batch XADD: append 100 messages with ids id .. id + 99
    @GetMapping("/padd")
    public void padd(@RequestParam("key") String key,
                     @RequestParam("id") Integer id) {
        for (int i = id; i < id + 100; i++) {
            Map<String, Object> map = new HashMap<>();
            map.put("id", i);
            map.put("name", i);
            System.out.println("recordId = " + redisStreamService.xAdd(key, map));
        }
    }

    // XINFO STREAM
    @GetMapping("/info")
    public void info(@RequestParam("key") String key) throws JsonProcessingException {
        StreamInfo.XInfoStream info = redisStreamService.xInfo(key);
        System.out.println("xInfo = " + objectMapper.writerWithDefaultPrettyPrinter().writeValueAsString(info));
    }

    // XLEN
    @GetMapping("/len")
    public void len(@RequestParam("key") String key) {
        System.out.println("xLen = " + redisStreamService.xLen(key));
    }
```

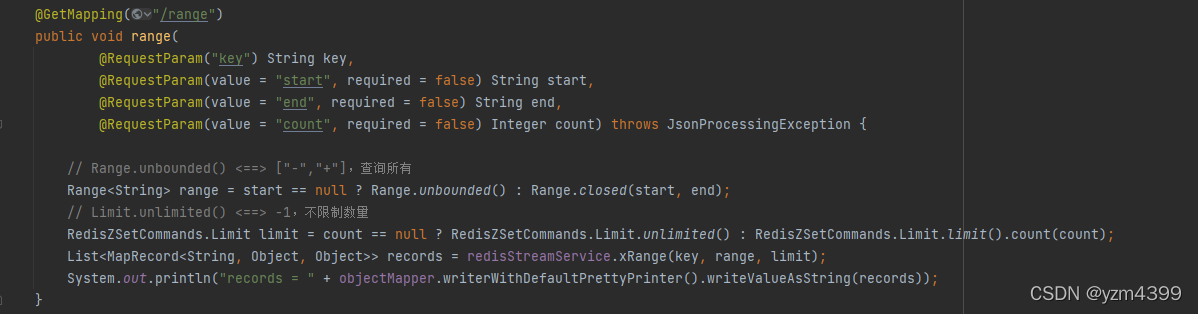

```java
    @GetMapping("/range")
    public void range(@RequestParam("key") String key,
                      @RequestParam(value = "start", required = false) String start,
                      @RequestParam(value = "end", required = false) String end,
                      @RequestParam(value = "count", required = false) Integer count) throws JsonProcessingException {
        // Range.unbounded() <==> ["-", "+"]: query everything
        Range<String> range = start == null ? Range.unbounded() : Range.closed(start, end);
        // Limit.unlimited() <==> -1: no limit on the number of results
        RedisZSetCommands.Limit limit = count == null ? RedisZSetCommands.Limit.unlimited() : RedisZSetCommands.Limit.limit().count(count);
        List<MapRecord<String, Object, Object>> records = redisStreamService.xRange(key, range, limit);
        System.out.println("records = " + objectMapper.writerWithDefaultPrettyPrinter().writeValueAsString(records));
    }

    @GetMapping("/del")
    public void del(@RequestParam("key") String key, @RequestParam("recordId") String recordId) {
        System.out.println("xDel = " + redisStreamService.xDel(key, recordId));
    }

    // at: true = approximate trimming (~), false = exact trimming
    @GetMapping("/trim")
    public void trim(@RequestParam("key") String key,
                     @RequestParam("count") int count,
                     @RequestParam("at") boolean at) {
        System.out.println("xTrim = " + redisStreamService.xTrim(key, count, at));
    }

    @GetMapping("/createGroup")
    public void createGroup(@RequestParam("key") String key, @RequestParam("group") String group) {
        System.out.println("xGroupCreate = " + redisStreamService.xGroupCreate(key, group));
    }

    @GetMapping("/destroyGroup")
    public void destroyGroup(@RequestParam("key") String key, @RequestParam("group") String group) {
        System.out.println("xGroupDestroy = " + redisStreamService.xGroupDestroy(key, group));
    }

    @GetMapping("/groups")
    public void groups(@RequestParam("key") String key) throws JsonProcessingException {
        StreamInfo.XInfoGroups groups = redisStreamService.xInfoGroups(key);
        System.out.println("groups = " + objectMapper.writerWithDefaultPrettyPrinter().writeValueAsString(groups));
    }

    @GetMapping("/read")
    public void read(@RequestParam("key") String key,
                     @RequestParam(value = "count") int count,
                     @RequestParam(value = "ack") boolean ack,
                     @RequestParam(value = "block", required = false) Long block,
                     @RequestParam(value = "mode") int mode) {
        log.info("start ...");
        // count: maximum number of messages to read
        StreamReadOptions options = StreamReadOptions.empty().count(count);
        // XREAD does not support NOACK; Redis replies:
        // ERR The NOACK option is only supported by XREADGROUP. You called XREAD instead.
        if (ack) options = options.autoAcknowledge();
        // block: block and wait, with the given timeout in seconds
        if (block != null && block >= 0L) options = options.block(Duration.ofSeconds(block));

        StreamOffset<String> offset;
        if (mode == 1) {
            // Special ID 0-0: read from the first message in the stream; existing messages
            // are returned immediately, so blocking has no effect
            offset = StreamOffset.fromStart(key);
        } else if (mode == 2) {
            // Special ID > : not supported by XREAD; Redis replies:
            // ERR The > ID can be specified only when calling XREADGROUP using the GROUP <group> <consumer> option.
            offset = StreamOffset.create(key, ReadOffset.lastConsumed());
        } else {
            // Special ID $ : only useful for XREAD in blocking mode; without BLOCK it returns nothing
            offset = StreamOffset.create(key, ReadOffset.latest());
        }
        List<MapRecord<String, Object, Object>> read = redisStreamService.xRead(options, offset);
        System.out.println("read = " + read);
        log.info("end ...");
    }
```

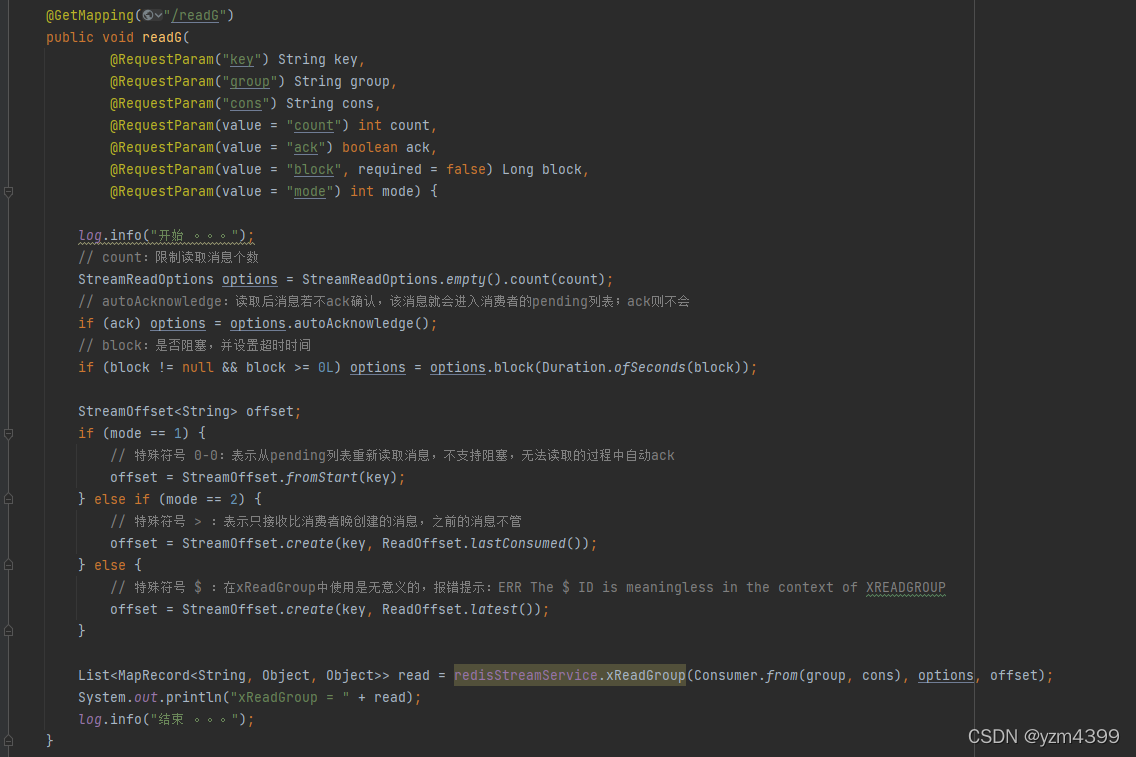

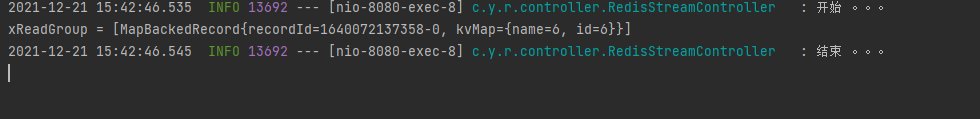

```java
    @GetMapping("/readG")
    public void readG(@RequestParam("key") String key,
                      @RequestParam("group") String group,
                      @RequestParam("cons") String cons,
                      @RequestParam(value = "count") int count,
                      @RequestParam(value = "ack") boolean ack,
                      @RequestParam(value = "block", required = false) Long block,
                      @RequestParam(value = "mode") int mode) {
        log.info("start ...");
        // count: maximum number of messages to read
        StreamReadOptions options = StreamReadOptions.empty().count(count);
        // autoAcknowledge (NOACK): without it, every message read goes into the consumer's
        // pending list (PEL) until it is acknowledged; with it, it does not
        if (ack) options = options.autoAcknowledge();
        // block: block and wait, with the given timeout in seconds
        if (block != null && block >= 0L) options = options.block(Duration.ofSeconds(block));

        StreamOffset<String> offset;
        if (mode == 1) {
            // Special ID 0-0: re-read this consumer's pending list; BLOCK and NOACK are ignored,
            // so nothing is auto-acknowledged
            offset = StreamOffset.fromStart(key);
        } else if (mode == 2) {
            // Special ID > : only messages never delivered to any consumer of this group
            offset = StreamOffset.create(key, ReadOffset.lastConsumed());
        } else {
            // Special ID $ : meaningless for XREADGROUP; Redis replies:
            // ERR The $ ID is meaningless in the context of XREADGROUP
            offset = StreamOffset.create(key, ReadOffset.latest());
        }
        List<MapRecord<String, Object, Object>> read = redisStreamService.xReadGroup(Consumer.from(group, cons), options, offset);
        System.out.println("xReadGroup = " + read);
        log.info("end ...");
    }

    @GetMapping("/consumers")
    public void consumers(@RequestParam("key") String key, @RequestParam("group") String group) throws JsonProcessingException {
        StreamInfo.XInfoConsumers consumers = redisStreamService.xInfoConsumers(key, group);
        System.out.println("consumers = " + objectMapper.writerWithDefaultPrettyPrinter().writeValueAsString(consumers));
    }

    @GetMapping("/delConsumer")
    public void delConsumer(@RequestParam("key") String key,
                            @RequestParam("group") String group,
                            @RequestParam("cons") String cons) {
        System.out.println("delConsumer = " + redisStreamService.xGroupDelConsumer(key, Consumer.from(group, cons)));
    }

    @GetMapping("/pending")
    public void pending(@RequestParam("key") String key, @RequestParam(value = "group") String group) throws JsonProcessingException {
        PendingMessagesSummary pending = redisStreamService.xPending(key, group);
        System.out.println("pendingSummary = " + objectMapper.writerWithDefaultPrettyPrinter().writeValueAsString(pending));
    }

    @GetMapping("/pending2")
    public void pending2(@RequestParam("key") String key,
                         @RequestParam(value = "group") String group,
                         @RequestParam(value = "cons") String cons) throws JsonProcessingException {
        PendingMessages pending = redisStreamService.xPending(key, Consumer.from(group, cons));
        System.out.println("pending = " + objectMapper.writerWithDefaultPrettyPrinter().writeValueAsString(pending));
    }

    @GetMapping("/ack")
    public void ack(@RequestParam("key") String key,
                    @RequestParam(value = "group") String group,
                    @RequestParam(value = "reId") String reId) {
        System.out.println("ack = " + redisStreamService.xAck(key, group, reId));
    }

    // time: minimum idle time in seconds
    @GetMapping("/claim")
    public void xClaim(@RequestParam("key") String key,
                       @RequestParam(value = "group") String group,
                       @RequestParam(value = "cons") String cons,
                       @RequestParam("time") long idleTime,
                       @RequestParam("reid") String reid) {
        List<ByteRecord> byteRecords = redisStreamService.xClaim(key, group, cons, idleTime, reid);
        // XCLAIM returns nothing if the ID is not pending or has not been idle long enough
        if (byteRecords == null || byteRecords.isEmpty()) {
            System.out.println("nothing claimed");
            return;
        }
        ByteRecord record = byteRecords.get(0);
        System.out.println(record.getId());
        // Field names and values are raw bytes; decode them for printing
        record.getValue().forEach((k, v) -> System.out.println(
                new String(k, java.nio.charset.StandardCharsets.UTF_8) + " = "
                        + new String(v, java.nio.charset.StandardCharsets.UTF_8)));
    }
}
```

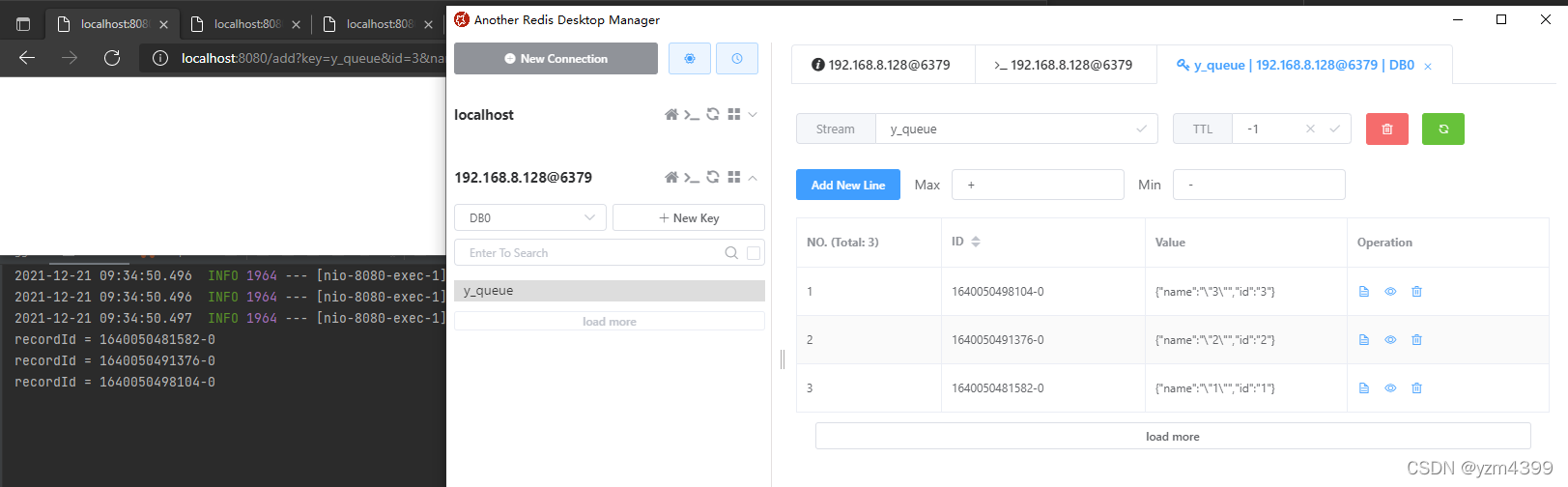

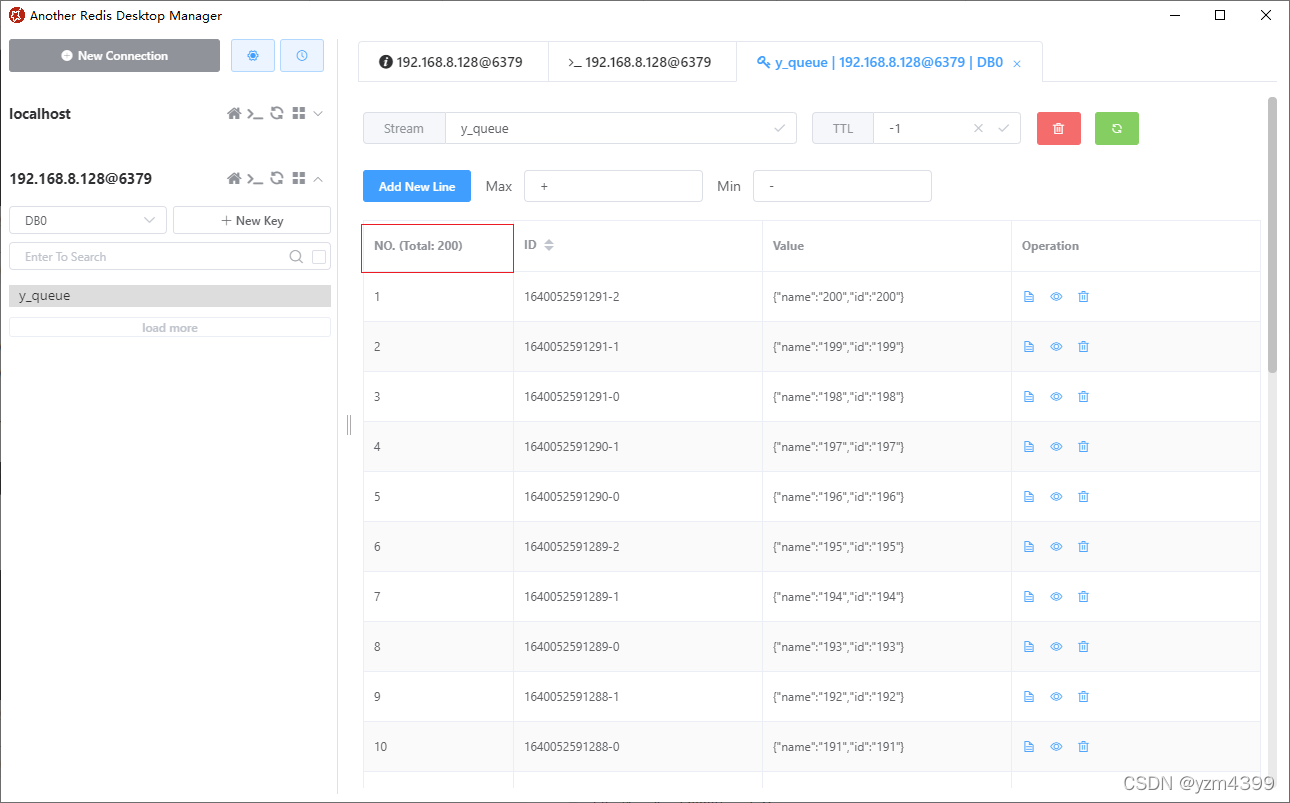

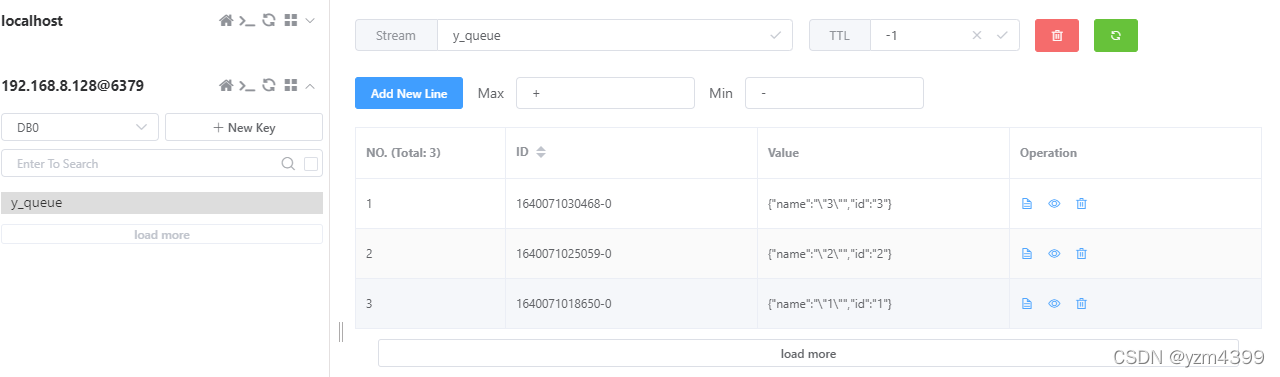

Produce messages

XADD

http://localhost:8080/add?key=y_queue&id=1&name=1

http://localhost:8080/add?key=y_queue&id=2&name=2

http://localhost:8080/add?key=y_queue&id=3&name=3

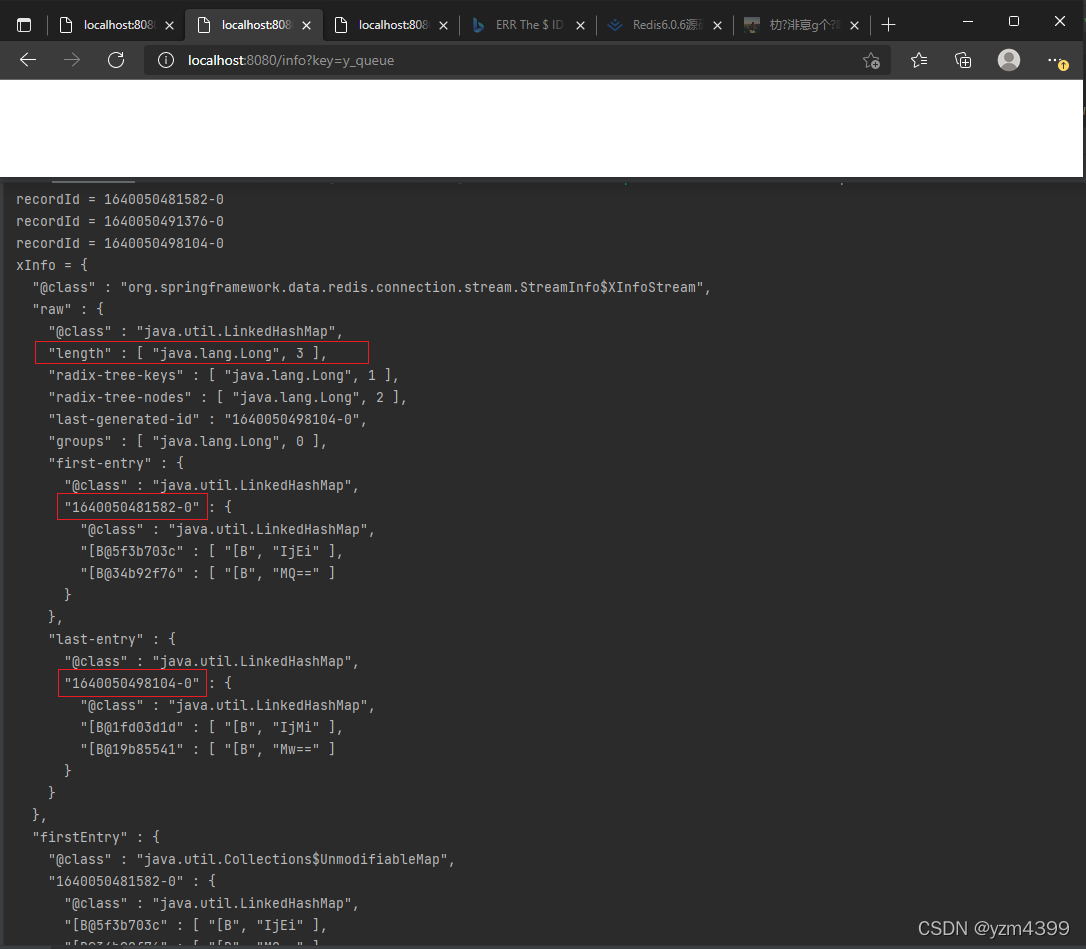
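The record IDs printed by `/add` have the form `<millisecondsTime>-<sequenceNumber>`, for example `1640050481582-0`. With `*`, Redis builds each ID from the current Unix time in milliseconds. If several entries land in the same millisecond, or the clock goes backwards, it keeps the last millisecond value and increments the sequence number instead, so IDs always grow. The sketch below simplifies that rule and ignores sequence overflow. `StreamIdDemo` is a hypothetical class, not part of Redis or Spring. The sketch also shows why IDs have to be compared numerically rather than as text.

```java
// Hypothetical model of how XADD * assigns IDs (sequence overflow ignored)
class StreamIdDemo {
    private long lastMs = 0;   // millisecond part of the last ID handed out
    private long lastSeq = -1; // sequence part of the last ID handed out

    // Next ID for an entry added when the server clock reads nowMs
    String nextId(long nowMs) {
        if (nowMs > lastMs) {
            lastMs = nowMs; // new millisecond: sequence restarts at 0
            lastSeq = 0;
        } else {
            lastSeq++;      // same millisecond or clock went back: keep ms, bump sequence
        }
        return lastMs + "-" + lastSeq;
    }

    // Compare two IDs numerically: first by milliseconds, then by sequence
    static int compare(String a, String b) {
        String[] x = a.split("-");
        String[] y = b.split("-");
        int byMs = Long.compare(Long.parseLong(x[0]), Long.parseLong(y[0]));
        return byMs != 0 ? byMs : Long.compare(Long.parseLong(x[1]), Long.parseLong(y[1]));
    }

    public static void main(String[] args) {
        StreamIdDemo gen = new StreamIdDemo();
        System.out.println(gen.nextId(1640050481582L)); // 1640050481582-0
        System.out.println(gen.nextId(1640050481582L)); // 1640050481582-1
        System.out.println(gen.nextId(1640050481581L)); // 1640050481582-2 (clock went back)
        System.out.println(gen.nextId(1640050481600L)); // 1640050481600-0
        // As text "99-0" sorts after "100-0", but as IDs 99-0 comes first
        System.out.println(compare("99-0", "100-0") < 0); // true
    }
}
```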

Stream information

XINFO

http://localhost:8080/info?key=y_queue

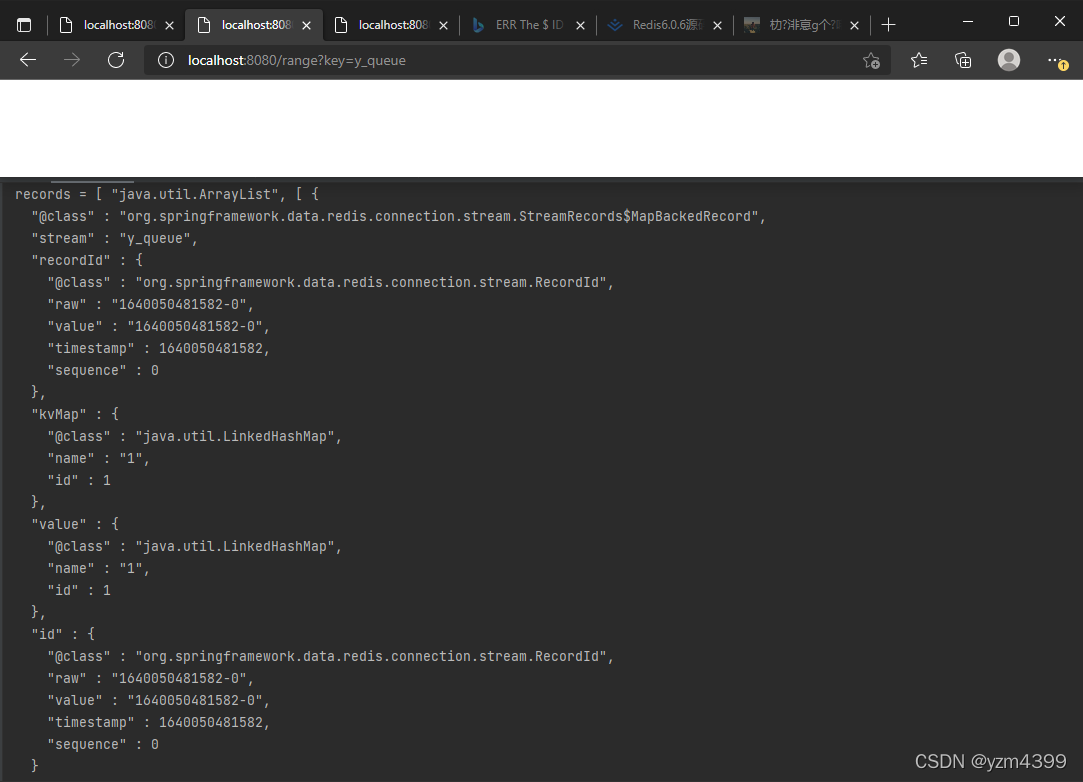

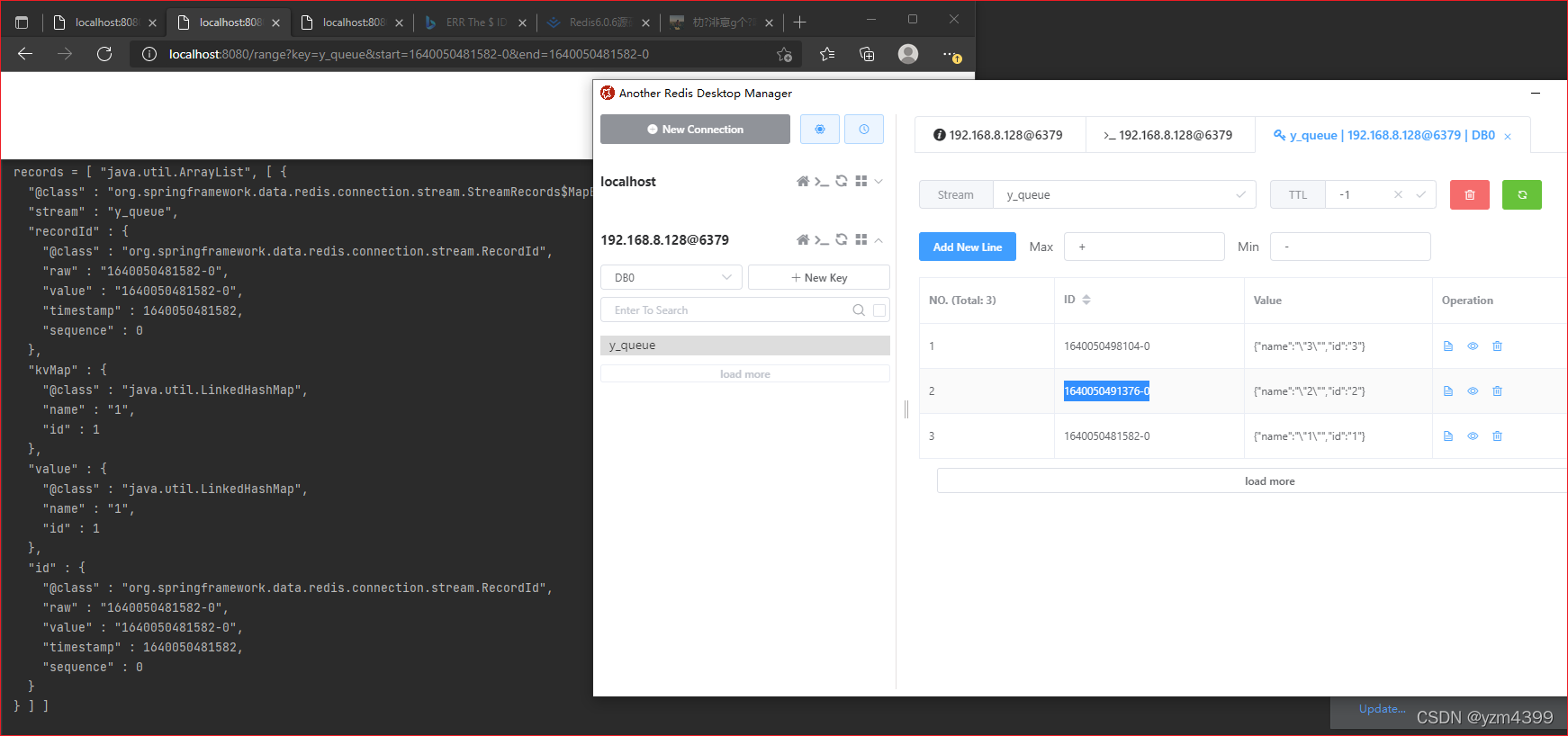

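XRANGE: read entries from the stream

Range: an interval over message IDs; closed == [ ], open == ( )

Limit: maximum number of entries returned

http://localhost:8080/range?key=y_queue

[-, +]: returns all three messages (1, 2, 3)

http://localhost:8080/range?key=y_queue&start=1640050481582-0&end=1640050481582-0

[1640050481582-0, 1640050481582-0]: returns only message 1 (1640050481582-0 is the ID of message 1)

http://localhost:8080/range?key=y_queue&count=1

[-, +] with the count limited to 1: only message 1 is returned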

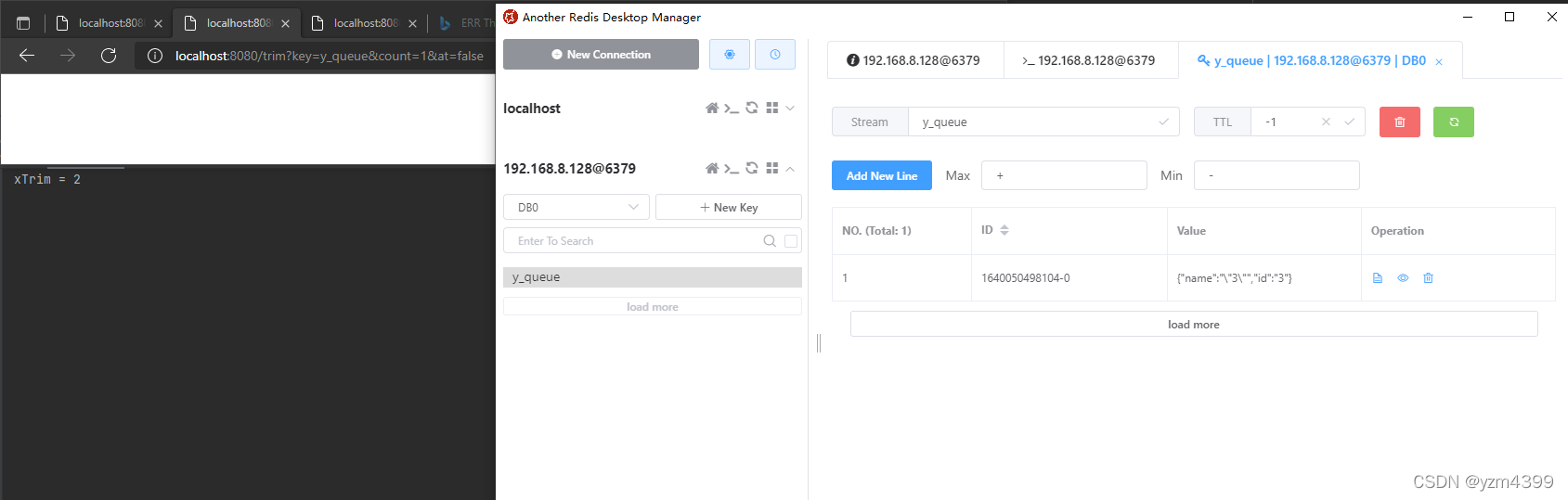

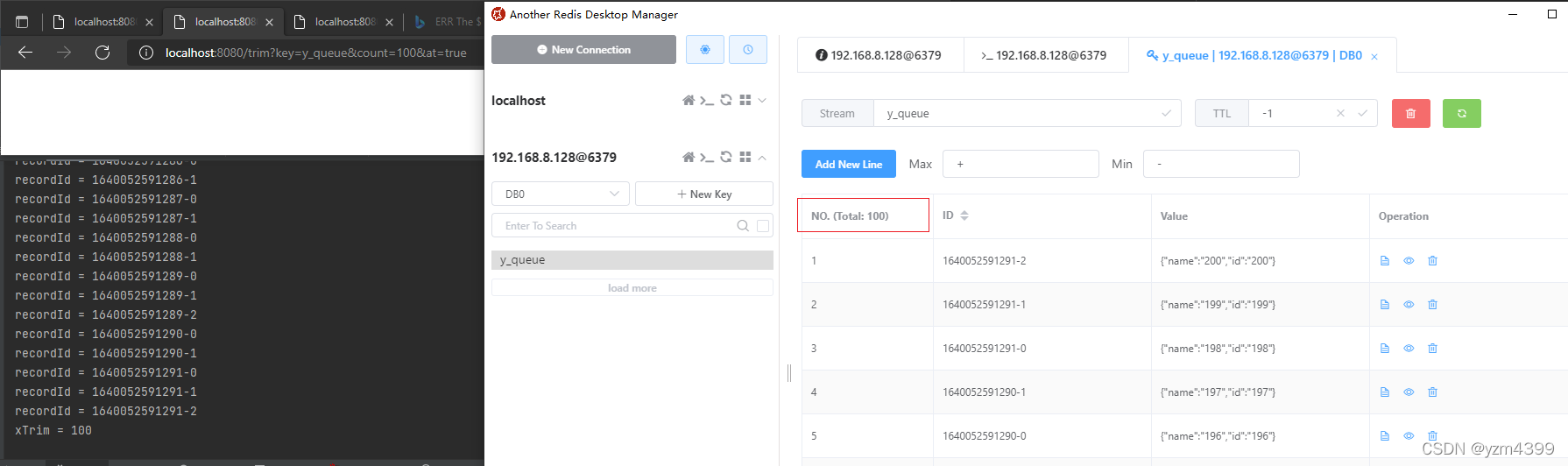
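An in-memory model makes it easier to see how closed and open bounds and a count limit narrow the result. The sketch below uses a hypothetical class, `RangeDemo`, in plain Java with no Redis involved. It walks IDs in ascending order, applies an inclusive or exclusive bound on each side, and then applies the count, as `XRANGE start end COUNT n` does on the server. In Redis 6.2 and later, exclusive bounds are written with a `(` prefix. Only the first ID below comes from the walkthrough; the two later ones are made up.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model of XRANGE start end [COUNT n]
class RangeDemo {
    // IDs compared numerically as <ms>-<seq>
    static int cmp(String a, String b) {
        String[] x = a.split("-");
        String[] y = b.split("-");
        int c = Long.compare(Long.parseLong(x[0]), Long.parseLong(y[0]));
        return c != 0 ? c : Long.compare(Long.parseLong(x[1]), Long.parseLong(y[1]));
    }

    // ids sorted ascending; a null bound means "-" or "+"; count < 0 means unlimited
    static List<String> range(List<String> ids, String start, boolean startInclusive,
                              String end, boolean endInclusive, int count) {
        List<String> out = new ArrayList<>();
        for (String id : ids) {
            if (count >= 0 && out.size() >= count) break;
            if (start != null) {
                int c = cmp(id, start);
                if (c < 0 || (c == 0 && !startInclusive)) continue;
            }
            if (end != null) {
                int c = cmp(id, end);
                if (c > 0 || (c == 0 && !endInclusive)) break; // sorted: nothing later can match
            }
            out.add(id);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> ids = List.of("1640050481582-0", "1640050485000-0", "1640050490000-0");
        System.out.println(range(ids, null, true, null, true, -1)); // all three
        System.out.println(range(ids, "1640050481582-0", true, "1640050481582-0", true, -1)); // closed: first only
        System.out.println(range(ids, "1640050481582-0", false, "1640050490000-0", false, -1)); // open: middle only
        System.out.println(range(ids, null, true, null, true, 1)); // COUNT 1: first only
    }
}
```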

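Trimming: cap how many messages the stream keeps

XTRIM

http://localhost:8080/trim?key=y_queue&count=1&at=false

Exact trimming: exactly 1 message is kept

Add more messages

http://localhost:8080/padd?key=y_queue&id=1

http://localhost:8080/padd?key=y_queue&id=101

The stream now holds 201 messages (1 + 2 × 100)

http://localhost:8080/trim?key=y_queue&count=100&at=true

Approximate trimming to about 100. If the stream holds too few messages (for example fewer than 100), approximate trimming removes nothing

http://localhost:8080/trim?key=y_queue&count=10&at=true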

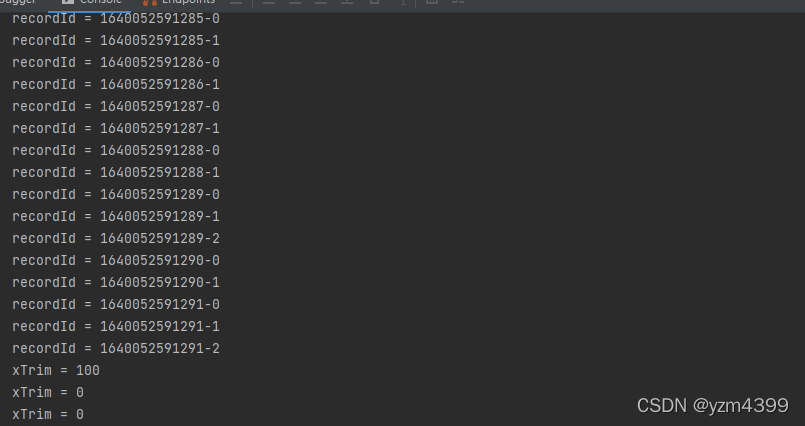

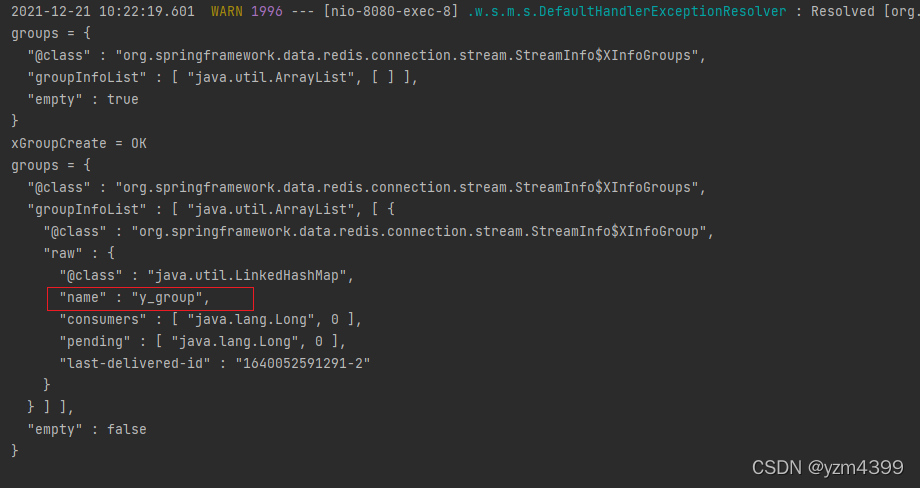

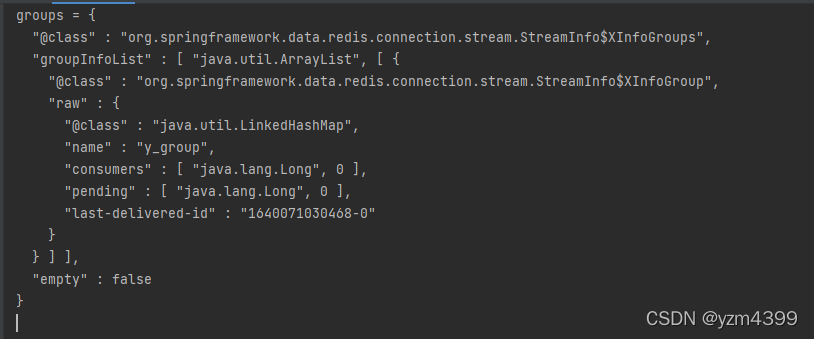
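Approximate trimming sometimes removes nothing because of how Redis stores streams. A stream is a radix tree of macro nodes, and with `~` Redis only drops whole nodes, never part of one. Node size is bounded by the `stream-node-max-entries` setting (100 by default) and by `stream-node-max-bytes`. The sketch below is a deliberately simplified model. `TrimDemo` is a hypothetical class, and the model assumes every dropped node holds exactly `nodeSize` entries and that nodes are removed oldest first. Real node boundaries depend on the insertion history, so actual counts can differ.

```java
// Hypothetical, simplified model of XTRIM MAXLEN (exact) vs MAXLEN ~ (approximate)
class TrimDemo {
    // Exact trimming: keep exactly maxLen entries (or all of them if there are fewer)
    static long exactTrim(long length, long maxLen) {
        return Math.min(length, maxLen);
    }

    // Approximate trimming: drop whole nodes of nodeSize entries, oldest first,
    // but only while the entries left afterwards would still be >= maxLen
    static long approximateTrim(long length, long maxLen, long nodeSize) {
        long remaining = length;
        while (remaining - nodeSize >= maxLen) {
            remaining -= nodeSize;
        }
        return remaining;
    }

    public static void main(String[] args) {
        System.out.println(exactTrim(201, 1));              // 1
        System.out.println(approximateTrim(201, 100, 100)); // 101: one whole node removed
        System.out.println(approximateTrim(201, 10, 100));  // 101: another node would leave fewer than 10
        System.out.println(approximateTrim(80, 10, 100));   // 80: a single partial node is never split
    }
}
```

The trade-off is deliberate. Dropping whole nodes is cheap, while exact trimming may have to rewrite part of a node.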

xGroupCreate (XGROUP CREATE): create a consumer group

xInfoGroups (XINFO GROUPS): show group information

http://localhost:8080/groups?key=y_queue

http://localhost:8080/createGroup?key=y_queue&group=y_group

http://localhost:8080/groups?key=y_queue

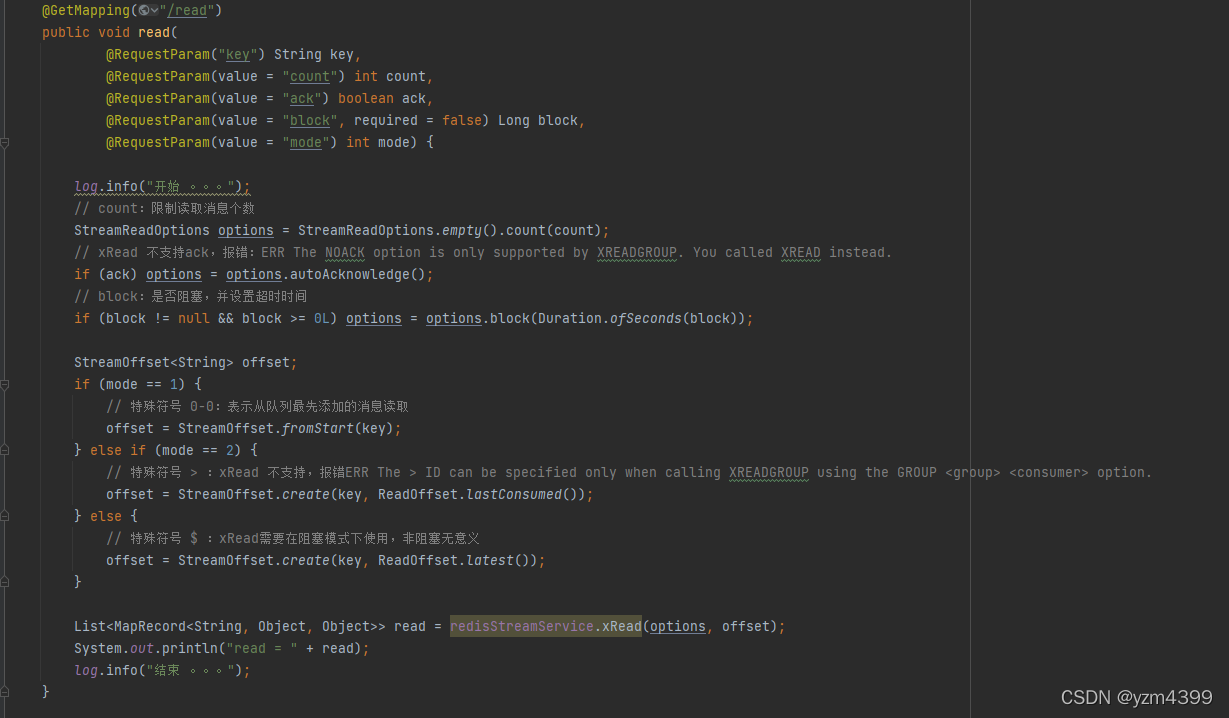

xRead (XREAD): read from one or more streams

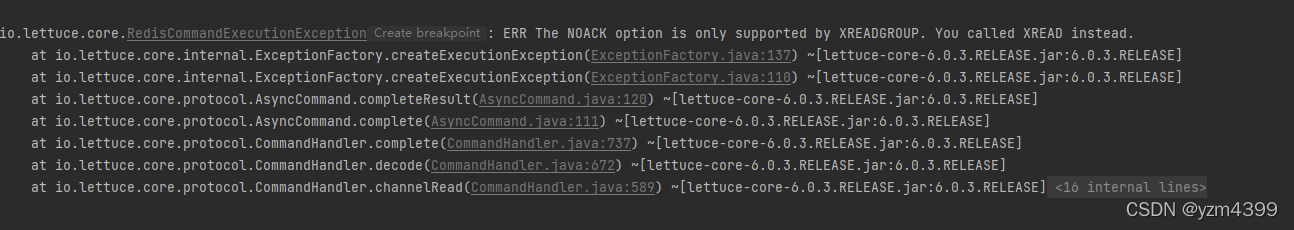

http://localhost:8080/read?key=y_queue&count=2&ack=true&block=10&mode=1

Error: XREAD does not support NOACK (ack=true)

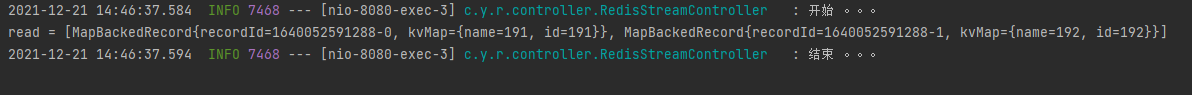

http://localhost:8080/read?key=y_queue&count=2&ack=false&block=10&mode=1

Special ID 0-0: blocking has no effect here; the existing messages are returned immediately

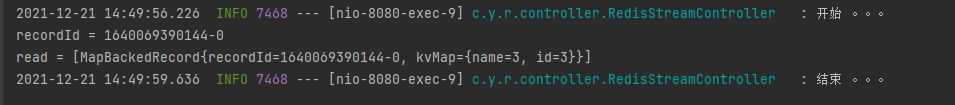

http://localhost:8080/read?key=y_queue&count=2&ack=false&block=10&mode=3

http://localhost:8080/add?key=y_queue&id=3&name=3

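Special ID $: for XREAD it only makes sense in blocking mode. Start the blocking read first, then add a message within the 10 s window; without BLOCK, $ returns nothing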

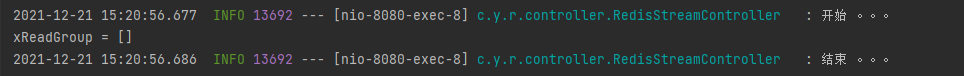

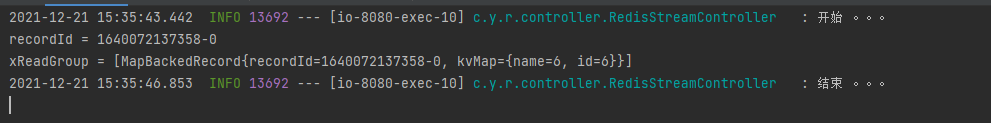
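A small in-memory model shows the difference between `0-0` and `$` for XREAD. The model uses a hypothetical class, `XReadDemo`, with no Redis involved, and simplifies IDs to plain longs. XREAD returns entries whose ID is strictly greater than the one given. `$` resolves to the stream's last ID at the moment the call arrives. A non-blocking read with `$` is therefore always empty, and only a blocking read can see an entry added after the call.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of XREAD semantics; IDs simplified to increasing longs
class XReadDemo {
    private final List<Long> entries = new ArrayList<>();

    void add(long id) {
        entries.add(id);
    }

    // "$": the last ID in the stream at the moment the command is issued
    long dollar() {
        return entries.isEmpty() ? 0 : entries.get(entries.size() - 1);
    }

    // XREAD COUNT count STREAMS key afterId: entries with ID > afterId, oldest first
    List<Long> read(long afterId, int count) {
        List<Long> out = new ArrayList<>();
        for (long id : entries) {
            if (id > afterId && out.size() < count) out.add(id);
        }
        return out;
    }

    public static void main(String[] args) {
        XReadDemo s = new XReadDemo();
        s.add(1);
        s.add(2);
        s.add(3);
        System.out.println(s.read(0, 2));        // [1, 2]  "0-0": history, returned at once
        long resolved = s.dollar();              // "$" is resolved when the call arrives
        System.out.println(s.read(resolved, 2)); // []      non-blocking "$": nothing
        s.add(4);                                // XADD while a blocking reader waits
        System.out.println(s.read(resolved, 2)); // [4]     what the blocked reader receives
    }
}
```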

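xReadGroup (XREADGROUP): read messages as a group member

The stream already holds 3 messages

The consumer group has no consumers yet

http://localhost:8080/groups?key=y_queue

http://localhost:8080/readG?key=y_queue&group=y_group&cons=consumer_1&count=1&ack=false&mode=2

This call creates consumer consumer_1

Special ID >: it only returns messages never delivered to this group (newer than the group's last-delivered ID). The messages already in the stream do not qualify, so nothing is returned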

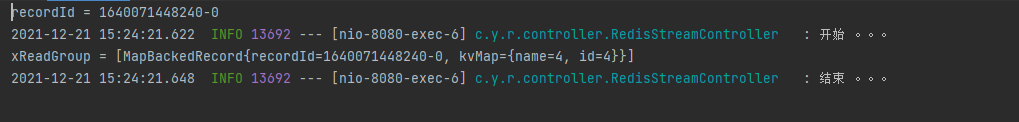
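`>` tracks the group's last-delivered ID, not the consumer's creation time. The sketch below models that bookkeeping in memory. It uses a hypothetical class, `GroupDeliveryDemo`, and simplifies IDs to longs. Creating the group with `$` sets the last-delivered ID to the current last entry. Each `>` read then hands out entries after that ID, advances it, and records the entries in the PEL unless NOACK is used. The steps mirror the walkthrough around ids 4 and 5.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of one consumer group: XGROUP CREATE and XREADGROUP ... STREAMS key >
class GroupDeliveryDemo {
    final List<Long> stream = new ArrayList<>();   // entry IDs, ascending
    long lastDeliveredId;                           // kept per group, not per consumer
    final Map<Long, String> pel = new TreeMap<>(); // pending entry ID -> consumer

    // fromDollar = true models "XGROUP CREATE key group $", false models "... 0"
    GroupDeliveryDemo(List<Long> existing, boolean fromDollar) {
        stream.addAll(existing);
        lastDeliveredId = (fromDollar && !existing.isEmpty()) ? existing.get(existing.size() - 1) : 0;
    }

    // ">": entries after lastDeliveredId; they go into the PEL unless NOACK is set
    List<Long> readNew(String consumer, int count, boolean noAck) {
        List<Long> out = new ArrayList<>();
        for (long id : stream) {
            if (out.size() >= count) break;
            if (id > lastDeliveredId) {
                out.add(id);
                lastDeliveredId = id;              // never handed out again via ">"
                if (!noAck) pel.put(id, consumer); // waits for XACK
            }
        }
        return out;
    }

    public static void main(String[] args) {
        GroupDeliveryDemo g = new GroupDeliveryDemo(List.of(1L, 2L, 3L), true);
        System.out.println(g.readNew("consumer_1", 1, false)); // []  1-3 are not after the group's start
        g.stream.add(4L);
        System.out.println(g.readNew("consumer_1", 1, false)); // [4] now pending
        g.stream.add(5L);
        System.out.println(g.readNew("consumer_1", 1, true));  // [5] NOACK: not pending
        System.out.println(g.pel);                             // {4=consumer_1}
    }
}
```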

New message id=4

http://localhost:8080/add?key=y_queue&id=4&name=4

http://localhost:8080/readG?key=y_queue&group=y_group&cons=consumer_1&count=1&ack=false&mode=2

No ack, so the message goes into the PEL

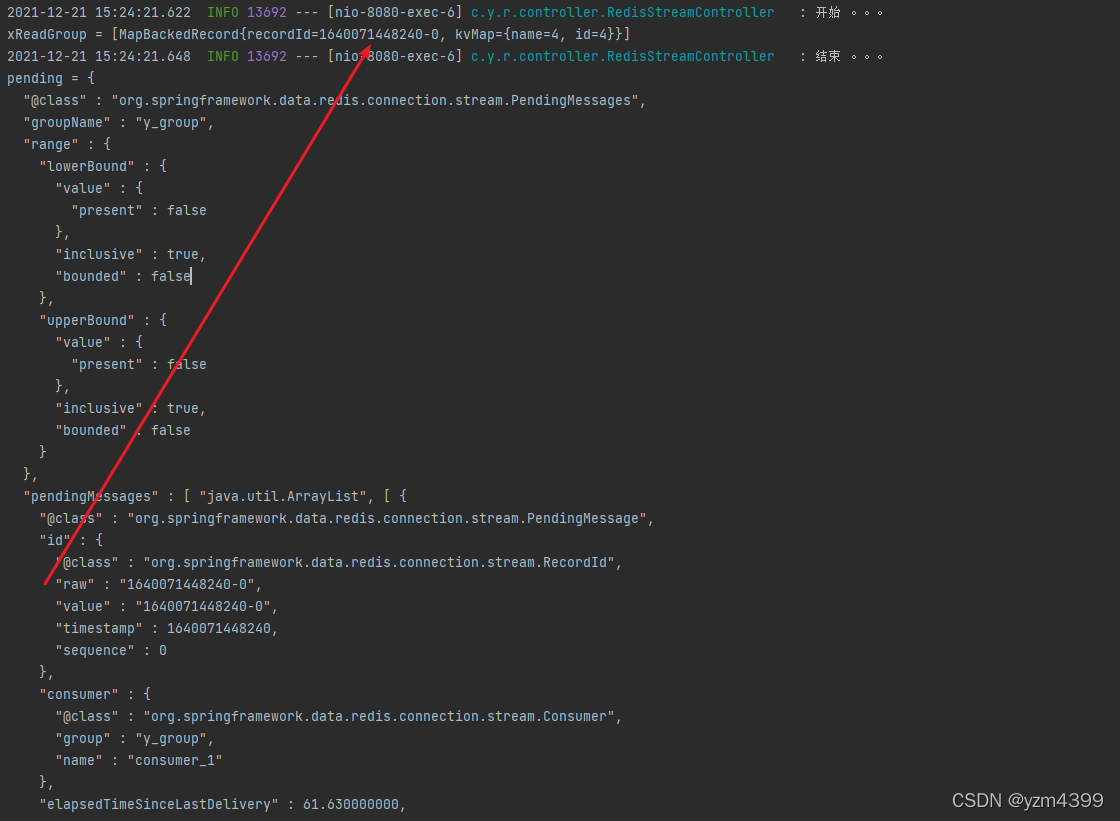

http://localhost:8080/pending2?key=y_queue&group=y_group&cons=consumer_1

Auto-acknowledge

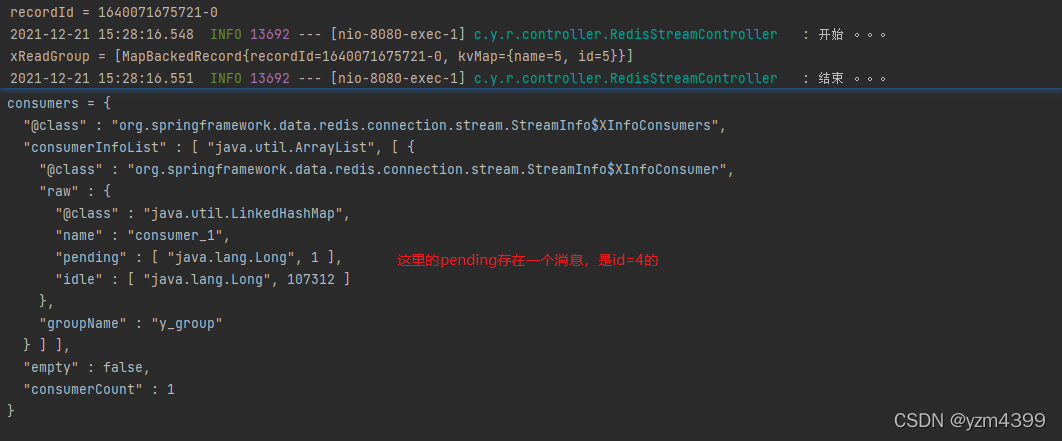

Add message id=5 and read it with auto-ack; the PEL still holds just one message, id=4

http://localhost:8080/add?key=y_queue&id=5&name=5

http://localhost:8080/readG?key=y_queue&group=y_group&cons=consumer_1&count=1&ack=true&mode=2

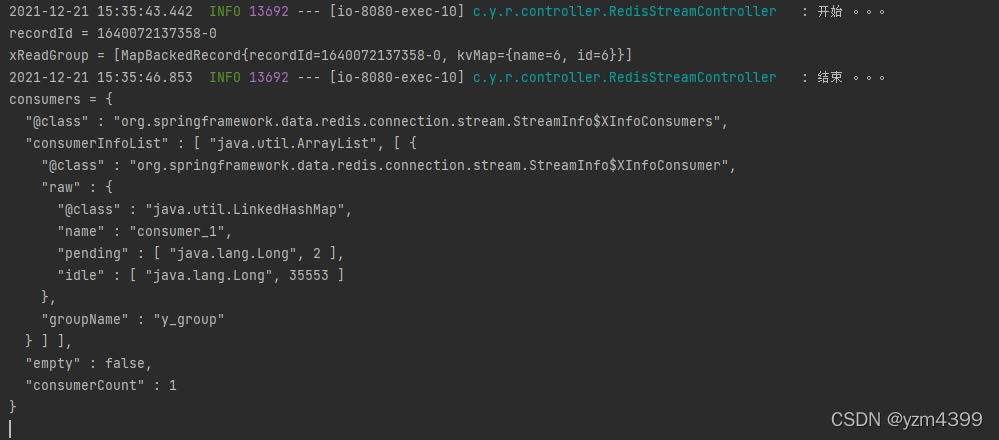

http://localhost:8080/consumers?key=y_queue&group=y_group

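Block for up to 10 s, without auto-ack

Note: the 10 s block time must not exceed the Redis connection timeout; mine is 60 s, so this is fine

Call this first; the request hangs while it waits

http://localhost:8080/readG?key=y_queue&group=y_group&cons=consumer_1&count=1&ack=false&block=10&mode=2

Then produce message id=6

http://localhost:8080/add?key=y_queue&id=6&name=6

http://localhost:8080/consumers?key=y_queue&group=y_group

The PEL now holds 2 messages, id=4 and id=6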

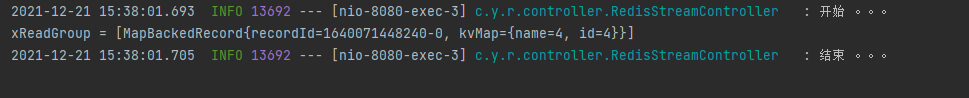

Special ID 0-0: re-read messages from the PEL

http://localhost:8080/readG?key=y_queue&group=y_group&cons=consumer_1&count=1&ack=true&block=10&mode=1

Auto-ack has no effect, and neither does blocking: both are ignored when reading the pending list

http://localhost:8080/consumers?key=y_queue&group=y_group

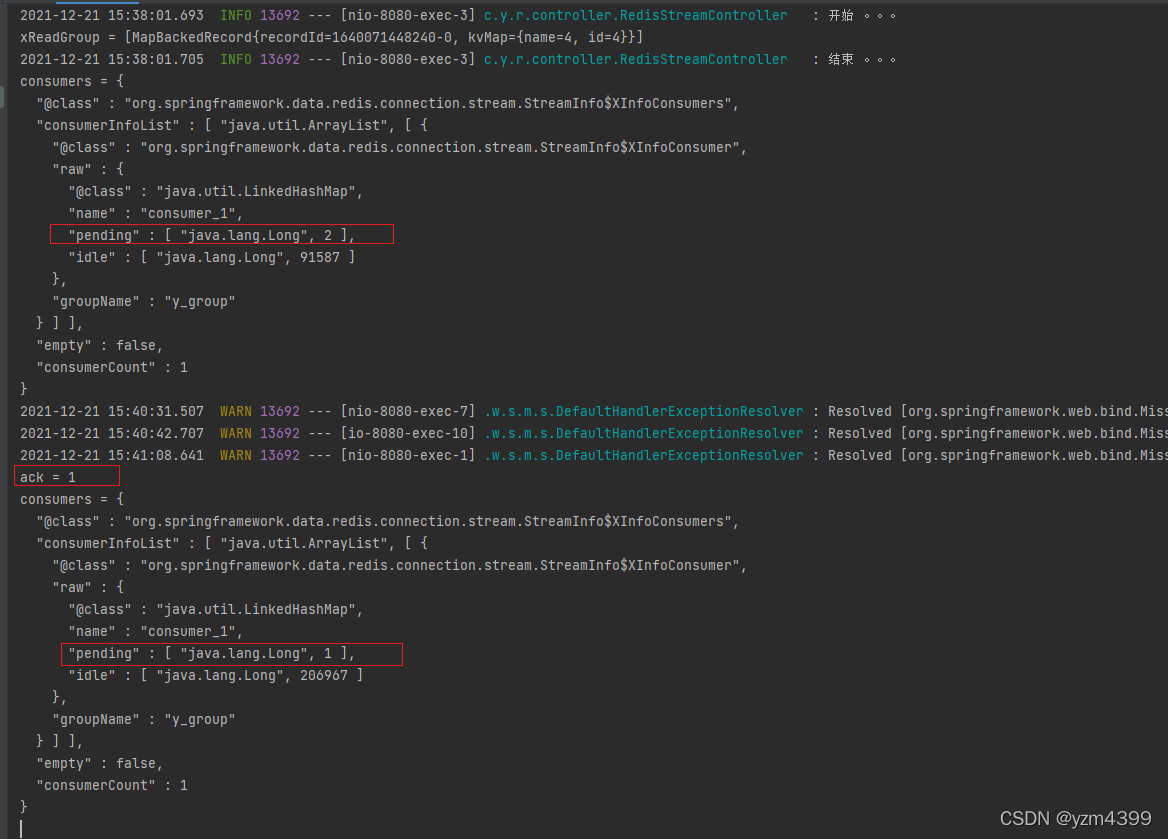

Manual ack

http://localhost:8080/ack?key=y_queue&group=y_group&reId=1640071448240-0

http://localhost:8080/consumers?key=y_queue&group=y_group

Read again

http://localhost:8080/readG?key=y_queue&group=y_group&cons=consumer_1&count=1&ack=true&block=10&mode=1

This time id=6 is returned
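Reading with `0-0` instead of `>` returns the consumer's own pending history, not new messages. An entry leaves that history only through XACK. The sketch below models this part with a hypothetical class, `PendingDemo`, and simplifies IDs to longs. It follows the steps above: id=4 and id=6 are pending, 4 is acked, and reading again with `0-0` returns 6. A history read only returns entries that are already pending, so NOACK and BLOCK have nothing to act on, which matches what the walkthrough observed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical model of a group's PEL: XREADGROUP ... STREAMS key 0-0 and XACK
class PendingDemo {
    final Map<Long, String> pel = new TreeMap<>(); // pending ID -> owning consumer, ordered by ID

    // History read (afterId = 0 for "0-0"): only this consumer's pending entries;
    // nothing new is delivered and nothing is acknowledged
    List<Long> readPending(String consumer, long afterId, int count) {
        List<Long> out = new ArrayList<>();
        for (Map.Entry<Long, String> e : pel.entrySet()) {
            if (e.getKey() > afterId && e.getValue().equals(consumer) && out.size() < count) {
                out.add(e.getKey());
            }
        }
        return out;
    }

    // XACK key group id: 1 if the ID was pending, otherwise 0
    int ack(long id) {
        return pel.remove(id) != null ? 1 : 0;
    }

    public static void main(String[] args) {
        PendingDemo g = new PendingDemo();
        g.pel.put(4L, "consumer_1"); // read with ">" but never acked
        g.pel.put(6L, "consumer_1"); // read during the blocking call, never acked
        System.out.println(g.readPending("consumer_1", 0, 1)); // [4] and it stays pending
        System.out.println(g.readPending("consumer_1", 0, 1)); // [4] again
        System.out.println(g.ack(4));                          // 1
        System.out.println(g.readPending("consumer_1", 0, 1)); // [6]
    }
}
```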

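5. Consuming messages

The walkthrough above covers the basic features of XREADGROUP:

1. limiting how many messages are read

2. auto-acknowledgement

3. blocking reads

4. re-reading the pending list to process messages again

Combined with XPENDING and XACK, these features are enough to implement message consumption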
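The Redis streams introduction describes one common way to combine these features into a consumer:

- On startup, first drain your own pending list with ID `0-0`. This is work left unacknowledged by a crash.
- Then switch to `>` for new messages.
- XACK each message only after it has been processed.

The sketch below shows that loop against `StreamClient`, a hypothetical interface that is not a Spring or Redis API. It uses an in-memory fake, so it runs without Redis. In the project above, the interface's two methods would map onto `xReadGroup` and `xAck`. `ConsumerLoop` and `FakeClient` are likewise illustrative names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical client abstraction; in the project it would delegate to xReadGroup / xAck
interface StreamClient {
    // id is "0-0" (this consumer's pending history) or ">" (new messages)
    List<Long> readGroup(String consumer, String id, int count);

    void ack(long id);
}

class ConsumerLoop {
    // Drain the consumer's own backlog first, then process new messages; ack only after handling
    static void run(StreamClient client, String consumer, Consumer<Long> handler, int maxRounds) {
        boolean checkBacklog = true;
        for (int round = 0; round < maxRounds; round++) {
            List<Long> batch = client.readGroup(consumer, checkBacklog ? "0-0" : ">", 10);
            if (checkBacklog && batch.isEmpty()) {
                checkBacklog = false; // backlog drained: switch to new messages
                continue;
            }
            for (long id : batch) {
                handler.accept(id); // if this throws, the ID is never acked and stays pending
                client.ack(id);
            }
        }
    }

    public static void main(String[] args) {
        FakeClient client = new FakeClient();
        client.pending.add(4L); // left unacknowledged by a previous run
        client.fresh.add(7L);
        client.fresh.add(8L);
        List<Long> processed = new ArrayList<>();
        run(client, "consumer_1", processed::add, 3);
        System.out.println(processed);      // [4, 7, 8]
        System.out.println(client.pending); // []
    }
}

// In-memory stand-in for Redis (single consumer), for this demo only
class FakeClient implements StreamClient {
    final List<Long> pending = new ArrayList<>(); // PEL
    final List<Long> fresh = new ArrayList<>();   // messages never delivered to the group

    @Override
    public List<Long> readGroup(String consumer, String id, int count) {
        List<Long> source = id.equals(">") ? fresh : pending;
        List<Long> out = new ArrayList<>(source.subList(0, Math.min(count, source.size())));
        if (source == fresh) { // ">" moves delivered messages into the PEL
            fresh.removeAll(out);
            pending.addAll(out);
        }
        return out;
    }

    @Override
    public void ack(long id) {
        pending.remove(Long.valueOf(id)); // remove by value, not by index
    }
}
```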

Consuming messages automatically with a listener

```java
package com.yzm.redis09.config;

import com.yzm.redis09.message.ListenerMessage;
import com.yzm.redis09.message.ListenerMessage2;
import com.yzm.redis09.message.ListenerMessage3;
import com.yzm.redis09.message.ListenerMessage4;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.stream.*;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.StringRedisSerializer;
import org.springframework.data.redis.stream.StreamMessageListenerContainer;
import org.springframework.data.redis.stream.Subscription;
import org.springframework.scheduling.concurrent.ThreadPoolTaskExecutor;

import javax.annotation.PostConstruct;
import java.time.Duration;
import java.util.HashMap;
import java.util.List;
import java.util.stream.Collectors;

/**
 * Redis stream configuration
 */
@Configuration
public class RedisStreamConfig {

    public static final String QUEUE = "y_queue";
    public static final String[] GROUPS = {"y_group", "y_group_2", "y_group_3"};
    public static final String[] CONSUMERS = {"consumer_1", "consumer_2", "consumer_3", "consumer_4"};

    private final RedisTemplate<String, Object> redisTemplate;
    private final ThreadPoolTaskExecutor threadPoolTaskExecutor;

    public RedisStreamConfig(RedisTemplate<String, Object> redisTemplate, ThreadPoolTaskExecutor threadPoolTaskExecutor) {
        this.redisTemplate = redisTemplate;
        this.threadPoolTaskExecutor = threadPoolTaskExecutor;
    }

    // On startup: create the stream if it does not exist, then create any missing consumer groups
    @PostConstruct
    public void initQueue() {
        Boolean hasKey = redisTemplate.hasKey(QUEUE);
        if (hasKey == null || !hasKey) {
            // XGROUP CREATE needs an existing stream, so add a first message
            HashMap<String, Object> map = new HashMap<>();
            map.put("id", 1);
            map.put("name", "1");
            redisTemplate.opsForStream().add(QUEUE, map);
        }
        StreamInfo.XInfoGroups groups = redisTemplate.opsForStream().groups(QUEUE);
        List<String> groupNames = groups.stream().map(StreamInfo.XInfoGroup::groupName).collect(Collectors.toList());
        for (String group : GROUPS) {
            if (!groupNames.contains(group)) {
                // Created with ReadOffset.latest() ($): the group only sees messages added from now on
                redisTemplate.opsForStream().createGroup(QUEUE, group);
            }
        }
    }

    // Container options
    @Bean
    public StreamMessageListenerContainer.StreamMessageListenerContainerOptions<String, ?> streamMessageListenerContainerOptions() {
        return StreamMessageListenerContainer
                .StreamMessageListenerContainerOptions
                .builder()
                // Maximum number of messages fetched per poll
                .batchSize(1)
                // Executor that runs the polling loop
                .executor(this.threadPoolTaskExecutor)
                // Handler for exceptions thrown while consuming
                .errorHandler(t -> {
                    // throw new RuntimeException(t);
                    t.printStackTrace();
                })
                // Serializer for stream keys, field names and values
                .serializer(new StringRedisSerializer())
                // Poll timeout (the BLOCK time of each read); 0 means no timeout.
                // Keep it below the Redis client timeout, otherwise reads fail with a timeout exception
                .pollTimeout(Duration.ofSeconds(10))
                .build();
    }

    // Create the listener container from the options and start it
    @Bean
    public StreamMessageListenerContainer<String, ?> streamMessageListenerContainer(RedisConnectionFactory factory) {
        StreamMessageListenerContainer<String, ?> listenerContainer = StreamMessageListenerContainer.create(factory, streamMessageListenerContainerOptions());
        listenerContainer.start();
        return listenerContainer;
    }
```

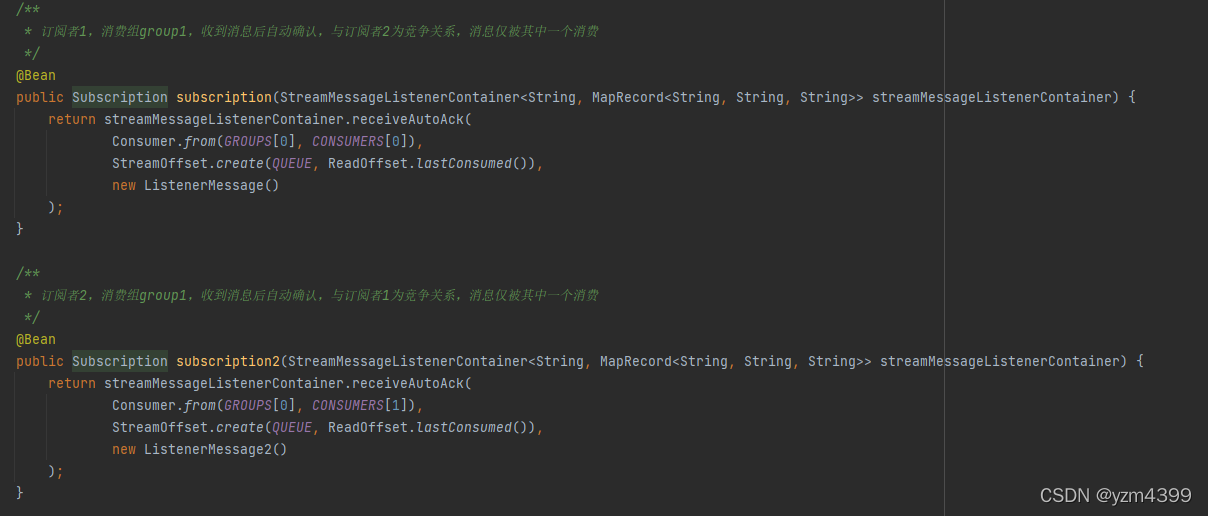

```java
    /**
     * Subscriber 1: group y_group, auto-acknowledges on receipt. Competes with subscriber 2,
     * so each message is consumed by only one of them.
     */
    @Bean
    public Subscription subscription(StreamMessageListenerContainer<String, MapRecord<String, String, String>> streamMessageListenerContainer) {
        return streamMessageListenerContainer.receiveAutoAck(
                Consumer.from(GROUPS[0], CONSUMERS[0]),
                StreamOffset.create(QUEUE, ReadOffset.lastConsumed()),
                new ListenerMessage()
        );
    }

    /**
     * Subscriber 2: group y_group, auto-acknowledges on receipt. Competes with subscriber 1,
     * so each message is consumed by only one of them.
     */
    @Bean
    public Subscription subscription2(StreamMessageListenerContainer<String, MapRecord<String, String, String>> streamMessageListenerContainer) {
        return streamMessageListenerContainer.receiveAutoAck(
                Consumer.from(GROUPS[0], CONSUMERS[1]),
                StreamOffset.create(QUEUE, ReadOffset.lastConsumed()),
                new ListenerMessage2()
        );
    }
```

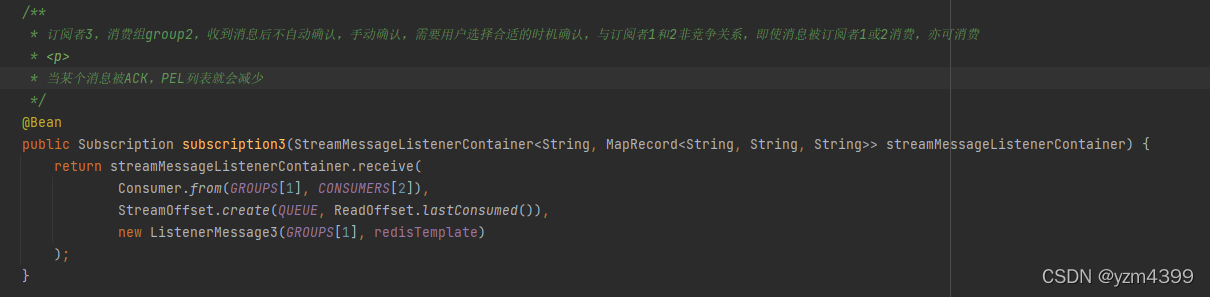

```java
    /**
     * Subscriber 3: group y_group_2, no auto-ack; the listener acknowledges manually at a point
     * of its choosing. It does not compete with subscribers 1 and 2: it receives every message,
     * even one already consumed by subscriber 1 or 2.
     * <p>
     * Each ACK removes the message from the PEL.
     */
    @Bean
    public Subscription subscription3(StreamMessageListenerContainer<String, MapRecord<String, String, String>> streamMessageListenerContainer) {
        return streamMessageListenerContainer.receive(
                Consumer.from(GROUPS[1], CONSUMERS[2]),
                StreamOffset.create(QUEUE, ReadOffset.lastConsumed()),
                new ListenerMessage3(GROUPS[1], redisTemplate)
        );
    }
```

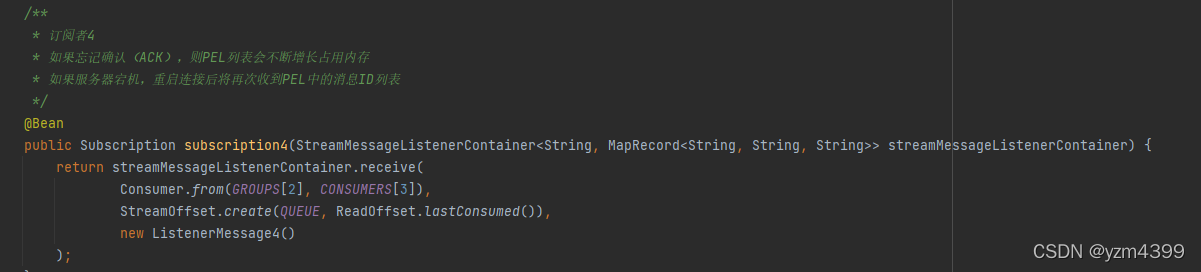

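```java
    /**
     * Subscriber 4: group y_group_3, never acknowledges.
     * If you forget to ACK, the PEL keeps growing and takes up memory.
     * If the service goes down, the unacknowledged IDs are still in the PEL after it reconnects;
     * reading with > does not redeliver them, so they must be re-read (0-0) or claimed.
     */
    @Bean
    public Subscription subscription4(StreamMessageListenerContainer<String, MapRecord<String, String, String>> streamMessageListenerContainer) {
        return streamMessageListenerContainer.receive(
                Consumer.from(GROUPS[2], CONSUMERS[3]),
                StreamOffset.create(QUEUE, ReadOffset.lastConsumed()),
                new ListenerMessage4()
        );
    }
}
```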

```java
package com.yzm.redis09.message;

import lombok.extern.slf4j.Slf4j;
import org.springframework.data.redis.connection.stream.MapRecord;
import org.springframework.data.redis.stream.StreamListener;

/**
 * Redis stream message listener
 */
@Slf4j
public class ListenerMessage implements StreamListener<String, MapRecord<String, String, String>> {

    @Override
    public void onMessage(MapRecord<String, String, String> message) {
        log.info("received");
        System.out.println("message id " + message.getId());
        System.out.println("stream " + message.getStream());
        System.out.println("body " + message.getValue());
    }
}
```

```java
package com.yzm.redis09.message;

import lombok.extern.slf4j.Slf4j;
import org.springframework.data.redis.connection.stream.MapRecord;
import org.springframework.data.redis.stream.StreamListener;

/**
 * Redis stream message listener
 */
@Slf4j
public class ListenerMessage2 implements StreamListener<String, MapRecord<String, String, String>> {

    @Override
    public void onMessage(MapRecord<String, String, String> message) {
        log.info("received");
        System.out.println("2 ==> message id " + message.getId());
        System.out.println("2 ==> stream " + message.getStream());
        System.out.println("2 ==> body " + message.getValue());
    }
}
```

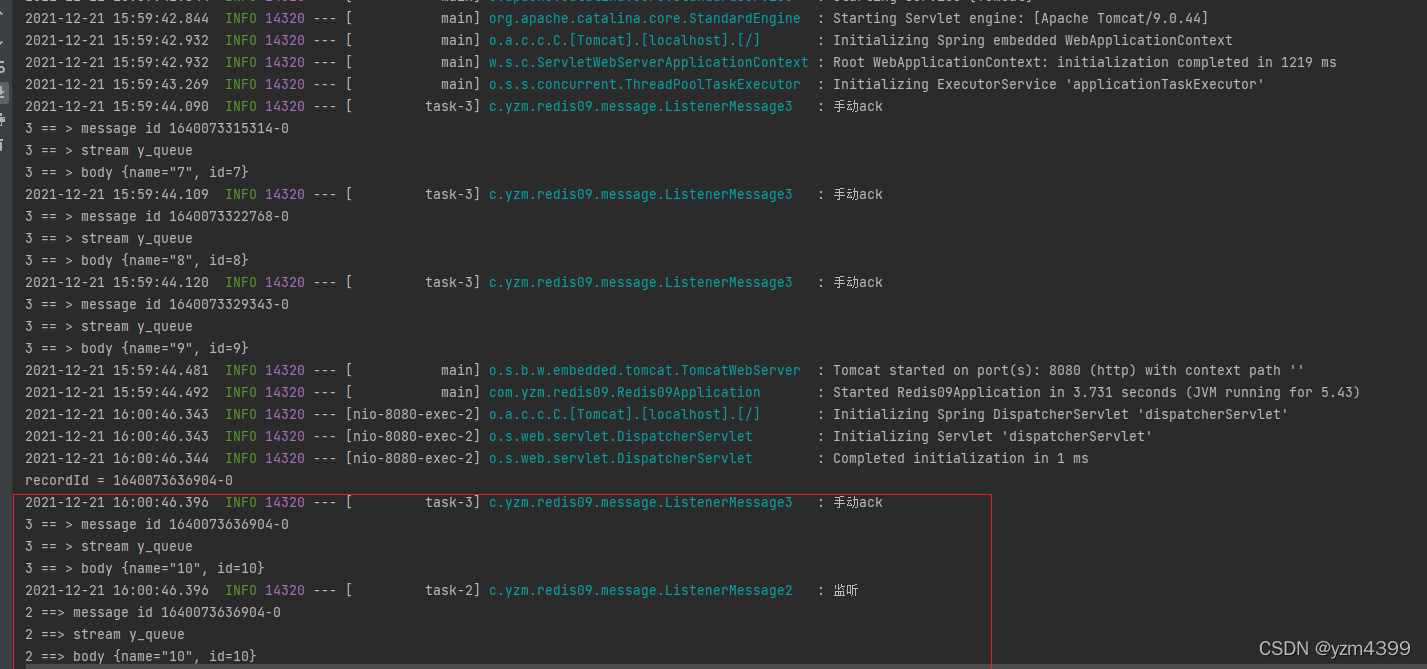

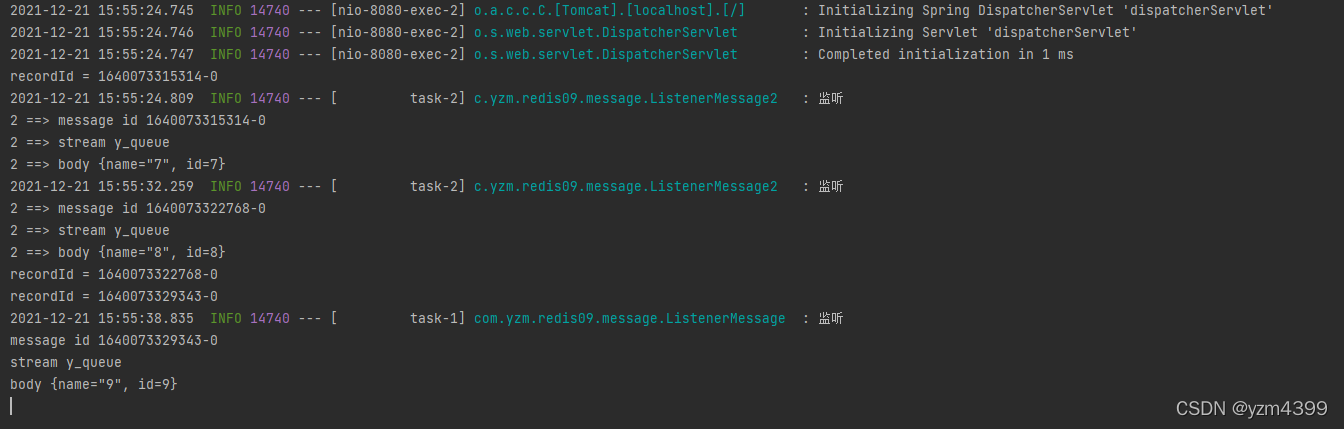

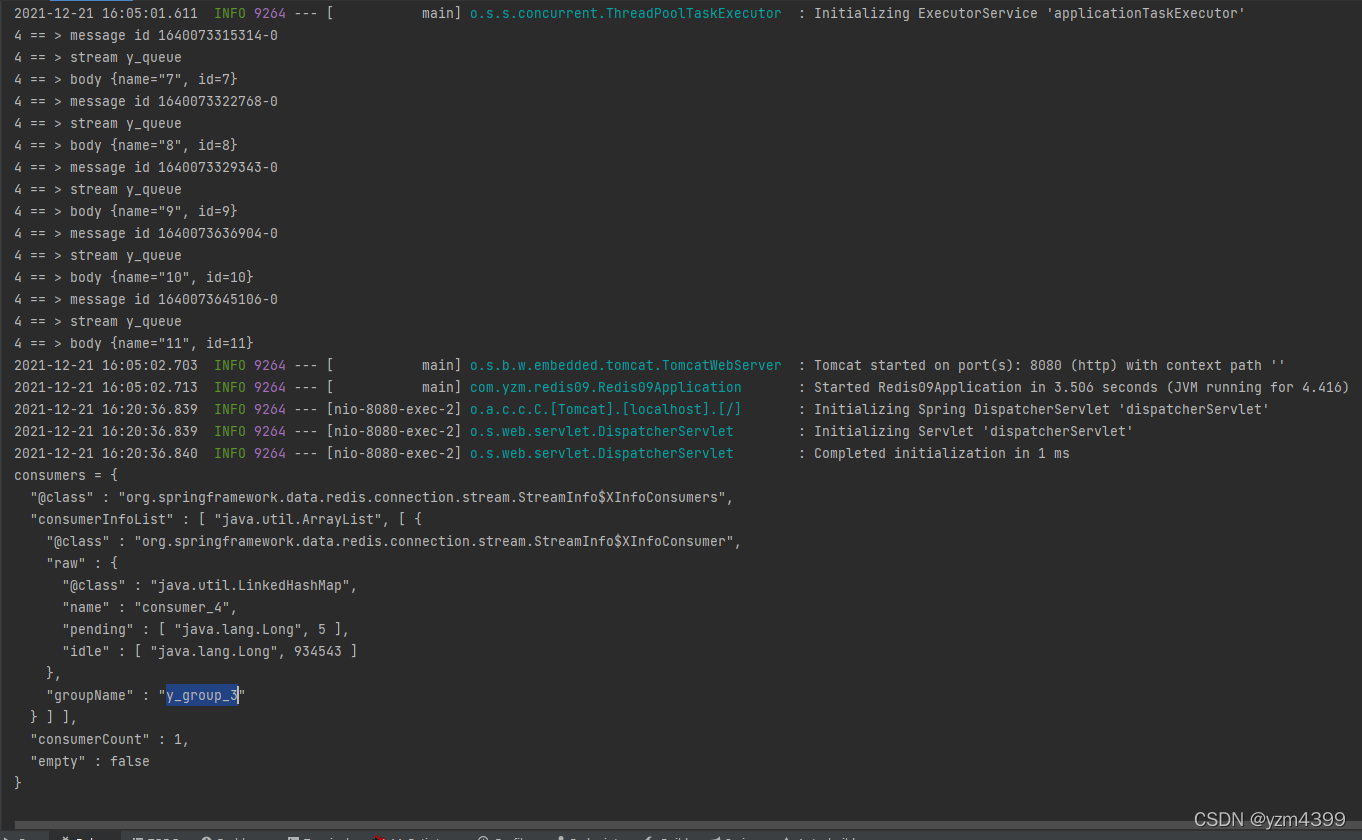

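Two subscribers (both auto-ack) are configured and compete for messages; subscribers 3 and 4 are commented out for now

http://localhost:8080/add?key=y_queue&id=7&name=7

http://localhost:8080/add?key=y_queue&id=8&name=8

http://localhost:8080/add?key=y_queue&id=9&name=9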
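The delivery rule behind this test is simple. Inside one group, each message goes to only one consumer, while every group gets its own copy of the stream. The sketch below illustrates this with a hypothetical class, `FanOutDemo`, and assigns consumers within a group round-robin. That assignment is only an assumption for the demo: Redis does not promise round-robin, and which consumer gets a message depends on which one reads first. The property being shown is "exactly one consumer per group".

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model: every group sees every message; within a group one consumer gets it
class FanOutDemo {
    final Map<String, List<String>> groups = new LinkedHashMap<>(); // group -> its consumers
    private final Map<String, Integer> next = new HashMap<>();      // round-robin position per group

    // Returns, for each group, the consumer that receives this message
    Map<String, String> publish(long id) {
        Map<String, String> receivers = new LinkedHashMap<>();
        for (Map.Entry<String, List<String>> g : groups.entrySet()) {
            int i = next.getOrDefault(g.getKey(), 0);
            receivers.put(g.getKey(), g.getValue().get(i % g.getValue().size()));
            next.put(g.getKey(), i + 1);
        }
        return receivers;
    }

    public static void main(String[] args) {
        FanOutDemo d = new FanOutDemo();
        d.groups.put("y_group", List.of("consumer_1", "consumer_2")); // subscribers 1 and 2 compete
        d.groups.put("y_group_2", List.of("consumer_3"));             // subscriber 3, separate group
        for (long id = 7; id <= 10; id++) {
            System.out.println(id + " -> " + d.publish(id));
        }
        // 7 -> {y_group=consumer_1, y_group_2=consumer_3}
        // 8 -> {y_group=consumer_2, y_group_2=consumer_3}
    }
}
```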

Manual acknowledgement

```java
package com.yzm.redis09.message;

import lombok.extern.slf4j.Slf4j;
import org.springframework.data.redis.connection.stream.MapRecord;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.stream.StreamListener;

/**
 * Redis stream message listener
 * Acknowledges each message after it has been consumed
 */
@Slf4j
public class ListenerMessage3 implements StreamListener<String, MapRecord<String, String, String>> {

    private final String group;
    private final RedisTemplate<String, Object> redisTemplate;

    public ListenerMessage3(String group, RedisTemplate<String, Object> redisTemplate) {
        this.group = group;
        this.redisTemplate = redisTemplate;
    }

    @Override
    public void onMessage(MapRecord<String, String, String> message) {
        log.info("manual ack");
        // Message received
        System.out.println("3 == > message id " + message.getId());
        System.out.println("3 == > stream " + message.getStream());
        System.out.println("3 == > body " + message.getValue());
        // Acknowledge (ACK) once consumption is done
        redisTemplate.opsForStream().acknowledge(message.getStream(), group, message.getId());
    }
}
```

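Subscriber 3's group (y_group_2) was already created at startup while subscribers 1 and 2 were being tested. As a result, messages 7, 8 and 9 were delivered to subscriber 3 as soon as it was enabled and the application restarted

A new message id=10 is consumed by both subscriber 3 and subscriber 2, because they belong to different consumer groups

6. Message transfer

Subscriber 4 never acknowledges (no ack)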

```java
package com.yzm.redis09.message;

import lombok.extern.slf4j.Slf4j;
import org.springframework.data.redis.connection.stream.MapRecord;
import org.springframework.data.redis.stream.StreamListener;

/**
 * Redis stream message listener
 * Never acknowledges: its messages stay in the PEL (used for the message transfer demo)
 */
@Slf4j
public class ListenerMessage4 implements StreamListener<String, MapRecord<String, String, String>> {

    @Override
    public void onMessage(MapRecord<String, String, String> message) {
        // Message received (no ACK)
        System.out.println("4 == > message id " + message.getId());
        System.out.println("4 == > stream " + message.getStream());
        System.out.println("4 == > body " + message.getValue());
    }
}
```

http://localhost:8080/consumers?key=y_queue&group=y_group_3

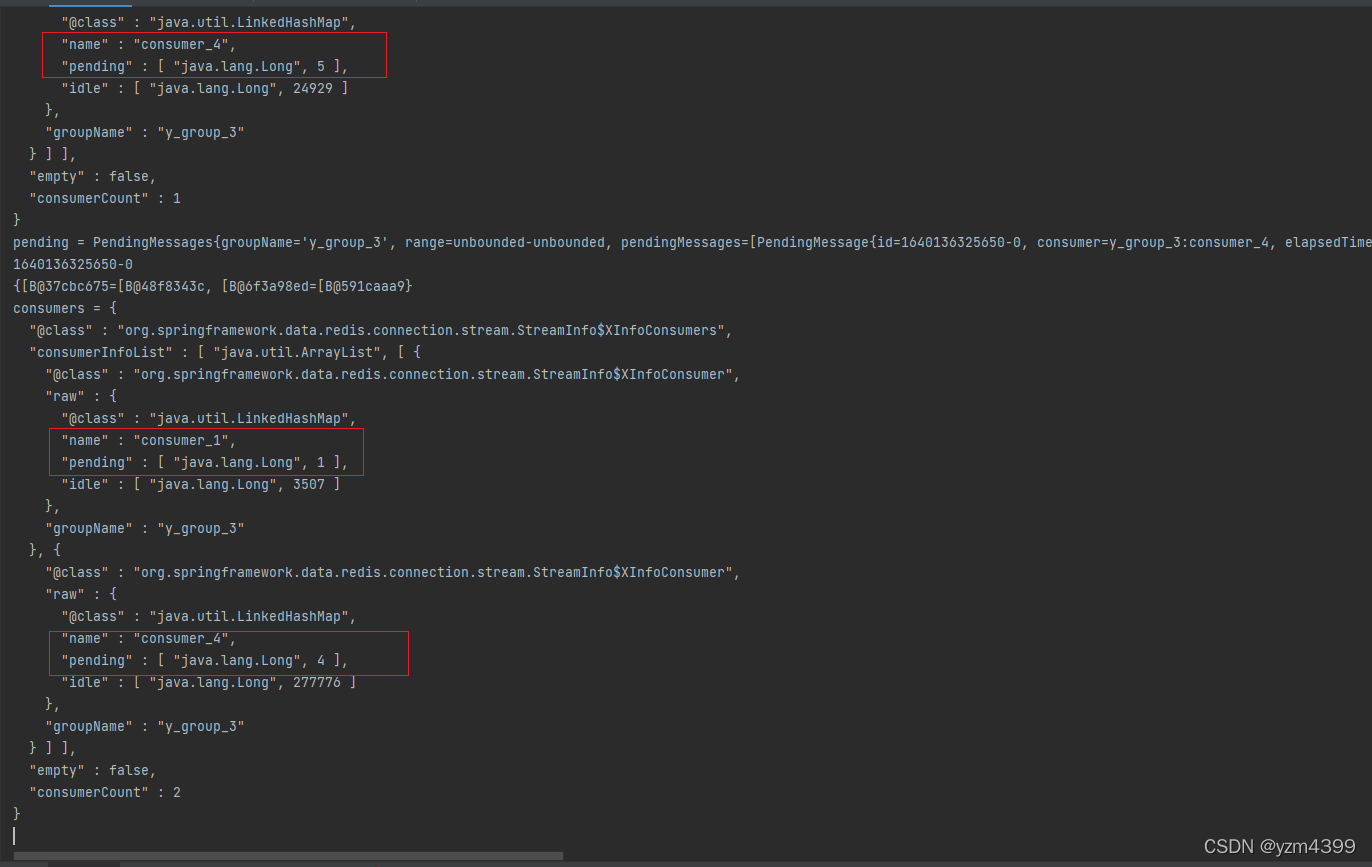

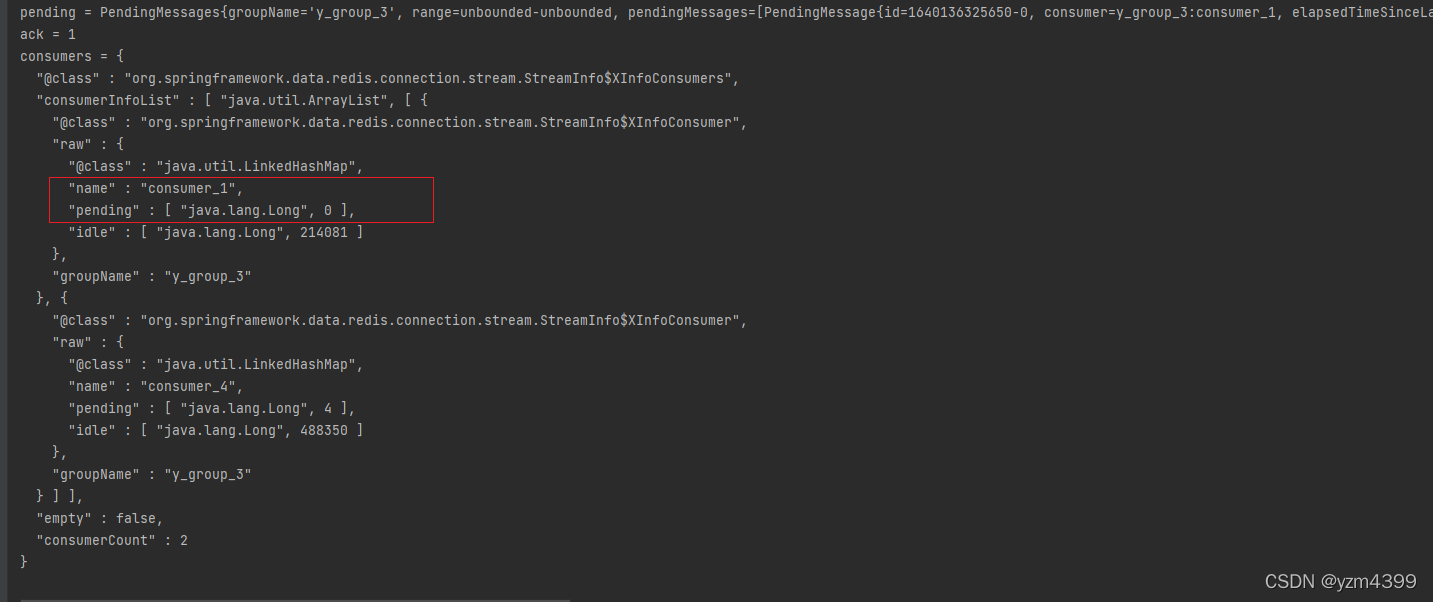

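Message transfer only works within a single consumer group. For example, if consumers A and B are in the same group and A goes down, the messages in A's PEL can be transferred to B.

Transfer a message owned by consumer_4 in group y_group_3 to consumer_1; consumer_1 is created automatically during the transfer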

http://localhost:8080/claim?key=y_queue&group=y_group_3&cons=consumer_1&time=10&reid=1640136325650-0

http://localhost:8080/pending2?key=y_queue&group=y_group_3&cons=consumer_1

http://localhost:8080/ack?key=y_queue&group=y_group_3&reId=1640136325650-0

http://localhost:8080/consumers?key=y_queue&group=y_group_3
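The `/claim` endpoint relies on one rule. XCLAIM moves a pending entry to another consumer of the same group only if the entry has been idle for at least `min-idle-time`, where idle means the time since its last delivery. After a claim, the idle timer restarts, the delivery count goes up, and the new owner has to ACK the entry. The sketch below is minimal. It uses a hypothetical class, `ClaimDemo`, and passes times explicitly in milliseconds instead of reading a clock, so the behaviour is deterministic.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical model of XCLAIM key group newOwner min-idle-time id
class ClaimDemo {
    static final class Pending {
        String owner;
        long lastDeliveryMs;
        int deliveryCount = 1;

        Pending(String owner, long lastDeliveryMs) {
            this.owner = owner;
            this.lastDeliveryMs = lastDeliveryMs;
        }
    }

    final Map<String, Pending> pel = new HashMap<>(); // one group's PEL: ID -> pending info

    // Returns true if the entry was transferred to newOwner
    boolean claim(String id, String newOwner, long minIdleMs, long nowMs) {
        Pending p = pel.get(id);
        if (p == null) return false;                            // not pending (e.g. already acked)
        if (nowMs - p.lastDeliveryMs < minIdleMs) return false; // not idle long enough
        p.owner = newOwner;        // in Redis the new owner is created if it does not exist
        p.lastDeliveryMs = nowMs;  // idle time restarts
        p.deliveryCount++;         // counts as another delivery
        return true;
    }

    public static void main(String[] args) {
        ClaimDemo g = new ClaimDemo();
        g.pel.put("1640136325650-0", new Pending("consumer_4", 0));
        System.out.println(g.claim("1640136325650-0", "consumer_1", 10_000, 5_000));  // false: idle only 5 s
        System.out.println(g.claim("1640136325650-0", "consumer_1", 10_000, 12_000)); // true
        System.out.println(g.pel.get("1640136325650-0").owner);                        // consumer_1
        g.pel.remove("1640136325650-0");                                               // XACK by consumer_1
        System.out.println(g.claim("1640136325650-0", "consumer_2", 0, 20_000));       // false: no longer pending
    }
}
```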
