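In practice there are cases where data has to be filtered before it enters a windowed aggregation, but the rejected records are not simply thrown away: either they serve another purpose, or only part of the data is needed and the excess should raise an alert.

A plain filter is therefore not enough.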

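Scenario: read ad click records and count the clicks per province. In addition, track clicks per user ID: if a user clicks the same ad more than 100 times (within one day; the count restarts the next day), raise an alert on a side output stream, and keep the clicks beyond 100 out of the per-province statistics.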
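Before the Flink version, the filtering rule can be sketched in plain Java with no Flink dependency. This is only an illustration of the scenario above: the class `BlacklistFilterSketch`, its two lists standing in for the main stream and the side output, and the string key are all hypothetical names, and the daily reset is left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical stand-in for the keyed filter: one counter per (userId, adId).
public class BlacklistFilterSketch {
    private final int upperBound;
    private final Map<String, Integer> counts = new HashMap<>();
    private final Set<String> alreadyWarned = new HashSet<>();

    // Stand-ins for the main stream and the side output stream.
    public final List<String> mainOutput = new ArrayList<>();
    public final List<String> sideOutput = new ArrayList<>();

    public BlacklistFilterSketch(int upperBound) {
        this.upperBound = upperBound;
    }

    public void process(long userId, long adId) {
        String key = userId + "_" + adId;
        int count = counts.getOrDefault(key, 0);
        if (count >= upperBound) {
            // Limit reached: warn once per key, then drop every further click.
            if (alreadyWarned.add(key)) {
                sideOutput.add("user " + userId + " clicked ad " + adId
                        + " over " + upperBound + " times");
            }
            return;
        }
        // Below the limit: count the click and pass it on to the main output.
        counts.put(key, count + 1);
        mainOutput.add(key);
    }

    public static void main(String[] args) {
        BlacklistFilterSketch filter = new BlacklistFilterSketch(3);
        for (int i = 0; i < 5; i++) {
            filter.process(1L, 10L);
        }
        filter.process(2L, 10L);
        System.out.println("main output size: " + filter.mainOutput.size());
        System.out.println("side output: " + filter.sideOutput);
    }
}
```

With a limit of 3, the first three clicks of user 1 pass through, the fourth produces the single warning, and the fifth is dropped silently; user 2 is unaffected.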

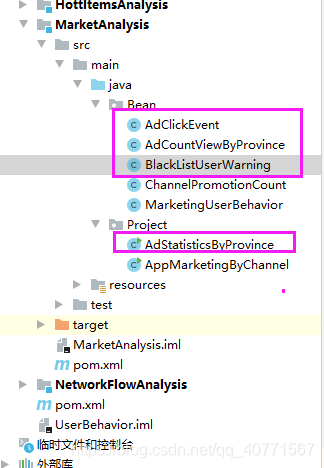

1. Directory structure

2. POJO code. First, the incoming records are converted to a POJO type:

package Bean;

public class AdClickEvent {

private Long userId;

private Long adId;

private String province;

private String city;

private Long timestamp;

public AdClickEvent() {

}

public AdClickEvent(Long userId, Long adId, String province, String city, Long timestamp) {

this.userId = userId;

this.adId = adId;

this.province = province;

this.city = city;

this.timestamp = timestamp;

}

public Long getUserId() {

return userId;

}

public void setUserId(Long userId) {

this.userId = userId;

}

public Long getAdId() {

return adId;

}

public void setAdId(Long adId) {

this.adId = adId;

}

public String getProvince() {

return province;

}

public void setProvince(String province) {

this.province = province;

}

public String getCity() {

return city;

}

public void setCity(String city) {

this.city = city;

}

public Long getTimestamp() {

return timestamp;

}

public void setTimestamp(Long timestamp) {

this.timestamp = timestamp;

}

@Override

public String toString() {

return "AdClickEvent{" +

"userId=" + userId +

", adId=" + adId +

", province='" + province + '\'' +

", city='" + city + '\'' +

", timestamp=" + timestamp +

'}';

}

}
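The conversion from a raw text line to this POJO can be sketched on its own. The field order (userId, adId, province, city, timestamp in seconds) follows the main program further down; the class `AdClickParseSketch`, its nested `Click` stand-in, and the sample line are hypothetical and only serve the illustration.

```java
// Hypothetical, dependency-free sketch of turning one CSV line into a click record.
public class AdClickParseSketch {

    // Minimal stand-in for AdClickEvent so that the sketch is self-contained.
    public static final class Click {
        public final long userId;
        public final long adId;
        public final String province;
        public final String city;
        public final long timestampSeconds;

        Click(long userId, long adId, String province, String city, long timestampSeconds) {
            this.userId = userId;
            this.adId = adId;
            this.province = province;
            this.city = city;
            this.timestampSeconds = timestampSeconds;
        }
    }

    public static Click parse(String line) {
        String[] fields = line.split(",");
        if (fields.length != 5) {
            // Fail loudly instead of hitting an ArrayIndexOutOfBoundsException later.
            throw new IllegalArgumentException(
                    "expected 5 fields but got " + fields.length + ": " + line);
        }
        return new Click(
                Long.parseLong(fields[0].trim()),
                Long.parseLong(fields[1].trim()),
                fields[2].trim(),
                fields[3].trim(),
                Long.parseLong(fields[4].trim()));
    }

    public static void main(String[] args) {
        // Made-up sample line, not taken from the real AdClickLog.csv.
        Click click = parse("1001,20001,guangdong,shenzhen,1511658000");
        System.out.println(click.province + " at " + click.timestampSeconds * 1000L + " ms");
    }
}
```

Validating the field count up front is a design choice of this sketch; the main program in this post assumes every line is well formed.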

Wrapper class for blacklist warnings:

package Bean;

public class BlackListUserWarning {

private Long userId;

private Long adId;

private String warnMsg;

public BlackListUserWarning() {

}

public BlackListUserWarning(Long userId, Long adId, String warnMsg) {

this.userId = userId;

this.adId = adId;

this.warnMsg = warnMsg;

}

public Long getUserId() {

return userId;

}

public void setUserId(Long userId) {

this.userId = userId;

}

public Long getAdId() {

return adId;

}

public void setAdId(Long adId) {

this.adId = adId;

}

public String getWarnMsg() {

return warnMsg;

}

public void setWarnMsg(String warnMsg) {

this.warnMsg = warnMsg;

}

@Override

public String toString() {

return "BlackListUserWarning{" +

"userId=" + userId +

", adId=" + adId +

", warnMsg='" + warnMsg + '\'' +

'}';

}

}

Wrapper class for per-province click counts:

package Bean;

public class AdCountViewByProvince {

private String province;

private String windowEnd;

private Long count;

public AdCountViewByProvince() {

}

public AdCountViewByProvince(String province, String windowEnd, Long count) {

this.province = province;

this.windowEnd = windowEnd;

this.count = count;

}

public String getProvince() {

return province;

}

public void setProvince(String province) {

this.province = province;

}

public String getWindowEnd() {

return windowEnd;

}

public void setWindowEnd(String windowEnd) {

this.windowEnd = windowEnd;

}

public Long getCount() {

return count;

}

public void setCount(Long count) {

this.count = count;

}

@Override

public String toString() {

return "AdCountViewByProvince{" +

"province='" + province + '\'' +

", windowEnd='" + windowEnd + '\'' +

", count='" + count + '\'' +

'}';

}

}
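The `windowEnd` field holds the end of a sliding window. The main program uses `timeWindow(Time.hours(1), Time.minutes(5))`, so every click is counted in several overlapping windows. The following plain-Java sketch shows which windows a timestamp falls into; it is a simplified illustration with a zero window offset, and the class and method names are hypothetical rather than Flink API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: enumerate the sliding windows that contain a timestamp.
public class SlidingWindowSketch {

    // Returns {start, end} pairs (end exclusive) for every window containing ts.
    public static List<long[]> windowsFor(long ts, long sizeMs, long slideMs) {
        List<long[]> windows = new ArrayList<>();
        // Start of the latest window that still contains ts.
        long lastStart = ts - Math.floorMod(ts, slideMs);
        // Walk backwards one slide at a time while the window still covers ts.
        for (long start = lastStart; start > ts - sizeMs; start -= slideMs) {
            windows.add(new long[] {start, start + sizeMs});
        }
        return windows;
    }

    public static void main(String[] args) {
        long hour = 60 * 60 * 1000L;
        long fiveMinutes = 5 * 60 * 1000L;
        // A click 7 minutes after the epoch.
        List<long[]> windows = windowsFor(7 * 60 * 1000L, hour, fiveMinutes);
        System.out.println("number of windows: " + windows.size());
        for (long[] w : windows) {
            System.out.println("[" + w[0] + ", " + w[1] + ")");
        }
    }
}
```

With a one-hour size and a five-minute slide, each click lands in 12 windows, which is why the same province shows up in the printed results with many different `windowEnd` values.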

3. Flink main program:

package Project;

import Bean.AdClickEvent;

import Bean.AdCountViewByProvince;

import Bean.BlackListUserWarning;

import org.apache.flink.api.common.functions.AggregateFunction;

import org.apache.flink.api.common.state.ValueState;

import org.apache.flink.api.common.state.ValueStateDescriptor;

import org.apache.flink.api.java.tuple.Tuple;

import org.apache.flink.configuration.Configuration;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.DataStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.functions.KeyedProcessFunction;

import org.apache.flink.streaming.api.functions.timestamps.AscendingTimestampExtractor;

import org.apache.flink.streaming.api.functions.windowing.WindowFunction;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.streaming.api.windowing.windows.TimeWindow;

import org.apache.flink.util.Collector;

import org.apache.flink.util.OutputTag;

import java.net.URL;

import java.sql.Timestamp;

public class AdStatisticsByProvince {

public static void main(String[] args) throws Exception{

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setParallelism(1);

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

URL resource = AdStatisticsByProvince.class.getResource("/AdClickLog.csv");

DataStream<AdClickEvent> adClickEventStream = env.readTextFile(resource.getPath())

.map(line -> {

String[] fields = line.split(",");

return new AdClickEvent(Long.parseLong(fields[0]), Long.parseLong(fields[1]), fields[2], fields[3], Long.parseLong(fields[4]));

})

.assignTimestampsAndWatermarks(new AscendingTimestampExtractor<AdClickEvent>() {

@Override

public long extractAscendingTimestamp(AdClickEvent adClickEvent) {

return adClickEvent.getTimestamp()*1000L;

}

});

//Blacklist detection: watch how often the same user clicks the same ad and raise a warning

SingleOutputStreamOperator<AdClickEvent> filterAdClickStream = adClickEventStream.keyBy("userId", "adId") //key by user ID and ad ID

.process(new FilterBlackListUser(100));

//Key by province, then apply a sliding window and aggregate

DataStream<AdCountViewByProvince> adCountResultStream = filterAdClickStream.keyBy(AdClickEvent::getProvince)

.timeWindow(Time.hours(1), Time.minutes(5))

.aggregate(new AdCountAgg(), new AdCountResult());

adCountResultStream.print();

//Print the side output stream

filterAdClickStream.getSideOutput(new OutputTag<BlackListUserWarning>("blacklist"){}).print("blacklist-user");

env.execute("ad count by province job");

}

//Incremental aggregation: add 1 for every incoming record

public static class AdCountAgg implements AggregateFunction<AdClickEvent, Long, Long>{

@Override

public Long createAccumulator() {

return 0L;

}

@Override

public Long add(AdClickEvent adClickEvent, Long aLong) {

return aLong+1;

}

@Override

public Long getResult(Long aLong) {

return aLong;

}

@Override

public Long merge(Long aLong, Long acc1) {

return aLong+acc1;

}

}

//Window function that emits an AdCountViewByProvince for each window

public static class AdCountResult implements WindowFunction<Long, AdCountViewByProvince, String, TimeWindow>{

@Override

public void apply(String s, TimeWindow timeWindow, Iterable<Long> iterable, Collector<AdCountViewByProvince> collector) throws Exception {

String windowEnd = new Timestamp(timeWindow.getEnd()).toString();

Long count = iterable.iterator().next();

collector.collect(new AdCountViewByProvince(s, windowEnd, count));

}

}

//Custom process function

public static class FilterBlackListUser extends KeyedProcessFunction<Tuple, AdClickEvent, AdClickEvent>{

//Upper bound on the number of clicks

private Integer countUpperBound;

public FilterBlackListUser(Integer countUpperBound) {

this.countUpperBound = countUpperBound;

}

//State: number of clicks by the current user on a given ad

ValueState<Long> countState;

//Flag state: whether a blacklist warning has already been sent for the current user

ValueState<Boolean> isSentState;

//Register the state handles

@Override

public void open(Configuration parameters) throws Exception {

countState = getRuntimeContext().getState(new ValueStateDescriptor<Long>("ad-count", Long.class, 0L));

isSentState = getRuntimeContext().getState(new ValueStateDescriptor<Boolean>("is-sent", Boolean.class,false));

}

@Override

public void processElement(AdClickEvent adClickEvent, Context context, Collector<AdClickEvent> collector) throws Exception {

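//Check how often this user has clicked this ad: below the limit, increment and emit normally; at the limit, drop the record and raise a warning on the side output

//Current count

Long count = countState.value();

//If this is the first record for this key, register a timer for the next midnight

if(count==0){

//Next midnight in UTC+8. The offset is added before rounding down to whole days; without it, a first click between 00:00 and 08:00 local time would yield a timestamp that has already passed and the state would be cleared immediately

long dayMs = 24*60*60*1000L;

long offsetMs = 8*60*60*1000L;

Long ts = ((context.timerService().currentProcessingTime() + offsetMs) / dayMs + 1)*dayMs - offsetMs;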

context.timerService().registerProcessingTimeTimer(ts);

}

if (count >= countUpperBound){

//If no warning has been emitted yet, send one to the side output stream

if(!isSentState.value()){

isSentState.update(true); //update the flag

context.output(new OutputTag<BlackListUserWarning>("blacklist"){}, new BlackListUserWarning(adClickEvent.getUserId(), adClickEvent.getAdId(),"click over "+ countUpperBound + " times."));

}

return;//skip the rest of the method

}

//Limit not reached: increment the count, update the state and emit the record to the main stream

countState.update(count+1);

collector.collect(adClickEvent);

}

//Timer callback that clears the state

@Override

public void onTimer(long timestamp, OnTimerContext ctx, Collector<AdClickEvent> out) throws Exception {

countState.clear();

isSentState.clear();

}

}

}
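The timer registered in `processElement` is meant to fire at the next local midnight in UTC+8. The arithmetic is easy to get wrong, so here is a small plain-Java sketch of one way to compute it, with the time zone offset added before rounding down to whole days. The class `NextMidnightSketch` and its method names are hypothetical; `naiveNextMidnight` is included only for comparison.

```java
// Hypothetical sketch of the "next local midnight" arithmetic, independent of Flink.
public class NextMidnightSketch {
    private static final long HOUR_MS = 60 * 60 * 1000L;
    private static final long DAY_MS = 24 * HOUR_MS;

    // Shift into local time, round down to whole days, add one day, shift back to UTC.
    public static long nextLocalMidnight(long nowMs, int offsetHours) {
        long offsetMs = offsetHours * HOUR_MS;
        long localDays = Math.floorDiv(nowMs + offsetMs, DAY_MS);
        return (localDays + 1) * DAY_MS - offsetMs;
    }

    // Rounds in UTC first and subtracts the offset afterwards.
    public static long naiveNextMidnight(long nowMs, int offsetHours) {
        return (nowMs / DAY_MS + 1) * DAY_MS - offsetHours * HOUR_MS;
    }

    public static void main(String[] args) {
        // 18:00 UTC on 1970-01-01, which is 02:00 on 1970-01-02 in UTC+8.
        long now = 18 * HOUR_MS;
        System.out.println("shifted: " + nextLocalMidnight(now, 8));
        System.out.println("naive:   " + naiveNextMidnight(now, 8));
        System.out.println("naive result already in the past: "
                + (naiveNextMidnight(now, 8) < now));
    }
}
```

The two versions agree for most of the day and differ only during the first eight local hours, where the naive form returns the midnight that has just passed. A processing-time timer registered for a past timestamp fires right away, which would reset the click count almost immediately.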

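Flink is used here to process ad click data, count clicks per province, and raise an alert when a user's clicks on a particular ad exceed a threshold.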
