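Preface: To keep myself motivated, I am going to keep blogging whenever I have the time, both to deepen my own understanding of Flink and to help anyone who needs it.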

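Should I slack off on day one? ... How about I share some study videos instead: leave a comment if you want them and I will share them through a cloud drive.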

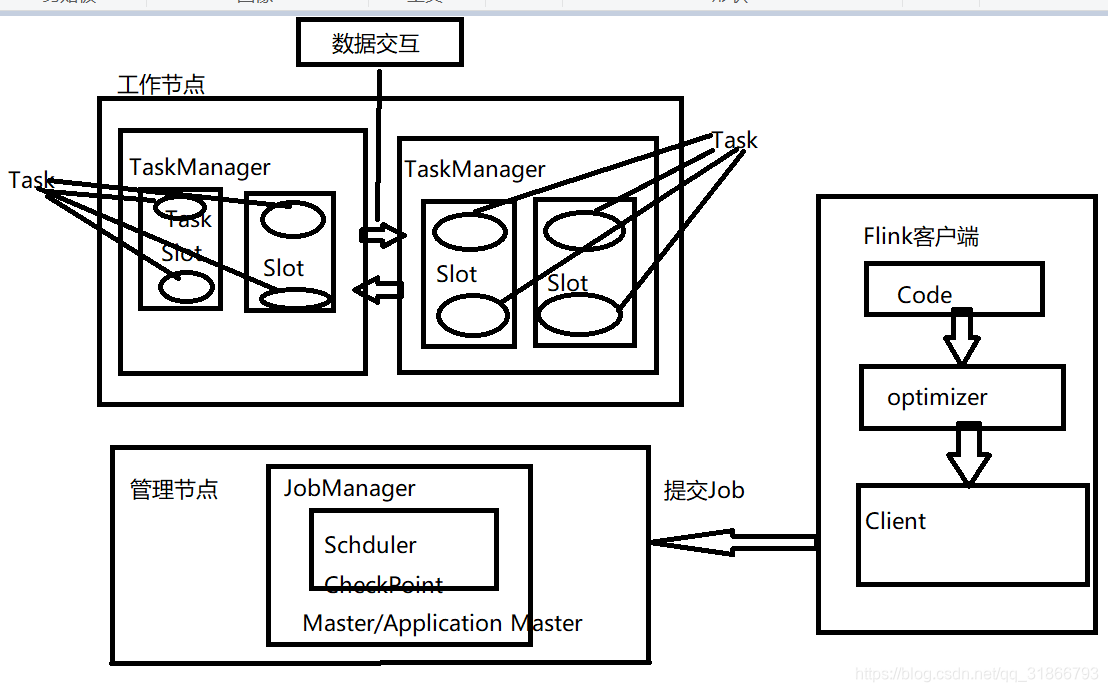

1. Draw Flink's basic architecture diagram yourself, by hand.

2. Create the project. I will skip the detailed steps and just list the pom dependencies:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>com.coder</groupId>

<artifactId>flink-wxgz</artifactId>

<version>0.0.1-SNAPSHOT</version>

</parent>

<artifactId>flink-wxgz-core</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>flink-wxgz-core</name>

<properties>

<java.version>1.8</java.version>

<flink.version>1.6.2</flink.version>

<scala.version>2.11.12</scala.version>

<scala.binary.version>2.11</scala.binary.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-elasticsearch</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<!--flink-->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-core</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-cep-scala_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!--flink to HDFS -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-filesystem_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-wikiedits_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.10_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.47</version>

</dependency>

<!--redis -->

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.0</version>

</dependency>

<dependency>

<groupId>org.apache.bahir</groupId>

<artifactId>flink-connector-redis_2.11</artifactId>

<version>1.0</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-rabbitmq_2.11</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- https://mvnrepository.com/artifact/com.rabbitmq/rabbitmq-client -->

<dependency>

<groupId>com.rabbitmq</groupId>

<artifactId>rabbitmq-client</artifactId>

<version>1.3.0</version>

</dependency>

<dependency>

<groupId>com.alibaba.rocketmq</groupId>

<artifactId>rocketmq-client</artifactId>

<version>3.2.6</version>

</dependency>

<!-- Scheduler (Quartz) -->

<dependency>

<groupId>org.quartz-scheduler</groupId>

<artifactId>quartz</artifactId>

<version>2.2.1</version>

</dependency>

<dependency>

<groupId>org.quartz-scheduler</groupId>

<artifactId>quartz-jobs</artifactId>

<version>2.2.1</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>1.2.3</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.7.5</version>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>2.7.5</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.40</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-jdbc</artifactId>

<version>${flink.version}</version>

</dependency>

<!-- Expression engine (Aviator, com.googlecode) -->

<dependency>

<groupId>com.googlecode.aviator</groupId>

<artifactId>aviator</artifactId>

<version>3.0.1</version>

</dependency>

<!-- JSON dependency -->

<dependency>

<groupId>org.json</groupId>

<artifactId>json</artifactId>

<version>20160810</version>

</dependency>

<!-- Added to resolve an error -->

<dependency>

<groupId>commons-io</groupId>

<artifactId>commons-io</artifactId>

<version>2.5</version>

</dependency>

<!-- Flink/Hive dependencies -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-hadoop-fs</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>com.jolbox</groupId>

<artifactId>bonecp</artifactId>

<version>0.8.0.RELEASE</version>

</dependency>

<dependency>

<groupId>com.twitter</groupId>

<artifactId>parquet-hive-bundle</artifactId>

<version>1.6.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-cli</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-common</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-service</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-shims</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.hive.hcatalog</groupId>

<artifactId>hive-hcatalog-core</artifactId>

<version>2.1.0</version>

</dependency>

<dependency>

<groupId>org.apache.thrift</groupId>

<artifactId>libfb303</artifactId>

<version>0.9.3</version>

<type>pom</type>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-hadoop-compatibility_2.11</artifactId>

<version>1.6.2</version>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-shaded-hadoop2</artifactId>

<version>1.6.2</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>3.1</version>

<configuration>

<source>1.8</source>

<target>1.8</target>

</configuration>

</plugin>

</plugins>

</build>

</project>

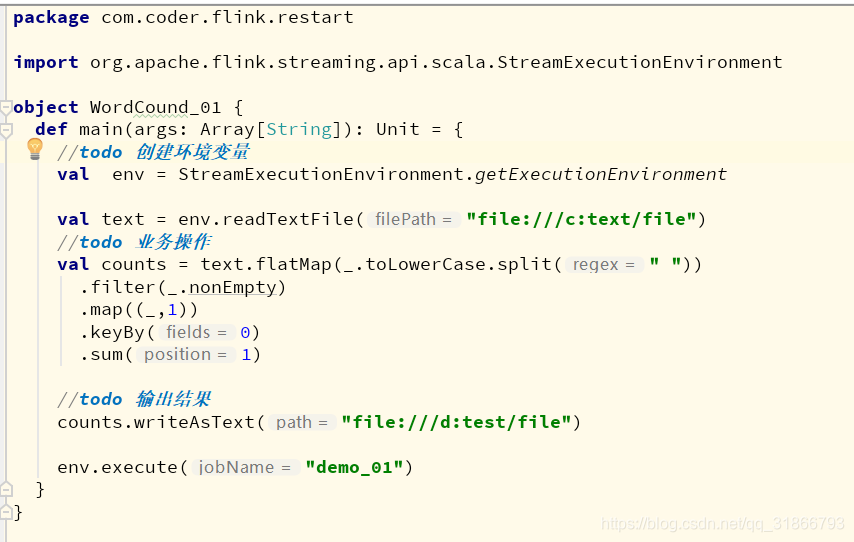

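3. Flink program structure. Start with a simple real-time streaming wordCount. When you are just getting started it is normal not to understand everything, so write it out a few times for practice.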

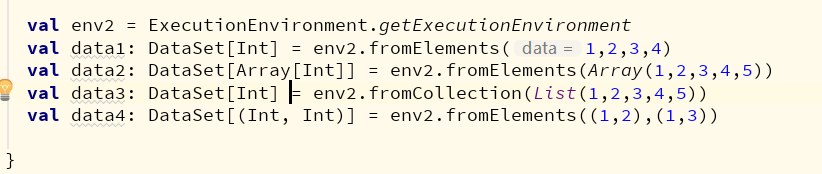
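For readers who cannot see the screenshot, here is a minimal sketch of the usual structure of a streaming job: get the environment, create a source, transform, add a sink, then call execute. It is not necessarily the exact code in the image. The socket host and port, the object name `StreamingWordCount`, and the helper `tokenize` are assumptions made for illustration.

```scala
import org.apache.flink.streaming.api.scala._

object StreamingWordCount {

  // Hypothetical helper: split a line into lowercase words, each paired with a count of 1.
  def tokenize(line: String): Seq[(String, Int)] =
    line.toLowerCase.split("\\W+").filter(_.nonEmpty).map(w => (w, 1)).toSeq

  def main(args: Array[String]): Unit = {
    // 1. Obtain the execution environment.
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // 2. Source: a text socket (host and port are assumptions; start one with `nc -lk 9999`).
    val lines: DataStream[String] = env.socketTextStream("localhost", 9999)

    // 3. Transformations: tokenize, key by the word, keep a running sum of the counts.
    val counts: DataStream[(String, Int)] = lines
      .flatMap((line: String) => tokenize(line))
      .keyBy(0)
      .sum(1)

    // 4. Sink: print the running counts to stdout.
    counts.print()

    // 5. Nothing runs until execute() is called.
    env.execute("Streaming WordCount")
  }
}
```

Typing words into the `nc` session should make updated counts appear on the job's stdout.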

4. Flink data types

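1) Native types: Flink handles these on its own. Write your own tests and try them out; in practice it mostly comes down to these few: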
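As a rough sketch of such a test, assuming "native types" here means Flink's basic types such as Int, Double and String, the batch API can build data sets from them directly. The object name and sample values are made up for illustration.

```scala
import org.apache.flink.api.scala._

object BasicTypesExample {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment

    // Basic types need no extra declarations; the type information is derived implicitly.
    val ints: DataSet[Int] = env.fromElements(1, 2, 3)
    val words: DataSet[String] = env.fromElements("flink", "spark", "storm")
    val doubles: DataSet[Double] = env.fromCollection(Seq(1.5, 2.5))

    // In the batch API, print() triggers execution of the plan that produces the data set.
    ints.map((i: Int) => i * 2).print()
    words.map((w: String) => w.toUpperCase).print()
    doubles.print()
  }
}
```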

2) Java Tuple types

val data5: DataSet[(Int, Int)] = env2.fromElements(new Tuple2(1,2),new Tuple2(1,3))

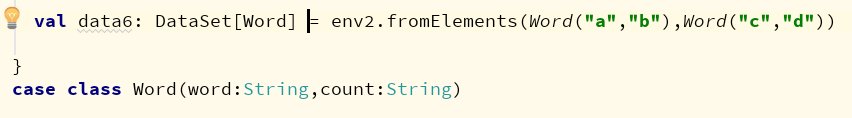
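Note that in Scala code, and assuming no import shadows the name, `new Tuple2(1,2)` in the line above builds a Scala tuple, which is why the declared type is `DataSet[(Int, Int)]`. Flink's own Java tuple lives in `org.apache.flink.api.java.tuple`. The sketch below puts the two side by side; the alias `JTuple2` and the sample values are assumptions for illustration.

```scala
import org.apache.flink.api.java.tuple.{Tuple2 => JTuple2}
import org.apache.flink.api.scala._

object JavaTupleExample {
  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment

    // Flink's Java tuple: mutable, with public fields f0 and f1.
    val javaTuples: DataSet[JTuple2[String, Integer]] = env.fromElements(
      new JTuple2[String, Integer]("a", 1),
      new JTuple2[String, Integer]("b", 2))

    // Scala tuple: immutable, with accessors _1 and _2.
    val scalaTuples: DataSet[(Int, Int)] = env.fromElements((1, 2), (1, 3))

    javaTuples.map((t: JTuple2[String, Integer]) => t.f0 + "=" + t.f1).print()
    scalaTuples.map((t: (Int, Int)) => t._1 + t._2).print()
  }
}
```

In Scala programs the native Scala tuples are usually the more convenient choice; the Java tuples mainly matter when sharing code with the Java API.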

3) Scala case class types

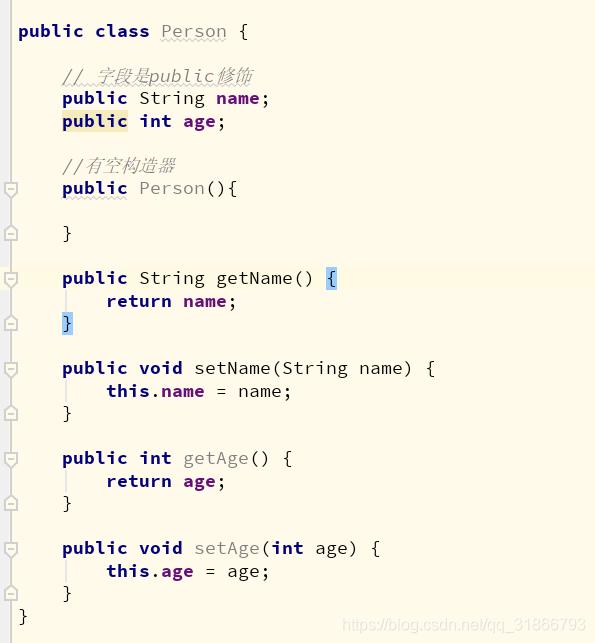
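A minimal sketch of using a case class as a stream element, in case the screenshot is not visible. The case class `WordCount` and its field names are hypothetical. The case class is declared at the top level so that Flink can derive its type information.

```scala
import org.apache.flink.streaming.api.scala._

// Hypothetical case class used only for this illustration.
case class WordCount(word: String, count: Int)

object CaseClassExample {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    val input: DataStream[WordCount] = env.fromElements(
      WordCount("hello", 1), WordCount("flink", 1), WordCount("hello", 2))

    // Case class fields can be referenced by name when keying and aggregating.
    input.keyBy("word").sum("count").print()

    env.execute("Case class example")
  }
}
```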

4) POJO types, which have to satisfy a few conditions

Once the class is defined, use it like this:

val data7: DataStream[Person] = env.fromElements(new Person("a",1),new Person("c",2))

data7.keyBy("name")
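The `Person` class itself is not shown above, so here is one possible definition, written as a sketch rather than the author's actual code. It follows Flink's POJO rules as I understand them: the class is public and not a non-static inner class, it has a public no-argument constructor, and every field is either public or reachable through a getter and setter. In Scala, `var` constructor parameters provide the getter/setter pair.

```scala
import org.apache.flink.streaming.api.scala._

// Assumed definition: `var` fields give Flink a getter and setter, and this() is the no-arg constructor.
class Person(var name: String, var age: Int) {
  def this() = this(null, -1)
  override def toString: String = s"Person($name, $age)"
}

object PojoExample {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    val data7: DataStream[Person] = env.fromElements(new Person("a", 1), new Person("c", 2))

    // POJO fields can be used as keys by name.
    data7.keyBy("name").print()

    env.execute("POJO example")
  }
}
```

If a class breaks one of these rules, Flink treats it as a generic type and falls back to Kryo for serialization, and field-name keys such as `keyBy("name")` are no longer available.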

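5. Note: when you run Scala job code, you may get the error could not find implicit value for.....

This happens because the Scala API relies on implicits (an implicit TypeInformation for each element type), so make sure every source file has the import: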

import org.apache.flink.api.scala._

or

import org.apache.flink.streaming.api.scala._
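To make the cause concrete, here is a small sketch. Operators such as `map` take an implicit `TypeInformation` for their result type, and the wildcard import brings in `createTypeInformation`, the macro that generates it. The object name and values are illustrative only.

```scala
import org.apache.flink.api.common.typeinfo.TypeInformation
import org.apache.flink.streaming.api.scala._

object ImplicitsExample {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // map needs an implicit TypeInformation[Int]; without the wildcard import above,
    // this line fails to compile with "could not find implicit value ...".
    val doubled: DataStream[Int] = env.fromElements(1, 2, 3).map((i: Int) => i * 2)

    // The same evidence can also be requested explicitly.
    val intInfo: TypeInformation[Int] = createTypeInformation[Int]
    println(intInfo)

    doubled.print()
    env.execute("Implicits example")
  }
}
```

Use the `org.apache.flink.api.scala._` import for batch (DataSet) code and the `org.apache.flink.streaming.api.scala._` import for streaming (DataStream) code.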

6. Specifying (enabling) serialization in Flink code

// Enable Avro serialization
env.getConfig.enableForceAvro()

// Enable Kryo serialization
env.getConfig.enableForceKryo()

// If Kryo cannot serialize your POJOs, register a default serializer for the type:
// env.getConfig.addDefaultKryoSerializer(type: Class[_], serializerClass: Class[_ <: Serializer[_]])
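The last call is easier to follow with a concrete type. Below is a sketch under stated assumptions: `LegacyEvent` and `LegacyEventSerializer` are hypothetical names invented for this example, and the serializer is written against the Kryo `Serializer` API that ships with Flink.

```scala
import com.esotericsoftware.kryo.{Kryo, Serializer}
import com.esotericsoftware.kryo.io.{Input, Output}
import org.apache.flink.streaming.api.scala._

// Hypothetical type that is not a valid POJO (no no-arg constructor, immutable field).
class LegacyEvent(val id: Long)

// Hypothetical serializer; it keeps a no-arg constructor so it can be created from its class.
class LegacyEventSerializer extends Serializer[LegacyEvent] {
  override def write(kryo: Kryo, output: Output, event: LegacyEvent): Unit =
    output.writeLong(event.id)

  override def read(kryo: Kryo, input: Input, tpe: Class[LegacyEvent]): LegacyEvent =
    new LegacyEvent(input.readLong())
}

object SerializationConfigExample {
  def main(args: Array[String]): Unit = {
    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // Force Kryo even for types Flink could handle as POJOs.
    env.getConfig.enableForceKryo()

    // Tell Kryo which serializer to use for LegacyEvent.
    env.getConfig.addDefaultKryoSerializer(classOf[LegacyEvent], classOf[LegacyEventSerializer])
  }
}
```

Registering the serializer by class keeps the job configuration itself serializable. The serializer only has to write and read the fields in the same order.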
