Blog of 白斩鸡 (Baizhanji): https://me.youkuaiyun.com/weixin_47482194

====================================================================================

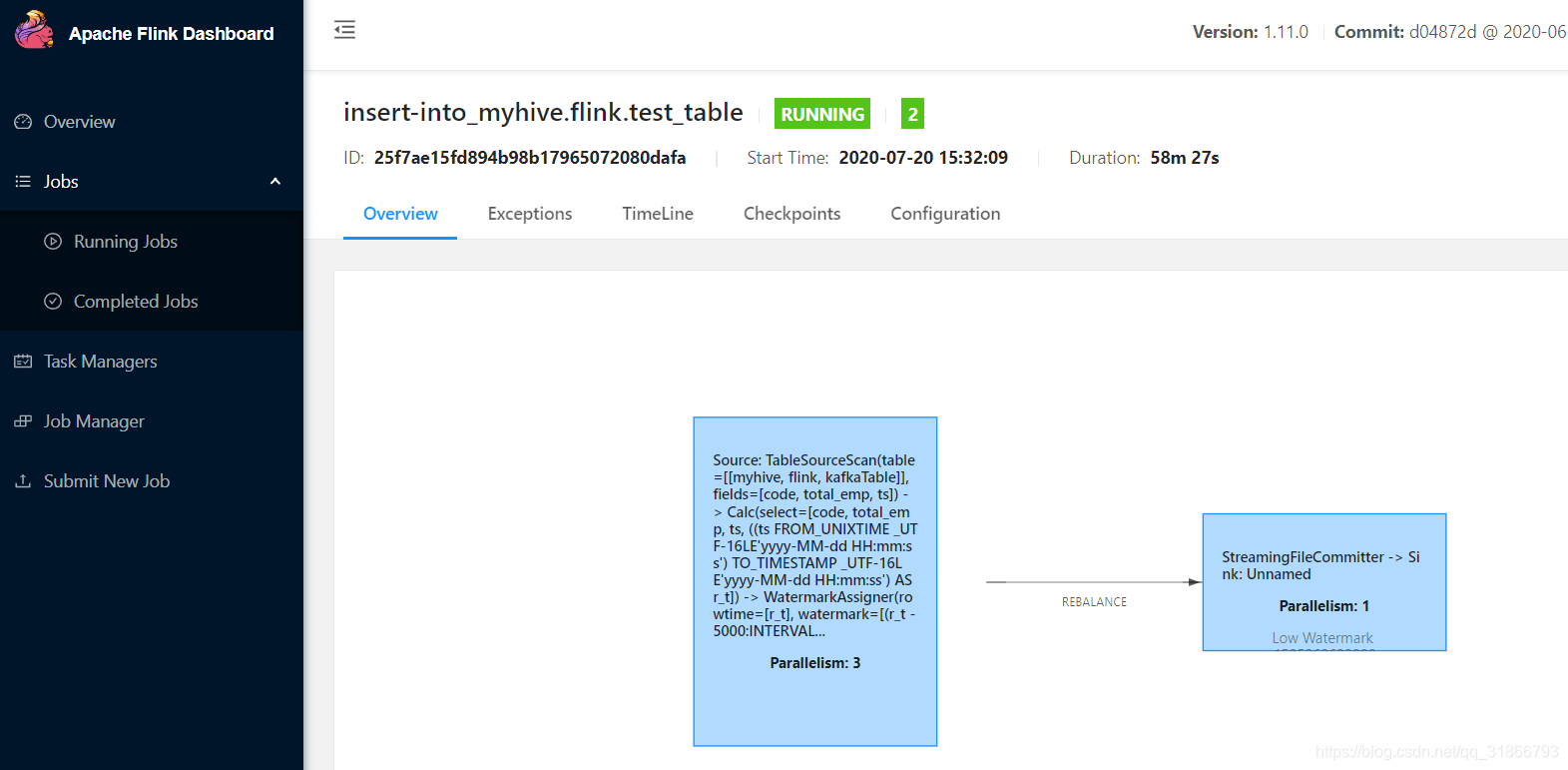

After several days of struggle, and with help from Baizhanji, I finally got jobs submitted and running on the cluster:

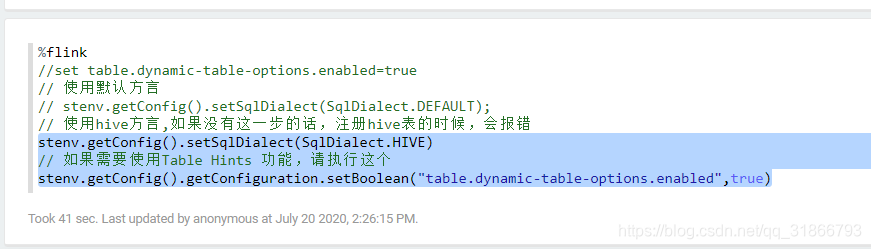

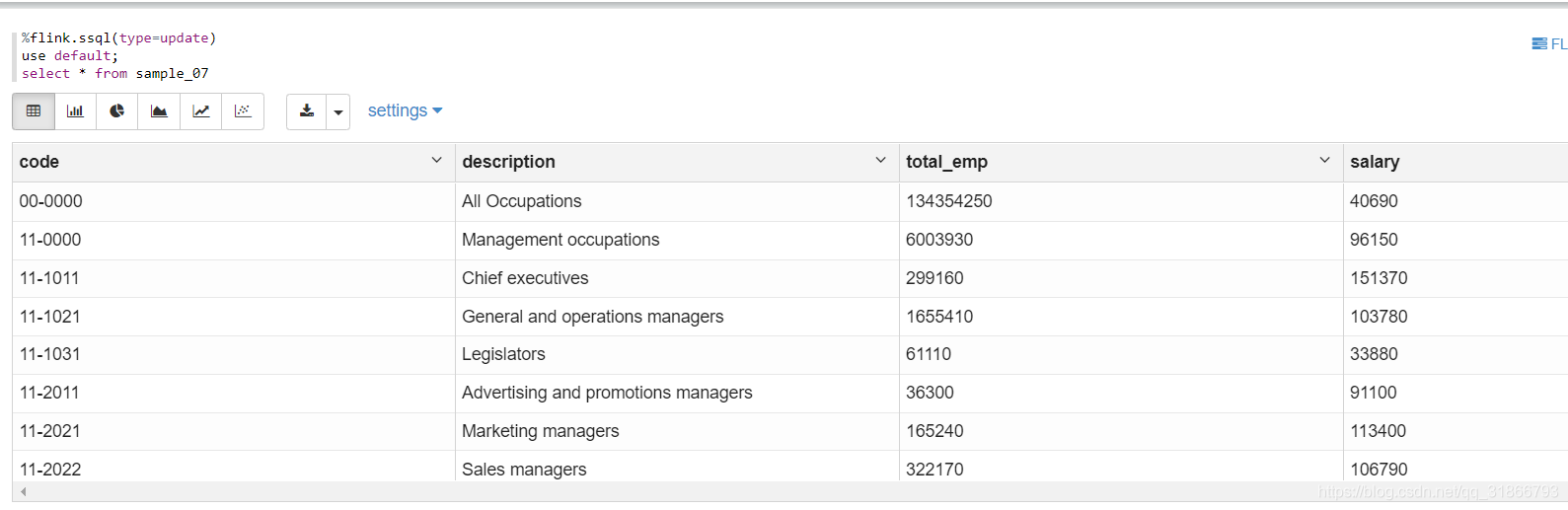

Submitting and running a job from Zeppelin:

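I ran into a lot of pitfalls, and I did not save or screenshot many of the error messages. Compared with a vanilla Apache cluster, what differs on a CDH cluster is which dependency jars you need and how you resolve dependency conflicts. It is worth reading this article first: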

https://developer.aliyun.com/article/761469.

Before that, no matter how I resolved dependency conflicts or which jars I added, everything ended in the same error:

    org.apache.flink.client.program.ProgramInvocationException: The main method caused an error: Failed to create Hive Metastore client
        at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:302)
        at org.apache.flink.client.program.PackagedProgram.invokeInteractiveModeForExecution(PackagedProgram.java:198)
        at org.apache.flink.client.ClientUtils.executeProgram(ClientUtils.java:149)
        at org.apache.flink.client.cli.CliFrontend.executeProgram(CliFrontend.java:699)
        at org.apache.flink.client.cli.CliFrontend.run(CliFrontend.java:232)
        at org.apache.flink.client.cli.CliFrontend.parseParameters(CliFrontend.java:916)
        at org.apache.flink.client.cli.CliFrontend.lambda$main$10(CliFrontend.java:992)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1924)
        at org.apache.flink.runtime.security.contexts.HadoopSecurityContext.runSecured(HadoopSecurityContext.java:41)
        at org.apache.flink.client.cli.CliFrontend.main(CliFrontend.java:992)
    Caused by: org.apache.flink.table.catalog.exceptions.CatalogException: Failed to create Hive Metastore client
        at org.apache.flink.table.catalog.hive.client.HiveShimV120.getHiveMetastoreClient(HiveShimV120.java:58)
        at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.createMetastoreClient(HiveMetastoreClientWrapper.java:240)
        at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientWrapper.<init>(HiveMetastoreClientWrapper.java:71)
        at org.apache.flink.table.catalog.hive.client.HiveMetastoreClientFactory.create(HiveMetastoreClientFactory.java:35)
        at org.apache.flink.table.catalog.hive.HiveCatalog.open(HiveCatalog.java:223)
        at org.apache.flink.table.catalog.CatalogManager.registerCatalog(CatalogManager.java:191)
        at org.apache.flink.table.api.internal.TableEnvironmentImpl.registerCatalog(TableEnvironmentImpl.java:331)
        at dataware.TestHive.main(TestHive.java:39)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.flink.client.program.PackagedProgram.callMainMethod(PackagedProgram.java:288)
        ... 11 more
    Caused by: java.lang.NoSuchMethodException: org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(org.apache.hadoop.hive.conf.HiveConf)
        at java.lang.Class.getMethod(Class.java:1786)
        at org.apache.flink.table.catalog.hive.client.HiveShimV120.getHiveMetastoreClient(HiveShimV120.java:54)
        ... 23 more
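The innermost cause is a `NoSuchMethodException` thrown by `Class.getMethod`, called from Flink's `HiveShimV120`: Flink looks up `RetryingMetaStoreClient.getProxy` reflectively, and the Hive class that actually got loaded does not have the overload it expects. A quick way to see what is really on the classpath is to ask the JVM which jar a class came from and which overloads it exposes. The sketch below is not from the original article; the class name `MethodProbe` is hypothetical, and it only uses standard JDK reflection.

```java
import java.lang.reflect.Method;
import java.security.CodeSource;
import java.util.ArrayList;
import java.util.List;

public class MethodProbe {

    // Load the class without running its static initializers; turn the checked exception into a runtime one.
    private static Class<?> load(String className) {
        try {
            return Class.forName(className, false, MethodProbe.class.getClassLoader());
        } catch (ClassNotFoundException e) {
            throw new IllegalArgumentException("Not on classpath: " + className, e);
        }
    }

    /** Lists the public overloads of methodName as "name(paramType, ...)" strings. */
    public static List<String> listOverloads(String className, String methodName) {
        List<String> result = new ArrayList<>();
        for (Method m : load(className).getMethods()) {
            if (!m.getName().equals(methodName)) continue;
            StringBuilder sig = new StringBuilder(methodName).append('(');
            Class<?>[] params = m.getParameterTypes();
            for (int i = 0; i < params.length; i++) {
                if (i > 0) sig.append(", ");
                sig.append(params[i].getTypeName());
            }
            result.add(sig.append(')').toString());
        }
        return result;
    }

    /** Returns the jar or directory the class was loaded from; JDK bootstrap classes have no code source. */
    public static String loadedFrom(String className) {
        CodeSource src = load(className).getProtectionDomain().getCodeSource();
        return src == null ? "<bootstrap / JDK>" : String.valueOf(src.getLocation());
    }

    public static void main(String[] args) {
        // Defaults to the class and method named in the stack trace above.
        String cls = args.length > 0 ? args[0] : "org.apache.hadoop.hive.metastore.RetryingMetaStoreClient";
        String method = args.length > 1 ? args[1] : "getProxy";
        System.out.println(cls + " loaded from: " + loadedFrom(cls));
        for (String sig : listOverloads(cls, method)) {
            System.out.println("  " + sig);
        }
    }
}
```

Assuming Flink lives under `$FLINK_HOME`, something like `java -cp "$FLINK_HOME/lib/*:." MethodProbe` shows which Hive jar wins and whether the `getProxy` overload Flink asks for is present.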

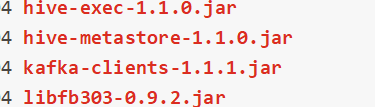

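The root cause is still the dependencies used when the job runs on the cluster. A fellow developer who also runs CDH shared the jars under Flink's lib directory on his cluster: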

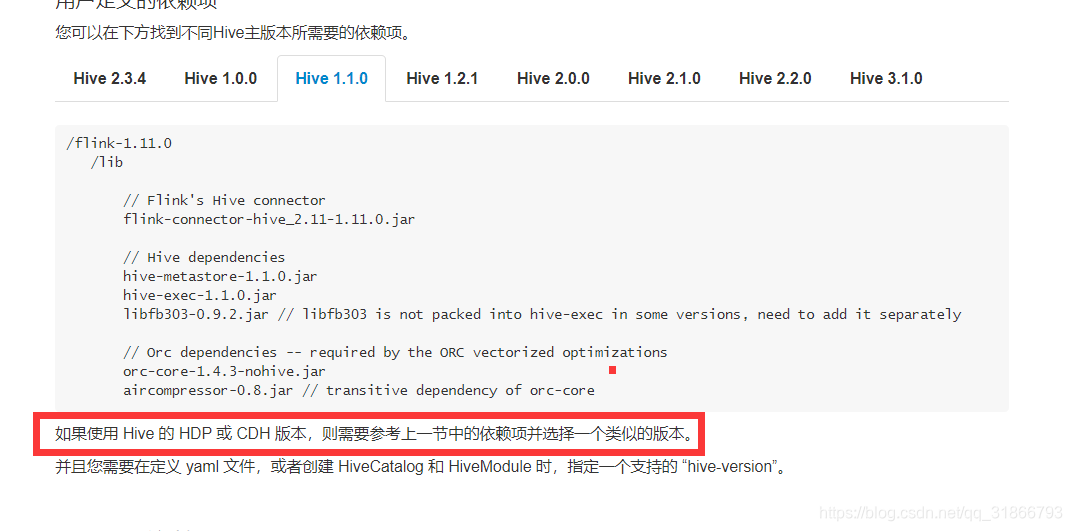

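This is in fact what the official documentation recommends.

Following the official docs, download

flink-sql-connector-hive-1.2.2_2.11-1.11.0.jar and put it into Flink's lib directory.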

Then the remaining jars:

libfb303-0.9.3.jar

And now the most important ones:

hive-exec-1.1.0-cdh5.16.2.jar

hive-metastore-1.1.0-cdh5.16.2.jar

Copy these two jars from the CDH directory /opt/cloudera/parcels/CDH/jars.
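Since almost every failure here came down to a missing or wrong jar, it can help to check Flink's lib directory before restarting anything. Below is a minimal sketch, assuming the four jar names listed above; the class name `LibCheck` and the default path `/opt/flink/lib` are hypothetical, so pass your real `<FLINK_HOME>/lib` as the first argument.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

public class LibCheck {

    // Jar names from the article (Flink 1.11.0 on CDH 5.16.2); adjust for other versions.
    public static final List<String> REQUIRED = Collections.unmodifiableList(Arrays.asList(
            "flink-sql-connector-hive-1.2.2_2.11-1.11.0.jar",
            "libfb303-0.9.3.jar",
            "hive-exec-1.1.0-cdh5.16.2.jar",
            "hive-metastore-1.1.0-cdh5.16.2.jar"));

    /** Returns the required jar names that are not present as regular files in libDir. */
    public static List<String> missingJars(Path libDir, List<String> required) {
        List<String> missing = new ArrayList<>();
        for (String jar : required) {
            if (!Files.isRegularFile(libDir.resolve(jar))) {
                missing.add(jar);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Hypothetical default location; override with the real lib directory.
        Path lib = Paths.get(args.length > 0 ? args[0] : "/opt/flink/lib");
        List<String> missing = missingJars(lib, REQUIRED);
        if (missing.isEmpty()) {
            System.out.println("All required Hive jars found in " + lib);
        } else {
            System.out.println("Missing in " + lib + ": " + missing);
        }
    }
}
```

It only checks presence by exact file name; it does not detect a second, conflicting Hive version sitting next to these jars.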

If errors still occur after startup, for example the following one:

Caused by: org.apache.flink.util.FlinkException: Failed to execute job 'UnnamedTable__5'. at org.apache.flink.str

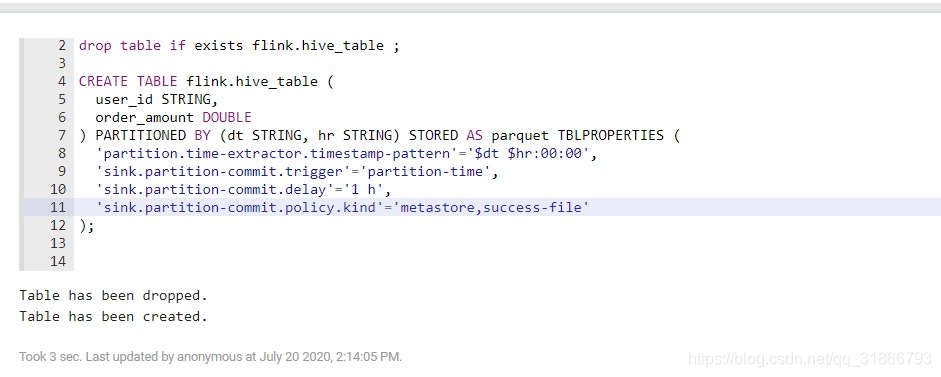

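This article records the dependency problems I hit when submitting jobs with Flink 1.11.0 and Zeppelin on CDH 5.16.2, and how I solved them. The main error was the failure to create the Hive Metastore client; consulting the official documentation and adjusting the HADOOP_CLASSPATH environment variable resolved both the dependency conflicts and the missing dependencies. When running Flink on CDH, make sure the Hive-related jars are included correctly and that Hadoop's environment variables are loaded at startup.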
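Flink's Hadoop integration docs suggest exporting the Hadoop classpath before starting Flink, e.g. `export HADOOP_CLASSPATH=$(hadoop classpath)`. On CDH that classpath can itself contain Hive or Hadoop jars whose versions clash with the ones in Flink's lib. The sketch below is a heuristic of my own, not the original author's method: it treats everything before the first `-<digit>` in a jar file name as the artifact name and reports artifacts that appear with more than one version. The class name `ClasspathConflicts` is hypothetical. Wildcard entries such as `lib/*` are skipped, so expand them first (for example with `hadoop classpath --glob`, if your Hadoop version supports it).

```java
import java.io.File;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class ClasspathConflicts {

    // Heuristic: artifact = text before the first "-<digit>", version = the rest up to ".jar".
    private static final Pattern JAR = Pattern.compile("^(.*?)-(\\d.*)\\.jar$");

    /** Maps each artifact name to its versions, keeping only artifacts seen with two or more versions. */
    public static Map<String, Set<String>> findConflicts(String classpath) {
        Map<String, Set<String>> versions = new TreeMap<>();
        for (String entry : classpath.split(Pattern.quote(File.pathSeparator))) {
            String name = new File(entry).getName();
            Matcher m = JAR.matcher(name);
            if (!m.matches()) {
                continue; // conf directories, wildcards like lib/*, unversioned jars
            }
            versions.computeIfAbsent(m.group(1), k -> new TreeSet<>()).add(m.group(2));
        }
        versions.values().removeIf(v -> v.size() < 2);
        return versions;
    }

    public static void main(String[] args) {
        String cp = System.getenv("HADOOP_CLASSPATH");
        if (cp == null || cp.isEmpty()) {
            System.out.println("HADOOP_CLASSPATH is not set");
            return;
        }
        findConflicts(cp).forEach((artifact, vs) -> System.out.println(artifact + " -> " + vs));
    }
}
```

Name-based matching misses shaded or renamed jars, so treat its output as a list of suspects rather than a verdict.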
