目录

1、com.xiong.bean.LuceneBean.java

2、package com.xiong.lucene.OperateLucene.java

3、com.xiong.utils.DocementUtil.java

一、项目需求

将文章作文Lucene的仓库和索引库,当输入一段话如“孙悟空很厉害”自动进行分词,并全文索引,返回其id号和文章内容,其中由于给多家银行,所以bean有银行号

二、项目开发所需基本知识

https://blog.youkuaiyun.com/qq_27339781/article/details/82814360

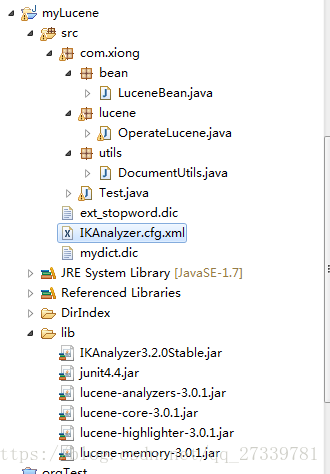

三、项目结构

四、代码

1、com.xiong.bean.LuceneBean.java

package com.xiong.bean;

import java.io.Serializable;

public class LuceneBean implements Serializable{

private static final long serialVersionUID = 1L;

private String id;

private String content;

private String bankId;

public String getId() {

return id;

}

public void setId(String id) {

this.id = id;

}

public String getContent() {

return content;

}

public void setContent(String content) {

this.content = content;

}

public String getBankId() {

return bankId;

}

public void setBankId(String bankId) {

this.bankId = bankId;

}

}

2、package com.xiong.lucene.OperateLucene.java

package com.xiong.lucene;

import java.io.File;

import java.io.IOException;

import java.io.StringReader;

import java.util.ArrayList;

import java.util.List;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.analysis.TokenStream;

import org.apache.lucene.analysis.tokenattributes.TermAttribute;

import org.apache.lucene.document.Document;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.index.IndexWriter.MaxFieldLength;

import org.apache.lucene.index.Term;

import org.apache.lucene.queryParser.MultiFieldQueryParser;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.search.BooleanClause.Occur;

import org.apache.lucene.search.BooleanQuery;

import org.apache.lucene.search.IndexSearcher;

import org.apache.lucene.search.Query;

import org.apache.lucene.search.ScoreDoc;

import org.apache.lucene.search.TermQuery;

import org.apache.lucene.search.TopDocs;

import org.apache.lucene.store.Directory;

import org.apache.lucene.store.FSDirectory;

import org.apache.lucene.util.Version;

import org.junit.Test;

import org.wltea.analyzer.lucene.IKAnalyzer;

import com.xiong.bean.LuceneBean;

import com.xiong.utils.DocumentUtils;

public class OperateLucene {

private String dirPath;

public String getDirPath() {

return dirPath;

}

public void setDirPath(String dirPath) {

this.dirPath = dirPath;

}

public OperateLucene(String dirPath) {

super();

this.dirPath = dirPath;

}

/*-----------------------------------------------------------------------------------------------------------*/

/**

* 添加到Lucene的List数据库中

* @param luceneBeans lucene的List

* @param dirPath

*/

public void writeLucene(List<LuceneBean> luceneBeans){

IndexWriter indexWriter = null;

try {

/**

* 第一个参数 :索引库的位置

*/

Directory directory = FSDirectory.open(new File(dirPath));

/**

* 第二个参数:分词器

*/

Analyzer analyzer = new IKAnalyzer();

/**

* 第三个参数:限制索引库中字段的大小

*/

indexWriter = new IndexWriter(directory, analyzer, MaxFieldLength.LIMITED);

for (int i = 0; i < luceneBeans.size(); i++) {

LuceneBean luceneBean = luceneBeans.get(i);

//把article转化成document

Document document = DocumentUtils.bean2Document(luceneBean);

//把document加入到索引库中

indexWriter.addDocument(document);

}

} catch (IOException e) {

e.printStackTrace();

}finally{

try {

indexWriter.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

/*-----------------------------------------------------------------------------------------------------------*/

/**

* 添加到Lucene数据库中,单个

* @param luceneBeans lucene

* @param dirPath

*/

public boolean writeLucene(LuceneBean luceneBean){

IndexWriter indexWriter = null;

try {

/**

* 第一个参数 :索引库的位置

*/

Directory directory = FSDirectory.open(new File(dirPath));

/**

* 第二个参数:分词器

*/

Analyzer analyzer = new IKAnalyzer();

/**

* 第三个参数:限制索引库中字段的大小

*/

indexWriter = new IndexWriter(directory, analyzer, MaxFieldLength.LIMITED);

//把lucene转化成document

Document document = DocumentUtils.bean2Document(luceneBean);

//把document加入到索引库中

indexWriter.addDocument(document);

return true;

} catch (IOException e) {

e.printStackTrace();

return false;

}finally{

try {

indexWriter.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

/*-----------------------------------------------------------------------------------------------------------*/

/**

* 删除

* 不是把原来的cfs文件删除掉了,而是多了一个del文件

*/

@Test

public boolean delete(String id){

IndexWriter indexWriter = null;

try {

/**

* 第一个参数 :索引库的位置

*/

Directory directory = FSDirectory.open(new File(dirPath));

/**

* 第二个参数:分词器

*/

Analyzer analyzer = new IKAnalyzer();

/**

* 第三个参数:限制索引库中字段的大小

*/

indexWriter = new IndexWriter(directory, analyzer, MaxFieldLength.LIMITED);

Term term = new Term("id",id);

indexWriter.deleteDocuments(term);

return true;

} catch (IOException e) {

e.printStackTrace();

return false;

}finally{

try {

indexWriter.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

/*-----------------------------------------------------------------------------------------------------------*/

/**

* 修改

* 先删除后增加

*/

@Test

public boolean update(LuceneBean luceneBean){

IndexWriter indexWriter = null;

try {

/**

* 第一个参数 :索引库的位置

*/

Directory directory = FSDirectory.open(new File(dirPath));

/**

* 第二个参数:分词器

*/

Analyzer analyzer = new IKAnalyzer();

/**

* 第三个参数:限制索引库中字段的大小

*/

indexWriter = new IndexWriter(directory, analyzer, MaxFieldLength.LIMITED);

Term term = new Term("id",luceneBean.getId());

//把lucene转化成document

Document document = DocumentUtils.bean2Document(luceneBean);

indexWriter.updateDocument(term, document);

return true;

} catch (IOException e) {

e.printStackTrace();

return false;

}finally{

try {

indexWriter.close();

} catch (Exception e) {

e.printStackTrace();

}

}

}

/*-----------------------------------------------------------------------------------------------------------*/

/**

* 查找

* @throws Exception

*/

@Test

public boolean search(String content,String bankId){

try {

Directory directory = FSDirectory.open(new File(dirPath));

IndexSearcher indexSearcher = new IndexSearcher(directory);

BooleanQuery booleanQuery = new BooleanQuery();

Query query1 = new TermQuery(new Term("bankId", bankId));

booleanQuery.add(query1, Occur.MUST);

List<String> queryList = getQueryList(content);

for (int i = 0; i < queryList.size(); i++) {

String str = queryList.get(i);

Query query2 = new TermQuery(new Term("content", str));

booleanQuery.add(query2, Occur.SHOULD);

}

TopDocs topDocs = indexSearcher.search(booleanQuery, 5);

ScoreDoc[] scoreDocs = topDocs.scoreDocs;

List<LuceneBean> LuceneBeans = new ArrayList<LuceneBean>();

for (ScoreDoc scoreDoc : scoreDocs) {

int index = scoreDoc.doc;

Document document = indexSearcher.doc(index);

LuceneBean LuceneBean = DocumentUtils.document2Bean(document);

LuceneBeans.add(LuceneBean);

}

for (LuceneBean lucene : LuceneBeans) {

System.out.println(lucene.getId());

System.out.println(lucene.getContent());

System.out.println(lucene.getBankId());

}

return true;

} catch (Exception e) {

e.printStackTrace();

return false;

}finally{

}

}

/*-----------------------------------------------------------------------------------------------------------*/

/**

* 获取查询的组件

* @param content

* @return

* @throws IOException

*/

public List<String> getQueryList(String content) throws IOException{

Analyzer analyzer = new IKAnalyzer();

TokenStream tokenStream = analyzer.tokenStream("", new StringReader(content));

tokenStream.addAttribute(TermAttribute.class);

List<String> list = new ArrayList<String>();

while (tokenStream.incrementToken()) {

TermAttribute termAttribute = tokenStream.getAttribute(TermAttribute.class);

list.add(termAttribute.term());

System.out.println(termAttribute.term());

}

System.out.println("-------------------------------------------------");

tokenStream.end();

tokenStream.close();

return list;

}

/*-----------------------------------------------------------------------------------------------------------*/

}

3、com.xiong.utils.DocementUtil.java

package com.xiong.utils;

import org.apache.lucene.document.Document;

import org.apache.lucene.document.Field;

import org.apache.lucene.document.Field.Index;

import org.apache.lucene.document.Field.Store;

import com.xiong.bean.LuceneBean;

public class DocumentUtils {

public static Document bean2Document(LuceneBean luceneBean){

Document document = new Document();

Field id = new Field("id", luceneBean.getId(), Store.YES, Index.NOT_ANALYZED);

Field content = new Field("content", luceneBean.getContent(), Store.YES, Index.ANALYZED);

Field bankId = new Field("bankId", luceneBean.getBankId(), Store.YES, Index.NOT_ANALYZED);

//把上面的field放入到document中

document.add(id);

document.add(content);

document.add(bankId);

return document;

}

public static LuceneBean document2Bean(Document document){

LuceneBean luceneBean = new LuceneBean();

luceneBean.setId(document.get("id"));

luceneBean.setContent(document.get("content"));

luceneBean.setBankId(document.get("bankId"));

return luceneBean;

}

}

4.com.xiong.Test.java

package com.xiong;

import java.io.IOException;

import com.xiong.lucene.OperateLucene;

public class Test {

public static void main(String[] args) throws IOException {

// List<LuceneBean> list = new ArrayList<LuceneBean>();

// for (int i = 0; i < 20; i++) {

// LuceneBean luceneBean = new LuceneBean();

// luceneBean.setId("2018"+i);

// luceneBean.setContent("我是银行的记录");

// luceneBean.setBankId(i+"");

// list.add(luceneBean);

// }

OperateLucene operateLucene = new OperateLucene("./DirIndex");

// operateLucene.writeLucene(list);

// operateLucene.delete("20182");

// LuceneBean luceneBean = new LuceneBean();

// luceneBean.setId("20183");

// luceneBean.setContent("我是银行的记录哈哈哈");

// luceneBean.setBankId("3");

// operateLucene.update(luceneBean);

operateLucene.search("记录","3");

// List<String> list = operateLucene.getQueryList("山西省太原市晋源区姚村镇枣园头关道街555号");

// for (int i = 0; i < list.size(); i++) {

// System.out.println(list.get(i));

// }

}

}

5.IKAnalyzer.cfg.xml

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">

<properties>

<comment>IK Analyzer 扩展配置</comment>

<!--用户可以在这里配置自己的扩展字典-->

<entry key="ext_dict">/mydict.dic; </entry>

<!--用户可以在这里配置自己的扩展停止词字典

<entry key="ext_stopwords">/ext_stopword.dic</entry>

-->

</properties>

866

866

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?