Advanced Bayesian Learning

- 前言

- Review Bayes Optimal Classifier

- Naive Bayes Classifier

- Naive Bayes for Document Classi cation

- Bayesian Networks

- Summary

前言

本文将基于UoA的课件,连接上一篇博文介绍机器学习中的贝叶斯。看不太懂的读者请先阅读:贝叶斯学习(Bayesian Learning)基础篇

涉及的英语比较基础,所以为节省时间(不是full-time,还有其他三门课程,所以时间还是比较紧的),只在我以为需要解释的地方进行解释。

此文不用于任何商业用途,仅仅是个人学习过程笔记以及心得体会,侵必删。

Review Bayes Optimal Classifier

Bayes Optimal Classifier, also known as Bayes classifier, is a probabilistic model used for classification tasks. It is based on Bayes’ theorem and makes a decision based on the posterior probability of each class given the observed data.

The Bayes Optimal Classifier assumes that the distribution of the input data and the conditional probabilities of each class given the data are known. It then computes the posterior probability of each class given the data and chooses the class with the highest posterior probability as the classification result.

One of the advantages of the Bayes Optimal Classifier is that it is theoretically optimal, meaning it achieves the lowest possible classification error rate for any given distribution of the data. However, in practice, it may be difficult to estimate the distribution of the data accurately, and the assumptions made by the model may not hold.

The Bayes Optimal Classifier is often used as a benchmark for evaluating the performance of other classifiers, and it serves as a theoretical foundation for many probabilistic classification methods such as Naive Bayes, Bayesian Networks, and Bayesian Linear Regression.

Naive Bayes Classifier

For the concept

For the concept

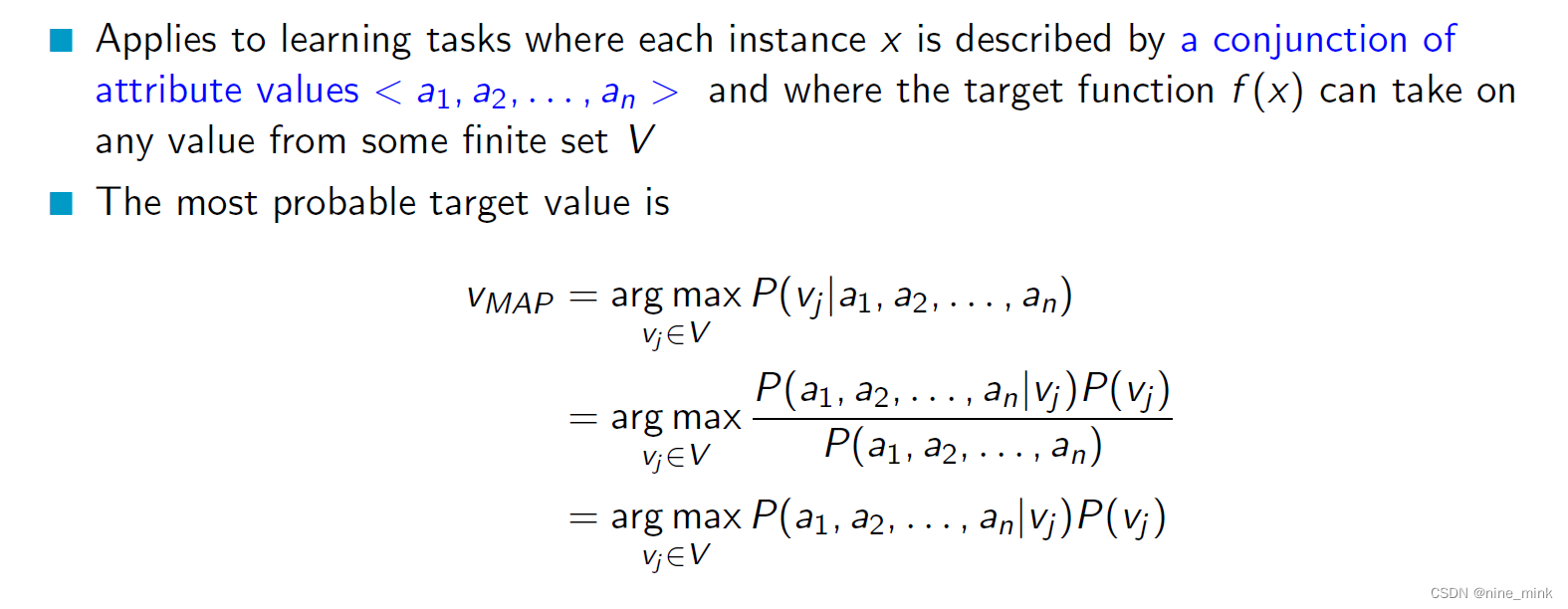

The Naive Bayes Classifier is a probabilistic algorithm that is commonly used for classification tasks. It is based on the Bayes theorem and applies to learning tasks where each instance is described by a conjunction of attribute values and where the target function can take on any value from some finite set.

In other words, the Naive Bayes Classifier is used when we have a set of attributes that describe an instance, and we want to predict the value of a target variable based on these attributes. The attributes are assumed to be independent of each other, and this assumption is known as the “naive” assumption, hence the name “Naive Bayes”.

For example, consider a dataset of emails that are labeled as either spam or not spam. Each email is described by a set of attributes such as the presence of certain words or phrases, the length of the email, etc. The target variable is the label (spam or not spam) that we want to predict for new emails. The Naive Bayes Classifier can be used to learn a model from this data, which can then be used to predict the label of new emails based on their attributes.

First, given the training data D, we can estimate the probability of each target value Vj by counting its frequency in D.

First, given the training data D, we can estimate the probability of each target value Vj by counting its frequency in D.

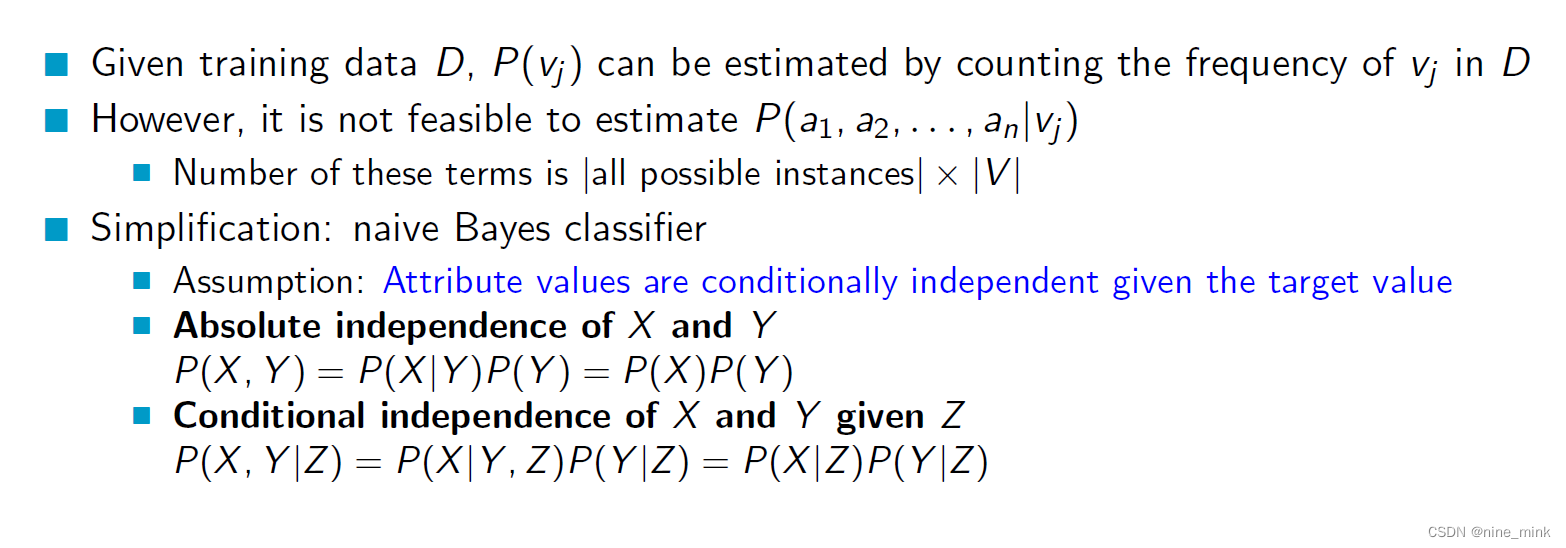

However, estimating the joint probability of all attribute values and target value P(a1, a2, …, an | Vj) is not feasible because the number of possible instances is too large (|all possible instances| x |V|).

To simplify the problem, the naive Bayes classifier assumes that the attribute values

本文介绍了贝叶斯学习中的BayesOptimalClassifier和NaiveBayesClassifier,讨论了条件独立性和拉普拉斯平滑在处理数据中的作用。此外,还探讨了朴素贝叶斯在文档分类问题上的应用,并提到了贝叶斯网络的概念,强调了变量间的关系和联合概率分布的重要性。

本文介绍了贝叶斯学习中的BayesOptimalClassifier和NaiveBayesClassifier,讨论了条件独立性和拉普拉斯平滑在处理数据中的作用。此外,还探讨了朴素贝叶斯在文档分类问题上的应用,并提到了贝叶斯网络的概念,强调了变量间的关系和联合概率分布的重要性。

最低0.47元/天 解锁文章

最低0.47元/天 解锁文章

3418

3418

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?