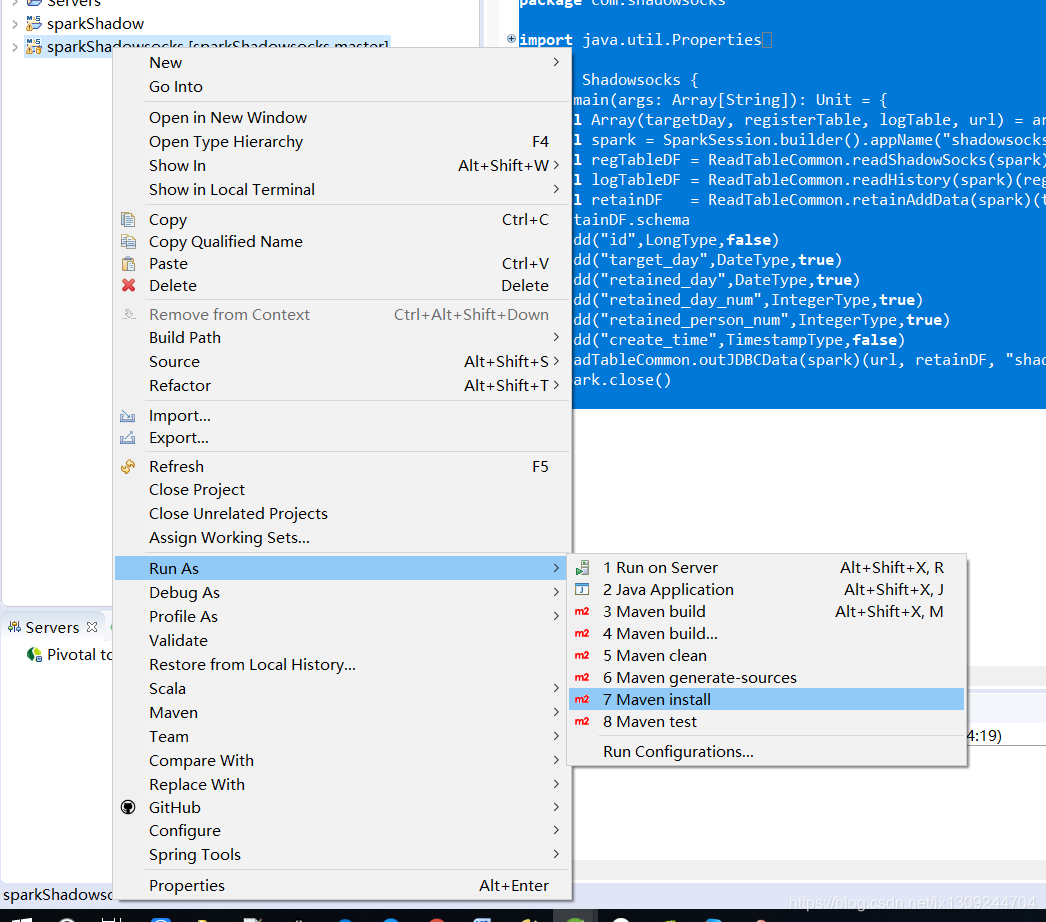

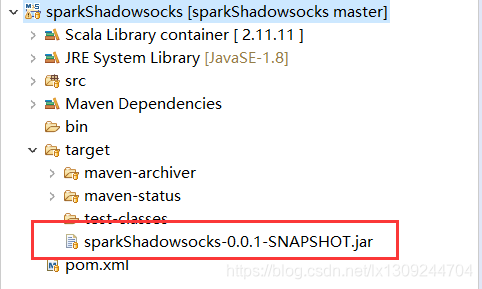

With the code written, the next step is to package it and run it on the cluster. Package it as follows:

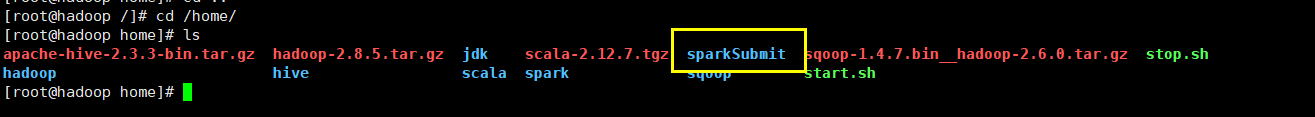

On the server, create a folder named sparkSubmit under the /home directory:

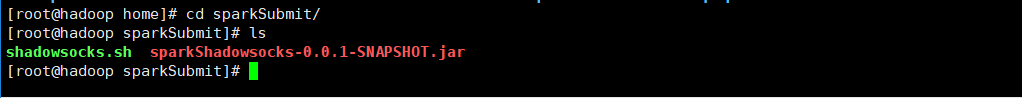

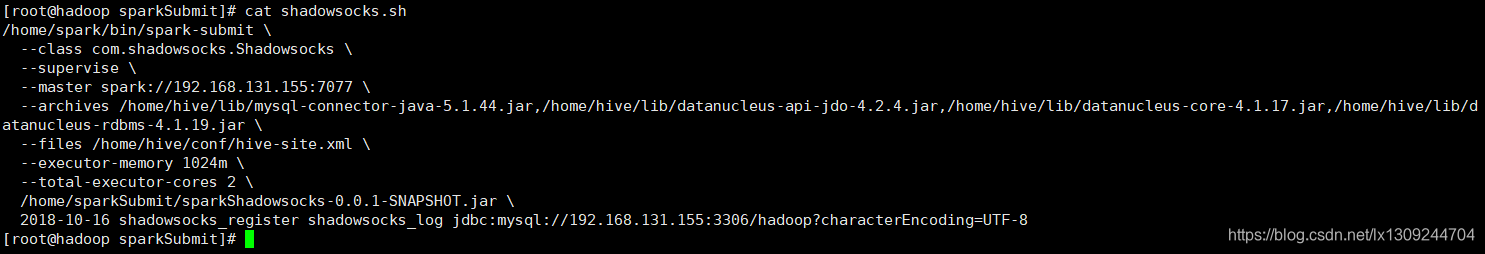

Next, upload the packaged jar to sparkSubmit and write a launch script.
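
The folder creation and upload can also be done from the build machine. The following is a minimal sketch, not the author's procedure: the function name `deploy_jar`, the `RUN` dry-run switch, and the use of `ssh`/`scp` are assumptions for illustration; only the target directory `/home/sparkSubmit` comes from the text.

```shell
# Hypothetical helper: create /home/sparkSubmit on the server and copy the jar there.
# Set RUN=echo to print the commands instead of executing them.
deploy_jar() {
  local_jar="${1:?path to the packaged jar}"
  server="${2:?user@host of the Spark server}"
  remote_dir=/home/sparkSubmit   # directory named in this article

  # mkdir -p is a no-op if the directory already exists
  ${RUN:-} ssh "$server" "mkdir -p $remote_dir"
  # copy the jar into the directory
  ${RUN:-} scp "$local_jar" "$server:$remote_dir/"
}
```

A call might look like `deploy_jar path/to/sparkShadowsocks-0.0.1-SNAPSHOT.jar user@server`, where the local path and the `user@server` login are placeholders for your own environment.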

For job submission modes and parameters, see the official documentation: http://spark.apache.org/docs/latest/submitting-applications.html. The launch script, shadowsocks.sh, reads as follows:

    /home/spark/bin/spark-submit \
    --class com.shadowsocks.Shadowsocks \
    --supervise \
    --master spark://192.168.131.155:7077 \
    --archives /home/hive/lib/mysql-connector-java-5.1.44.jar,/home/hive/lib/datanucleus-api-jdo-4.2.4.jar,/home/hive/lib/datanucleus-core-4.1.17.jar,/home/hive/lib/datanucleus-rdbms-4.1.19.jar \
    --files /home/hive/conf/hive-site.xml \
    --executor-memory 1024m \
    --total-executor-cores 2 \
    /home/sparkSubmit/sparkShadowsocks-0.0.1-SNAPSHOT.jar \
    2018-10-16 shadowsocks_register shadowsocks_log jdbc:mysql://192.168.131.155:3306/hadoop?characterEncoding=UTF-8
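
Since the first application argument looks like a date, it may be convenient to pass it in rather than hard-code it. The sketch below is one way to do that, under stated assumptions: the function name `submit` and the `DRY_RUN` switch are illustrative additions, and the meaning of the four trailing arguments (apparently a date, two table names, and a MySQL JDBC URL) is defined by the main class, not by this script. All paths, flags, and values are copied from the script above.

```shell
# Hypothetical wrapper around the spark-submit call above.
# Usage: submit YYYY-MM-DD     (set DRY_RUN=1 to print the command only)
submit() {
  run_date="${1:?usage: submit YYYY-MM-DD}"
  hive_lib=/home/hive/lib
  deps="$hive_lib/mysql-connector-java-5.1.44.jar,$hive_lib/datanucleus-api-jdo-4.2.4.jar,$hive_lib/datanucleus-core-4.1.17.jar,$hive_lib/datanucleus-rdbms-4.1.19.jar"

  # Notes on two flags, per `spark-submit --help`:
  #  * --supervise is listed for cluster deploy mode only.
  #  * --jars is the flag documented for adding jars to the driver and
  #    executor classpaths; --archives is kept here only because the
  #    original script uses it.
  set -- /home/spark/bin/spark-submit \
    --class com.shadowsocks.Shadowsocks \
    --supervise \
    --master spark://192.168.131.155:7077 \
    --archives "$deps" \
    --files /home/hive/conf/hive-site.xml \
    --executor-memory 1024m \
    --total-executor-cores 2 \
    /home/sparkSubmit/sparkShadowsocks-0.0.1-SNAPSHOT.jar \
    "$run_date" shadowsocks_register shadowsocks_log \
    'jdbc:mysql://192.168.131.155:3306/hadoop?characterEncoding=UTF-8'

  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"   # show the assembled command on one line
  else
    "$@"                 # run spark-submit with the assembled arguments
  fi
}
```

Calling `submit 2018-10-16` reproduces the original invocation. The JDBC URL is single-quoted so that the shell never treats the `?` as a glob character.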

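This article explains how to package and deploy an application in a Spark environment: creating the folder, uploading the jar, writing the launch script, and setting the parameters for submitting the job with spark-submit.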
