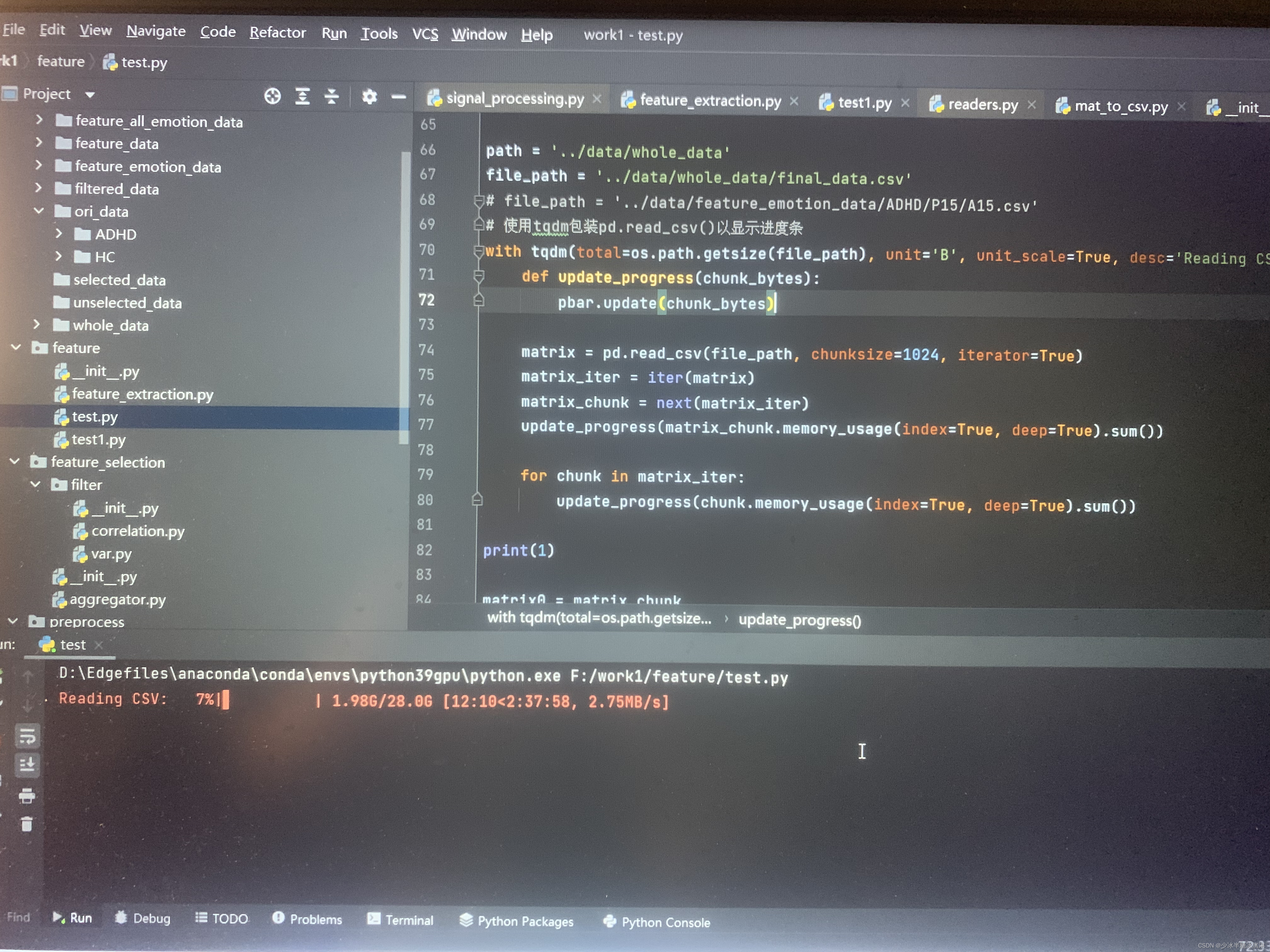

今天遇到了需要处理大型数据集的情况,对主流的方法自己都进行了测试。如果还在头铁遇到下图这种情况,建议跑路使用如下方法:

使用了pandas, polars, datatable, dask等主流库测试打开大型csv文件的速度,测试代码如下:

import dask.dataframe as dd

import time

import polars as pl

from sys import getsizeof

import datatable as dt

# file_path = '../data/whole_data/final_data.csv'

file_path = '../data/feature_emotion_data/ADHD/P15/A15.csv'

times = []

time_start = time.time()

py_data = pd.read_csv(file_path, encoding="gbk",engine="python")

time_end = time.time()

print("耗时{}秒".format(time_end - time_start))

print("数据共{}kB".format(round(getsizeof(py_data) / 1024, 2)))

times.append(time_end - time_start)

#pandas c

time_start = time.time()

c_data = pd.read_csv(file_path, encoding="gbk", engine="c")

time_end = time.time()

print("耗时{}秒".format(time_end - time_start))

print("数据共{}kB".format(round(getsizeof(c_data) / 1024, 2)))

times.append(time_end - time_start)

#polars

time_start = time.time()

polars_data = pl.read_csv(file_path, encoding="utf8-lossy")

end_time = time.time()

print("耗时{}秒".format(end_time - time_start))

print("data对象共{}kB".format(round(getsizeof(polars_data) / 1024, 2)))

pandas_df = polars_data.to_pandas()

memory_usage_bytes = pandas_df.memory_usage(deep=True).sum()

memory_usage_kb = round(memory_usage_bytes / 1024, 2)

print("数据共{}KB".format(memory_usage_kb))

times.append(end_time - time_start)

#datatable

time_start = time.time()

dt_data = dt.fread(file_path, encoding="gbk")

end_time = time.time()

print("耗时{}秒".format(end_time - time_start))

print("datatable版本:", dt.__version__)

print(type(dt_data))

print("数据共{}kB".format(round(getsizeof(dt_data) / 1024, 2)))

times.append(end_time - time_start)

# # dask读取大型CSV文件

# df = dd.read_csv(file_path)

print(times)

print(1)最后运行的结果如下:

耗时7.5155909061431885秒

数据共56527.17kB

耗时19.84559988975525秒

数据共56527.17kB

耗时1.9473328590393066秒

data对象共0.05kB

数据共56527.16KB

耗时4.629514455795288秒

datatable版本: 1.1.0

<class 'datatable.Frame'>

数据共29202.6kB

[7.5155909061431885, 19.84559988975525, 1.9473328590393066, 4.629514455795288]

1从这里,我们可发现,在速度上polars大于datatable大于pandas

128

128

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?