1 Add the Flume configuration file flume-pull-streaming.conf

# Name the components on this agent

simple-agent.sources = netcat-source

simple-agent.sinks = spark-sink

simple-agent.channels = memory-channel

simple-agent.sources.netcat-source.type = netcat

simple-agent.sources.netcat-source.bind = localhost

simple-agent.sources.netcat-source.port = 44444

simple-agent.sinks.spark-sink.type = org.apache.spark.streaming.flume.sink.SparkSink

simple-agent.sinks.spark-sink.hostname=localhost

simple-agent.sinks.spark-sink.port=41414

simple-agent.channels.memory-channel.type = memory

simple-agent.sources.netcat-source.channels = memory-channel

simple-agent.sinks.spark-sink.channel = memory-channel

2 Add dependencies

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-flume-sink_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.commons</groupId>

<artifactId>commons-lang3</artifactId>

<version>3.5</version>

</dependency>

3 FlumePullWC.scala

package com.lihaogn.sparkFlume

import org.apache.spark.SparkConf

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.streaming.flume.FlumeUtils

/**

* spark streaming & flume => pull

*/

object FlumePullWC {

def main(args: Array[String]): Unit = {

if (args.length != 2) {

System.err.println("Usage: FlumePullWC <hostname> <port>")

System.exit(1)

}

val Array(hostname, port) = args

val sparkConf = new SparkConf() // for local runs: .setMaster("local[2]").setAppName("FlumePullWC")

val ssc = new StreamingContext(sparkConf, Seconds(5))

// Integrate Spark Streaming with Flume (pull-based approach)

// val flumeStream = FlumeUtils.createStream(ssc, "localhost", 41414)

val flumeStream = FlumeUtils.createPollingStream(ssc, hostname, port.toInt)

flumeStream.map(x => new String(x.event.getBody.array()).trim)

.flatMap(_.split(" ")).map((_, 1)).reduceByKey(_ + _).print()

ssc.start()

ssc.awaitTermination()

}

}
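
The per-batch transformation in `FlumePullWC` can be tried without Flume or a cluster. The following is a minimal sketch on plain Scala collections, not the streaming job itself: `WordCountSketch`, `decode`, and `countWords` are hypothetical names introduced here, and decoding the body as UTF-8 is an assumption (the original code relies on the platform default charset).

```scala
import java.nio.ByteBuffer
import java.nio.charset.StandardCharsets

object WordCountSketch {

  // Decode an event body and trim the trailing line break.
  // UTF-8 is an assumption; the job above uses the platform default charset.
  def decode(body: ByteBuffer): String =
    StandardCharsets.UTF_8.decode(body.duplicate()).toString.trim

  // Same steps as the streaming job: split on spaces, pair each word
  // with 1, then sum the ones per word (what reduceByKey(_ + _) does).
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))
      .map((_, 1))
      .groupBy(_._1)
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) }

  def main(args: Array[String]): Unit = {
    // Two fake event bodies, as a telnet client would send them.
    val bodies = Seq("a b a\r\n", "b c\r\n")
      .map(s => ByteBuffer.wrap(s.getBytes(StandardCharsets.UTF_8)))
    countWords(bodies.map(decode)).toSeq.sortBy(_._1).foreach(println)
  }
}
```

Running `main` prints one `(word,count)` pair per line, which has the same shape as the output that `print()` shows for each 5-second batch.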

4 Build the code

mvn clean package -DskipTests

5 Start Flume

flume-ng agent \

--conf $FLUME_HOME/conf \

--conf-file $FLUME_HOME/conf/flume-pull-streaming.conf \

--name simple-agent \

-Dflume.root.logger=INFO,console

6 Submit the Spark job

spark-submit \

--class com.lihaogn.sparkFlume.FlumePullWC \

--master local[2] \

--jars /Users/Mac/software/spark-streaming-flume-assembly_2.11-2.2.0.jar \

/Users/Mac/my-lib/Kafka-train-1.0.jar \

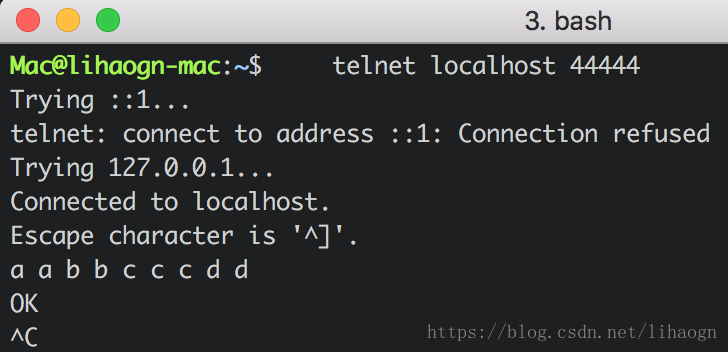

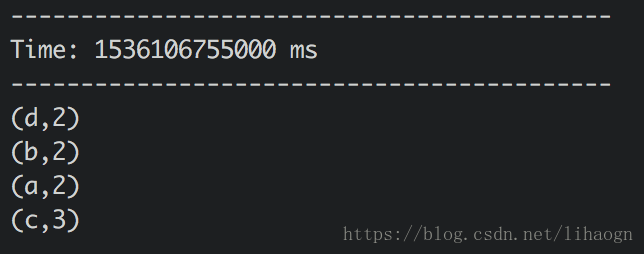

localhost 414147 启动telnet,查看结果

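This article shows how to integrate Spark Streaming with Flume for real-time log processing. The steps are: create the Flume configuration file, add the required dependencies, write the Scala code that collects and processes the logs, and finally start Flume and the Spark application.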
