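Background:

After Ma Ge (马哥) published an article about the dangerous security permissions of the Doubao phone:

A look at CAPTURE_SECURE_VIDEO_OUTPUT, a highly sensitive permission of the Doubao AI phone assistant

The day after, the official Doubao phone assistant team published a statement of its own. This article does not try to judge how dangerous these sensitive permissions are. As a framework (fw) system developer, I only want to look at them from a technical angle and dig into where and how the system uses them.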

Let's look at the READ_FRAME_BUFFER permission.


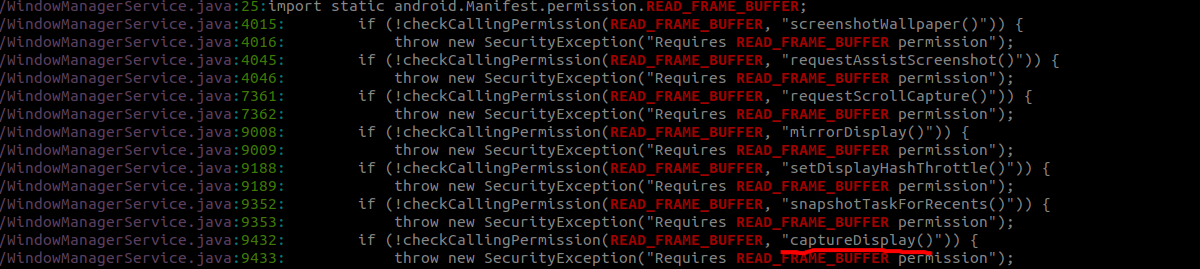

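Where READ_FRAME_BUFFER is used in the system

Searching the system for the places that check this permission gives the following:

The results show that the check mainly guards screenshot and screen-capture methods. Familiar examples are screenshotWallpaper and captureDisplay, both of which are screenshot-related APIs.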


Let's take captureDisplay as an example:

frameworks/base/services/core/java/com/android/server/wm/WindowManagerService.java

```java
@Override
public void captureDisplay(int displayId, @Nullable ScreenCapture.CaptureArgs captureArgs,
        ScreenCapture.ScreenCaptureListener listener) {
    Slog.d(TAG, "captureDisplay");
    // Check whether the caller holds READ_FRAME_BUFFER
    if (!checkCallingPermission(READ_FRAME_BUFFER, "captureDisplay()")) {
        throw new SecurityException("Requires READ_FRAME_BUFFER permission");
    }
    // Permission granted: build the layer capture arguments and start the capture
    ScreenCapture.LayerCaptureArgs layerCaptureArgs = getCaptureArgs(displayId, captureArgs);
    ScreenCapture.captureLayers(layerCaptureArgs, listener);

    if (Binder.getCallingUid() != SYSTEM_UID) {
        // Release the SurfaceControl objects only if the caller is not in system server as no
        // parcelling occurs in this case.
        layerCaptureArgs.release();
    }
}
```
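The following is a minimal C++ model of captureDisplay's control flow. It is not Android code. The `Caller` struct and `captureDisplayModel` are hypothetical, and `std::runtime_error` stands in for Java's `SecurityException`. The real WMS `checkCallingPermission` may apply extra caller-identity shortcuts that this sketch ignores. Only the order of operations comes from the source above: gate on the permission, start the capture, then release the args for non-system callers. `SYSTEM_UID` is 1000 on Android.

```cpp
#include <cassert>
#include <set>
#include <stdexcept>
#include <string>

// Hypothetical stand-ins, not Android APIs.
constexpr int kSystemUid = 1000;  // SYSTEM_UID on Android
const std::string kReadFrameBuffer = "android.permission.READ_FRAME_BUFFER";

struct Caller {
    int uid;
    std::set<std::string> grantedPermissions;  // simplified permission state
};

struct CaptureOutcome {
    bool captureStarted = false;
    bool argsReleased = false;
};

// Mirrors the order of operations in WindowManagerService#captureDisplay.
CaptureOutcome captureDisplayModel(const Caller& caller) {
    if (caller.grantedPermissions.count(kReadFrameBuffer) == 0) {
        // WMS throws SecurityException here; std::runtime_error stands in for it.
        throw std::runtime_error("Requires READ_FRAME_BUFFER permission");
    }
    CaptureOutcome outcome;
    outcome.captureStarted = true;  // stands in for ScreenCapture.captureLayers(...)
    if (caller.uid != kSystemUid) {
        outcome.argsReleased = true;  // stands in for layerCaptureArgs.release()
    }
    return outcome;
}
```

Note that the permission gate runs before any capture work is done. Callers inside system_server skip the release step because their arguments were never parcelled.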

Next, let's look at the source of captureLayers.

frameworks/base/core/java/android/window/ScreenCapture.java

```java
/**
 * @param captureArgs Arguments about how to take the screenshot
 * @param captureListener A listener to receive the screenshot callback
 * @hide
 */
public static int captureLayers(@NonNull LayerCaptureArgs captureArgs,
        @NonNull ScreenCaptureListener captureListener) {
    return nativeCaptureLayers(captureArgs, captureListener.mNativeObject, false /* sync */);
}
```

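captureArgs: wraps the capture parameters and is the core of the whole call. As the JNI code below shows, it carries the target layer and a childrenOnly flag. For captureDisplay, the target layer is the one that WMS's getCaptureArgs resolves from displayId. It also carries the general capture options that SurfaceFlinger reads later, such as the capture size, pixelFormat, dataspace, captureSecureLayers, allowProtected and grayscale.

captureListener: an asynchronous callback that receives the capture result. On success it gets the capture results containing the captured frame buffer; on failure it gets an error code.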
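Here is a minimal sketch of this contract. It uses simplified hypothetical types (`LayerCaptureArgsModel`, `ScreenCaptureListenerModel`, `requestCapture`) rather than the real framework classes. The point it shows is that the call itself returns no image: both success and failure arrive through the listener.

The single `frameScale` field and the fixed display size are illustrative assumptions. The error code -1 mirrors `PERMISSION_DENIED` (-EPERM), which SurfaceFlinger reports through `invokeScreenCaptureError` when its permission check fails.

```cpp
#include <cassert>
#include <functional>

// Hypothetical, simplified mirror of LayerCaptureArgs (not the real class).
struct LayerCaptureArgsModel {
    int layerHandle = 0;          // root layer to capture
    bool childrenOnly = false;    // capture only the layer's children
    bool captureSecureLayers = false;
    float frameScale = 1.0f;      // hypothetical single scale factor
};

// Hypothetical mirror of the result delivered to the listener.
struct ScreenCaptureResultModel {
    int width = 0;
    int height = 0;
};

// Hypothetical mirror of ScreenCaptureListener: one callback per outcome.
struct ScreenCaptureListenerModel {
    std::function<void(const ScreenCaptureResultModel&)> onCompleted;
    std::function<void(int)> onError;
};

constexpr int kPermissionDenied = -1;  // value of PERMISSION_DENIED (-EPERM)

// The caller gets nothing useful back from the call; the outcome arrives via the listener.
void requestCapture(const LayerCaptureArgsModel& args, bool callerHasReadFrameBuffer,
                    int displayWidth, int displayHeight,
                    const ScreenCaptureListenerModel& listener) {
    if (!callerHasReadFrameBuffer) {
        listener.onError(kPermissionDenied);
        return;
    }
    ScreenCaptureResultModel result;
    result.width = static_cast<int>(displayWidth * args.frameScale);
    result.height = static_cast<int>(displayHeight * args.frameScale);
    listener.onCompleted(result);
}
```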

frameworks/base/core/jni/android_window_ScreenCapture.cpp

```cpp
static jint nativeCaptureLayers(JNIEnv* env, jclass clazz, jobject layerCaptureArgsObject,
                                jlong screenCaptureListenerObject, jboolean sync) {
    LayerCaptureArgs layerCaptureArgs;
    getCaptureArgs(env, layerCaptureArgsObject, layerCaptureArgs.captureArgs);

    SurfaceControl* layer = reinterpret_cast<SurfaceControl*>(
            env->GetLongField(layerCaptureArgsObject, gLayerCaptureArgsClassInfo.layer));
    if (layer == nullptr) {
        return BAD_VALUE;
    }

    // Convert the Java-side fields into the native LayerCaptureArgs
    layerCaptureArgs.layerHandle = layer->getHandle();
    layerCaptureArgs.childrenOnly =
            env->GetBooleanField(layerCaptureArgsObject, gLayerCaptureArgsClassInfo.childrenOnly);

    sp<gui::IScreenCaptureListener> captureListener =
            reinterpret_cast<gui::IScreenCaptureListener*>(screenCaptureListenerObject);
    // Forward to ScreenshotClient::captureLayers
    return ScreenshotClient::captureLayers(layerCaptureArgs, captureListener, sync);
}
```
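The JNI function relies on a common pattern. The Java object stores the address of a native object in a `long` field, and native code casts it back and rejects null.

Below is a standalone sketch of that round trip. A hypothetical `NativeLayer` type replaces `SurfaceControl`, and `BAD_VALUE` is written out as -22, its value (-EINVAL) in Android's `utils/Errors.h`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical native object that a Java wrapper refers to by address.
struct NativeLayer {
    int handle;
};

constexpr int32_t kOk = 0;
constexpr int32_t kBadValue = -22;  // BAD_VALUE is -EINVAL in Android's utils/Errors.h

// Pack a native pointer into a 64-bit integer, as a Java `long` field would hold it.
int64_t toJavaLong(NativeLayer* layer) {
    return static_cast<int64_t>(reinterpret_cast<intptr_t>(layer));
}

// Mirrors the guard in nativeCaptureLayers: recover the pointer and reject null.
int32_t resolveLayerHandle(int64_t javaLongField, int* outHandle) {
    auto* layer = reinterpret_cast<NativeLayer*>(static_cast<intptr_t>(javaLongField));
    if (layer == nullptr) {
        return kBadValue;
    }
    *outHandle = layer->handle;
    return kOk;
}
```

Going through `intptr_t` keeps the cast portable. The real code casts the `jlong` directly, which works on the 64-bit targets it is built for.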

Now let's look at ScreenshotClient::captureLayers:

frameworks/native/libs/gui/SurfaceComposerClient.cpp

```cpp
status_t ScreenshotClient::captureLayers(const LayerCaptureArgs& captureArgs,
                                         const sp<IScreenCaptureListener>& captureListener,
                                         bool sync) {
    sp<gui::ISurfaceComposer> s(ComposerServiceAIDL::getComposerService());
    if (s == nullptr) return NO_INIT;

    binder::Status status;
    if (sync) {
        gui::ScreenCaptureResults captureResults;
        // Binder call into SurfaceFlinger (synchronous variant)
        status = s->captureLayersSync(captureArgs, &captureResults);
        captureListener->onScreenCaptureCompleted(captureResults);
    } else {
        status = s->captureLayers(captureArgs, captureListener);
    }
    return statusTFromBinderStatus(status);
}
```
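The two branches differ in who invokes the listener. In the sync path, the client calls `onScreenCaptureCompleted` itself after the blocking Binder call returns. In the async path, the listener is passed to SurfaceFlinger, which calls it later.

The sketch below models this with a hypothetical `FakeComposer` in place of `ISurfaceComposer` and records the order of events in a log. It is an illustration of the two paths, not the real Binder interface.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical simplified result and listener types.
struct Results { int status; };

struct Listener {
    std::vector<std::string>* log;
    void onScreenCaptureCompleted(const Results& r) {
        log->push_back("completed:" + std::to_string(r.status));
    }
};

// Hypothetical stand-in for the SurfaceFlinger side of the Binder interface.
struct FakeComposer {
    std::vector<std::string>* log;
    std::vector<Listener> pending;  // async requests waiting for rendering to finish

    int captureLayersSync(Results* out) {  // returns results through an out-parameter
        log->push_back("sync");
        out->status = 0;
        return 0;
    }
    int captureLayers(const Listener& l) {  // keeps the listener for later delivery
        log->push_back("async");
        pending.push_back(l);
        return 0;
    }
    void finishPending() {  // simulates rendering completing
        for (auto& l : pending) l.onScreenCaptureCompleted(Results{0});
        pending.clear();
    }
};

// Same branch structure as ScreenshotClient::captureLayers.
int captureLayersClient(FakeComposer& sf, Listener listener, bool sync) {
    int status;
    if (sync) {
        Results results{};
        status = sf.captureLayersSync(&results);
        listener.onScreenCaptureCompleted(results);  // the client invokes the callback itself
    } else {
        status = sf.captureLayers(listener);         // the "server" invokes it later
    }
    return status;
}
```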

The captureLayers method on the SurfaceFlinger side:

```cpp
void SurfaceFlinger::captureLayers(const LayerCaptureArgs& args,
                                   const sp<IScreenCaptureListener>& captureListener) {
    SFTRACE_CALL();

    const auto& captureArgs = args.captureArgs;
    // READ_FRAME_BUFFER is checked again here, inside SurfaceFlinger
    status_t validate = validateScreenshotPermissions(captureArgs);
    if (validate != OK) {
        ALOGD("Permission denied to captureLayers");
        invokeScreenCaptureError(validate, captureListener);
        return;
    }

    // ... (omitted)

    ScreenshotArgs screenshotArgs;
    screenshotArgs.captureTypeVariant = parent->getSequence();
    screenshotArgs.childrenOnly = args.childrenOnly;
    screenshotArgs.sourceCrop = crop;
    screenshotArgs.reqSize = reqSize;
    screenshotArgs.dataspace = static_cast<ui::Dataspace>(captureArgs.dataspace);
    screenshotArgs.isSecure = captureArgs.captureSecureLayers;
    screenshotArgs.seamlessTransition = captureArgs.hintForSeamlessTransition;

    // Hand the prepared arguments to captureScreenCommon
    captureScreenCommon(screenshotArgs, getLayerSnapshotsFn, reqSize,
                        static_cast<ui::PixelFormat>(captureArgs.pixelFormat),
                        captureArgs.allowProtected, captureArgs.grayscale, captureListener);
}
```

In short, the method checks the permission first and then calls captureScreenCommon.

First, the validateScreenshotPermissions check:

```cpp
static status_t validateScreenshotPermissions(const CaptureArgs& captureArgs) {
    IPCThreadState* ipc = IPCThreadState::self();
    const int pid = ipc->getCallingPid();
    const int uid = ipc->getCallingUid();
    // The graphics and system UIDs always pass; everyone else needs READ_FRAME_BUFFER
    if (uid == AID_GRAPHICS || uid == AID_SYSTEM ||
        PermissionCache::checkPermission(sReadFramebuffer, pid, uid)) {
        return OK;
    }
    ALOGE("Permission Denial: can't take screenshot pid=%d, uid=%d", pid, uid);
    return PERMISSION_DENIED;
}
```
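The check short-circuits. Callers running as AID_GRAPHICS (1003) or AID_SYSTEM (1000) never reach the permission lookup, and every other caller is decided by `PermissionCache`.

Below is a minimal model of that decision. `PermissionTable` and its per-uid memo are hypothetical and only loosely imitate the caching idea. The calling pid is left out. The return codes use the values of `OK` (0) and `PERMISSION_DENIED` (-EPERM, i.e. -1).

```cpp
#include <cassert>
#include <map>
#include <set>

// Fixed Android UIDs (android_filesystem_config.h).
constexpr int AID_SYSTEM = 1000;
constexpr int AID_GRAPHICS = 1003;

constexpr int OK = 0;
constexpr int PERMISSION_DENIED = -1;  // -EPERM

// Hypothetical stand-in for the permission service plus a per-uid memo.
struct PermissionTable {
    std::set<int> uidsWithReadFrameBuffer;
    mutable int lookups = 0;              // how often the "service" was actually asked
    mutable std::map<int, bool> cache;    // memoized answers, keyed by uid

    bool check(int uid) const {
        auto it = cache.find(uid);
        if (it != cache.end()) return it->second;
        ++lookups;
        bool granted = uidsWithReadFrameBuffer.count(uid) > 0;
        cache[uid] = granted;
        return granted;
    }
};

// Same condition as validateScreenshotPermissions; || stops at the first true operand.
int validateScreenshotPermissionsModel(int uid, const PermissionTable& perms) {
    if (uid == AID_GRAPHICS || uid == AID_SYSTEM || perms.check(uid)) {
        return OK;
    }
    return PERMISSION_DENIED;
}
```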

Next, captureScreenCommon:

```cpp
void SurfaceFlinger::captureScreenCommon(ScreenshotArgs& args,
                                         GetLayerSnapshotsFunction getLayerSnapshotsFn,
                                         ui::Size bufferSize, ui::PixelFormat reqPixelFormat,
                                         bool allowProtected, bool grayscale,
                                         const sp<IScreenCaptureListener>& captureListener) {
    // ... (omitted)

    // Call captureScreenshot, which returns a future fence, and block on it here
    auto futureFence =
            captureScreenshot(args, texture, false /* regionSampling */, grayscale, isProtected,
                              captureListener, layers, hdrTexture, gainmapTexture);
    futureFence.get();
}
```

Now captureScreenshot:

```cpp
ftl::SharedFuture<FenceResult> SurfaceFlinger::captureScreenshot(
        ScreenshotArgs& args, const std::shared_ptr<renderengine::ExternalTexture>& buffer,
        bool regionSampling, bool grayscale, bool isProtected,
        const sp<IScreenCaptureListener>& captureListener,
        const std::vector<std::pair<Layer*, sp<LayerFE>>>& layers,
        const std::shared_ptr<renderengine::ExternalTexture>& hdrBuffer,
        const std::shared_ptr<renderengine::ExternalTexture>& gainmapBuffer) {
    SFTRACE_CALL();

    ScreenCaptureResults captureResults;
    ftl::SharedFuture<FenceResult> renderFuture;

    float hdrSdrRatio = args.displayBrightnessNits / args.sdrWhitePointNits;

    if (hdrBuffer && gainmapBuffer) {
        // ... (omitted)
    } else {
        // Common path: render through renderScreenImpl
        renderFuture = renderScreenImpl(args, buffer, regionSampling, grayscale, isProtected,
                                        captureResults, layers);
    }

    if (captureListener) {
        // Defer blocking on renderFuture back to the Binder thread.
        // Once rendering completes, deliver the results to captureListener
        return ftl::Future(std::move(renderFuture))
                .then([captureListener, captureResults = std::move(captureResults),
                       hdrSdrRatio](FenceResult fenceResult) mutable -> FenceResult {
                    captureResults.fenceResult = std::move(fenceResult);
                    captureResults.hdrSdrRatio = hdrSdrRatio;
                    captureListener->onScreenCaptureCompleted(captureResults);
                    return base::unexpected(NO_ERROR);
                })
                .share();
    }
    return renderFuture;
}
```
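The `then` continuation invokes the listener only when the render future resolves. The returned future no longer carries the fence (it returns `base::unexpected(NO_ERROR)`), because the fence has already been handed to the listener.

The sketch below imitates that chaining with standard C++ only. It uses `std::launch::deferred`, so the continuation runs on whichever thread calls `get()`, like `futureFence.get()` in captureScreenCommon. `FenceResultModel`, `CaptureResultsModel` and `captureScreenshotModel` are hypothetical. The sketch makes no claim about how `ftl::Future::then` is implemented.

```cpp
#include <cassert>
#include <functional>
#include <future>

// Hypothetical simplified types (the real FenceResult wraps an sp<Fence> or a status).
struct FenceResultModel { bool hasFence; int fenceFd; };
struct CaptureResultsModel {
    FenceResultModel fenceResult{false, -1};
    float hdrSdrRatio = 1.0f;
};

using Listener = std::function<void(const CaptureResultsModel&)>;

std::shared_future<FenceResultModel> captureScreenshotModel(
        std::shared_future<FenceResultModel> renderFuture, float displayBrightnessNits,
        float sdrWhitePointNits, Listener listener) {
    const float hdrSdrRatio = displayBrightnessNits / sdrWhitePointNits;
    if (!listener) {
        return renderFuture;  // no listener: the caller gets the render fence directly
    }
    // Deferred: nothing runs until someone calls get() on the returned future.
    return std::async(std::launch::deferred,
                      [renderFuture, listener, hdrSdrRatio]() {
                          CaptureResultsModel results;
                          results.fenceResult = renderFuture.get();  // wait for rendering
                          results.hdrSdrRatio = hdrSdrRatio;
                          listener(results);
                          // The fence went to the listener; report "no fence" onward.
                          return FenceResultModel{false, -1};
                      })
            .share();
}
```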

Now renderScreenImpl:

```cpp
ftl::SharedFuture<FenceResult> SurfaceFlinger::renderScreenImpl(
        ScreenshotArgs& args, const std::shared_ptr<renderengine::ExternalTexture>& buffer,
        bool regionSampling, bool grayscale, bool isProtected, ScreenCaptureResults& captureResults,
        const std::vector<std::pair<Layer*, sp<LayerFE>>>& layers) {
    // ... (partially omitted)

    // Build the `present` lambda that composites the layers into the screenshot buffer
    auto present = [this, buffer = capturedBuffer, dataspace = captureResults.capturedDataspace,
                    grayscale, isProtected, layers, layerStack, regionSampling, args, renderIntent,
                    enableLocalTonemapping]() -> FenceResult {
        std::unique_ptr<compositionengine::CompositionEngine> compositionEngine =
                mFactory.createCompositionEngine();
        compositionEngine->setRenderEngine(mRenderEngine.get());
        compositionEngine->setHwComposer(mHWComposer.get());

        std::vector<sp<compositionengine::LayerFE>> layerFEs;
        layerFEs.reserve(layers.size());
        for (auto& [layer, layerFE] : layers) {
            // Release fences were not yet added for non-threaded render engine. To avoid
            // deadlocks between main thread and binder threads waiting for the future fence
            // result, fences should be added to layers in the same hop onto the main thread.
            if (!mRenderEngine->isThreaded()) {
                attachReleaseFenceFutureToLayer(layer, layerFE.get(), ui::INVALID_LAYER_STACK);
            }
            layerFEs.push_back(layerFE);
        }

        compositionengine::Output::ColorProfile colorProfile{.dataspace = dataspace,
                                                             .renderIntent = renderIntent};

        float targetBrightness = 1.0f;
        if (enableLocalTonemapping) {
            // Boost the whole scene so that SDR white is at 1.0 while still communicating the hdr
            // sdr ratio via display brightness / sdrWhite nits.
            targetBrightness = args.sdrWhitePointNits / args.displayBrightnessNits;
        } else if (dataspace == ui::Dataspace::BT2020_HLG) {
            const float maxBrightnessNits =
                    args.displayBrightnessNits / args.sdrWhitePointNits * 203;
            // With a low dimming ratio, don't fit the entire curve. Otherwise mixed content
            // will appear way too bright.
            if (maxBrightnessNits < 1000.f) {
                targetBrightness = 1000.f / maxBrightnessNits;
            }
        }

        // Capturing screenshots using layers have a clear capture fill (0 alpha).
        // Capturing via display or displayId, which do not use args.layerSequence,
        // has an opaque capture fill (1 alpha).
        const float layerAlpha =
                std::holds_alternative<int32_t>(args.captureTypeVariant) ? 0.0f : 1.0f;

        // Screenshots leaving the device must not dim in gamma space.
        const bool dimInGammaSpaceForEnhancedScreenshots =
                mDimInGammaSpaceForEnhancedScreenshots && args.seamlessTransition;

        std::shared_ptr<ScreenCaptureOutput> output = createScreenCaptureOutput(
                ScreenCaptureOutputArgs{.compositionEngine = *compositionEngine,
                                        .colorProfile = colorProfile,
                                        .layerStack = layerStack,
                                        .sourceCrop = args.sourceCrop,
                                        .buffer = std::move(buffer),
                                        .displayIdVariant = args.displayIdVariant,
                                        .reqBufferSize = args.reqSize,
                                        .sdrWhitePointNits = args.sdrWhitePointNits,
                                        .displayBrightnessNits = args.displayBrightnessNits,
                                        .targetBrightness = targetBrightness,
                                        .layerAlpha = layerAlpha,
                                        .regionSampling = regionSampling,
                                        .treat170mAsSrgb = mTreat170mAsSrgb,
                                        .dimInGammaSpaceForEnhancedScreenshots =
                                                dimInGammaSpaceForEnhancedScreenshots,
                                        .isSecure = args.isSecure,
                                        .isProtected = isProtected,
                                        .enableLocalTonemapping = enableLocalTonemapping});

        const float colorSaturation = grayscale ? 0 : 1;
        // Prepare the CompositionRefreshArgs
        compositionengine::CompositionRefreshArgs refreshArgs{
                .outputs = {output},
                .layers = std::move(layerFEs),
                .updatingOutputGeometryThisFrame = true,
                .updatingGeometryThisFrame = true,
                .colorTransformMatrix = calculateColorMatrix(colorSaturation),
        };
        // Run composition into the capture buffer
        compositionEngine->present(refreshArgs);

        return output->getRenderSurface()->getClientTargetAcquireFence();
    };

    // If RenderEngine is threaded, we can safely call CompositionEngine::present off the main
    // thread as the RenderEngine::drawLayers call will run on RenderEngine's thread. Otherwise,
    // we need RenderEngine to run on the main thread so we call CompositionEngine::present
    // immediately.
    //
    // TODO(b/196334700) Once we use RenderEngineThreaded everywhere we can always defer the call
    // to CompositionEngine::present.
    ftl::SharedFuture<FenceResult> presentFuture = mRenderEngine->isThreaded()
            ? ftl::yield(present()).share()
            : mScheduler->schedule(std::move(present)).share();

    return presentFuture;
}
```
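Two of the small computations inside `present` are easy to check in isolation. The first is the target brightness: local tone mapping versus the BT2020_HLG branch, which uses 203 nits as the reference white. The second is the fill alpha, chosen from the capture type.

The functions below copy that branch logic into standalone C++17. `DataspaceModel` and `DisplayIdModel` are hypothetical stand-ins for `ui::Dataspace` and the display-ID alternative of `captureTypeVariant`.

As a worked example, take a 500-nit display with a 250-nit SDR white point. The HLG branch gives 500 / 250 * 203 = 406 nits. That is below 1000, so the target brightness becomes 1000 / 406, about 2.46.

```cpp
#include <cassert>
#include <cstdint>
#include <variant>

// Hypothetical stand-in for ui::Dataspace; only this distinction matters below.
enum class DataspaceModel { SRGB, BT2020_HLG };

// Same branch structure as the targetBrightness computation in renderScreenImpl.
float targetBrightnessFor(bool enableLocalTonemapping, DataspaceModel dataspace,
                          float displayBrightnessNits, float sdrWhitePointNits) {
    float targetBrightness = 1.0f;
    if (enableLocalTonemapping) {
        targetBrightness = sdrWhitePointNits / displayBrightnessNits;
    } else if (dataspace == DataspaceModel::BT2020_HLG) {
        // 203 nits: the HLG reference white level used by the source
        const float maxBrightnessNits = displayBrightnessNits / sdrWhitePointNits * 203;
        if (maxBrightnessNits < 1000.f) {
            targetBrightness = 1000.f / maxBrightnessNits;
        }
    }
    return targetBrightness;
}

// Layer captures store an int32_t layer sequence in the variant and get a clear fill;
// any other capture type (display captures) gets an opaque fill.
struct DisplayIdModel { uint64_t value; };  // hypothetical display-id alternative
using CaptureTypeVariant = std::variant<int32_t, DisplayIdModel>;

float layerAlphaFor(const CaptureTypeVariant& captureType) {
    return std::holds_alternative<int32_t>(captureType) ? 0.0f : 1.0f;
}
```

The alpha rule matters for captureLayers in particular. Because that path sets `captureTypeVariant` from `parent->getSequence()`, its captures start from a transparent background.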

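This method is fairly long, and it is also analyzed in Ma Ge's SurfaceFlinger course. Roughly, it does the following:

1. Collects metadata about the layers to be rendered (secure/HDR flags).
2. Adapts color and brightness parameters, so the capture stays consistent with what is displayed.
3. Composites the layers into the target buffer through CompositionEngine.
4. Schedules the rendering work according to the threading model and returns a fence.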
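Step 4 is the ternary at the end of renderScreenImpl. With a threaded RenderEngine, `present()` runs right away on the calling thread and its result is wrapped in a ready future. Otherwise, `present` is posted to the main thread through `mScheduler->schedule`.

Below is a minimal model. `MainThreadQueue` is a hypothetical single-threaded stand-in for the scheduler, and an `int` stands in for the fence.

```cpp
#include <cassert>
#include <chrono>
#include <deque>
#include <functional>
#include <future>
#include <memory>

// Hypothetical "main thread": tasks wait in a queue until drain() runs them.
class MainThreadQueue {
public:
    std::shared_future<int> schedule(std::function<int()> task) {
        auto promise = std::make_shared<std::promise<int>>();
        std::shared_future<int> future = promise->get_future().share();
        tasks_.push_back([promise, task]() { promise->set_value(task()); });
        return future;
    }
    void drain() {
        while (!tasks_.empty()) {
            auto next = std::move(tasks_.front());
            tasks_.pop_front();
            next();
        }
    }

private:
    std::deque<std::function<void()>> tasks_;
};

// Threaded engine: run present now. Otherwise: post it to the main thread.
std::shared_future<int> dispatchPresent(bool renderEngineIsThreaded, MainThreadQueue& mainThread,
                                        std::function<int()> present) {
    if (renderEngineIsThreaded) {
        std::promise<int> ready;
        ready.set_value(present());
        return ready.get_future().share();
    }
    return mainThread.schedule(std::move(present));
}

bool isReady(const std::shared_future<int>& f) {
    return f.wait_for(std::chrono::seconds(0)) == std::future_status::ready;
}
```

In the non-threaded case, the future stays pending until the main thread gets to the task. This is why the source attaches release fences on that same main-thread hop, to avoid the deadlock described in its comment.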

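This article has walked through where READ_FRAME_BUFFER is used. Overall, the picture is essentially the same as what the official Doubao assistant statement said.

READ_FRAME_BUFFER is a system-level Android permission that requires the system signature, so ordinary third-party apps cannot obtain it. As the code above shows, it is checked twice: once in WindowManagerService and again in SurfaceFlinger.

What the permission actually allows is calling SurfaceFlinger's capture interfaces. SurfaceFlinger then uses the GPU, through CompositionEngine, to composite the corresponding layers into a screenshot buffer. It is not at all the unrestricted, direct read of GPU contents that some posts online claim.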

One question is left for you to think about:

Can READ_FRAME_BUFFER really capture only ordinary layers, or can it also capture the layers of secure windows?

Original article:

https://mp.weixin.qq.com/s/QZuZrQkLgbIouz9BrVZ66Q

For more hands-on Android framework development content, follow "千里马学框架".
