20220624

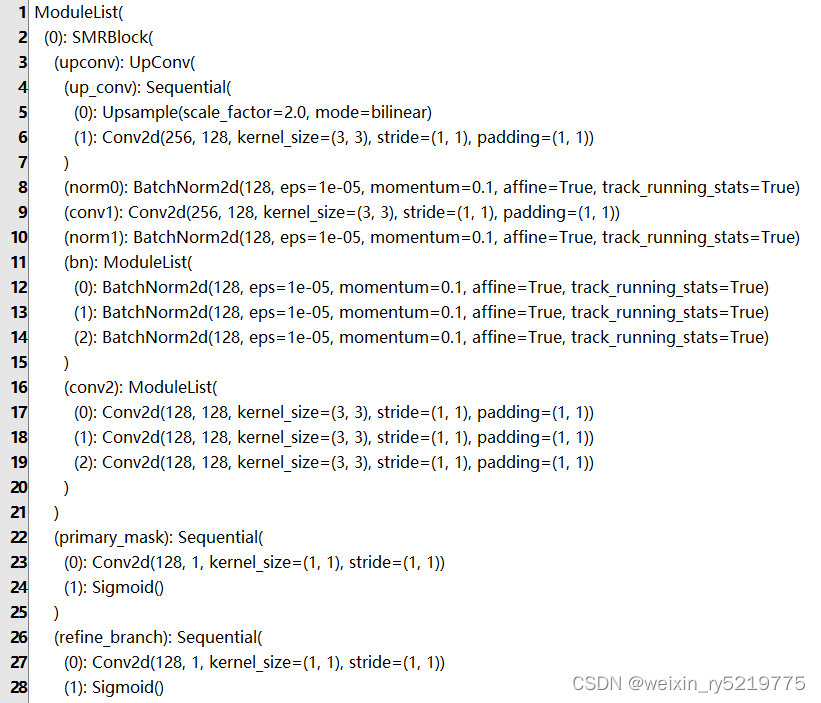

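The printed structure is exactly the set of modules defined in the `__init__` method, listed in the order they are defined there; it does not reflect the call order in `forward`.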
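
A minimal sketch of the point above, assuming PyTorch is installed. `TinyNet` and its layer names are hypothetical and exist only for illustration; the sketch registers two layers in one order and calls them in the opposite order, to show that the printout follows `__init__`.

```python
import torch.nn as nn


class TinyNet(nn.Module):  # hypothetical model, for illustration only
    def __init__(self):
        super().__init__()
        # Registration order: head first, then body.
        self.head = nn.Linear(8, 2)
        self.body = nn.Linear(4, 8)

    def forward(self, x):
        # Call order is the reverse of the registration order.
        return self.head(self.body(x))


model = TinyNet()
print(model)  # lists (head) before (body), following __init__

# named_children() yields submodules in the same registration order.
child_names = [name for name, _ in model.named_children()]
```

So a printout like this is useful for checking which layers exist and how they are configured, but the data flow still has to be read from `forward`.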

20220609

Inspecting the network structure

20210614

https://blog.youkuaiyun.com/kyle1314608/article/details/117910862

Dropout vs. batch norm: differences and ordering

https://blog.youkuaiyun.com/hexuanji/article/details/103635335

How should Batch Normalization and Dropout be used together?

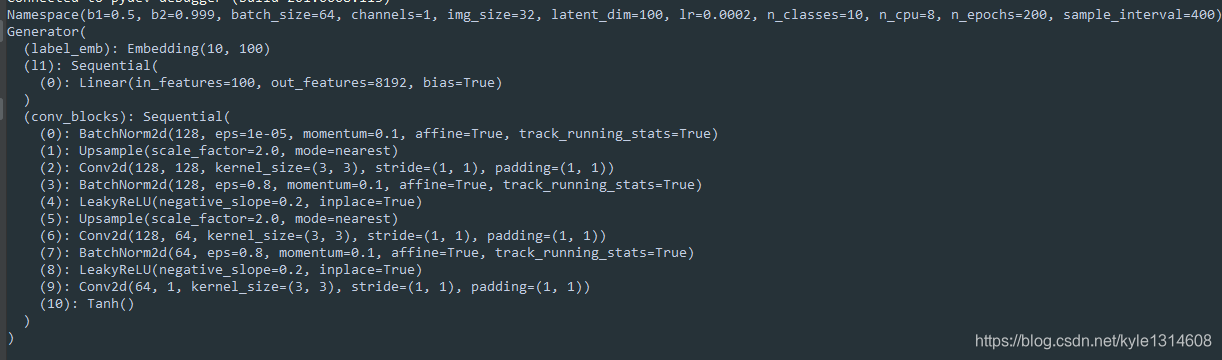

GAN (generative adversarial network): generator

    Generator(
      (label_emb): Embedding(10, 100)
      (l1): Sequential(
        (0): Linear(in_features=100, out_features=8192, bias=True)
      )
      (conv_blocks): Sequential(
        (0): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (1): Upsample(scale_factor=2.0, mode=nearest)
        (2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (3): BatchNorm2d(128, eps=0.8, momentum=0.1, affine=True, track_running_stats=True)
        (4): LeakyReLU(negative_slope=0.2, inplace=True)
        (5): Upsample(scale_factor=2.0, mode=nearest)
        (6): Conv2d(128, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (7): BatchNorm2d(64, eps=0.8, momentum=0.1, affine=True, track_running_stats=True)
        (8): LeakyReLU(negative_slope=0.2, inplace=True)
        (9): Conv2d(64, 1, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
        (10): Tanh()
      )
    )
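
A side note on the `eps=0.8` entries in the printout: the second positional argument of `nn.BatchNorm2d` is `eps`, not `momentum`, so a call such as `nn.BatchNorm2d(128, 0.8)` would print exactly this way. Whether the model code was actually written like that is an assumption, since its source is not shown in these notes. The sketch below, assuming PyTorch is installed, only reproduces the printed line and contrasts it with the keyword form.

```python
import torch.nn as nn

# Signature: BatchNorm2d(num_features, eps=1e-05, momentum=0.1, ...)
# A bare second positional argument therefore sets eps.
bn_positional = nn.BatchNorm2d(128, 0.8)
print(bn_positional)

# Passing momentum by keyword leaves eps at its default value.
bn_keyword = nn.BatchNorm2d(128, momentum=0.8)
print(bn_keyword)
```

If a momentum of 0.8 was the intent, the keyword form is the one that expresses it; with `eps=0.8` the normalization divides by `sqrt(var + 0.8)`, which damps the output scale noticeably.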

    Discriminator(
      (conv_blocks): Sequential(
        (0): Conv2d(1, 16, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (1): LeakyReLU(negative_slope=0.2, inplace=True)
        (2): Dropout2d(p=0.25, inplace=False)
        (3): Conv2d(16, 32, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (4): LeakyReLU(negative_slope=0.2, inplace=True)
        (5): Dropout2d(p=0.25, inplace=False)
        (6): BatchNorm2d(32, eps=0.8, momentum=0.1, affine=True, track_running_stats=True)
        (7): Conv2d(32, 64, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (8): LeakyReLU(negative_slope=0.2, inplace=True)
        (9): Dropout2d(p=0.25, inplace=False)
        (10): BatchNorm2d(64, eps=0.8, momentum=0.1, affine=True, track_running_stats=True)
        (11): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
        (12): LeakyReLU(negative_slope=0.2, inplace=True)
        (13): Dropout2d(p=0.25, inplace=False)
        (14): BatchNorm2d(128, eps=0.8, momentum=0.1, affine=True, track_running_stats=True)
      )
      (adv_layer): Sequential(
        (0): Linear(in_features=512, out_features=1, bias=True)
        (1): Sigmoid()
      )
      (aux_layer): Sequential(
        (0): Linear(in_features=512, out_features=10, bias=True)
        (1): Softmax(dim=None)
      )
    )
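
The layer sizes in the two printouts can be checked with a little arithmetic. The sketch below uses the standard Conv2d output-size formula and only the kernel, stride, padding and scale values printed above. Two things are assumptions, because the `forward` code is not shown: that the 8192 outputs of `l1` are reshaped to 128 channels of 8×8 (`init_size = 8`), and that the discriminator receives the generator's square output.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Side length after a square Conv2d: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


# Generator: Linear(100 -> 8192), assumed to be reshaped to (128, 8, 8).
init_size = 8  # assumption: 8192 = 128 * 8 * 8
linear_out = 128 * init_size * init_size

side = init_size
side *= 2              # (1) Upsample, scale_factor=2
side = conv_out(side)  # (2) Conv2d 128 -> 128, stride 1 keeps the size
side *= 2              # (5) Upsample, scale_factor=2
side = conv_out(side)  # (6) Conv2d 128 -> 64
side = conv_out(side)  # (9) Conv2d 64 -> 1
gen_side = side        # 32, i.e. a 1 x 32 x 32 image

# Discriminator: four stride-2 convolutions, each halving the side length.
side = gen_side
for _ in range(4):
    side = conv_out(side, stride=2)
disc_side = side                             # 2
flat_features = 128 * disc_side * disc_side  # 512

print(linear_out, gen_side, disc_side, flat_features)
```

Under these assumptions the numbers line up with the printout: 128 channels of 2×2 give the `in_features=512` seen in `adv_layer` and `aux_layer`.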

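Generator and discriminator

Why do some layers use dropout while others use batch norm? Is it because the images become more complex?

Is the network structure above flawed? When BN is present, dropout should be avoided, or used only once right before the softmax.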
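
One commonly cited reason for this advice is that dropout changes the variance of its output between training and inference, so a BN layer placed after it collects running statistics that no longer match what it sees at inference. The sketch below is a pure-Python illustration under simplifying assumptions: standard-normal inputs, element-wise inverted dropout with `p = 0.25` (the `Dropout2d` layers above drop whole channels, but use the same `1 / (1 - p)` rescaling), and a hypothetical helper `variance`.

```python
import random

random.seed(0)
p = 0.25       # drop probability, as in the Dropout2d layers above
n = 100_000
x = [random.gauss(0.0, 1.0) for _ in range(n)]


def variance(values):
    """Population variance of a list of floats."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


# Training mode: zero each value with probability p, rescale the rest by 1/(1-p).
train_out = [0.0 if random.random() < p else v / (1 - p) for v in x]
# Inference mode: dropout is the identity.
eval_out = x

train_var = variance(train_out)  # close to 1 / (1 - p) for zero-mean inputs
eval_var = variance(eval_out)    # close to 1

print(train_var, eval_var)
```

For zero-mean, unit-variance inputs the training-time variance is about `1 / (1 - p)`, roughly 1.33 here, while the inference-time variance stays near 1. A BN layer that follows the dropout would normalize with the larger training-time estimate, which is the mismatch the note is worried about; keeping dropout only before the final classifier avoids feeding that shift into any BN layer.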
