Cluster setup

Follow the same steps as for the standalone deployment.

Three hosts:

192.168.243.137

192.168.243.138

192.168.243.139

On each host, unpack the ZooKeeper archive and copy the zoo.cfg file.

Add the following lines to every zoo.cfg:

server.1 = 192.168.243.137:2888:3888

server.2 = 192.168.243.138:2888:3888

server.3 = 192.168.243.139:2888:3888

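In addition, create a file named myid in the directory configured by dataDir. Write this server's ID into it: 1 on 192.168.243.137, 2 on 192.168.243.138 and 3 on 192.168.243.139. The ID must match the N in that host's server.N line.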

2888 is the port used for data synchronization (followers connect to the leader on it).

3888 is the port used for leader election.
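To make the mapping between server.N, myid and the two ports concrete, here is a small parser for the server lines above. ServerEntry is a hypothetical helper, not a ZooKeeper class. It assumes the simple IPv4 host:port:port form used in this article and ignores every other zoo.cfg option.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical helper (not part of ZooKeeper): parses zoo.cfg lines of the form
// "server.N=host:quorumPort:electionPort" and tells which myid a host must use.
// Assumes the simple IPv4 form used in this article.
class ServerEntry {
    final int id;           // N in server.N; must equal the content of that host's myid file
    final String host;
    final int quorumPort;   // 2888 here: followers connect to the leader on this port
    final int electionPort; // 3888 here: used for leader election

    ServerEntry(int id, String host, int quorumPort, int electionPort) {
        this.id = id;
        this.host = host;
        this.quorumPort = quorumPort;
        this.electionPort = electionPort;
    }

    // Parses e.g. "server.1 = 192.168.243.137:2888:3888"; spaces around '=' are tolerated.
    static ServerEntry parse(String line) {
        String[] kv = line.split("=", 2);
        String key = kv[0].trim();               // "server.1"
        String[] addr = kv[1].trim().split(":"); // host, quorum port, election port
        int id = Integer.parseInt(key.substring("server.".length()));
        return new ServerEntry(id, addr[0], Integer.parseInt(addr[1]), Integer.parseInt(addr[2]));
    }

    // Value to write into <dataDir>/myid on the given host, or -1 if the host is not listed.
    static int myidFor(List<String> lines, String host) {
        for (String line : lines) {
            ServerEntry e = parse(line);
            if (e.host.equals(host)) {
                return e.id;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> cfg = Arrays.asList(
                "server.1=192.168.243.137:2888:3888",
                "server.2=192.168.243.138:2888:3888",
                "server.3=192.168.243.139:2888:3888");
        for (String line : cfg) {
            ServerEntry e = parse(line);
            System.out.println(e.host + " -> myid " + e.id);
        }
    }
}
```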

Start the three nodes one by one. The ZooKeeper ensemble elects a leader by itself.
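Leader election, and committing writes, only succeeds while a strict majority of the servers listed in zoo.cfg are reachable. For the three hosts above that means 2 of 3, so the ensemble survives one failed server. The helper below is purely illustrative (Quorum is not a ZooKeeper API) and only does the arithmetic.

```java
// Majority-quorum arithmetic for an ensemble of n servers (illustrative helper only).
class Quorum {
    // Smallest number of servers that forms a strict majority of n.
    static int quorumSize(int n) {
        return n / 2 + 1;
    }

    // How many servers may fail while the rest can still form a quorum and elect a leader.
    static int toleratedFailures(int n) {
        return n - quorumSize(n);
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 5; n++) {
            System.out.println(n + " servers: quorum=" + quorumSize(n)
                    + ", tolerated failures=" + toleratedFailures(n));
        }
    }
}
```

A 4-server ensemble tolerates no more failures than a 3-server one (1 each). This is why odd ensemble sizes are the usual choice.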

Using a client

Native client

Curator

Add the dependencies

<dependency><!-- basic features: create, read, update and delete nodes... -->
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-framework</artifactId>
    <version>4.2.0</version>
</dependency>
<dependency><!-- recipes: distributed locks, leader election, queues... -->
    <groupId>org.apache.curator</groupId>
    <artifactId>curator-recipes</artifactId>
    <version>4.2.0</version>
</dependency>

Create a connection

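import org.apache.curator.framework.CuratorFramework;
import org.apache.curator.framework.CuratorFrameworkFactory;
import org.apache.curator.retry.ExponentialBackoffRetry;

CuratorFramework curatorClient = CuratorFrameworkFactory.builder()
        // ZooKeeper address; to use the whole ensemble, list all hosts comma-separated,
        // e.g. "192.168.243.137:2181,192.168.243.138:2181,192.168.243.139:2181"
        .connectString("192.168.243.137:2181")
        .sessionTimeoutMs(5000)          // session timeout
        // retry policy; Curator offers several, e.g. ExponentialBackoffRetry (exponential backoff, used here),
        // RetryUntilElapsed (retry until a maximum total time), RetryNTimes (at most N retries),
        // RetryForever (retry indefinitely)
        .retryPolicy(new ExponentialBackoffRetry(1000, 3))
        .connectionTimeoutMs(4000)       // connection timeout
        .namespace("zookeeper/mynode")   // namespace: every path used by this client is relative to it
        .build();
curatorClient.start();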
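The retry policy above is configured as new ExponentialBackoffRetry(1000, 3). As I understand Curator's implementation, each retry sleeps baseSleepTimeMs multiplied by a random factor whose upper bound roughly doubles with every attempt, and at most maxRetries retries are made. The class below is a hypothetical, JDK-only model of that behaviour for estimating worst-case waits. BackoffModel is not a Curator type.

```java
import java.util.Random;

// Simplified model of exponential backoff, written to illustrate new ExponentialBackoffRetry(1000, 3).
// This is NOT Curator's class; BackoffModel and its methods are hypothetical.
class BackoffModel {
    final int baseSleepMs;
    final int maxRetries;
    private final Random random;

    BackoffModel(int baseSleepMs, int maxRetries, long seed) {
        this.baseSleepMs = baseSleepMs;
        this.maxRetries = maxRetries;
        this.random = new Random(seed);
    }

    // retryCount is 0 for the first retry; no further retry once maxRetries have been used.
    boolean allowRetry(int retryCount) {
        return retryCount < maxRetries;
    }

    // Random sleep in [baseSleepMs, maxSleepMs(retryCount)]; the window doubles with each retry.
    long sleepMs(int retryCount) {
        int factor = Math.max(1, random.nextInt(1 << (retryCount + 1)));
        return (long) baseSleepMs * factor;
    }

    // Upper bound of the sleep window: baseSleepMs * (2^(retryCount+1) - 1).
    long maxSleepMs(int retryCount) {
        return (long) baseSleepMs * ((1L << (retryCount + 1)) - 1);
    }

    public static void main(String[] args) {
        BackoffModel policy = new BackoffModel(1000, 3, 42L);
        for (int retry = 0; policy.allowRetry(retry); retry++) {
            System.out.println("retry " + retry + ": sleep " + policy.sleepMs(retry)
                    + " ms (max " + policy.maxSleepMs(retry) + " ms)");
        }
    }
}
```

Under this model, (1000, 3) gives sleeps of at most 1, 3 and 7 seconds. A client could therefore spend up to about 11 seconds retrying before an operation finally fails.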

Create a node

public static String create(CuratorFramework client) throws Exception {
    String path = client.create()
            .creatingParentsIfNeeded()                 // also create missing parent nodes
            .withMode(CreateMode.PERSISTENT)           // node type: persistent / ephemeral / sequential variants
            .forPath("/first", "hello GP".getBytes()); // node path, node data
    System.out.println("=========node created: " + path);
    return path;
}
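Because the client was built with namespace("zookeeper/mynode"), Curator treats "/first" as relative to the namespace. As far as I know, the node is stored on the server at /zookeeper/mynode/first, which matters when you look for it with zkCli.sh. The tiny helper below reproduces that prefixing. It is hypothetical and is not Curator's ZKPaths API.

```java
// Illustrative only: how a client-side namespace prefixes every path.
// NamespacePaths is a hypothetical helper, not part of Curator.
class NamespacePaths {
    static String fullPath(String namespace, String path) {
        if (namespace == null || namespace.isEmpty()) {
            return path; // no namespace: the path is used as-is
        }
        String ns = namespace.startsWith("/") ? namespace : "/" + namespace;
        if (path.equals("/")) {
            return ns;   // the namespace root itself
        }
        return ns + (path.startsWith("/") ? path : "/" + path);
    }

    public static void main(String[] args) {
        System.out.println(fullPath("zookeeper/mynode", "/first")); // /zookeeper/mynode/first
    }
}
```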

Update a node

public static void update(CuratorFramework client) throws Exception {
    client.setData().forPath("/first", "hello GP 22".getBytes());
    System.out.println("=====node updated");
    get(client); // read the new value back
}

Read a node

public static String get(CuratorFramework client) throws Exception {
    String str = new String(client.getData().forPath("/first"));
    System.out.println("=======node value: " + str);
    return str;
}

Create a node asynchronously

public static void operatorWithAsync(CuratorFramework client) throws Exception {
    CountDownLatch countDownLatch = new CountDownLatch(1);
    client.create().creatingParentsIfNeeded().withMode(CreateMode.PERSISTENT).inBackground(new BackgroundCallback() {
        @Override
        public void processResult(CuratorFramework curatorFramework, CuratorEvent curatorEvent) throws Exception {
            // print the callback thread and the result code (0 means OK)
            System.out.println(Thread.currentThread().getName() + ":" + curatorEvent.getResultCode());
            countDownLatch.countDown();
        }
    }).forPath("/second", "second GP".getBytes());
    System.out.println("=======creation started");
    countDownLatch.await(); // block until the callback has run
    System.out.println("=======callback received");
}
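operatorWithAsync() hands the operation off and learns the outcome through a callback that runs on a different thread. The CountDownLatch is what keeps the method from returning before that callback has run. The JDK-only sketch below reproduces the same pattern without ZooKeeper. fakeCreateInBackground is hypothetical and simply completes with result code 0, the value ZooKeeper uses for OK.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.IntConsumer;

// JDK-only sketch of the callback + CountDownLatch pattern in operatorWithAsync().
// fakeCreateInBackground is hypothetical: it stands in for create()...inBackground(callback)
// and "completes" on a separate thread with result code 0.
class AsyncPattern {
    static void fakeCreateInBackground(ExecutorService eventThread, IntConsumer callback) {
        eventThread.submit(() -> callback.accept(0));
    }

    static int createAndWait() {
        ExecutorService eventThread = Executors.newSingleThreadExecutor();
        CountDownLatch latch = new CountDownLatch(1);
        AtomicInteger resultCode = new AtomicInteger(-1);
        try {
            fakeCreateInBackground(eventThread, rc -> {
                // runs on the executor's thread, not on the caller's thread
                System.out.println(Thread.currentThread().getName() + ":" + rc);
                resultCode.set(rc);
                latch.countDown(); // wake up the waiting caller
            });
            System.out.println("=======creation started");
            // bounded wait, so a lost callback cannot block the caller forever
            if (!latch.await(5, TimeUnit.SECONDS)) {
                throw new IllegalStateException("callback was not invoked in time");
            }
            return resultCode.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            eventThread.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("result code = " + createAndWait());
    }
}
```

The sketch uses a bounded await(timeout) rather than an unbounded await(). A callback that never fires then surfaces as an error instead of hanging the caller.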

Permissions

Watching nodes

Watch a single node (NodeCache)

public static void addNodeCacheListener(CuratorFramework client, String path) throws Exception {
    NodeCache nodeCache = new NodeCache(client, path);
    NodeCacheListener listener = new NodeCacheListener() {
        @Override
        public void nodeChanged() throws Exception {
            System.out.println("node changed");
            ChildData current = nodeCache.getCurrentData();
            if (current == null) { // the node has been deleted
                System.out.println("node deleted: " + path);
                return;
            }
            System.out.println("new value======" + current.getPath() + "->" + new String(current.getData()));
        }
    };
    nodeCache.getListenable().addListener(listener);
    nodeCache.start();
}

Run it:

addNodeCacheListener(curatorClient, "/first");
System.in.read(); // keep the main thread alive so the listener can fire

Watch child nodes (PathChildrenCache)

public static void addPathChildCacheListener(CuratorFramework client, String path) throws Exception {
    PathChildrenCache childrenCache = new PathChildrenCache(client, path, true); // true: also cache node data
    PathChildrenCacheListener listener = new PathChildrenCacheListener() {
        @Override
        public void childEvent(CuratorFramework curatorFramework, PathChildrenCacheEvent event) throws Exception {
            System.out.println("=========child changed: " + event.getType());
            ChildData childData = event.getData();
            if (childData == null) { // connection-state events carry no node data
                return;
            }
            System.out.println(childData.getPath() + "--->" + new String(childData.getData()));
        }
    };
    childrenCache.getListenable().addListener(listener);
    childrenCache.start(PathChildrenCache.StartMode.NORMAL);
}

Run it:

addPathChildCacheListener(curatorClient, "/first");

System.in.read();

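Distributed lock

What is a lock? Essentially, a rule: as long as everyone follows it and accesses shared data in an orderly way, no concurrency conflicts arise.

In principle, a distributed lock is no different from a single-machine lock. It is like swapping a national referee for an international one: there is still a set of rules, but now it constrains threads running in different processes on different machines.

How a ZooKeeper distributed lock works

The lock is built on ZooKeeper's ephemeral sequential nodes.

Every client connects to ZooKeeper and the lock state is kept in ZooKeeper, so the lock works across processes and machines.

Each client creates an ephemeral sequential node in ZooKeeper, then checks whether its node is the smallest.

If it is not, the client sets a watch on the node just before its own and blocks.

When the preceding client finishes its work, it deletes its ephemeral node, which fires a deletion event.

The next client is notified and checks again whether its node is now the smallest. If so, it proceeds to access the shared data. Because the nodes are ephemeral, a client that crashes also releases the lock: its node is removed when its session expires.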
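The queueing rule can be tried out without a server. The sketch below works on an in-memory list of child names that imitate sequential nodes; the names and the nodeToWatch helper are hypothetical, not a Curator API. Watching only the immediate predecessor means each deletion wakes exactly one waiter, instead of every waiter re-reading the children at once.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// In-memory illustration of the queueing rule (no ZooKeeper involved). Child names imitate
// ephemeral sequential nodes; nodeToWatch is a hypothetical helper.
class LockQueue {
    // Returns null if `mine` is the smallest child (it holds the lock); otherwise the child
    // immediately before it, which is the only node it needs to watch.
    static String nodeToWatch(List<String> children, String mine) {
        List<String> sorted = new ArrayList<>(children);
        Collections.sort(sorted);
        int index = sorted.indexOf(mine);
        if (index < 0) {
            throw new IllegalStateException("own node missing: " + mine);
        }
        return index == 0 ? null : sorted.get(index - 1);
    }

    public static void main(String[] args) {
        List<String> children = new ArrayList<>(Arrays.asList(
                "lock-0000000002", "lock-0000000000", "lock-0000000001"));
        for (String mine : children) {
            String watch = nodeToWatch(children, mine);
            System.out.println(mine + (watch == null ? " holds the lock" : " waits, watching " + watch));
        }
        // the holder finishes and its ephemeral node is deleted: only its successor is affected
        children.remove("lock-0000000000");
        System.out.println("lock-0000000001 now holds the lock: "
                + (nodeToWatch(children, "lock-0000000001") == null));
    }
}
```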

Implementation with Curator

CuratorFramework curatorFramework = CuratorFrameworkFactory.builder()
        .connectString("192.168.243.137:2181")
        .sessionTimeoutMs(5000)
        .retryPolicy(new ExponentialBackoffRetry(1000, 3))
        .connectionTimeoutMs(4000)
        .build();
curatorFramework.start(); // start the client

// "/locks" is the lock's parent node in ZooKeeper (not a namespace).
// Acquisition is coordinated through ZooKeeper, so the lock is visible across processes.
InterProcessMutex lock = new InterProcessMutex(curatorFramework, "/locks");
for (int i = 0; i < 10; i++) {
    new Thread(() -> {
        System.out.println(Thread.currentThread().getName() + "->trying to acquire the lock");
        try {
            lock.acquire(); // blocks until the lock is acquired
        } catch (Exception e) {
            e.printStackTrace();
            return;         // not acquired, so there is nothing to release
        }
        try {
            System.out.println(Thread.currentThread().getName() + "->lock acquired");
            Thread.sleep(4000); // simulate work while holding the lock
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            try {
                lock.release(); // always release a lock that was acquired
                System.out.println(Thread.currentThread().getName() + "->lock released");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }, "t-" + i).start();
}

There are essentially two methods:

acquire(): acquires the lock, blocking until it succeeds;

release(): releases the lock.

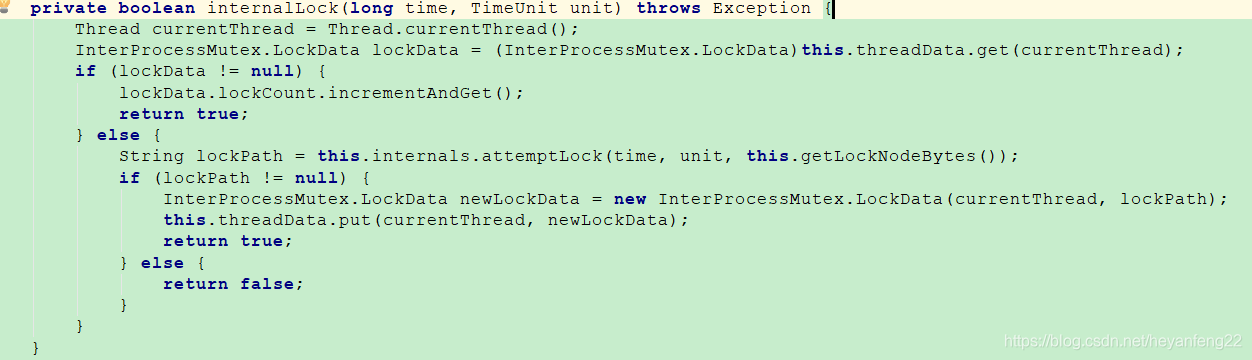

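Source code analysis

acquire() entry point

First, check for re-entrance: if the current thread already holds the lock, increment its hold count and return.

Otherwise, compete for the lock in attemptLock:

it first creates an ephemeral sequential node,

then checks whether that node is the smallest;

if it is not, it sets a watch on the preceding node and then blocks.

Once the lock is acquired, the current thread is recorded (together with its lock node) so later calls can detect re-entrance.

release()

Likewise, check re-entrance first: decrement the hold count, and return if the thread still holds the lock.

When the count reaches zero, remove the watchers, delete the node, and finally remove the local record for the current thread.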
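The re-entrancy bookkeeping described above boils down to a per-thread hold count. The sketch below assumes a map keyed by thread, as the source analysis suggests. ReentrancySketch is hypothetical: it only counts would-be node creations and deletions, and it does not provide mutual exclusion between threads.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;

// Sketch of per-thread re-entrancy bookkeeping. The ZooKeeper work (creating/deleting the
// lock node) is replaced by two counters; class and member names are hypothetical.
class ReentrancySketch {
    private final Map<Thread, AtomicInteger> holds = new ConcurrentHashMap<>();
    int nodesCreated = 0; // stands in for "ephemeral sequential node created"
    int nodesDeleted = 0; // stands in for "node deleted, watchers notified"

    void acquire() {
        AtomicInteger count = holds.get(Thread.currentThread());
        if (count != null) {   // re-entrant call: only bump the count
            count.incrementAndGet();
            return;
        }
        nodesCreated++;        // first acquisition: attemptLock() would run here
        holds.put(Thread.currentThread(), new AtomicInteger(1));
    }

    void release() {
        AtomicInteger count = holds.get(Thread.currentThread());
        if (count == null) {
            throw new IllegalMonitorStateException("not the lock owner");
        }
        if (count.decrementAndGet() > 0) { // still held re-entrantly
            return;
        }
        nodesDeleted++;                       // last release: delete the node (and watch)
        holds.remove(Thread.currentThread()); // finally forget the owning thread
    }

    boolean isHeldByCurrentThread() {
        return holds.containsKey(Thread.currentThread());
    }

    public static void main(String[] args) {
        ReentrancySketch lock = new ReentrancySketch();
        lock.acquire();
        lock.acquire(); // re-entrant: no second node
        lock.release();
        System.out.println("held after one release: " + lock.isHeldByCurrentThread());
        lock.release();
        System.out.println("held after two releases: " + lock.isHeldByCurrentThread());
        System.out.println("nodes created/deleted: " + lock.nodesCreated + "/" + lock.nodesDeleted);
    }
}
```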

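Implementing a distributed lock with ZooKeeper yourself

Approach

1. Every client creates its node under a common parent node, which should preferably be a persistent node.

2. Each client that arrives creates its own (ephemeral sequential) child node under that parent.

3. Acquiring the lock: the client checks whether its node is the smallest child of the parent.

4. Waking up: when the preceding node is deleted, the next node receives the deletion event, checks again whether it is now the smallest, and competes for the lock.

5. Releasing the lock: after finishing its work, the client deletes its node so the other nodes can compete.

Create a Lock interface

It has just two methods: lock and unlock.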

package com.crazymakercircle.zk.distributedLock;

public interface Lock {
    /**
     * Acquires the lock.
     *
     * @return whether the lock was acquired
     */
    boolean lock() throws Exception;

    /**
     * Releases the lock.
     *
     * @return whether the lock was released
     */
    boolean unlock();
}

The lock implementation

package com.crazymakercircle.zk.distributedLock;

import com.crazymakercircle.zk.ZKclient;
import lombok.extern.slf4j.Slf4j;
import org.apache.curator.framework.CuratorFramework;
import org.apache.zookeeper.WatchedEvent;
import org.apache.zookeeper.Watcher;

import java.util.Collections;
import java.util.List;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

@Slf4j
public class ZkLock implements Lock {
    // parent node under which the lock nodes are created
    private static final String ZK_PATH = "/test/lock";
    private static final String LOCK_PREFIX = ZK_PATH + "/";
    // how long await() waits for the predecessor's deletion event before lock() re-checks
    private static final long WAIT_TIME = 1000;

    // ZooKeeper client
    CuratorFramework client = null;
    private String locked_short_path = null; // own node name, e.g. "0000000003"
    private String locked_path = null;       // full path of own node
    private String prior_path = null;        // full path of the node being watched
    final AtomicInteger lockCount = new AtomicInteger(0);
    private Thread thread;                   // thread that owns this lock

    public ZkLock() {
        ZKclient.instance.init();
        synchronized (ZKclient.instance) {
            // make sure the persistent parent node exists
            if (!ZKclient.instance.isNodeExist(ZK_PATH)) {
                ZKclient.instance.createNode(ZK_PATH, null);
            }
        }
        client = ZKclient.instance.getClient();
    }

    @Override
    public boolean lock() {
        // re-entrant: the same thread may lock repeatedly
        synchronized (this) {
            if (lockCount.get() == 0) {
                thread = Thread.currentThread();
                lockCount.incrementAndGet();
            } else {
                // held by another thread of this JVM: fail instead of blocking
                if (!thread.equals(Thread.currentThread())) {
                    return false;
                }
                lockCount.incrementAndGet();
                return true;
            }
        }
        try {
            // first, try to acquire the lock
            boolean locked = tryLock();
            if (locked) {
                return true;
            }
            // otherwise wait until the predecessor goes away
            while (!locked) {
                await();
                // fetch the current list of waiting child nodes
                List<String> waiters = getWaiters();
                // check whether we now hold the lock
                if (checkLocked(waiters)) {
                    locked = true;
                } else {
                    // still not first (the predecessor gave up or its session expired): watch the new predecessor
                    int index = Collections.binarySearch(waiters, locked_short_path);
                    if (index < 0) {
                        throw new Exception("node not found: " + locked_short_path);
                    }
                    prior_path = ZK_PATH + "/" + waiters.get(index - 1);
                }
            }
            return true;
        } catch (Exception e) {
            e.printStackTrace();
            unlock();
        }
        return false;
    }

    //...other methods omitted
}

Competing for the lock

/**
 * Tries to acquire the lock.
 * @return whether the lock was acquired
 * @throws Exception on ZooKeeper errors
 */
private boolean tryLock() throws Exception {
    // create an ephemeral sequential znode
    locked_path = ZKclient.instance.createEphemeralSeqNode(LOCK_PREFIX);
    if (null == locked_path) {
        throw new Exception("zk error");
    }
    // then fetch all child nodes
    List<String> waiters = getWaiters();
    // own queue number
    locked_short_path = getShortPath(locked_path);
    // check whether we are first in the list of waiters
    if (checkLocked(waiters)) {
        return true;
    }
    // find our position; checkLocked() has already sorted the list
    int index = Collections.binarySearch(waiters, locked_short_path);
    if (index < 0) { // after a network glitch, our own node may no longer be in the list
        throw new Exception("node not found: " + locked_short_path);
    }
    // lock not acquired: watch the node immediately before ours
    prior_path = ZK_PATH + "/" + waiters.get(index - 1);
    return false;
}

// "/test/lock/0000000003" -> "0000000003"
private String getShortPath(String locked_path) {
    int index = locked_path.lastIndexOf(ZK_PATH + "/");
    if (index >= 0) {
        index += ZK_PATH.length() + 1;
        return index <= locked_path.length() ? locked_path.substring(index) : "";
    }
    return null;
}

Checking whether we hold the lock

private boolean checkLocked(List<String> waiters) {
    // sort the nodes by sequence number, ascending
    Collections.sort(waiters);
    // if ours is first, we hold the lock
    if (locked_short_path.equals(waiters.get(0))) {
        log.info("distributed lock acquired, node {}", locked_short_path);
        return true;
    }
    return false;
}
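checkLocked() compares node names as plain strings after Collections.sort. That works only because ZooKeeper appends the sequence number as a 10-digit, zero-padded counter, so lexicographic order matches numeric order. The sketch below contrasts padded names with unpadded ones. SequentialOrder is hypothetical, and the unpadded names are a counter-example made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Why string sorting of sequential node names is safe: zero-padding to 10 digits makes
// lexicographic order equal numeric order. SequentialOrder is a hypothetical helper.
class SequentialOrder {
    static String sequentialName(String prefix, int counter) {
        return prefix + String.format("%010d", counter); // same padding as ZooKeeper's sequence suffix
    }

    static List<String> sorted(List<String> names) {
        List<String> copy = new ArrayList<>(names);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        List<String> padded = Arrays.asList(
                sequentialName("", 10), sequentialName("", 9), sequentialName("", 100));
        List<String> unpadded = Arrays.asList("10", "9", "100");
        System.out.println(sorted(padded));   // [0000000009, 0000000010, 0000000100]
        System.out.println(sorted(unpadded)); // [10, 100, 9]
    }
}
```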

Watching whether the preceding node has released the lock

private void await() throws Exception {
    if (null == prior_path) {
        throw new Exception("prior_path error");
    }
    final CountDownLatch latch = new CountDownLatch(1);
    // subscribe to events on the node just before ours (its deletion releases us)
    Watcher w = new Watcher() {
        @Override
        public void process(WatchedEvent watchedEvent) {
            System.out.println("watched event = " + watchedEvent);
            log.info("[WatchedEvent] prior node changed or deleted");
            latch.countDown();
        }
    };
    try {
        client.getData().usingWatcher(w).forPath(prior_path);
    } catch (org.apache.zookeeper.KeeperException.NoNodeException e) {
        // the predecessor is already gone, so no event will come: let lock() re-check right away
        return;
    }
    /*
    // alternative: subscribe to the deletion via a TreeCache
    TreeCache treeCache = new TreeCache(client, prior_path);
    TreeCacheListener l = new TreeCacheListener() {
        @Override
        public void childEvent(CuratorFramework client, TreeCacheEvent event) throws Exception {
            ChildData data = event.getData();
            if (data != null) {
                switch (event.getType()) {
                    case NODE_REMOVED:
                        log.debug("[TreeCache] node deleted, path={}, data={}", data.getPath(), data.getData());
                        latch.countDown();
                        break;
                    default:
                        break;
                }
            }
        }
    };
    treeCache.getListenable().addListener(l);
    treeCache.start();
    */
    // wait at most WAIT_TIME ms; lock() re-checks the queue afterwards either way
    latch.await(WAIT_TIME, TimeUnit.MILLISECONDS);
}

Releasing the lock

/**
 * Releases the lock.
 *
 * @return whether the lock was released
 */
@Override
public boolean unlock() {
    // only the thread that holds the lock may release it
    if (thread == null || !thread.equals(Thread.currentThread())) {
        return false;
    }
    // decrement the re-entrance count
    int newLockCount = lockCount.decrementAndGet();
    // the count must never drop below 0
    if (newLockCount < 0) {
        throw new IllegalMonitorStateException("Lock count has gone negative for lock: " + locked_path);
    }
    // still held re-entrantly: return directly
    if (newLockCount != 0) {
        return true;
    }
    // delete the ephemeral node
    try {
        if (ZKclient.instance.isNodeExist(locked_path)) {
            client.delete().forPath(locked_path);
        }
    } catch (Exception e) {
        e.printStackTrace();
        return false;
    }
    return true;
}

Test

@Test
public void testLock() throws InterruptedException {
    for (int i = 0; i < 10; i++) {
        FutureTaskScheduler.add(() -> {
            // create the lock
            ZkLock lock = new ZkLock();
            if (!lock.lock()) {
                return; // not acquired: do not touch the shared counter
            }
            try {
                // each thread increments the shared counter 10 times
                for (int j = 0; j < 10; j++) {
                    count++;
                }
                try {
                    Thread.sleep(1000);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                log.info("count = " + count);
            } finally {
                // release the lock
                lock.unlock();
            }
        });
    }
    Thread.sleep(Integer.MAX_VALUE);
}
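As a reference value, the same workload can be run inside one JVM with java.util.concurrent.locks.ReentrantLock in place of ZkLock. This is a stand-in, not a test of ZkLock itself (CounterCheck is hypothetical). It only shows that a correct lock yields 100, from 10 tasks × 10 increments.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

// Single-JVM stand-in for testLock(): same workload (10 tasks x 10 increments), guarded by
// ReentrantLock instead of ZkLock, to show the value the test should reach.
class CounterCheck {
    private static int count = 0;

    static int run(Lock lock) {
        count = 0;
        ExecutorService pool = Executors.newFixedThreadPool(10);
        for (int i = 0; i < 10; i++) {
            pool.submit(() -> {
                lock.lock();
                try {
                    for (int j = 0; j < 10; j++) {
                        count++; // non-atomic update, safe only because the lock is held
                    }
                } finally {
                    lock.unlock(); // always release, even if the body throws
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        lock.lock(); // read the result under the lock as well
        try {
            return count;
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) {
        System.out.println("count = " + run(new ReentrantLock())); // count = 100
    }
}
```

In testLock() each task logs count while it still holds the lock. With a working ZkLock, the logged values should therefore be 10, 20, ..., 100, one per task. A lower final value, or the same value logged twice, would point to overlapping critical sections.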
