文档分词

在分词的同时需要设置停用词和自定义词典

import jieba

from tqdm import tqdm

jieba.load_userdict('userdict.txt') # 本地文档

words_stop= [line.strip() for line in open('stop.txt','r',encoding ='utf-8').readlines()] # 本地文档

words_stop =['',' ','\n','\r\n','\t']+words_stop

tqdm.pandas(desc="deal col")

cut_txt = lambda x: [i for i in list(jieba.cut(str(x)) ) if i not in words_stop]

data_comment['words'] = data_comment['content'].progress_apply(cut_txt)

gensim--CBOW,skip-gram模型

import numpy as np

from gensim.models import Word2Vec

from gensim.test.utils import common_texts

vector_size =100

# 里面有很多参数需要深挖

#参数:sg ({0, 1}, optional) – Training algorithm: 1 for skip-gram; otherwise CBOW.

model = Word2Vec(common_texts, size=vector_size, window=5, min_count=1, workers=4,seed=123)

#查看词向量

model['human']

# 保存

model.save("word2vec.model")

#加载模型

model = Word2Vec.load("word2vec.model")

#词向量转化为句子向量

def w2v_to_d2v(model,common_texts,vector_size):

w2v_feat = np.zeros((len(common_texts), vector_size))

w2v_feat_avg = np.zeros((len(common_texts), vector_size))

i = 0

for line in common_texts:

num = 0

for word in line:

num += 1

vec = model.wv[word]

w2v_feat[i,:] += vec

w2v_feat_avg[i, :] = w2v_feat[i, :] / num

i += 1

return w2v_feat,w2v_feat_avg

w2v_feat,w2v_feat_avg = w2v_to_d2v(model,common_texts,vector_size)

TfidfVectorizer--TF-IDF权重

from gensim.test.utils import common_texts

from sklearn.feature_extraction.text import TfidfVectorizer

common_texts_list =[' '.join(x) for x in common_texts]

cv=TfidfVectorizer(binary=False,decode_error='ignore',stop_words='english')

vec=cv.fit_transform(common_texts_list)#传入句子组成的list

生成新的tfidf值

vec_sample = cv.transform(['human interface computer'])

#获取每句话的权重

arr=vec.toarray()

#获取每个词

word=cv.get_feature_names()

gensim--TF-IDF

from gensim.test.utils import common_texts

from gensim.corpora.dictionary import Dictionary

from gensim.models import TfidfModel

dictionary = Dictionary(common_texts)

#获取每个词语的位置

print(dictionary.token2id)

corpus = [dictionary.doc2bow(text) for text in common_texts]

model = TfidfModel(corpus)

model.save("tfidf.model")

TfidfModel.load("tfidf.model")

corpus_tfidf = model[corpus]

#获取第一个句子的tf-idf

tf_sample = corpus_tfidf[0]

gensim--DBOW_D2C模型

# 主要处理模型维数、增量训练次

from gensim.test.utils import common_texts

from gensim.models.doc2vec import Doc2Vec, TaggedDocument

import numpy as np

# dm=0 dbow模型,dm = 1模型

documents = [TaggedDocument(doc, [i]) for i, doc in enumerate(common_texts)]

#多次训练

model=Doc2Vec(dm=0,vector_size=10, window=5, min_count=2, workers=4)

model.build_vocab(documents)

model.train(documents, total_examples=model.corpus_count, epochs=model.iter)

# 保存模型

model.save('d2v_cbow.model')

# 加载模型

model=Doc2Vec.load('d2v_cbow.model')

# 查看每个句子的向量

model.docvecs[1]

#获取词向量

X_d2v = np.array([model.docvecs[i] for i in range(len(common_texts))])

gensim--DM_D2C模型

# 主要处理模型维数、增量训练次

from gensim.test.utils import common_texts

from gensim.models.doc2vec import Doc2Vec, TaggedDocument

import numpy as np

# dm=0 dbow模型,dm = 1模型

documents = [TaggedDocument(doc, [i]) for i, doc in enumerate(common_texts)]

#多次训练

model=Doc2Vec(dm=1,vector_size=10, window=5, min_count=2, workers=4)

model.build_vocab(documents)

model.train(documents, total_examples=model.corpus_count, epochs=model.epochs)

#保存模型

model.save('d2v_dw.model')

# 模型加载

model=Doc2Vec.load('d2v_dw.model')

# 查看每个句子的向量

model.docvecs[0]

#获取词向量

X_d2v = np.array([model.docvecs[i] for i in range(len(common_texts))])

gensim--LDA模型

from gensim.test.utils import common_texts

from gensim.corpora.dictionary import Dictionary

from gensim.models import LdaModel

# Create a corpus from a list of texts

common_dictionary = Dictionary(common_texts)

common_corpus = [common_dictionary.doc2bow(text) for text in common_texts]

# Train the model on the corpus.

lda = LdaModel(common_corpus, id2word=common_dictionary,num_topics=10)

doc_topic = [a for a in lda[common_corpus]]

doc_topic[1]

for i in lda.print_topics(num_topics=10):

print(i)

# 利用上面生成的tfidf权重作为语料

#方法一

from gensim.models import LdaModel

lda = LdaModel(corpus=corpus_tfidf, id2word=dictionary, num_topics=10)

doc_topic = [a for a in lda[corpus_tfidf]]

#方法二

lda = LdaModel(corpus=corpus, id2word=dictionary, num_topics=10)

doc_topic = [a for a in lda[corpus_tfidf]]

def doc_topic_df(doc_topic):

df_list = []

for i in doc_topic:

top_list=[]

for j in i:

top_list.append(j[1])

df_list.append(top_list)

df_top = pd.DataFrame(df_list)

return df_top

# 转化为数据框

df_topic = doc_topic_df(doc_topic)

gensim-- LSI模型

import pandas as pd

from gensim.test.utils import common_texts

from gensim.corpora.dictionary import Dictionary

from gensim.models import LsiModel

common_dictionary = Dictionary(common_texts)

common_corpus = [common_dictionary.doc2bow(text) for text in common_texts]

#也可以用tf-idf训练的权重当做 common_corpus

model = LsiModel(common_corpus, id2word=common_dictionary)

#model = LsiModel(corpus_tfidf, id2word=common_dictionary)

vectorized_corpus = model[common_corpus] # vectorize input copus in BoW format

#获取第一个句子的lsi

vectorized_corpus[0]

doc_lsi = [a for a in vectorized_corpus]

def doc_lsic_df(doc_lsi):

df_list = []

for i in doc_lsi:

top_list=[]

for j in i:

top_list.append(j[1])

df_list.append(top_list)

df_lsi = pd.DataFrame(df_list)

return df_lsi

#获取数据框

df_doc_lsi = doc_lsic_df(doc_lsi)

特征统计

这里还可以做的事情有:

- 文本的词的数目

- 文本中的一些关键词做特征,例如:酒驾、毒品等

- 文本中出现的日期

- 文本中出现的地点

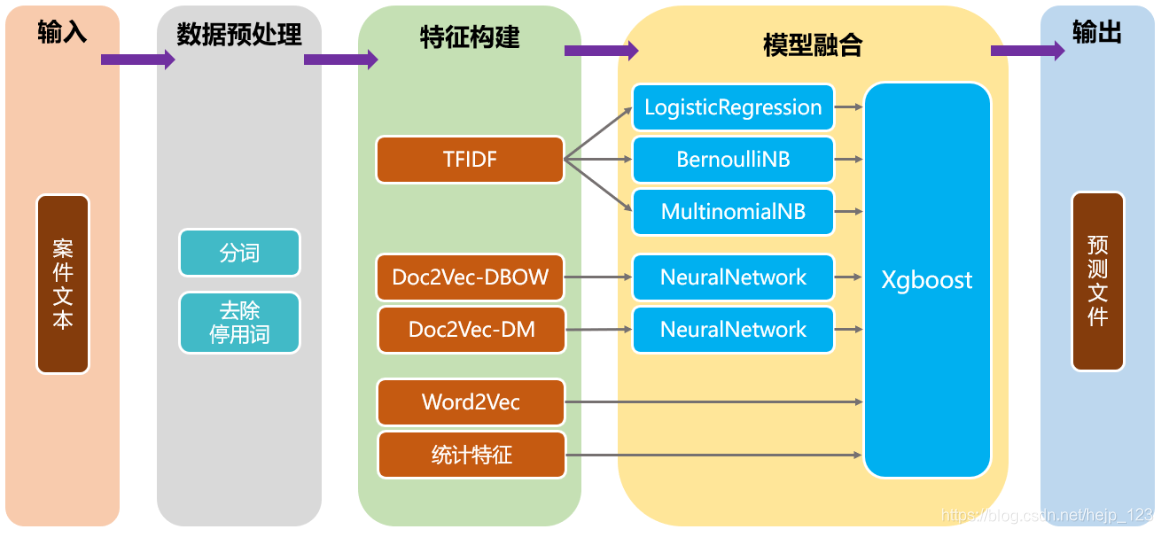

本文详细介绍使用jieba进行中文分词,结合停用词与自定义词典优化分词效果;并运用gensim实现词向量(Word2Vec)、句向量(Doc2Vec)、TF-IDF、LDA及LSI模型的构建与应用,涵盖模型训练、参数调整、模型保存与加载等关键步骤。

本文详细介绍使用jieba进行中文分词,结合停用词与自定义词典优化分词效果;并运用gensim实现词向量(Word2Vec)、句向量(Doc2Vec)、TF-IDF、LDA及LSI模型的构建与应用,涵盖模型训练、参数调整、模型保存与加载等关键步骤。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?