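Index migration:

Use the _reindex API to migrate an index:

slices: splits the reindex into slices that run independently and in parallel, which can speed up the migration considerably. With auto, the number of slices equals the number of shards; you can also set it manually, but it should not exceed the number of shards.

source: the source index

dest: the destination index

version_type:

internal: overwrite documents in the destination index regardless of whether a document with the same ID already exists.

external: if a document with the same ID already exists, compare the _version numbers and update only when the source version is newer (optimistic concurrency control).

size: the number of documents migrated per batch. It also affects performance; a batch of roughly 5-15 MB works best, so estimate the average document size and keep adjusting size until you find a suitable value.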
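The advice on size can be turned into a quick calculation. The following is a minimal sketch, not part of any Elasticsearch API: the class name ReindexBatchSizer and its method are hypothetical, and the average document size is assumed to have been measured separately (for example by dividing an index's store size by its document count).

```java
// Hypothetical helper: estimate how many documents fit in one reindex batch.
final class ReindexBatchSizer {

    private ReindexBatchSizer() {}

    /**
     * @param avgDocBytes      measured average size of one document, in bytes
     * @param targetBatchBytes desired size of one batch, in bytes (e.g. 5-15 MB)
     * @return number of documents per batch, never less than 1
     */
    static int docsPerBatch(long avgDocBytes, long targetBatchBytes) {
        if (avgDocBytes <= 0 || targetBatchBytes <= 0) {
            throw new IllegalArgumentException("sizes must be positive");
        }
        long docs = targetBatchBytes / avgDocBytes;
        return (int) Math.max(1, Math.min(docs, Integer.MAX_VALUE));
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        // Documents of about 5 KB each and a 10 MB target batch
        System.out.println(docsPerBatch(5 * 1024, 10 * mb)); // prints 2048
    }
}
```

The result is only a starting point for source.size; the text's advice to keep adjusting it against observed throughput still applies.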

POST /_reindex?slices=auto&refresh

{

"source": {

"index": "my_test",

"size": 2000

},

"dest": {

"index": "my_test_bak",

"version_type": "internal"

}

}
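To make the difference between the two version_type values concrete, here is a toy model of the write decision. It is a sketch of the rule described above, not Elasticsearch code: VersionTypeDemo and shouldWrite are hypothetical names, and the destination index is reduced to a map from document ID to version.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of the overwrite rule for version_type during a reindex.
final class VersionTypeDemo {

    enum VersionType { INTERNAL, EXTERNAL }

    private VersionTypeDemo() {}

    /**
     * @param destVersion   version of the document already in dest, or null if absent
     * @param sourceVersion version of the document being copied from source
     * @return true if the copy from source should be written to dest
     */
    static boolean shouldWrite(VersionType type, Long destVersion, long sourceVersion) {
        if (type == VersionType.INTERNAL) {
            return true; // overwrite regardless of what dest holds
        }
        // EXTERNAL: create if missing, update only if dest is older than source
        return destVersion == null || destVersion < sourceVersion;
    }

    public static void main(String[] args) {
        Map<String, Long> dest = new HashMap<>();
        dest.put("1", 3L); // document 1 already exists in dest at version 3

        System.out.println(shouldWrite(VersionType.INTERNAL, dest.get("1"), 2L)); // true
        System.out.println(shouldWrite(VersionType.EXTERNAL, dest.get("1"), 2L)); // false
        System.out.println(shouldWrite(VersionType.EXTERNAL, dest.get("1"), 5L)); // true
        System.out.println(shouldWrite(VersionType.EXTERNAL, dest.get("2"), 1L)); // true
    }
}
```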

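The migration can also be restricted with a query:

// The top-level size is the total number of documents to migrate (later releases rename this parameter to max_docs)

// The size inside source is the number of documents fetched per batch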

POST /_reindex?slices=auto&refresh

{

"size": 10,

"source": {

"index": "my_test",

"query": {

"term": {

"name": {

"value": "张三"

}

}

},

"sort": {

"id": "desc"

},

"size": 2000

},

"dest": {

"index": "my_test_bak"

}

}

Smart search:

Suggest similar terms based on what the user types, as in JD.com's search box.

First create an index and insert some data:

PUT /my_test/

{

"mappings": {

"properties": {

"body":{

"type": "text"

}

}

}

}

POST /my_test/_bulk

{"create":{"_id":1}}

{"body":"Lucene is cool"}

{"create":{"_id":2}}

{ "body": "Elasticsearch builds on top of lucene"}

{"create":{"_id":3}}

{ "body": "Elasticsearch rocks"}

{"create":{"_id":4}}

{ "body": "Elastic is the company behind ELK stack"}

{"create":{"_id":5}}

{ "body": "elk rocks"}

{"create":{"_id":6}}

{ "body": "elasticsearch is rock solid"}

There are several kinds of suggesters:

1. term

GET /my_test/_search

{

"suggest": {

"my_suggest": {

"text": "lucne rock",

"term": {

"suggest_mode":"missing",

"field": "body"

}

}

}

}

GET /my_test/_search

{

"suggest": {

"my_suggest": {

"text": "lucne rock",

"term": {

"suggest_mode":"popular",

"field": "body"

}

}

}

}

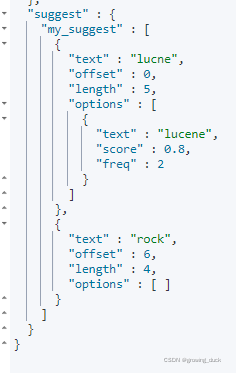

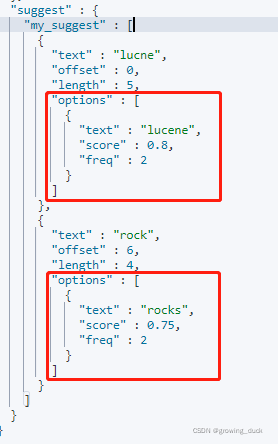

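The term suggester analyzes both the input text and the indexed data, then looks up similar terms for each input token separately. The input is therefore split into several tokens, each with its own group of suggestions.

In addition, suggest_mode in the term suggester can be set to missing or popular.

missing: suggestions are returned only for input terms that are not in the index, which usually means misspellings. A correctly spelled term gets no suggestions.

popular: regardless of whether the term is spelled correctly, a similar term that occurs more frequently is suggested.

In this example, lucne is a misspelling of lucene, so both modes suggest a correction. rock is spelled correctly, but popular mode still suggests rocks because rocks occurs more often in the index.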
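The two modes can be imitated outside Elasticsearch with a small in-memory model. This is a simplified sketch, not the algorithm Elasticsearch actually runs: the class TermSuggestSketch, the edit-distance cutoff of 2, and the hand-counted document frequencies for the six sample documents are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy term suggester: candidates are indexed terms within edit distance 2.
final class TermSuggestSketch {

    enum Mode { MISSING, POPULAR }

    private TermSuggestSketch() {}

    /** Classic Levenshtein edit distance between two strings. */
    static int editDistance(String a, String b) {
        int[] prev = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            int[] cur = new int[b.length() + 1];
            cur[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                cur[j] = Math.min(Math.min(cur[j - 1] + 1, prev[j] + 1), prev[j - 1] + cost);
            }
            prev = cur;
        }
        return prev[b.length()];
    }

    /** Suggests similar terms for one token, given term -> document frequency. */
    static List<String> suggest(String token, Map<String, Integer> docFreq, Mode mode) {
        List<String> out = new ArrayList<>();
        int ownFreq = docFreq.getOrDefault(token, 0);
        if (mode == Mode.MISSING && ownFreq > 0) {
            return out; // the token exists in the index: nothing to suggest
        }
        for (Map.Entry<String, Integer> e : docFreq.entrySet()) {
            String candidate = e.getKey();
            if (candidate.equals(token)) {
                continue;
            }
            boolean close = editDistance(token, candidate) <= 2;
            boolean morePopular = e.getValue() > ownFreq;
            if (close && morePopular) {
                out.add(candidate);
            }
        }
        return out;
    }

    /** Document frequencies counted by hand from the six sample documents. */
    static Map<String, Integer> sampleDocFreq() {
        Map<String, Integer> df = new LinkedHashMap<>();
        df.put("lucene", 2);
        df.put("elasticsearch", 3);
        df.put("rocks", 2);
        df.put("rock", 1);
        df.put("elk", 2);
        df.put("elastic", 1);
        return df;
    }

    public static void main(String[] args) {
        Map<String, Integer> df = sampleDocFreq();
        System.out.println(suggest("lucne", df, Mode.MISSING)); // [lucene]
        System.out.println(suggest("rock", df, Mode.MISSING));  // []
        System.out.println(suggest("rock", df, Mode.POPULAR));  // [rocks]
    }
}
```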

2. phrase

GET /my_test/_search

{

"suggest": {

"my_suggest": {

"text": "lucne and elasticsear rock",

"phrase":{

"field": "body",

"highlight": { "pre_tag": "<em>", "post_tag": "</em>" }

}

}

}

}

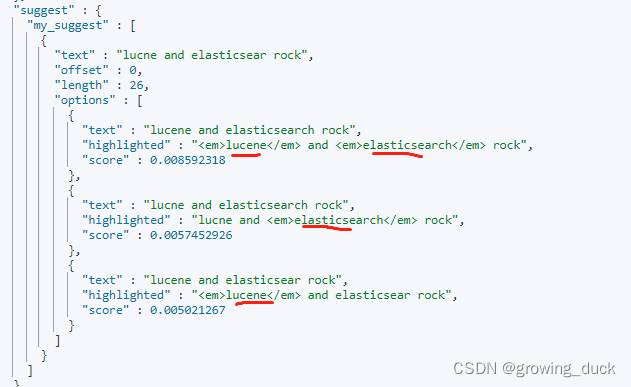

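The phrase suggester does not produce suggestions for each token in isolation. It considers the terms together and returns whole-phrase corrections that keep as much of the input as possible. In this example the corrected words are highlighted, and three suggestions are returned: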

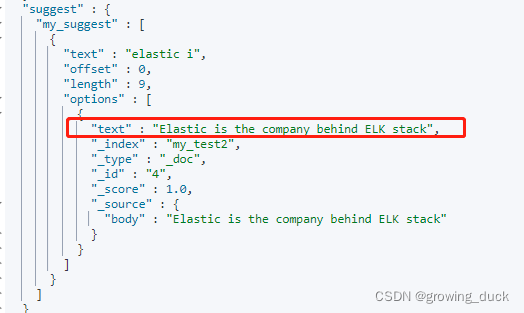

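3. completion

Completion suggester: as each character is typed, it completes the rest of the text, which means every keystroke sends a request for suggestions. For performance it does not use the inverted index; at index time the analyzed input is encoded into an FST (finite state transducer) that is stored alongside the index and loaded into memory. The FST is covered later. It supports prefix lookups only, and the field must be mapped with type completion.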

PUT /my_test2/

{

"mappings": {

"properties": {

"body":{

"type": "completion"

}

}

}

}

POST /my_test2/_bulk

{"create":{"_id":1}}

{"body":"Lucene is cool"}

{"create":{"_id":2}}

{"body":"Elasticsearch builds on top of lucene"}

{"create":{"_id":3}}

{"body":"Elasticsearch rocks"}

{"create":{"_id":4}}

{"body":"Elastic is the company behind ELK stack"}

{"create":{"_id":5}}

{"body":"the elk stack rocks"}

{"create":{"_id":6}}

{"body":"elasticsearch is rock solid"}

GET /my_test2/_search

{

"suggest": {

"my_suggest": {

"prefix": "elastic i",

"completion": {

"field": "body"

}

}

}

}

Result: the matching entries are returned in full, completing the typed prefix.
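Prefix-only lookup over an in-memory structure can be illustrated without an FST. The sketch below substitutes a sorted map for the FST, which is a different and much less compact data structure; it only demonstrates why a prefix maps to one contiguous range of entries. The class PrefixCompletionSketch and its methods are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.TreeMap;

// Prefix completion over a sorted map (a stand-in for the FST, not an FST itself).
final class PrefixCompletionSketch {

    // lowercased text -> original text; sorted, so a prefix is one contiguous range
    private final TreeMap<String, String> entries = new TreeMap<>();

    void add(String text) {
        entries.put(text.toLowerCase(Locale.ROOT), text);
    }

    /** Returns up to limit entries whose lowercased text starts with the prefix. */
    List<String> complete(String prefix, int limit) {
        String p = prefix.toLowerCase(Locale.ROOT);
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, String> e : entries.tailMap(p, true).entrySet()) {
            if (!e.getKey().startsWith(p) || out.size() >= limit) {
                break; // left the range of keys sharing the prefix
            }
            out.add(e.getValue());
        }
        return out;
    }

    /** The six sample documents indexed into my_test2 above. */
    static PrefixCompletionSketch sample() {
        PrefixCompletionSketch s = new PrefixCompletionSketch();
        s.add("Lucene is cool");
        s.add("Elasticsearch builds on top of lucene");
        s.add("Elasticsearch rocks");
        s.add("Elastic is the company behind ELK stack");
        s.add("the elk stack rocks");
        s.add("elasticsearch is rock solid");
        return s;
    }

    public static void main(String[] args) {
        // prints [Elastic is the company behind ELK stack]
        System.out.println(sample().complete("elastic i", 5));
    }
}
```

Shortening the prefix to "elastic" widens the range to four entries, which matches the observation that suggestions narrow as more is typed.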

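In terms of precision: completion > phrase > term, and completion also performs best. For search suggestions, first use completion to autocomplete from the input so far; as more is typed, the suggestions become more precise and fewer. If the input contains a misspelling and nothing can be completed, fall back to phrase and then term for similar-term suggestions.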
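The fallback order described here can be written as a small chain. This is a sketch under stated assumptions: SuggestFallbackSketch is a hypothetical class, and the three stub methods merely stand in for real completion, phrase, and term suggest requests with hard-coded answers.

```java
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

// Tries completion first, then phrase, then term, as described in the text.
final class SuggestFallbackSketch {

    private SuggestFallbackSketch() {}

    /** Runs each suggester in order and returns the first non-empty result. */
    @SafeVarargs
    static List<String> firstNonEmpty(String input, Function<String, List<String>>... suggesters) {
        for (Function<String, List<String>> suggester : suggesters) {
            List<String> result = suggester.apply(input);
            if (result != null && !result.isEmpty()) {
                return result;
            }
        }
        return Collections.emptyList();
    }

    // Hypothetical stand-ins; real code would send suggest requests to Elasticsearch.
    static List<String> completionStub(String in) {
        return !in.isEmpty() && "elasticsearch rocks".startsWith(in)
                ? Collections.singletonList("elasticsearch rocks")
                : Collections.<String>emptyList();
    }

    static List<String> phraseStub(String in) {
        return in.equals("lucne rock")
                ? Collections.singletonList("lucene rock")
                : Collections.<String>emptyList();
    }

    static List<String> termStub(String in) {
        return in.equals("lucne")
                ? Collections.singletonList("lucene")
                : Collections.<String>emptyList();
    }

    static List<String> suggest(String input) {
        return firstNonEmpty(input,
                SuggestFallbackSketch::completionStub,
                SuggestFallbackSketch::phraseStub,
                SuggestFallbackSketch::termStub);
    }

    public static void main(String[] args) {
        System.out.println(suggest("elastic"));    // answered by the completion stub
        System.out.println(suggest("lucne rock")); // answered by the phrase stub
        System.out.println(suggest("lucne"));      // answered by the term stub
    }
}
```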

Spring Boot + RestClient

pom.xml:

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.6.1</version>

</parent>

<groupId>com.example</groupId>

<artifactId>demo</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>edu-demo</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

</dependency>

<dependency>

<groupId>org.elasticsearch.client</groupId>

<artifactId>elasticsearch-rest-high-level-client</artifactId>

<version>7.3.0</version>

<exclusions>

<exclusion>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch</artifactId>

<version>7.3.0</version>

</dependency>

<dependency>

<groupId>junit</groupId>

<artifactId>junit</artifactId>

<version>4.12</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-maven-plugin</artifactId>

<version>2.6.1</version>

</plugin>

</plugins>

</build>

</project>

application.yml:

lagouelasticsearch:

  elasticsearch:

    hostlist: localhost:9200 # separate multiple nodes with commas

    password: admin

    username: elastic

Configuration class:

package com.example.demo.config;

import org.apache.http.HttpHost;

import org.apache.http.auth.AuthScope;

import org.apache.http.auth.UsernamePasswordCredentials;

import org.apache.http.client.CredentialsProvider;

import org.apache.http.impl.client.BasicCredentialsProvider;

import org.elasticsearch.client.RestClient;

import org.elasticsearch.client.RestClientBuilder;

import org.elasticsearch.client.RestHighLevelClient;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

@Configuration

public class ElasticsearchConfig {

@Value("${lagouelasticsearch.elasticsearch.hostlist}")

private String hostlist;

@Bean

public RestHighLevelClient restHighLevelClient() {

return new RestHighLevelClient(restClientBuilder());

}

@Bean

public RestClient restClient() {

return restClientBuilder().build();

}

private RestClientBuilder restClientBuilder() {

// Parse the hostlist setting

String[] split = hostlist.split(",");

// Build the HttpHost array holding the host and port of each ES node

HttpHost[] httpHostArray = new HttpHost[split.length];

for (int i = 0; i < split.length; i++) {

String item = split[i];

httpHostArray[i] = new HttpHost(item.split(":")[0],

Integer.parseInt(item.split(":")[1]), "http");

}

final CredentialsProvider credentialsProvider = new BasicCredentialsProvider();

// ES username and password (the default user is elastic)

credentialsProvider.setCredentials(AuthScope.ANY,new UsernamePasswordCredentials("elastic", "es-09qgksOcBx5up5xP"));

// Build the RestClientBuilder shared by both client beans

return RestClient.builder(httpHostArray).setHttpClientConfigCallback(r -> {

r.disableAuthCaching();

return r.setDefaultCredentialsProvider(credentialsProvider);

});

}

}

Test class:

package com.example.demo;

import java.io.IOException;

import java.text.ParseException;

import java.text.SimpleDateFormat;

import java.util.Date;

import java.util.HashMap;

import java.util.Map;

import org.apache.lucene.search.TotalHits;

import org.elasticsearch.action.DocWriteResponse;

import org.elasticsearch.action.admin.indices.create.CreateIndexRequest;

import org.elasticsearch.action.admin.indices.create.CreateIndexResponse;

import org.elasticsearch.action.admin.indices.delete.DeleteIndexRequest;

import org.elasticsearch.action.get.GetRequest;

import org.elasticsearch.action.get.GetResponse;

import org.elasticsearch.action.index.IndexRequest;

import org.elasticsearch.action.index.IndexResponse;

import org.elasticsearch.action.search.SearchRequest;

import org.elasticsearch.action.search.SearchResponse;

import org.elasticsearch.action.support.master.AcknowledgedResponse;

import org.elasticsearch.client.IndicesClient;

import org.elasticsearch.client.RequestOptions;

import org.elasticsearch.client.RestHighLevelClient;

import org.elasticsearch.common.xcontent.XContentBuilder;

import org.elasticsearch.common.xcontent.XContentFactory;

import org.elasticsearch.index.query.QueryBuilders;

import org.elasticsearch.search.SearchHit;

import org.elasticsearch.search.SearchHits;

import org.elasticsearch.search.builder.SearchSourceBuilder;

import org.junit.Test;

import org.junit.runner.RunWith;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.beans.factory.annotation.Qualifier;

import org.springframework.boot.test.context.SpringBootTest;

import org.springframework.test.context.junit4.SpringRunner;

@SpringBootTest(classes = EduDemoApplication.class)

@RunWith(SpringRunner.class)

public class EduDemoApplicationTests {

@Qualifier("restHighLevelClient")

@Autowired

RestHighLevelClient client;

// Create the index

/*

*

PUT /elasticsearch_test

{

"settings": {},

"mappings": {

"properties": {

"description": {

"type": "text",

"analyzer": "ik_max_word"

},

"name": {

"type": "keyword"

},

"pic": {

"type": "text",

"index": false

},

"studymodel": {

"type": "keyword"

}

}

}

}

* */

@Test

public void testCreateIndex() throws IOException {

// Build the create-index request

CreateIndexRequest createIndexRequest = new CreateIndexRequest("elasticsearch_test");

// Set the mapping

XContentBuilder builder = XContentFactory.jsonBuilder()

.startObject()

.field("properties")

.startObject()

.field("description").startObject().field("type", "text")

.field("analyzer", "ik_max_word").endObject()

.field("name").startObject().field("type", "keyword").endObject()

.field("pic").startObject().field("type", "text")

.field("index", false).endObject()

.field("studymodel").startObject().field("type", "keyword").endObject()

.endObject()

.endObject();

createIndexRequest.mapping("doc", builder);

// Client for index-level operations

IndicesClient indicesClient = client.indices();

// Let IOException propagate instead of swallowing it and hitting a null response below

CreateIndexResponse createIndexResponse = indicesClient.create(createIndexRequest, RequestOptions.DEFAULT);

// Read the response

boolean acknowledged = createIndexResponse.isAcknowledged();

System.out.println(acknowledged);

}

@Test

public void testDeleteIndex() throws IOException {

// Build the delete-index request

DeleteIndexRequest deleteIndexRequest = new DeleteIndexRequest("elasticsearch_test");

// Client for index-level operations

IndicesClient indices = client.indices();

// Delete the index

AcknowledgedResponse delete = indices.delete(deleteIndexRequest, RequestOptions.DEFAULT);

// Read the response

boolean acknowledged = delete.isAcknowledged();

System.out.println(acknowledged);

}

@Test

public void testAddDoc() throws IOException {

// Build the index (write) request

IndexRequest indexRequest = new IndexRequest("elasticsearch_test", "doc");

indexRequest.id("1");

// Document content, prepared as a JSON-style map

Map<String, Object> jsonMap = new HashMap<>();

jsonMap.put("name", "spring cloud实战");

jsonMap.put("description", "本课程主要从四个章节进行讲解: 1.微服务架构入门 2.spring cloud 基础入门 3.实战Spring Boot 4.注册中心eureka。");

jsonMap.put("studymodel", "201001");

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

jsonMap.put("timestamp", dateFormat.format(new Date()));

jsonMap.put("price", 5.6f);

indexRequest.source(jsonMap);

// Send the request over HTTP through the client

IndexResponse indexResponse = client.index(indexRequest, RequestOptions.DEFAULT);

DocWriteResponse.Result result = indexResponse.getResult();

System.out.println(result);

}

// Get a document by ID

@Test

public void testGetDoc() throws IOException {

// Build the get request

GetRequest getRequest = new GetRequest("elasticsearch_test", "1");

GetResponse getResponse = client.get(getRequest, RequestOptions.DEFAULT);

// Document source as a map

Map<String, Object> sourceAsMap = getResponse.getSourceAsMap();

System.out.println(sourceAsMap);

}

@Test

public void testSearchAll() throws IOException, ParseException {

// Search request

SearchRequest searchRequest = new SearchRequest("elasticsearch_test");

// Search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

// Query type: matchAllQuery matches every document

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// Source filtering: the first argument lists the fields to include, the second the fields to exclude

searchSourceBuilder.fetchSource(new String[]{"name", "studymodel", "price", "timestamp"}, new String[]{});

// Attach the search source to the search request

searchRequest.source(searchSourceBuilder);

// Run the search by sending an HTTP request to ES

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// Search results

SearchHits hits = searchResponse.getHits();

// Total number of matching documents

TotalHits totalHits = hits.getTotalHits();

// The returned hits, ordered by relevance

SearchHit[] searchHits = hits.getHits();

// Date formatter

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

for (SearchHit hit : searchHits) {

// Document ID

String id = hit.getId();

// Document source

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

// description was not included in the source filter above, so it is null here

String description = (String) sourceAsMap.get("description");

// Study mode

String studymodel = (String) sourceAsMap.get("studymodel");

// Price

Double price = (Double) sourceAsMap.get("price");

// Timestamp

Date timestamp = dateFormat.parse((String) sourceAsMap.get("timestamp"));

System.out.println(name);

System.out.println(studymodel);

System.out.println(description);

}

}

@Test

public void testTermQuery() throws IOException, ParseException {

// Search request

SearchRequest searchRequest = new SearchRequest("elasticsearch_test");

// Search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

// Query type: termQuery matches the exact value of the keyword field

searchSourceBuilder.query(QueryBuilders.termQuery("name", "spring cloud实战"));

// Source filtering: the first argument lists the fields to include, the second the fields to exclude

searchSourceBuilder.fetchSource(new String[]{"name", "studymodel", "price", "timestamp"}, new String[]{});

// Attach the search source to the search request

searchRequest.source(searchSourceBuilder);

// Run the search by sending an HTTP request to ES

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// Search results

SearchHits hits = searchResponse.getHits();

// Total number of matching documents

TotalHits totalHits = hits.getTotalHits();

// The returned hits, ordered by relevance

SearchHit[] searchHits = hits.getHits();

// Date formatter

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

for (SearchHit hit : searchHits) {

// Document ID

String id = hit.getId();

// Document source

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

// description was not included in the source filter above, so it is null here

String description = (String) sourceAsMap.get("description");

// Study mode

String studymodel = (String) sourceAsMap.get("studymodel");

// Price

Double price = (Double) sourceAsMap.get("price");

// Timestamp

Date timestamp = dateFormat.parse((String) sourceAsMap.get("timestamp"));

System.out.println(name);

System.out.println(studymodel);

System.out.println(description);

}

}

// Paged search

@Test

public void testSearchPage() throws IOException, ParseException {

// Search request

SearchRequest searchRequest = new SearchRequest("elasticsearch_test");

// Set the mapping type

searchRequest.types("doc");

// Search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

// Paging parameters

// Page number

int page = 1;

// Number of documents per page

int size = 2;

// Compute the offset of the first document

int from = (page - 1) * size;

searchSourceBuilder.from(from); // offset of the first hit, starting at 0

searchSourceBuilder.size(size); // number of hits per page

// Query type: matchAllQuery matches every document

searchSourceBuilder.query(QueryBuilders.matchAllQuery());

// Source filtering: the first argument lists the fields to include, the second the fields to exclude

searchSourceBuilder.fetchSource(new String[]{"name", "studymodel", "price", "timestamp"}, new String[]{});

// Attach the search source to the search request

searchRequest.source(searchSourceBuilder);

// Run the search by sending an HTTP request to ES

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// Search results

SearchHits hits = searchResponse.getHits();

// Total number of matching documents

TotalHits totalHits = hits.getTotalHits();

// The returned hits, ordered by relevance

SearchHit[] searchHits = hits.getHits();

// Date formatter

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

for (SearchHit hit : searchHits) {

// Document ID

String id = hit.getId();

// Document source

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

// description was not included in the source filter above, so it is null here

String description = (String) sourceAsMap.get("description");

// Study mode

String studymodel = (String) sourceAsMap.get("studymodel");

// Price

Double price = (Double) sourceAsMap.get("price");

// Timestamp

Date timestamp = dateFormat.parse((String) sourceAsMap.get("timestamp"));

System.out.println(name);

System.out.println(studymodel);

System.out.println(description);

}

}

// Paged term query

@Test

public void testTermQueryPage() throws IOException, ParseException {

// Search request

SearchRequest searchRequest = new SearchRequest("elasticsearch_test");

// Search source builder

SearchSourceBuilder searchSourceBuilder = new SearchSourceBuilder();

// Paging parameters

// Page number

int page = 1;

// Number of documents per page

int size = 2;

// Compute the offset of the first document

int from = (page - 1) * size;

searchSourceBuilder.from(from); // offset of the first hit, starting at 0

searchSourceBuilder.size(size); // number of hits per page

// Query type: termQuery matches the exact value of the keyword field

searchSourceBuilder.query(QueryBuilders.termQuery("name", "spring cloud实战"));

// Source filtering: the first argument lists the fields to include, the second the fields to exclude

searchSourceBuilder.fetchSource(new String[]{"name", "studymodel", "price", "timestamp"}, new String[]{});

// Attach the search source to the search request

searchRequest.source(searchSourceBuilder);

// Run the search by sending an HTTP request to ES

SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

// Search results

SearchHits hits = searchResponse.getHits();

// Total number of matching documents

TotalHits totalHits = hits.getTotalHits();

// The returned hits, ordered by relevance

SearchHit[] searchHits = hits.getHits();

// Date formatter

SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");

for (SearchHit hit : searchHits) {

// Document ID

String id = hit.getId();

// Document source

Map<String, Object> sourceAsMap = hit.getSourceAsMap();

String name = (String) sourceAsMap.get("name");

// description was not included in the source filter above, so it is null here

String description = (String) sourceAsMap.get("description");

// Study mode

String studymodel = (String) sourceAsMap.get("studymodel");

// Price

Double price = (Double) sourceAsMap.get("price");

// Timestamp

Date timestamp = dateFormat.parse((String) sourceAsMap.get("timestamp"));

System.out.println(name);

System.out.println(studymodel);

System.out.println(description);

}

}

}
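The paging tests compute the offset as from = (page - 1) * size. The helper below is a minimal sketch of that arithmetic with a guard added; PagingSketch is a hypothetical class, and the 10,000 limit is assumed to be the default value of the index.max_result_window setting, beyond which from + size requests are rejected.

```java
// Offset arithmetic for from/size paging, with a guard for deep pages.
final class PagingSketch {

    // Assumed default of index.max_result_window
    static final int MAX_RESULT_WINDOW = 10_000;

    private PagingSketch() {}

    /** Offset of the first hit for a 1-based page number. */
    static int from(int page, int size) {
        if (page < 1 || size < 1) {
            throw new IllegalArgumentException("page and size must be >= 1");
        }
        return Math.multiplyExact(page - 1, size);
    }

    /** True if the requested page fits inside the result window. */
    static boolean withinWindow(int page, int size) {
        return (long) from(page, size) + size <= MAX_RESULT_WINDOW;
    }

    public static void main(String[] args) {
        System.out.println(from(1, 2));            // 0
        System.out.println(from(3, 2));            // 4
        System.out.println(withinWindow(5000, 2)); // true
        System.out.println(withinWindow(5001, 2)); // false
    }
}
```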

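This article describes how to migrate an Elasticsearch index with the _reindex API and how to implement smart search with the term, phrase, and completion suggesters. It also shows how a Spring Boot application integrates with Elasticsearch through the REST client, covering index creation, document operations, and search.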
