a.前端编码:

创建Vue项目,Vue-Router Vuex ElementUI Axios

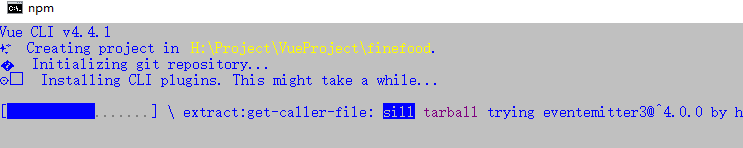

1.创建Vue项目

vue create finefood

默认需要的组件:Babel Vue-Router Vuex

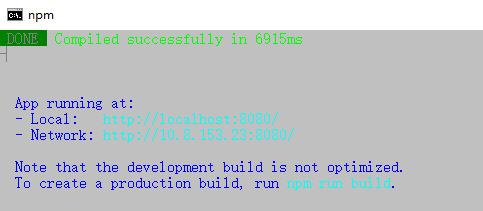

2.启动Vue项目

确保创建的项目可以使用

cd finefood

npm run serve

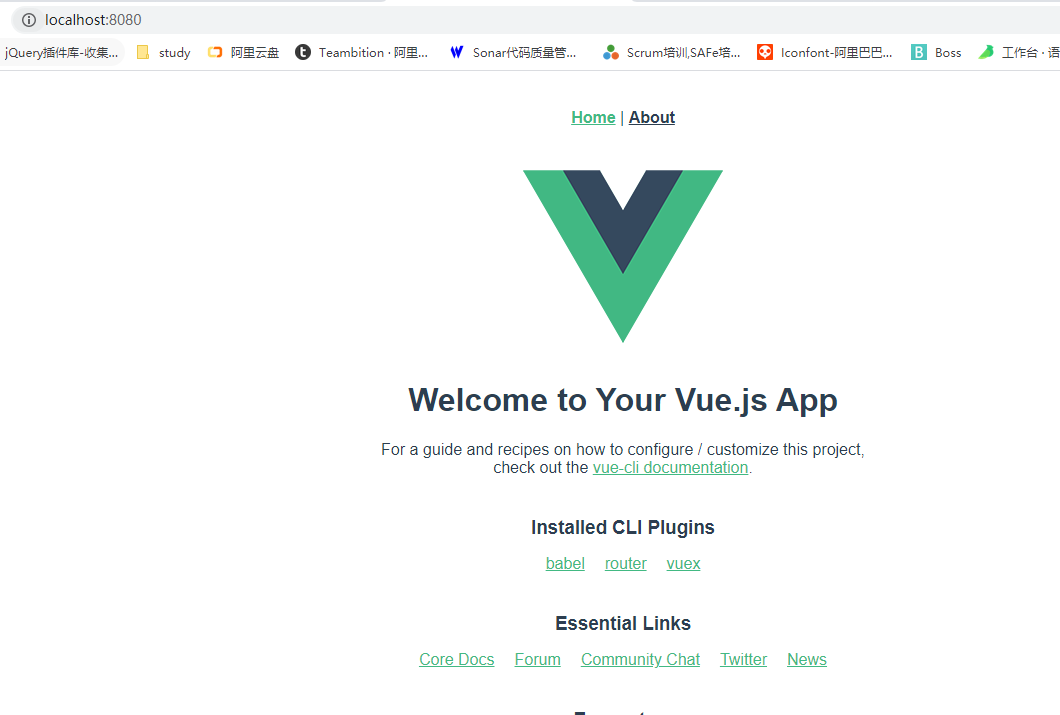

浏览器访问:

http://localhost:8080/

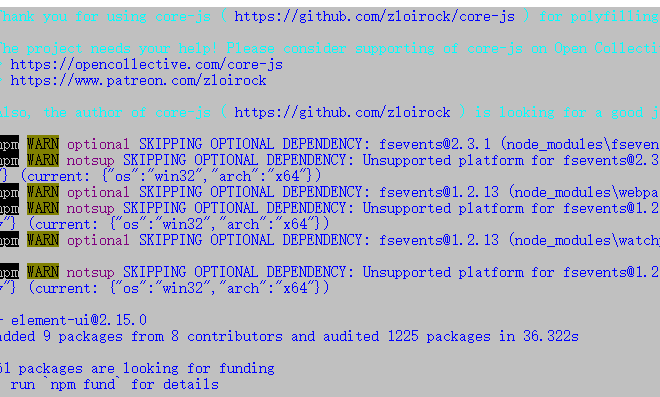

3.安装需要的插件并实现配置

终止程序运行

安装Element-UI插件

npm i element-ui -S

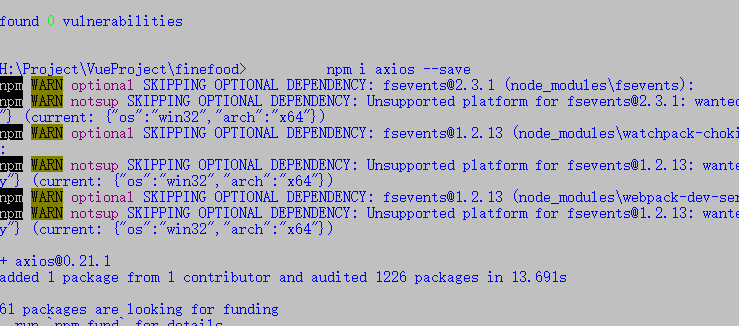

安装Axios

npm i axios --save

import Vue from 'vue'

import App from './App.vue'

import router from './router'

import store from './store'

import ElementUI from 'element-ui';

import 'element-ui/lib/theme-chalk/index.css';

import axios from 'axios';

Vue.config.productionTip = false

Vue.use(ElementUI);

Vue.prototype.$axios=axios;

new Vue({

router,

store,

render: h => h(App)

}).$mount('#app')

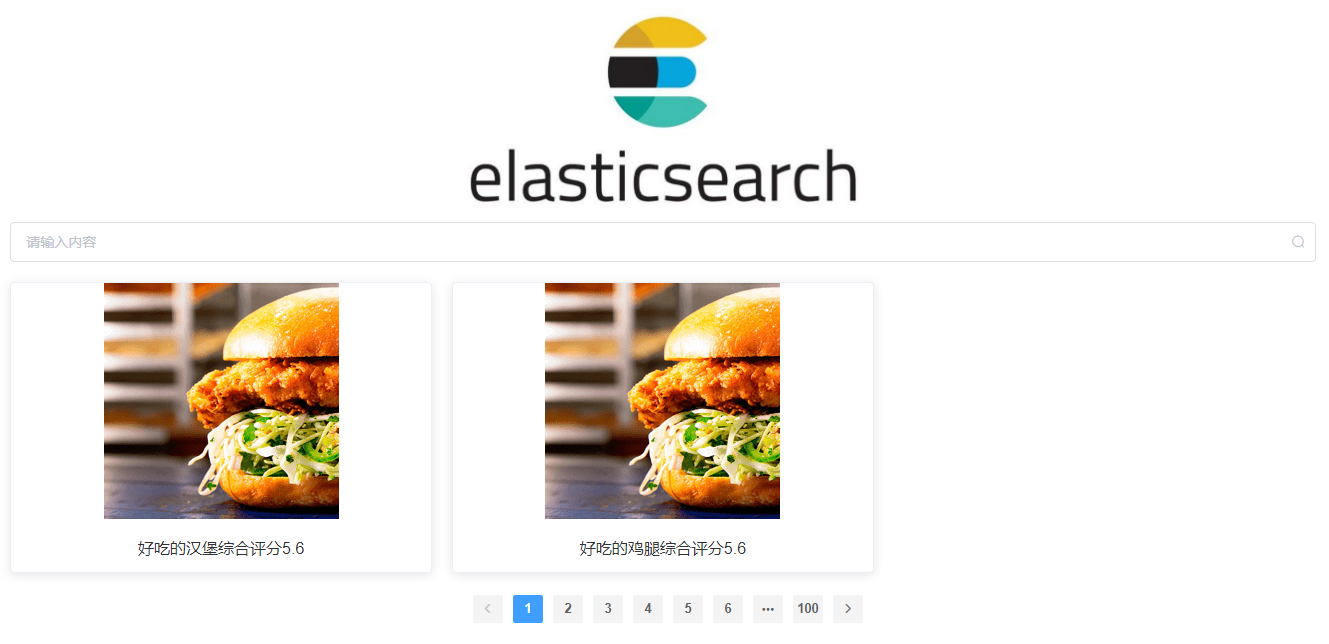

4.根据需求绘制页面

核心代码:

<template>

<div>

<!-- 1.搜索框 -->

<el-col :offset="1" :span="22" style="margin-top: 10px;margin-bottom: 20px;">

<el-autocomplete

class="dvsearch"

v-model="searchMsg"

:fetch-suggestions="querySearchAsync"

placeholder="请输入内容"

@select="handleSelect"

suffix-icon="el-icon-search"

></el-autocomplete>

</el-col>

<!-- 2.结果显示 -->

<el-col :offset="1" :span="22">

<el-row :gutter="20">

<el-col :span="8" v-for="f in searchdata" :key="f">

<el-card :body-style="{ padding: '0px' }">

<img :src="f.imgurl" class="image">

<div style="padding: 14px;">

<span>{{f.value}}</span>

<span>综合评分{{f.score}}</span>

</div>

</el-card>

</el-col>

</el-row>

</el-col>

<!-- 3.分页 -->

<el-col :offset="6" :span="12" style="margin-top: 20px;">

<el-pagination

style="width: 100%;font-size: 25px;"

background

layout="prev, pager, next"

:total="1000">

</el-pagination>

</el-col>

<el-col :span="24" style="height: 50px;">

</el-col>

</div>

</template>

<script>

export default{

data(){

return {

searchMsg:"",

searchdata:[

{value:"好吃的汉堡",imgurl:"https://shadow.elemecdn.com/app/element/hamburger.9cf7b091-55e9-11e9-a976-7f4d0b07eef6.png",score:5.6}

,{value:"好吃的鸡腿",imgurl:"https://shadow.elemecdn.com/app/element/hamburger.9cf7b091-55e9-11e9-a976-7f4d0b07eef6.png",score:5.6}

]

}

},methods:{

querySearchAsync(qmsg, cb) {

console.log(qmsg);

//axios发起网络请求

cb(this.searchdata);

},

handleSelect(item) {

console.log(item);

}

}

}

</script>

<style>

.dvsearch{

width: 100%;

}

</style>

b.基于爬虫实现数据抓取

爬虫:网页追逐者、蜘蛛、蠕虫、网页收集器、爬虫

获取网站的信息的方式

WebMagic,你可以快速开发出一个高效,易维护的爬虫。

官网:WebMagic

核心:

1.Downloader

Downloader负责从互联网上下载页面,以便后续处理。WebMagic默认使用了Apache HttpClient作为下载工具。

2.PageProcessor

PageProcessor负责解析页面,抽取有用信息,以及发现新的链接。WebMagic使用Jsoup作为HTML解析工具,并基于其开发了解析XPath的工具Xsoup。

在这四个组件中,PageProcessor对于每个站点每个页面都不一样,是需要使用者定制的部分。

3.Scheduler

Scheduler负责管理待抓取的URL,以及一些去重的工作。WebMagic默认提供了JDK的内存队列来管理URL,并用集合来进行去重。也支持使用Redis进行分布式管理。

除非项目有一些特殊的分布式需求,否则无需自己定制Scheduler。

4.Pipeline

Pipeline负责抽取结果的处理,包括计算、持久化到文件、数据库等。WebMagic默认提供了“输出到控制台”和“保存到文件”两种结果处理方案。

Pipeline定义了结果保存的方式,如果你要保存到指定数据库,则需要编写对应的Pipeline。对于一类需求一般只需编写一个Pipeline。基于Webmagic实现 数据的爬取

找到目标网站:家常菜做法大全有图_家常菜菜谱大全做法_好吃的家常菜_下厨房

实现步骤:

1.依赖jar

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-core</artifactId>

<version>0.7.3</version>

</dependency>

<dependency>

<groupId>us.codecraft</groupId>

<artifactId>webmagic-extension</artifactId>

<version>0.7.3</version>

</dependency>2.编写页面处理器

@Component

public class XiaChuFangPageProcessor implements PageProcessor {

@Override

public void process(Page page) {

//爬取整个网页内容,需要在网页中获取需要的数据

//获取饭菜的列表信息

List<Selectable> list=page.getHtml().css("div.category-recipe-list div.normal-recipe-list ul.list li").nodes();

//存储爬取的食物信息

List<Food> foodList=new ArrayList<>();

//遍历获取内容

for(Selectable s:list) {

Food food=new Food();

//分布式唯一id

food.setId(IdGenerator.getInstance().nextId());

//获取图片的路径

String iu=s.css("li div a div.pure-u img", "data-src").get();

//生成OSS上传的对象名称

String objname=UUID.randomUUID().toString().replace("-","") +"_"+iu.substring(iu.indexOf("/")+1);

//下载图片 获取图片的内容

byte[] data= HttpUtil.downLoad(iu);

//上传到OSS 获取访问路径

String url= AliOssUtil.upload("zzjava2008", objname,data);

food.setImgurl(url);

food.setScore(Double.parseDouble(s.css("li div div p.stats span", "text").get()));

food.setValue(s.css("li div div p.name a", "text").get());

foodList.add(food);

}

//传递到结果处理器

page.putField("foodlist",foodList);

//深度爬取 分页爬取

if(page.getUrl().get().equals("https://www.xiachufang.com/category/40076")){

List<String> urls=new ArrayList<>();

for(int i=2;i<20;i++){

//https://www.xiachufang.com/category/40076/?page=4

urls.add("https://www.xiachufang.com/category/40076/?page="+i);

}

//设置继续爬取的网页

page.addTargetRequests(urls);

}

}

@Override

public Site getSite() {

return Site.me().setRetryTimes(200).setTimeOut(10000).setSleepTime(1000);

}

}c.实现搜索建议索引的创建

访问:

http://39.105.189.141:5601/app/kibana

创建索引:

PUT finefood

创建索引的文档结构:

POST finefood/_mapping

{

"properties": {

"_class": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"score": {

"type": "double",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

}

}

},

"id": {

"type": "long"

},

"value": {

"type": "text",

"fields": {

"keyword": {

"type": "keyword",

"ignore_above": 256

},

"suggest": {

"type": "completion",

"analyzer": "simple",

"preserve_separators": true,

"preserve_position_increments": true,

"max_input_length": 50

}

}

}

}

}

d.Elasticsearch操作

Spring Data Elasticsearch实现es的操作

1.批量新增

2.搜索建议查询

3.高亮显示

@Override

public R search(String msg) throws IOException {

//搜索建议 同时 实现 高亮显示

CompletionSuggestionBuilder completionSuggestion= SuggestBuilders.completionSuggestion("value.suggest");

completionSuggestion.prefix(msg).size(50);

SuggestBuilder suggestBuilder=new SuggestBuilder();

suggestBuilder.addSuggestion("suggest-value",completionSuggestion);

SearchResponse response=restTemplate.suggest(suggestBuilder, IndexCoordinates.of("finefood"));

Suggest.Suggestion suggestions=response.getSuggest().getSuggestion("suggest-value");

List<FoodSuggestDto> list=new ArrayList<>();

for(Object o :suggestions.getEntries()){

if(o instanceof Suggest.Suggestion.Entry){

Suggest.Suggestion.Entry e=(Suggest.Suggestion.Entry)o;

for(Object obj:e.getOptions()){

if(obj instanceof Suggest.Suggestion.Entry.Option){

Suggest.Suggestion.Entry.Option option=(Suggest.Suggestion.Entry.Option)obj;

list.add(new FoodSuggestDto(option.getText().toString()));

}

}

}

}

return R.ok(list);

}

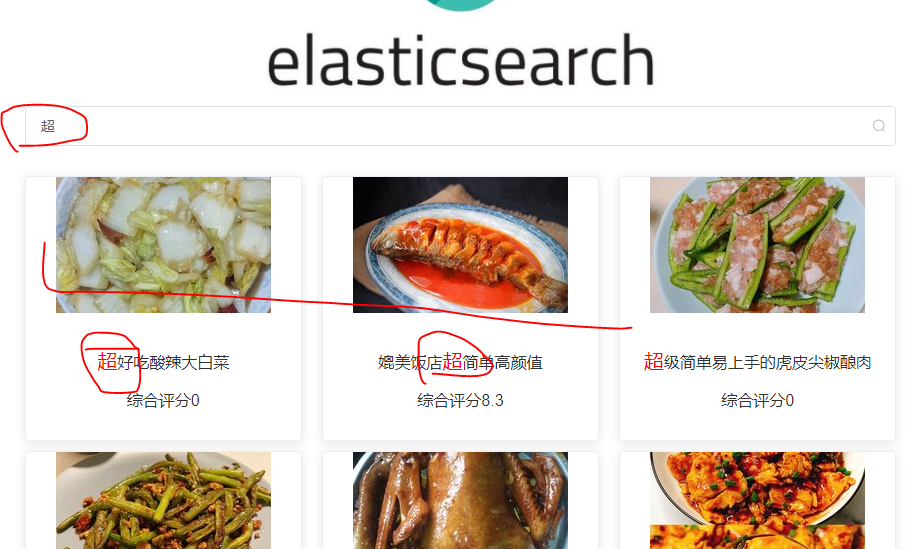

@Override

public R searchHeigh(String msg) throws IOException {

//搜索资源对象

SearchSourceBuilder sourceBuilder=new SearchSourceBuilder();

//高亮搜索对象

HighlightBuilder highlightBuilder=new HighlightBuilder();

//设置高亮的字段和高亮的结构

highlightBuilder.field("value").fragmentSize(1).preTags("<span style='color:red;font-size:20px'>").postTags("</span>");

//模糊查询

// FuzzyQueryBuilder fuzzyQueryBuilder= QueryBuilders.fuzzyQuery("value","%"+msg+"%");

// fuzzyQueryBuilder.prefixLength(1);

//通配符查询

WildcardQueryBuilder wildcardQueryBuilder=QueryBuilders.wildcardQuery("value",msg+"*");

sourceBuilder.highlighter(highlightBuilder);

sourceBuilder.query(wildcardQueryBuilder);

sourceBuilder.sort("id", SortOrder.DESC);

sourceBuilder.from(0).size(20);

//创建搜索请求对象

SearchRequest searchRequest=new SearchRequest();

searchRequest.source(sourceBuilder);

//执行搜索

SearchResponse response=levelClient.search(searchRequest,RequestOptions.DEFAULT);

List<Food> foods=new ArrayList<>();

//遍历结果

for(SearchHit sh:response.getHits().getHits()){

System.err.println(sh.getHighlightFields().get("value").getFragments()[0]);

Food food= JSON.parseObject(sh.getSourceAsString(),Food.class);

//获取高亮的结果

food.setValue(sh.getHighlightFields().get("value").getFragments()[0].string());

foods.add(food);

}

return R.ok(foods);

}e.Axios交互

querySearchAsync(qmsg, cb) {

console.log(qmsg);

var that=this;

//axios发起网络请求

//搜索建议请求

that.$axios.get("http://localhost:8888/searchsuggest.do?msg="+qmsg).then(function(r){

if(r.data.code==1){

cb(r.data.data);

//请求高亮查询接口

that.$axios.get("http://localhost:8888/searchhight.do?msg="+qmsg).then(function(res){

if(res.data.code==1){

that.searchdata=res.data.data;

}else{

that.$message("亲,模糊搜索暂无数据");

}

})

}else{

this.$message("亲,搜索建议暂无数据");

}

})

}五、分布式唯一id生成器

1.雪花算法

2.百度

3.美团

比较主流的雪花算法:

7144

7144

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?