Error when deploying a Spark job on Kubernetes

Deploying with the official example fails:

`21/07/14 02:19:14 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

21/07/14 02:19:15 WARN DependencyUtils: Local jar /opt/spark/examples/jars/spark-examples_2.12-3.1.1.jar does not exist, skipping.

**Error: Failed to load class org.apache.spark.examples.SparkPi.**

21/07/14 02:19:15 INFO ShutdownHookManager: Shutdown hook called

21/07/14 02:19:15 INFO ShutdownHookManager: Deleting directory /tmp/spark-531eef47-e7c2-4632-9232-e45f68ac0396

`

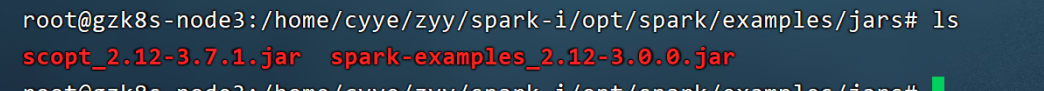

Copy the files out of the container to check the actual file paths:

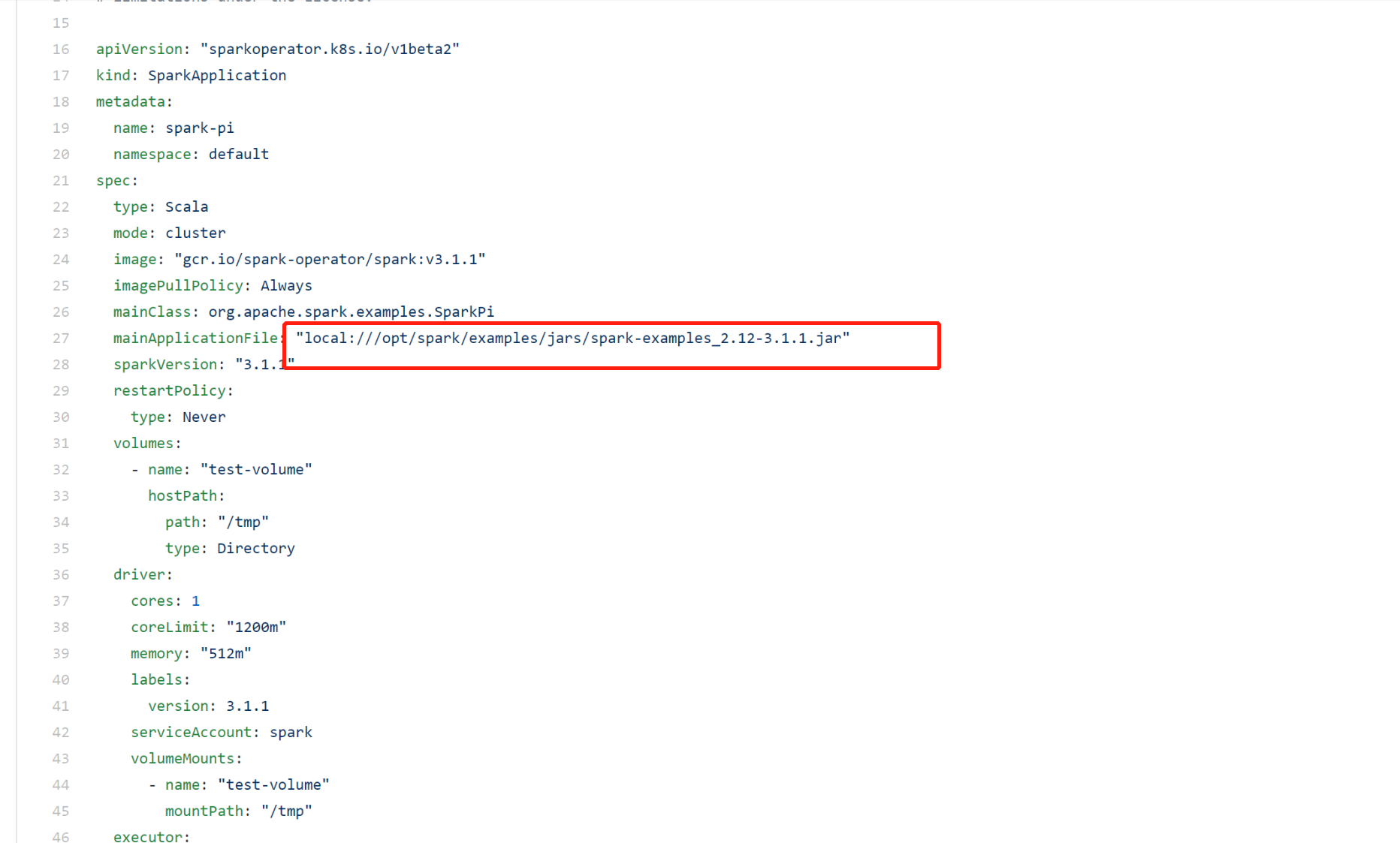

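The path in the official YAML:

Comparing the two shows that both the jar name and the path in the YAML are wrong. The log above confirms that the job looked for spark-examples_2.12-3.1.1.jar, which does not exist in the image.

Changing mainApplicationFile: to /opt/spark/examples/jars/spark-examples_2.12-3.0.0.jar fixes the problem.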

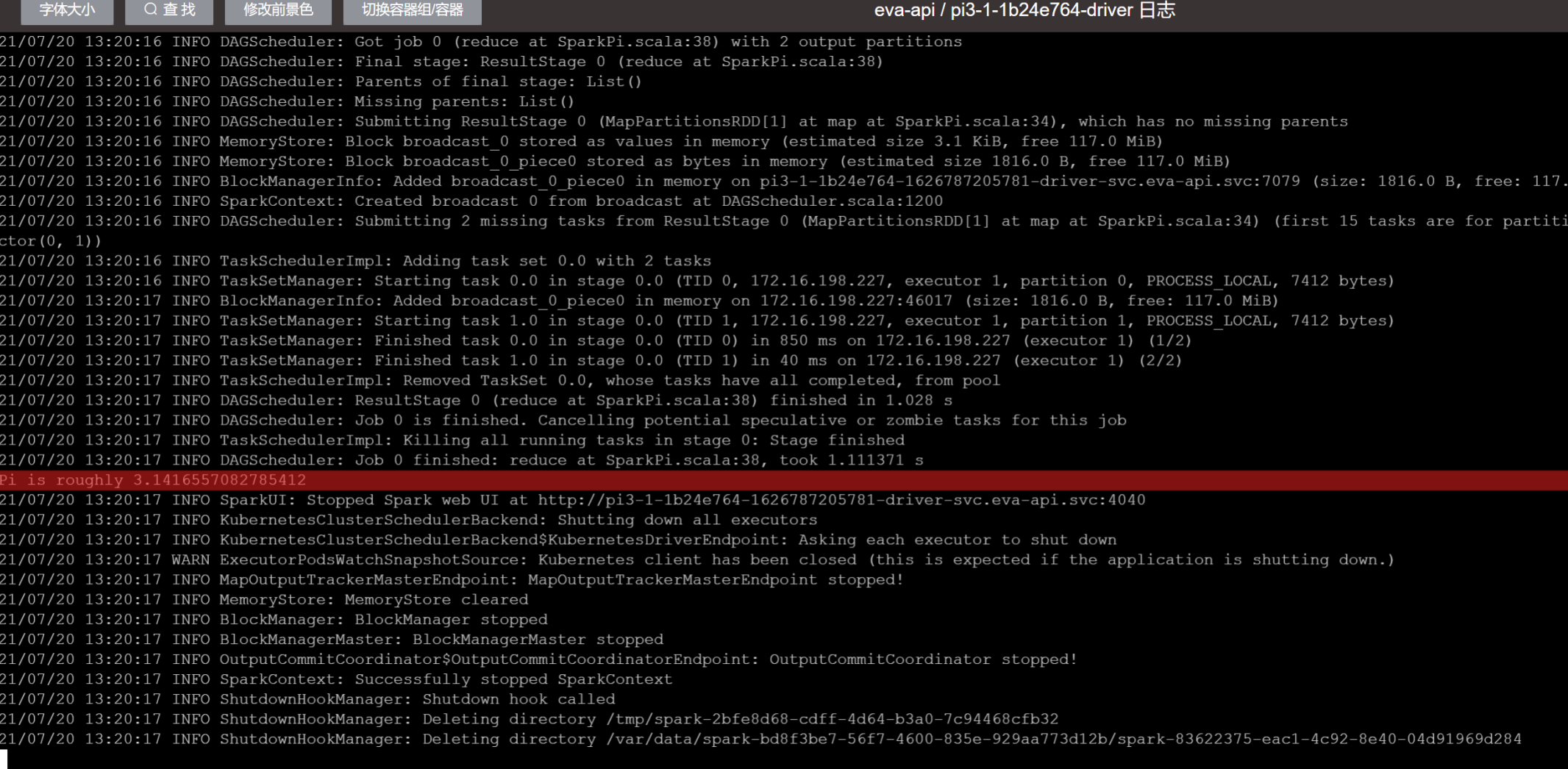
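The fix above can be sketched as a manifest fragment. This is only a minimal illustration, assuming the job is submitted as a `SparkApplication` resource (the kind that uses the `mainApplicationFile` field); all other fields are omitted, and the `local://` scheme is an assumption based on the usual sample manifest, since the post gives only the bare path.

```yaml
# Fragment of a SparkApplication manifest; unrelated fields omitted.
spec:
  mainClass: org.apache.spark.examples.SparkPi
  # Before (per the error log): .../spark-examples_2.12-3.1.1.jar,
  # which is not present in the image.
  # After: the jar actually found in the container.
  # Assumption: the local:// scheme marks a file already inside the image;
  # the original post lists only the path after the scheme.
  mainApplicationFile: "local:///opt/spark/examples/jars/spark-examples_2.12-3.0.0.jar"
```

The jar version in the file name has to match whatever the image actually ships, so if a different Spark image is used, check its `/opt/spark/examples/jars/` directory first instead of copying this value.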

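Summary: deploying a Spark job to a Kubernetes cluster with the official example failed because the jar name and file path in the YAML were incorrect. The fix is to point the jar path at /opt/spark/examples/jars/spark-examples_2.12-3.0.0.jar.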
