1. Create a Maven project

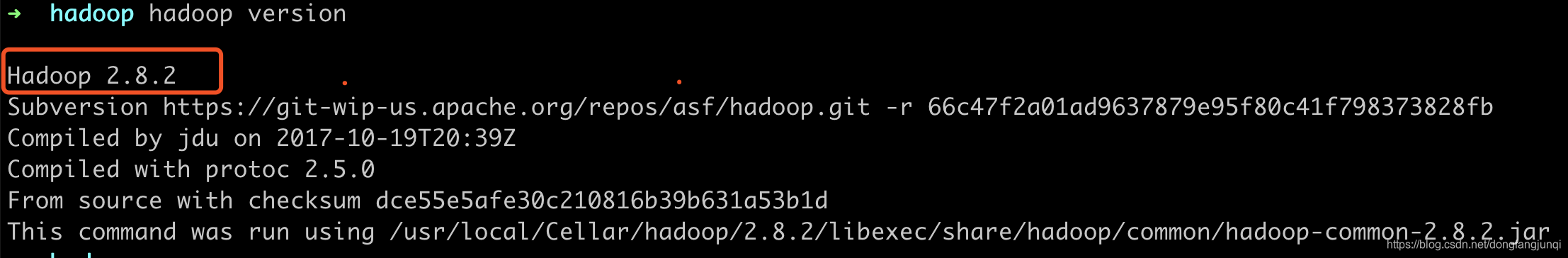

Add the Maven dependency that matches your Hadoop version. I am running 2.8.2, so I use the 2.8.2 dependency:

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.8.2</version>
</dependency>
```
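After Maven resolves the dependency, you can confirm that the Hadoop classes are actually on the classpath by trying to load one. The sketch below is a hypothetical helper (`ClasspathCheck` is not part of Hadoop) that uses only plain JDK reflection. It checks that `org.apache.hadoop.fs.FileSystem`, the class used later in this post, can be found. It does not check that a cluster is reachable.

```java
public class ClasspathCheck {

    // Returns true if the named class can be loaded by this class's loader.
    // Passing initialize = false loads the class without running its static initializers.
    public static boolean isOnClasspath(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Prints true only if hadoop-client (which pulls in hadoop-common) is on the classpath.
        System.out.println("Hadoop FileSystem on classpath: "
                + isOnClasspath("org.apache.hadoop.fs.FileSystem"));
    }
}
```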

2. Write code that operates on Hadoop (HDFS)

2.1 Create a Configuration

```java
Configuration configuration = new Configuration();
```

2.2 Obtain a FileSystem

```java
FileSystem fileSystem = FileSystem.get(new URI("hdfs://localhost:8020"), configuration);
```
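`FileSystem.get(URI, Configuration)` picks the `FileSystem` implementation from the URI's scheme (`hdfs` maps to HDFS's `DistributedFileSystem`). It then uses the host and port to reach the NameNode. The sketch below uses only `java.net.URI` to show how `hdfs://localhost:8020` breaks down into those parts.

The class `HdfsUriParts` is hypothetical. The port is an assumption as well: it must be whatever RPC port your NameNode listens on, which is commonly set through `fs.defaultFS` in `core-site.xml`.

```java
import java.net.URI;

public class HdfsUriParts {

    // Splits an HDFS URI into the pieces FileSystem.get() relies on:
    // the scheme selects the implementation, host and port locate the NameNode.
    // URI.create throws an unchecked IllegalArgumentException on malformed input.
    public static String[] parts(String uri) {
        URI u = URI.create(uri);
        return new String[] { u.getScheme(), u.getHost(), String.valueOf(u.getPort()) };
    }

    public static void main(String[] args) {
        String[] p = parts("hdfs://localhost:8020");
        System.out.println("scheme=" + p[0] + ", host=" + p[1] + ", port=" + p[2]);
    }
}
```

Running `main` prints `scheme=hdfs, host=localhost, port=8020`. If the URI has no explicit port, `getPort()` returns -1.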

2.3 Common HDFS API operations as JUnit tests

```java
package com.immoc;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.*;
import org.apache.hadoop.io.IOUtils;
import org.apache.hadoop.util.Progressable;
import org.junit.After;
import org.junit.Before;
import org.junit.Test;

import java.io.BufferedInputStream;
import java.io.File;
import java.io.FileInputStream;
import java.io.InputStream;
import java.net.URI;

/**
 * JUnit tests for common HDFS Java API operations.
 */
public class HDFSApp {

    public static final String HDFS_PATH = "hdfs://localhost:8020";

    FileSystem fileSystem = null;
    Configuration configuration = null;

    @Before
    public void setUp() throws Exception {
        System.out.println("----setUp----");
        configuration = new Configuration();
        // configuration.set("dfs.replication", "1");

        /*
         * Build a client object for accessing HDFS.
         * 1st argument: the HDFS URI
         * 2nd argument: configuration parameters specified by the client
         * 3rd argument: the client identity, i.e. the user name
         */
        fileSystem = FileSystem.get(new URI(HDFS_PATH), configuration, "martin");
    }

    /**
     * Create a directory on HDFS.
     */
    @Test
    public void mkdir() throws Exception {
        fileSystem.mkdirs(new Path("/hdfsapi/test"));
    }

    /**
     * Print the contents of an HDFS file.
     */
    @Test
    public void text() throws Exception {
        try (FSDataInputStream in = fileSystem.open(new Path("/README.txt"))) {
            // close = false keeps System.out open; try-with-resources closes the input stream
            IOUtils.copyBytes(in, System.out, 1024, false);
        }
    }

    /**
     * Create a file.
     */
    @Test
    public void create() throws Exception {
        try (FSDataOutputStream out = fileSystem.create(new Path("/hdfsapi/test/b.txt"))) {
            // writeUTF prepends a 2-byte length to the string bytes
            out.writeUTF("hello melonydi");
            out.flush();
        }
    }

    /**
     * Rename a file on HDFS.
     */
    @Test
    public void rename() throws Exception {
        Path oldPath = new Path("/hdfsapi/test/b.txt");
        Path newPath = new Path("/hdfsapi/test/c.txt");
        boolean result = fileSystem.rename(oldPath, newPath);
        System.out.println(result);
    }

    /**
     * Copy a local file to HDFS.
     */
    @Test
    public void copyFromLocalFile() throws Exception {
        Path src = new Path("/Users/martin/Downloads/IMG_1471.jpeg");
        Path dst = new Path("/hdfsapi/test/");
        fileSystem.copyFromLocalFile(src, dst);
    }

    /**
     * Copy a large local file to HDFS, printing progress.
     */
    @Test
    public void copyFromLocalBigFile() throws Exception {
        InputStream in = new BufferedInputStream(new FileInputStream(
                new File("/Users/martin/Documents/study/算法/动态规划/DP1_9C.wmv")));
        FSDataOutputStream out = fileSystem.create(new Path("/hdfsapi/test/DP1_9C.wmv"),
                new Progressable() {
                    @Override
                    public void progress() {
                        System.out.print(".");  // invoked by the client as it writes data
                    }
                });
        // close = true closes both streams when the copy finishes or fails
        IOUtils.copyBytes(in, out, 4096, true);
    }

    /**
     * Copy an HDFS file to the local filesystem (download).
     */
    @Test
    public void copyToLocalFile() throws Exception {
        Path src = new Path("/hdfsapi/test/IMG_1471.jpeg");
        Path dst = new Path("/Users/martin/");
        fileSystem.copyToLocalFile(src, dst);
    }

    /**
     * List the files and directories directly under a directory.
     */
    @Test
    public void listFiles() throws Exception {
        FileStatus[] statuses = fileSystem.listStatus(new Path("/hdfsapi/test"));
        for (FileStatus file : statuses) {
            String isDir = file.isDirectory() ? "directory" : "file";
            String permission = file.getPermission().toString();
            short replication = file.getReplication();
            long length = file.getLen();
            String path = file.getPath().toString();
            System.out.println(isDir + "\t" + permission + "\t" + replication + "\t" + length + "\t" + path);
        }
    }

    /**
     * Recursively list all files under the target directory.
     * listFiles(path, true) returns files only, never directories.
     */
    @Test
    public void listFilesRecursive() throws Exception {
        RemoteIterator<LocatedFileStatus> files = fileSystem.listFiles(new Path("/hdfsapi/test"), true);
        while (files.hasNext()) {
            LocatedFileStatus file = files.next();
            String isDir = file.isDirectory() ? "directory" : "file";
            String permission = file.getPermission().toString();
            short replication = file.getReplication();
            long length = file.getLen();
            String path = file.getPath().toString();
            System.out.println(isDir + "\t" + permission + "\t" + replication + "\t" + length + "\t" + path);
        }
    }

    /**
     * Print the block locations of a file: DataNode address, block offset, block length and host.
     */
    @Test
    public void getFileBlockLocation() throws Exception {
        FileStatus fileStatus = fileSystem.getFileStatus(new Path("/hdfsapi/test/DP1_9C.wmv"));
        BlockLocation[] blocks = fileSystem.getFileBlockLocations(fileStatus, 0, fileStatus.getLen());
        for (BlockLocation block : blocks) {
            for (String name : block.getNames()) {
                System.out.println(name + " : " + block.getOffset() + " : " + block.getLength() + " : " + block.getHosts()[0]);
            }
        }
    }

    /**
     * Print the dfs.replication value seen by the client configuration.
     */
    @Test
    public void testReplication() {
        System.out.println(configuration.get("dfs.replication"));
    }

    /**
     * Delete a path; the second argument enables recursive deletion for directories.
     */
    @Test
    public void delete() throws Exception {
        boolean result = fileSystem.delete(new Path("/hdfsapi/test/IMG_1471.jpeg"), true);
        System.out.println(result);
    }

    @After
    public void tearDown() throws Exception {
        if (fileSystem != null) {
            fileSystem.close();  // release the client's resources
        }
        configuration = null;
        fileSystem = null;
        System.out.println("----tearDown----");
    }
}
```
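The `create()` test writes with `out.writeUTF(...)`. `FSDataOutputStream` extends `java.io.DataOutputStream`, so `writeUTF` stores a 2-byte big-endian length followed by the string in modified UTF-8. Reading the file back (for example with `hdfs dfs -cat`) will therefore show two extra bytes in front of the text.

The JDK-only sketch below compares that encoding with writing the raw UTF-8 bytes. The class `WriteUtfDemo` is hypothetical and does not touch HDFS.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class WriteUtfDemo {

    // Bytes produced by DataOutputStream.writeUTF: 2-byte length prefix + modified UTF-8.
    public static byte[] withWriteUtf(String s) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.writeUTF(s);
        } catch (IOException e) {
            throw new RuntimeException(e);  // not expected for an in-memory stream
        }
        return buf.toByteArray();
    }

    // Bytes produced by writing the string's UTF-8 encoding directly, with no prefix.
    public static byte[] withRawBytes(String s) {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(buf)) {
            out.write(s.getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
        return buf.toByteArray();
    }

    public static void main(String[] args) {
        String text = "hello melonydi";
        System.out.println("writeUTF:  " + withWriteUtf(text).length + " bytes");
        System.out.println("raw UTF-8: " + withRawBytes(text).length + " bytes");
    }
}
```

For "hello melonydi" (14 ASCII characters) this prints 16 bytes for `writeUTF` and 14 for the raw write. If the HDFS file is meant to hold plain text only, use `out.write("hello melonydi".getBytes(StandardCharsets.UTF_8))` in `create()`, which avoids the prefix.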
