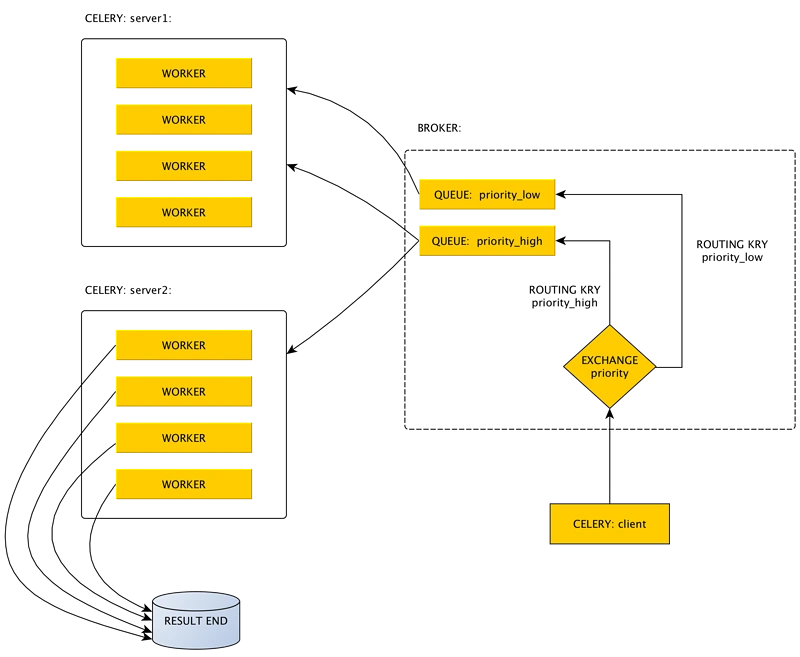

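Deploying Celery distributed tasks across multiple machines: one publisher, multiple consumers (each consumer handles different tasks)

The diagram above shows the layout.

The main components are:

Task publisher: publishes tasks to the broker

Broker: usually a message-queue store, such as Redis

Task consumer: fetches tasks from the broker, executes them, and returns the results

Result store: paired with the broker; consumers can report results back to the result-store queue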
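To make the four roles concrete before the real code, here is a toy model built only on the Python standard library. It is not Celery: the `broker` dict, `result_store`, `publish`, `consume`, and `run_demo` are all hypothetical names, and an in-process `queue.Queue` stands in for Redis. It only sketches the flow of publisher to broker to consumer to result store.

```python
import queue
import threading

# Toy stand-ins (not Celery): one "broker" queue per task type and a shared result store.
broker = {"add": queue.Queue(), "multiply": queue.Queue()}
result_store = {}
result_lock = threading.Lock()

HANDLERS = {"add": lambda x, y: x + y, "multiply": lambda x, y: x * y}


def publish(task_name, task_id, args):
    """Publisher: put a task message on the queue for its task type."""
    broker[task_name].put((task_id, args))


def consume(task_name):
    """Consumer: take tasks from one queue, run them, and store the results."""
    while True:
        message = broker[task_name].get()
        if message is None:  # sentinel: stop this consumer
            break
        task_id, args = message
        value = HANDLERS[task_name](*args)
        with result_lock:
            result_store[task_id] = value


def run_demo():
    """Start one consumer per queue, publish a few tasks, and return the results."""
    workers = [threading.Thread(target=consume, args=(name,)) for name in broker]
    for worker in workers:
        worker.start()
    for i in range(1, 4):
        publish("add", f"add-{i}", (i, i))
        publish("multiply", f"mul-{i}", (i, i))
    for name in broker:
        broker[name].put(None)  # queued after the real tasks, so they finish first
    for worker in workers:
        worker.join()
    return dict(result_store)


if __name__ == "__main__":
    print(run_demo())
```

In the real setup below, Redis plays the part of both `broker` and `result_store`, and each consumer is a Celery worker process on its own machine.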

Straight to the code.

Task publisher:

main.py

from celery import Celery

import celery_config

if __name__ == '__main__':
    try:
        app = Celery('tasks', broker=celery_config.broker_url)
        # celery_config is the same configuration used on server A; it can be copied over verbatim
        app.config_from_object(celery_config)
        print(app)
        # 1. send_task() takes the task name directly; an earlier attempt with tasks.say_hello did not succeed
        # 2. If the task has a queue, it is best to specify it
        # 3. Arguments can be passed directly via args
        for i in range(1, 10):
            print(i)
            app.send_task("tasks.add", queue=celery_config.task_queues[0], args=(i, i))
            app.send_task("tasks.multiply", queue=celery_config.task_queues[1], args=(i, i))
    except Exception as e:
        print(e)
        print("error")

Publisher configuration file celery_config.py

from kombu import Exchange, Queue

# Celery configuration

# Broker address
broker_url = r"redis://IP:6379/5"
# broker_url = r"redis://127.0.0.1:6379/5"

# Result store address
# CELERY_RESULT_BACKEND = r"redis://127.0.0.1:6379/3"
result_backend = r"redis://IP:6379/6"
# result_backend = r"redis://127.0.0.1:6379/6"

# Task serialization format; the default is json since version 4.0
# CELERY_TASK_SERIALIZER ='json'
task_serializer = "json"

# Serialization format for task results
result_serializer = 'json'

# How long task results are kept (seconds); older results are deleted
result_expires = 60 * 60 * 5

# Content types (serializers) that are accepted. Default: {'json'} (set, list, or tuple).
# Can be changed to ['application/json'] if needed
accept_content = {'json'}

# Time zone
timezone = "Asia/Shanghai"
enable_utc = True

worker_concurrency = 5  # number of concurrent worker processes
worker_max_tasks_per_child = 5  # tasks a pool process executes before it is replaced

# task_queues and task_routes need to be defined as a pair
task_queues = (
    Queue('priority_low', exchange=Exchange('priority', type='direct'), routing_key='priority_low'),
    Queue('priority_high', exchange=Exchange('priority', type='direct'), routing_key='priority_high'),
)

task_routes = ([
    ('tasks.add', {'queue': 'priority_low'}),
    ('tasks.multiply', {'queue': 'priority_high'}),
],)

# task_annotations = {
#     'tasks.add': {'rate_limit': '10/m'}
# }
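The note that `task_queues` and `task_routes` go together can be checked mechanically. The helper below is a hypothetical sketch, not part of Celery: it takes plain queue names and `(task name, options)` route pairs shaped like the ones in the config, and reports any route that points at a queue that was never declared.

```python
def undeclared_route_queues(queue_names, routes):
    """Return the queues that routes refer to but that are not declared."""
    declared = set(queue_names)
    missing = {opts["queue"] for _task, opts in routes if opts["queue"] not in declared}
    return sorted(missing)


# Plain-data copies of the values in celery_config.py above.
queue_names = ["priority_low", "priority_high"]
routes = [
    ("tasks.add", {"queue": "priority_low"}),
    ("tasks.multiply", {"queue": "priority_high"}),
]

if __name__ == "__main__":
    print(undeclared_route_queues(queue_names, routes))
```

Against the real module, the inputs could be taken as `[q.name for q in celery_config.task_queues]` and `celery_config.task_routes[0]`, assuming the config keeps the tuple-of-list shape shown above.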

Suppose the task publisher runs on machine A: 114.10.112.1

The consumer code can run on machine B: 114.10.112.3 and machine C: 114.10.112.3

Task consumer code tasks.py

from celery import Celery
from celery import Task

app = Celery('tasks')
app.config_from_object('celery_config')


class CallbackTask(Task):
    # Called by the worker after the task returns successfully
    def on_success(self, retval, task_id, args, kwargs):
        print("----%s is done" % task_id)

    # Called by the worker when the task raises; intentionally a no-op here
    def on_failure(self, exc, task_id, args, kwargs, einfo):
        pass


@app.task(base=CallbackTask)
def add(x, y):
    return x + y


@app.task(base=CallbackTask)
def multiply(x, y):
    return x * y

The consumer can use the very same celery_config.py as the publisher.


## Running

- On each consumer machine, run

celery -A tasks worker -l info

- Run the publisher code main.py

- Check the output on the consumer, for example:

[2022-06-28 01:18:29,584: INFO/ForkPoolWorker-32] Task tasks.add[7e13e4b8-c1b7-43b8-a2a7-51c1b2d1030e] succeeded in 0.0162409320474s: 60

[2022-06-28 01:18:29,593: INFO/MainProcess] Received task: tasks.multiply[2b31ef5f-bab3-4180-8354-8f8d9bfec5b7]

[2022-06-28 01:18:29,603: INFO/ForkPoolWorker-32] Task tasks.multiply[2b31ef5f-bab3-4180-8354-8f8d9bfec5b7] succeeded in 0.00781027879566s: 2401

[2022-06-28 01:18:35,576: INFO/ForkPoolWorker-32] Task tasks.add[ccdf0e93-e251-49ab-9fed-f1243d62d704] succeeded in 0.00809998437762s: 62

[2022-06-28 01:18:41,451: INFO/ForkPoolWorker-32] Task tasks.add[95f7e944-5212-414e-b9f7-1b7b6bd9767f] succeeded in 0.00829892139882s: 64

[2022-06-28 01:18:47,467: INFO/ForkPoolWorker-32] Task tasks.add[979d91dc-3383-4da0-8421-3b5022dc32d3] succeeded in 0.00784247461706s: 66

[2022-06-28 01:18:52,999: INFO/ForkPoolWorker-33] Task tasks.add[2d6607a2-7827-4004-bcd2-434e5a3d2e55] succeeded in 0.0149132078514s: 68

[2022-06-28 01:18:59,003: INFO/ForkPoolWorker-33] Task tasks.add[f1edfbb2-868b-480a-926e-cfccb7f0511c] succeeded in 0.00704497192055s: 70

[2022-06-28 01:19:05,017: INFO/ForkPoolWorker-33] Task tasks.add[14c57953-c245-4b7b-81de-fa3fe9a8fabb] succeeded in 0.00671916175634s: 72

[2022-06-28 01:19:11,029: INFO/ForkPoolWorker-33] Task tasks.add[e59431df-f8f8-4c5f-a6f2-b6a593cea887] succeeded in 0.00702722277492s: 74

[2022-06-28 01:19:17,047: INFO/ForkPoolWorker-33] Task tasks.add[c644e4a4-ac3d-4867-a368-9c6dec327447] succeeded in 0.0072121405974s: 76

[2022-06-28 01:19:23,586: INFO/ForkPoolWorker-34] Task tasks.add[bd9a7dd0-9dda-4085-af66-a42ad5c4236e] succeeded in 0.015624540858s: 78

[2022-06-28 01:19:29,578: INFO/ForkPoolWorker-34] Task tasks.add[e26475a6-b6eb-446a-818a-cab551acc0b6] succeeded in 0.0080521190539s: 80

[2022-06-28 01:19:35,578: INFO/ForkPoolWorker-34] Task tasks.add[1f9760be-db5b-4963-b908-b1f2fb61cec8] succeeded in 0.00765205454081s: 82

[2022-06-28 01:19:41,577: INFO/ForkPoolWorker-34] Task tasks.add[96a27b4a-26bf-4d9e-8bea-8ecc3674cfab] succeeded in 0.00761856790632s: 84

[2022-06-28 01:19:47,577: INFO/ForkPoolWorker-34] Task tasks.add[35b507c5-1e30-4c00-a892-b43e04fde8ce] succeeded in 0.00773773994297s: 86

[2022-06-28 01:19:53,239: INFO/ForkPoolWorker-35] Task tasks.add[e7a3a2cc-9783-47e3-b46c-f28bce2e25be] succeeded in 0.0138604240492s: 88

[2022-06-28 01:19:59,264: INFO/ForkPoolWorker-35] Task tasks.add[af855ecf-b338-4036-be65-be4873811ae6] succeeded in 0.00668231397867s: 90

[2022-06-28 01:20:05,083: INFO/ForkPoolWorker-35] Task tasks.add[53946d25-c546-4b82-9fd5-843c4a296644] succeeded in 0.00653785746545s: 92

[2022-06-28 01:20:11,096: INFO/ForkPoolWorker-35] Task tasks.add[d06681d6-f2fc-4595-a879-7763af5f9355] succeeded in 0.00734963081777s: 94

[2022-06-28 01:20:17,107: INFO/ForkPoolWorker-35] Task tasks.add[98542ae6-6bf3-4894-8c3b-ee4d298f12c8] succeeded in 0.00668479222804s: 96

[2022-06-28 01:20:22,628: INFO/ForkPoolWorker-36] Task tasks.add[8f3c80e1-b84d-4a07-9b9c-0b369ceca9c3] succeeded in 0.014791207388s: 98

[2022-06-28 01:28:38,974: INFO/MainProcess] Received task: tasks.add[5acbc926-2a1f-4e8e-bf61-a3bff440d2e2]

[2022-06-28 01:28:38,982: INFO/ForkPoolWorker-36] Task tasks.add[5acbc926-2a1f-4e8e-bf61-a3bff440d2e2] succeeded in 0.00682080816478s: 2

[2022-06-28 01:28:39,106: INFO/MainProcess] Received task: tasks.multiply[dd50cc15-8575-4c6f-8967-c4981048075f]

[2022-06-28 01:28:39,115: INFO/ForkPoolWorker-36] Task tasks.multiply[dd50cc15-8575-4c6f-8967-c4981048075f] succeeded in 0.00673878751695s: 1

[2022-06-28 01:28:39,150: INFO/MainProcess] Received task: tasks.add[43761afa-a0d1-4be0-9600-df7a4d24fe9e]

[2022-06-28 01:28:39,220: INFO/MainProcess] Received task: tasks.multiply[fc8fed3c-ad94-4347-9cde-63dacd11f6cc]

[2022-06-28 01:28:39,228: INFO/ForkPoolWorker-36] Task tasks.multiply[fc8fed3c-ad94-4347-9cde-63dacd11f6cc] succeeded in 0.00698931328952s: 4

[2022-06-28 01:28:39,288: INFO/MainProcess] Received task: tasks.add[d29fccd3-2f8d-4092-8630-b453d6ae7f4f]

[2022-06-28 01:28:39,343: INFO/MainProcess] Received task: tasks.multiply[0e8bf18b-0d1f-48fc-8a87-dc21dbf51d43]

[2022-06-28 01:28:39,352: INFO/ForkPoolWorker-36] Task tasks.multiply[0e8bf18b-0d1f-48fc-8a87-dc21dbf51d43] succeeded in 0.00705090351403s: 9

[2022-06-28 01:28:39,386: INFO/MainProcess] Received task: tasks.add[5f89d120-3333-45ef-8f2b-ec7445e8f433]

[2022-06-28 01:28:39,430: INFO/MainProcess] Received task: tasks.multiply[de2d4151-ca05-4af4-930c-ca529bec4145]

[2022-06-28 01:28:39,502: INFO/MainProcess] Received task: tasks.add[a0b176c3-e3f0-4f82-a6ea-22d4c0bada6b]

[2022-06-28 01:28:39,511: INFO/ForkPoolWorker-28] Task tasks.multiply[de2d4151-ca05-4af4-930c-ca529bec4145] succeeded in 0.00704519171268s: 16

[2022-06-28 01:28:39,518: INFO/MainProcess] Received task: tasks.multiply[e4ad007e-9567-44f9-a62c-b93f9516d9dc]

[2022-06-28 01:28:39,536: INFO/ForkPoolWorker-37] Task tasks.multiply[e4ad007e-9567-44f9-a62c-b93f9516d9dc] succeeded in 0.0156515864655s: 25

[2022-06-28 01:28:39,570: INFO/MainProcess] Received task: tasks.add[dd601cec-40fa-4451-aaba-bb3b340dcc42]

[2022-06-28 01:28:39,686: INFO/MainProcess] Received task: tasks.multiply[3d340759-2552-4b45-a08a-7c93b4b81ce3]

[2022-06-28 01:28:39,695: INFO/ForkPoolWorker-37] Task tasks.multiply[3d340759-2552-4b45-a08a-7c93b4b81ce3] succeeded in 0.00707320123911s: 36

[2022-06-28 01:28:39,696: INFO/MainProcess] Received task: tasks.add[240b1951-92b3-4c36-a6bf-2dee2d57554a]

[2022-06-28 01:28:39,734: INFO/MainProcess] Received task: tasks.multiply[fae3dc33-ad25-4fd4-ba2a-65f6b6477915]

[2022-06-28 01:28:39,743: INFO/ForkPoolWorker-37] Task tasks.multiply[fae3dc33-ad25-4fd4-ba2a-65f6b6477915] succeeded in 0.00721634179354s: 49

[2022-06-28 01:28:39,778: INFO/MainProcess] Received task: tasks.add[c8a32c9a-51ea-4e8c-b7c1-bab9b25ef81c]

[2022-06-28 01:28:39,824: INFO/MainProcess] Received task: tasks.multiply[0b98e952-72de-46c9-a5e6-711e1b7cc3b3]

[2022-06-28 01:28:39,833: INFO/ForkPoolWorker-37] Task tasks.multiply[0b98e952-72de-46c9-a5e6-711e1b7cc3b3] succeeded in 0.00693809334189s: 64

[2022-06-28 01:28:39,871: INFO/MainProcess] Received task: tasks.add[a35d9339-45ac-48e7-b2ed-6c30e44709fe]

[2022-06-28 01:28:39,922: INFO/MainProcess] Received task: tasks.multiply[90553245-97bd-40d5-9fe4-35ac81744225]

[2022-06-28 01:28:39,931: INFO/ForkPoolWorker-37] Task tasks.multiply[90553245-97bd-40d5-9fe4-35ac81744225] succeeded in 0.00734540447593s: 81

[2022-06-28 01:28:45,716: INFO/ForkPoolWorker-39] Task tasks.add[43761afa-a0d1-4be0-9600-df7a4d24fe9e] succeeded in 0.0192958954722s: 4

[2022-06-28 01:28:51,331: INFO/ForkPoolWorker-39] Task tasks.add[d29fccd3-2f8d-4092-8630-b453d6ae7f4f] succeeded in 0.00756212696433s: 6

[2022-06-28 01:28:57,276: INFO/ForkPoolWorker-39] Task tasks.add[5f89d120-3333-45ef-8f2b-ec7445e8f433] succeeded in 0.00807804707438s: 8

[2022-06-28 01:29:03,454: INFO/ForkPoolWorker-39] Task tasks.add[a0b176c3-e3f0-4f82-a6ea-22d4c0bada6b] succeeded in 0.00738424248993s: 10

[2022-06-28 01:29:09,755: INFO/ForkPoolWorker-39] Task tasks.add[dd601cec-40fa-4451-aaba-bb3b340dcc42] succeeded in 0.00734733697027s: 12

[2022-06-28 01:29:15,777: INFO/ForkPoolWorker-40] Task tasks.add[240b1951-92b3-4c36-a6bf-2dee2d57554a] succeeded in 0.0133697129786s: 14

[2022-06-28 01:29:21,593: INFO/ForkPoolWorker-40] Task tasks.add[c8a32c9a-51ea-4e8c-b7c1-bab9b25ef81c] succeeded in 0.00677690654993s: 16

[2022-06-28 01:29:27,102: INFO/ForkPoolWorker-40] Task tasks.add[a35d9339-45ac-48e7-b2ed-6c30e44709fe] succeeded in 0.00660693272948s: 18
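Started without a queue option, a worker consumes every queue listed in `task_queues`, so each machine would handle both task types. To get one task type per consumer, as the title describes, one option is to restrict each worker with the `-Q` option. The sketch below does this from Python; `build_worker_argv` and `start_worker` are hypothetical helper names, and it assumes a Celery version that provides `app.worker_main`.

```python
def build_worker_argv(queues, loglevel="info"):
    """Build the argument list for a worker limited to the given queues."""
    return ["worker", "-Q", ",".join(queues), "-l", loglevel]


def start_worker(queues):
    """Start a worker for the given queues; blocks until the worker shuts down."""
    # Imported lazily so build_worker_argv can be used without Celery installed.
    from tasks import app  # the consumer module shown earlier

    app.worker_main(argv=build_worker_argv(queues))
```

Under this assumption, machine B might call `start_worker(["priority_low"])` and machine C `start_worker(["priority_high"])`; which machine takes which queue is an arbitrary choice here. The command-line equivalent is `celery -A tasks worker -Q priority_low -l info`.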

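This article shows how to deploy Celery distributed tasks across multiple machines in a one-publisher, multiple-consumer pattern. The publisher sends tasks to the broker (such as Redis); consumers fetch tasks from the broker and execute them, and the results are kept in the result store. The publisher and consumer code live on different machines and share the configuration file `celery_config.py`. Start the workers on the consumer machines, run `main.py` on the publisher machine to publish tasks, and confirm execution from the consumer logs.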
