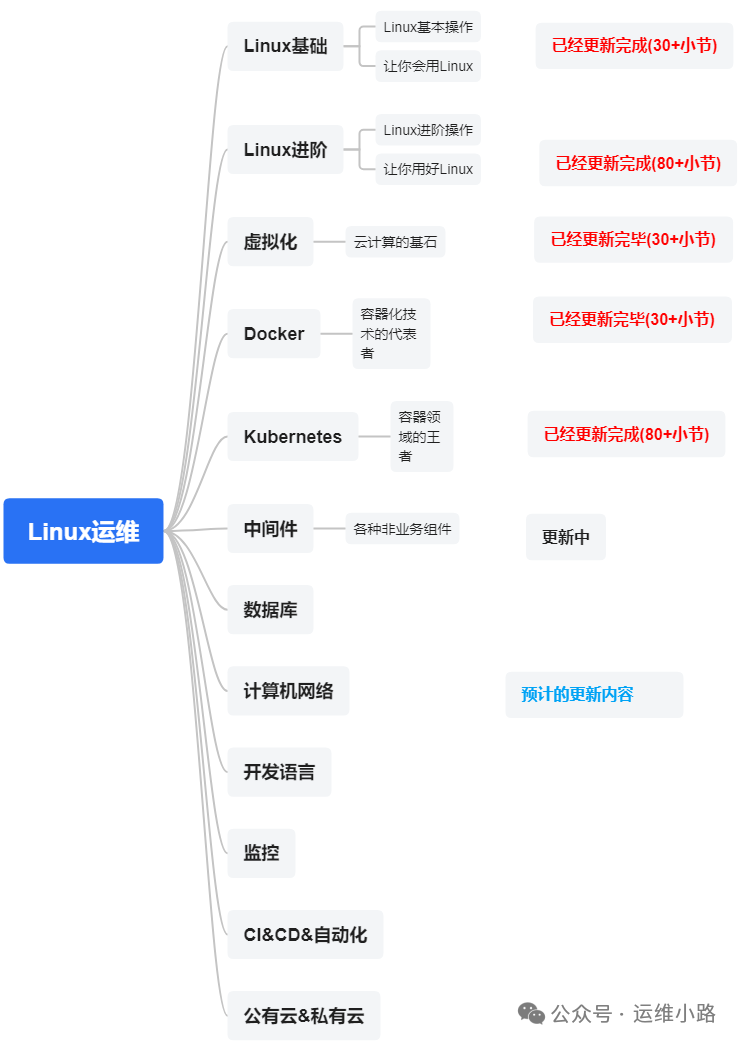

作者介绍:简历上没有一个精通的运维工程师。请点击上方的蓝色《运维小路》关注我,下面的思维导图也是预计更新的内容和当前进度(不定时更新)。

我们上一章介绍了中间件:Zookeeper,本章将介绍另外一个中间件:Kafka。目前这2个中间件都是基于JAVA语言的。

本篇文章大概是我3年前写的,当时写这篇文章的背景是一个容器化部署的Kafka集群,其中一个节点宕机以后无法恢复,所以想了解下这个Kafak集群崩溃以后的恢复过程(下面的内容参考原文做了部分修改和脱敏)。

背景

在日常运维当中,会经常遇到Kafka宕机的情况,有的时候可能宕机了很久才发现,有的时候可能宕机的Kafka节点数据已经丢失,也可能数据出现了损坏,宕机的时候Kafka本地的数据可能很大,也可能很小,在不同的时候恢复是否有什么相同或者异同的地方,是否有什么兜底方案,在确保任何情况下都能保证恢复Kafka的情况。

有一个类似的CASE:是数据大概有700g左右,在同步数据需要花费10分钟以上,而Kubernetes的健康检查的程序会在程序启动10分钟以后开始执行,导致每次都因为数据未同步完,健康检查无法通过,触发Pod重建,形成死循环。

宕机情况1

broker节点重启数据加载过程:原有数据大概50G,宕机10个小时左右,可模拟节点宕机或者维护下线当节点恢复以后,恢复节点调度,Pod重新拉起的过程。

#启动服务,连接zookeeper

[2022-12-14 02:04:59,608] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2022-12-14 02:04:59,868] INFO starting (kafka.server.KafkaServer)

[2022-12-14 02:04:59,869] INFO Connecting to zookeeper on x.x.x.x:2181/kafka/kafka (kafka.server.KafkaServer)

#读取本地配置文件

[2022-12-14 02:05:00,158] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = PLAINTEXT://x.x.x.x:xxx

#读取生产者状态,和加载生产者快照数据应该没什么先后顺序

[2022-12-14 02:05:00,249] INFO Loading logs. (kafka.log.LogManager)

[2022-12-14 02:05:00,311] INFO [Log partition=Barad_Ping-1, dir=/var/lib/kafka/data/topics] Loading producer state from offset 0 with message format version 2 (kafka.log.Log)

[2022-12-14 02:05:00,318] INFO [Log partition=Barad_Ping-1, dir=/var/lib/kafka/data/topics] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 26 ms (kafka.log.Log)

#加载生产者快照数据,和读取生产者状态应该没什么先后顺序

[2022-12-14 02:05:00,662] INFO [ProducerStateManager partition=xxxxx-1] Loading producer state from snapshot file '/var/lib/kafka/data/topics/xxxxxxx-1/00000000000000005390.snapshot' (kafka.log.ProducerStateManager)

[2022-12-14 02:05:00,662] INFO [Log partition=xxxxxxxx-1, dir=/var/lib/kafka/data/topics] Completed load of log with 1 segments, log start offset 5021 and log end offset 5390 in 2 ms (kafka.log.Log)

#数据加载完成

Logs loading complete in 1081 ms. (kafka.log.LogManager)

#初始化完成,开始比对注册到zk,并提供服务

[2022-12-14 02:05:01,336] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2022-12-14 02:05:01,336] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2022-12-14 02:05:01,518] INFO Awaiting socket connections on 0.0.0.0:xxx. (kafka.network.Acceptor)

[2022-12-14 02:05:01,542] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)

[2022-12-14 02:05:01,556] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 02:05:01,557] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 02:05:01,557] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 02:05:01,564] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2022-12-14 02:05:01,607] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2022-12-14 02:05:01,609] INFO Result of znode creation at /brokers/ids/0 is: OK (kafka.zk.KafkaZkClient)

[2022-12-14 02:05:01,610] INFO Registered broker 0 at path /brokers/ids/0 with addresses: ArrayBuffer(EndPoint(x.x.x.x,xxxx,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)

[2022-12-14 02:05:01,643] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 02:05:01,650] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 02:05:01,650] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 02:05:01,666] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2022-12-14 02:05:01,668] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2022-12-14 02:05:01,668] INFO [GroupMetadataManager brokerId=0] Removed 0 expired offsets in 0 milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[2022-12-14 02:05:01,677] INFO [ProducerId Manager 0]: Acquired new producerId block (brokerId:0,blockStartProducerId:39000,blockEndProducerId:39999) by writing to Zk with path version 40 (kafka.coordinator.transaction.ProducerIdManager)

[2022-12-14 02:05:01,691] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2022-12-14 02:05:01,693] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2022-12-14 02:05:01,693] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2022-12-14 02:05:01,762] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2022-12-14 02:05:01,823] WARN [Log partition=Barad_Comm-0, dir=/var/lib/kafka/data/topics] retention.ms for topic xxxxxx is set to 3600. It is smaller than message.timestamp.difference.max.ms's value 9223372036854775807. This may result in frequent log rolling. (kafka.log.Log)

[2022-12-14 02:05:01,837] INFO [SocketServer brokerId=0] Started processors for 1 acceptors (kafka.network.SocketServer)

[2022-12-14 02:05:01,839] INFO Kafka version : 1.1.1 (org.apache.kafka.common.utils.AppInfoParser)

[2022-12-14 02:05:01,839] INFO Kafka commitId : 8e07427ffb493498 (org.apache.kafka.common.utils.AppInfoParser)

[2022-12-14 02:05:01,840] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

#开始读取本地分区状态

[2022-12-14 02:05:02,304] INFO Replica loaded for partition tp_qemu_disk_info_ph2-0 with initial high watermark 26092182 (kafka.cluster.Replica)

[2022-12-14 02:05:02,305] INFO Replica loaded for partition tp_qemu_disk_info_ph2-0 with initial high watermark 0 (kafka.cluster.Replica)

#中间看不太懂了

#比对了偏移量,开始删除本地过期的数据

[2022-12-14 02:06:04,456] INFO Deleted log /var/lib/kafka/data/topics/xxxxxx-4/00000000000527817511.log.deleted. (kafka.log.LogSegment)

[2022-12-14 02:06:04,478] INFO Deleted offset index /var/lib/kafka/data/topics/xxxxx-4/00000000000527817511.index.deleted. (kafka.log.LogSegment)

#移除不是leader的分区,并从leader同步数据

[2022-12-14 02:07:51,842] INFO [ReplicaFetcher replicaId=0, leaderId=1, fetcherId=0] Remote broker is not the leader for partition xxxxxx-2, which could indicate that the partition is being moved (kafka.server.ReplicaFetcherThread)

[2022-12-14 02:07:51,842] INFO [ReplicaFetcher replicaId=0, leaderId=2, fetcherId=0] Remote broker is not the leader for partition xxxxxx-5, which could indicate that the partition is being moved (kafka.server.ReplicaFetcherThread)

宕机情况2

删除pv或移除数据broker恢复过程:原有数据大概50G,宕机10个小时,需要删除pvc重建,由于删除pv和宕机时间无关,可模拟节点故障短时间无法修复需要迁移到另外的节点进行运行,来确保集群的健壮性。

如果是手工模拟则可以手工删除PV(卡住),新开窗口删除Pod,然后两边会一起删除,由于模拟故障所以最好等待kafka判断broker挂掉,完成副本迁移再操作恢复。

日志分析

#启动服务,连接zookeeper,这个和是否丢失数据是无关

[2022-12-14 06:43:03,171] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2022-12-14 06:43:03,403] INFO starting (kafka.server.KafkaServer)

[2022-12-14 06:43:03,403] INFO Connecting to zookeeper on xxxxx:2181/kafka/kafka (kafka.server.KafkaServer)

#连接城以后,读取本地配置文件

[2022-12-14 06:43:03,672] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = PLAINTEXT://x.x.x.x:xxxx

advertised.port = null

#先读取数据目录,发现数据目录为空,创建了新的目录,然后读取完成

[2022-12-14 06:43:03,694] INFO [ThrottledRequestReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[2022-12-14 06:43:03,694] INFO [ThrottledRequestReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[2022-12-14 06:43:03,695] INFO [ThrottledRequestReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledRequestReaper)

[2022-12-14 06:43:03,753] INFO Log directory '/var/lib/kafka/data/topics' not found, creating it. (kafka.log.LogManager)

[2022-12-14 06:43:03,759] INFO Loading logs. (kafka.log.LogManager)

[2022-12-14 06:43:03,764] INFO Logs loading complete in 5 ms. (kafka.log.LogManager)

#初始化完成,开始比对注册到zk,并提供服务

[2022-12-14 06:43:03,771] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2022-12-14 06:43:03,772] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2022-12-14 06:43:03,983] INFO Awaiting socket connections on 0.0.0.0:65528. (kafka.network.Acceptor)

[2022-12-14 06:43:04,006] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)

[2022-12-14 06:43:04,019] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 06:43:04,019] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 06:43:04,020] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 06:43:04,027] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2022-12-14 06:43:04,061] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2022-12-14 06:43:04,062] INFO Result of znode creation at /brokers/ids/0 is: OK (kafka.zk.KafkaZkClient)

[2022-12-14 06:43:04,063] INFO Registered broker 0 at path /brokers/ids/0 with addresses: ArrayBuffer(EndPoint(10.30.1.145,65528,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)

[2022-12-14 06:43:04,064] WARN No meta.properties file under dir /var/lib/kafka/data/topics/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[2022-12-14 06:43:04,094] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 06:43:04,096] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 06:43:04,096] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2022-12-14 06:43:04,110] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2022-12-14 06:43:04,110] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2022-12-14 06:43:04,111] INFO [GroupMetadataManager brokerId=0] Removed 0 expired offsets in 1 milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[2022-12-14 06:43:04,119] INFO [ProducerId Manager 0]: Acquired new producerId block (brokerId:0,blockStartProducerId:41000,blockEndProducerId:41999) by writing to Zk with path version 42 (kafka.coordinator.transaction.ProducerIdManager)

[2022-12-14 06:43:04,130] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2022-12-14 06:43:04,131] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2022-12-14 06:43:04,131] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2022-12-14 06:43:04,152] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2022-12-14 06:43:04,189] INFO [SocketServer brokerId=0] Started processors for 1 acceptors (kafka.network.SocketServer)

[2022-12-14 06:43:04,191] INFO Kafka version : 1.1.1 (org.apache.kafka.common.utils.AppInfoParser)

[2022-12-14 06:43:04,191] INFO Kafka commitId : 8e07427ffb493498 (org.apache.kafka.common.utils.AppInfoParser)

[2022-12-14 06:43:04,192] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

#开始同步生产者状态,创建对应的top目录,开始同步数据

[2022-12-14 06:43:04,460] INFO [Log partition=tp_qemu_disk_info_ph2-0, dir=/var/lib/kafka/data/topics] Loading producer state from offset 0 with message format version 2 (kafka.log.Log)

[2022-12-14 06:43:04,464] INFO [Log partition=tp_qemu_disk_info_ph2-0, dir=/var/lib/kafka/data/topics] Completed load of log with 1 segments, log start offset 0 and log end offset 0 in 17 ms (kafka.log.Log)

[2022-12-14 06:43:04,466] INFO Created log for partition tp_qemu_disk_info_ph2-0 in /var/lib/kafka/data/topics with properties {compression.type -> producer, message.format.version -> 1.1-IV0, file.delete.delay.ms -> 60000, max.message.bytes -> 1000012, min.compaction.lag.ms -> 0, message.timestamp.type -> CreateTime, min.insync.replicas -> 1, segment.jitter.ms -> 0, preallocate -> false, min.cleanable.dirty.ratio -> 0.5, index.interval.bytes -> 4096, unclean.leader.election.enable -> false, retention.bytes -> -1, delete.retention.ms -> 86400000, cleanup.policy -> [delete], flush.ms -> 3000, segment.ms -> 604800000, segment.bytes -> 1073741824, retention.ms -> 259200000, message.timestamp.difference.max.ms -> 9223372036854775807, segment.index.bytes -> 10485760, flush.messages -> 10000}. (kafka.log.LogManager)

[2022-12-14 06:43:04,467] INFO [Partition tp_qemu_disk_info_ph2-0 broker=0] No checkpointed highwatermark is found for partition tp_qemu_disk_info_ph2-0 (kafka.cluster.Partition)

[2022-12-14 06:43:04,468] INFO Replica loaded for partition tp_qemu_disk_info_ph2-0 with initial high watermark 0 (kafka.cluster.Replica)

[2022-12-14 06:43:04,469] INFO Replica loaded for partition tp_qemu_disk_info_ph2-0 with initial high watermark 0 (kafka.cluster.Replica)

#中间看不懂

#开始删除过期数据,由于本地没有过期数据,所以删除的都是偏移量圈是0的,正常的节点就是历史真正的偏移量

[2022-12-14 06:44:05,620] INFO Deleted log /var/lib/kafka/data/topics/xxxxxx-14/00000000000000000000.log.deleted. (kafka.log.LogSegment)

[2022-12-14 06:44:05,627] INFO Deleted offset index /var/lib/kafka/data/topics/xxxxxx-14/00000000000000000000.index.deleted. (kafka.log.LogSegment)

[2022-12-14 06:44:05,628] INFO Deleted time index /var/lib/kafka/data/topics/xxxxxx-14/00000000000000000000.timeindex.deleted. (kafka.log.LogSegment)

宕机情况3

数据有损坏,恢复方法:原有数据大概50G,宕机10个小时,数据有损坏,可模拟在服务器意外宕机,导致磁盘文件损坏或者丢失部分情况,由于无法模拟,所以本地操作会随机删除部分Topic目录的数据和通过追加数据方式修复部分文件

这个是人工破坏数据,当时偷了个懒,未记录日志。

总结

1.只要确保整个集群的健壮,可以通过兜底删除PV方式来恢复。

2.如果kafka数据容量大于500G,就存在一定风险无法加载成功,而无法通过健康检查,建议是缩小kafka的占用空间较大的Topic数据保留时长,默认是7天,一般而言1-3天均可,具体以现场为主。

运维小路

一个不会开发的运维!一个要学开发的运维!一个学不会开发的运维!欢迎大家骚扰的运维!

关注微信公众号《运维小路》获取更多内容。

999

999

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?