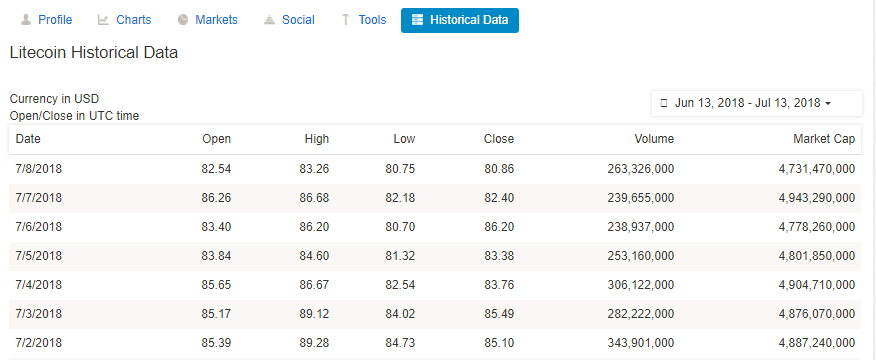

Cryptocurrency has become a new focus for market investors. Litecoin's price history shows that, as Bitcoin's price has continued to rise, many investors have turned to other cryptocurrencies. In 2017, Litecoin rose by 1400%. Although its gains are modest relative to Bitcoin's price, Litecoin has also attracted many investors.

After testing the $165 resistance level, the price of Litecoin failed to continue higher and instead began to fall, breaking below the $155 and $150 support levels. The drop was sharp, and the price subsequently fell below $140. The price is currently trading below $150 and below the 100-hour simple moving average (SMA). After falling to a low of $137, it has rebounded slightly. The first resistance is near the 23.6% Fibonacci retracement level of the decline from $163 to $137. Because there are several resistance levels in the $150 to $155 zone, any significant recovery is likely to be capped there.
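The "below the 100-hour SMA" observation compares the latest price with the average of the last 100 hourly closing prices. The following is a minimal sketch of a simple moving average, assuming you already have a list of hourly closes; the function name `simple_moving_average` is hypothetical, and the numbers in the example are made up purely for illustration, not Litecoin data.

```python
from collections import deque


def simple_moving_average(closes, period):
    """Return the SMA at each point where `period` closes are available.

    Hypothetical helper for illustration: keeps a running sum over a
    sliding window so each new value costs O(1).
    """
    if period <= 0:
        raise ValueError("period must be positive")
    window = deque()
    total = 0.0
    averages = []
    for price in closes:
        window.append(price)
        total += price
        # Drop the oldest close once the window exceeds `period`.
        if len(window) > period:
            total -= window.popleft()
        if len(window) == period:
            averages.append(total / period)
    return averages


if __name__ == "__main__":
    # Made-up closes with a short period, just to show the mechanics.
    closes = [1, 2, 3, 4, 5]
    sma = simple_moving_average(closes, 3)
    print(sma)  # [2.0, 3.0, 4.0]
    # "Price below the SMA" means the latest close is under the latest average.
    print(closes[-1] < sma[-1])  # False
```

For the 100-hour SMA in the analysis, `period` would be 100 and `closes` a list of hourly closing prices; a price trading below the last value in the returned list is the condition the text describes.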

In addition, the 50% Fibonacci retracement level of the decline from $163 to $137 is around $150, so $150 may also act as resistance to the current upward move. Overall, as long as the price stays below $150, Litecoin is likely to continue falling in the short term.
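The retracement levels quoted above follow from simple arithmetic: for a decline from a swing high to a swing low, each level sits a fixed fraction of the range back up from the low. The sketch below reproduces the 23.6% and 50% levels of the $163 to $137 decline. The helper name `retracement_levels` is hypothetical, and the extra 38.2% and 61.8% ratios are included only because they are commonly used alongside the two the text mentions.

```python
def retracement_levels(high, low, ratios=(0.236, 0.382, 0.5, 0.618)):
    """Price levels a given fraction of the way back up from `low` toward `high`.

    Hypothetical helper: assumes a decline from `high` to `low`, so each
    level is low + ratio * (high - low), rounded to cents.
    """
    span = high - low
    return {r: round(low + r * span, 2) for r in ratios}


if __name__ == "__main__":
    for ratio, price in retracement_levels(163, 137).items():
        print(f"{ratio:.1%} retracement: ${price:.2f}")
    # 23.6% retracement: $143.14
    # 38.2% retracement: $146.93
    # 50.0% retracement: $150.00
    # 61.8% retracement: $153.07
```

The 50% level landing at exactly $150 is why the analysis treats $150 as a second, stronger resistance above the first one near $143.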

About the future of Litecoin

Based on Litecoin's price history, some people believe it will reach a new high this year, a view that some analysts share. Unlike Bitcoin, Litecoin implements anonymous transactions on its main chain. Litecoin has begun to follow a development path distinct from Bitcoin's. Positioned as a complement to Bitcoin, it has remained firmly among the leading alternative cryptocurrencies.

The cryptocurrency market has recently performed relatively strongly. Litecoin shares many properties with Bitcoin while having a relatively low price, a long operating history, and a large user base and popularity around the world. Combined with Bitcoin's recent surge, this has lifted market sentiment and driven a large amount of capital into Litecoin, which is one of the reasons for its rise.

Charlie Lee, the founder of Litecoin, said in a YouTube interview: "I still think this was the right move, although I have some doubts. In the long run, I think it was the right thing to do. In the short term, however, the price of Litecoin has only fallen and has not reached a new high, so it may not have been the most important decision."
