一、Datax介绍

官网: DataX/introduction.md at master · alibaba/DataX · GitHub

DataX 是阿里云 DataWorks数据集成 的开源版本,在阿里巴巴集团内被广泛使用的

离线数据同步工具/平台。DataX 实现了包括 MySQL、Oracle、OceanBase、SqlServer、Postgre、HDFS、Hive、ADS、HBase、TableStore(OTS)、MaxCompute(ODPS)、Hologres、DRDS, databend 等各种异构数据源之间高效的数据同步功能。

Datax架构说明

Datax数据处理流程

二、Datax的使用说明

Datax在使用是主要编写json文件,在json中定义read如何读取 write如何写入

-

格式

{

"job": {

"setting": {

"speed": {

"channel": 3

},

"errorLimit": {

"record": 0,

"percentage": 0.02

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"column": [

"id",

"name"

],

"splitPk": "db_id",

"connection": [

{

"table": [

"table"

],

"jdbcUrl": [

"jdbc:mysql://127.0.0.1:3306/database"

]

}

]

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"print":true

}

}

}

]

}

}

简单使用

读取mysql数据在终端中输出结果

-- 在mysql中创建库表 create database itcast charset=utf8; use itcast; create table student( id int, name varchar(20), age int, gender varchar(20) ); insert into student values(1,'张三',20,'男'), (2,'李四',21,'男'), (3,'王五',19,'男'), (4,'赵六',22,'男');

编写datax的json文件

{

"job": {

"setting": {

"speed": {

"channel": 3

},

"errorLimit": {

"record": 0,

"percentage": 0.02

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"column": [

"id",

"name",

"age",

"gender"

],

"splitPk": "id",

"connection": [

{

"table": [

"student"

],

"jdbcUrl": [

"jdbc:mysql://192.168.88.80:3306/itcast"

]

}

]

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"print":true

}

}

}

]

}

}

在datax的job目录下创建json文件

cd /export/server/datax/job/

执行json文件中的配置信息

cd /export/server/datax/bin python datax.py ../job/mysql_data.json

Mysql使用sql语句读取数据

sql语句可以实现对数据的筛选过滤

{

"job": {

"setting": {

"speed": {

"channel":1

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"connection": [

{

"querySql": [

"select * from student where id>=3;"

],

"jdbcUrl": [

"jdbc:mysql://192.168.88.80:3306/itcast"

]

}

]

}

},

"writer": {

"name": "streamwriter",

"parameter": {

"print": true,

"encoding": "UTF-8"

}

}

}

]

}

}

三、Mysql数据导入HDFS

读取mysql数据

写入到hdfs

{

"job": {

"setting": {

"speed": {

"channel":1

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"column": [

"id",

"name",

"age",

"gender"

],

"splitPk": "id",

"connection": [

{

"table": [

"student"

],

"jdbcUrl": [

"jdbc:mysql://192.168.88.80:3306/itcast"

]

}

]

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"defaultFS": "hdfs://192.168.88.80:8020",

"fileType": "text",

"path": "/data",

"fileName": "student",

"column": [

{

"name": "id",

"type": "int"

},

{

"name": "name",

"type": "string"

},

{

"name": "age",

"type": "INT"

},

{

"name": "gender",

"type": "string"

}

],

"writeMode": "append",

"fieldDelimiter": "\t"

}

}

}

]

}

}

使用sql语句导入需要指定jdbc连接参数

当数据中有中文是需要增加参数

jdbc:mysql://192.168.88.80:3306/itcast?useSSL=false&characterEncoding=utf8

{

"job": {

"setting": {

"speed": {

"channel":1

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"connection": [

{

"querySql": [

"select * from student where gender='男';"

],

"jdbcUrl": [

"jdbc:mysql://192.168.88.80:3306/itcast?useSSL=false&characterEncoding=utf8"

]

}

]

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"defaultFS": "hdfs://192.168.88.80:8020",

"fileType": "text",

"path": "/data",

"fileName": "student",

"column": [

{

"name": "id",

"type": "int"

},

{

"name": "name",

"type": "string"

},

{

"name": "age",

"type": "INT"

},

{

"name": "gender",

"type": "string"

}

],

"writeMode": "append",

"fieldDelimiter": "\t"

}

}

}

]

}

}

四、Mysql数据导入Hive表

hive的表是由两部分构成的

表的元数据 hive的metastore管理

表的行数据 hdfs上以文件的方式存储

导入hive表的数据本质就是将mysql中的数据导入hdfs中,将数据按照hive表的路径进行导入

1-启动hive服务 metastore hiveserve2

2-配置datagrip连接

3-创建hive表

show databases ;

create database itcast;

use itcast;

create table stu(

id int,

name string,

age int,

gender string

)row format delimited fields terminated by ',';

select * from stu;

4-hive表的数据导入,本质就是将数据写入hdfs的表目录中

编写json文件

{

"job": {

"setting": {

"speed": {

"channel":1

}

},

"content": [

{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "root",

"password": "123456",

"column": [

"id",

"name",

"age",

"gender"

],

"splitPk": "id",

"connection": [

{

"table": [

"student"

],

"jdbcUrl": [

"jdbc:mysql://192.168.88.80:3306/itcast"

]

}

]

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"defaultFS": "hdfs://192.168.88.80:8020",

"fileType": "text",

"path": "/user/hive/warehouse/itcast.db/stu",

"fileName": "stu",

"column": [

{

"name": "id",

"type": "int"

},

{

"name": "name",

"type": "string"

},

{

"name": "age",

"type": "INT"

},

{

"name": "gender",

"type": "string"

}

],

"writeMode": "append",

"fieldDelimiter": ","

}

}

}

]

}

}

五、Datax-web介绍

datax-web是基于datax进行的二次开发,提供了一个可视化web页面,方便开发人员定义datax任务,并且能自动生成json文件

六、Datax-Web使用

6-1 启动服务

/export/server/datax-web-2.1.2/bin/start-all.sh

6-2 访问页面

6-3 使用

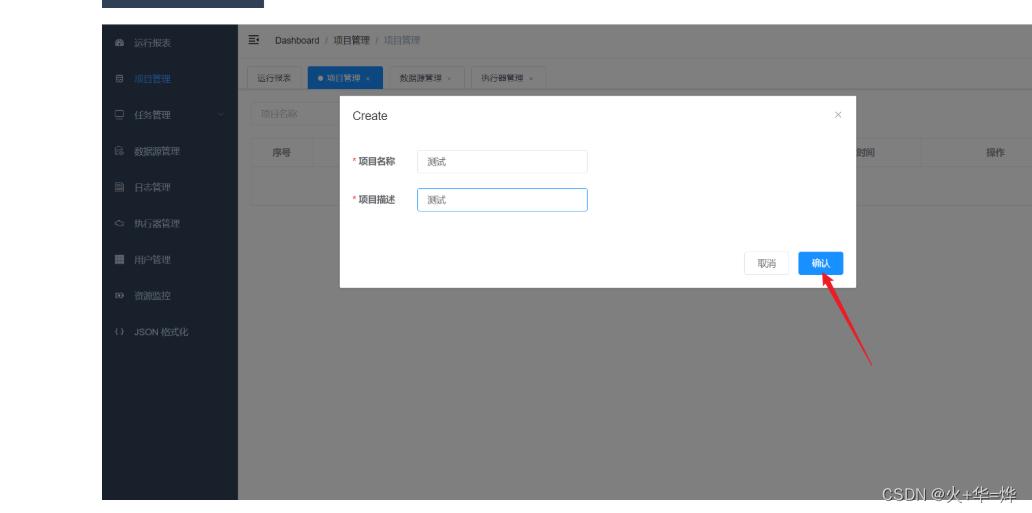

6-3-1 创建项目

6-3-2 创建数据源连接

6-3-3 任务管理的模板生成

可以设置定时执行

6-3-4 生成datax任务

6-3-5 任务执行

6-3-6 定时执行

本文介绍了DataX,阿里云DataWorks的数据集成开源版本,其用于异构数据源间的高效同步,包括JSON配置、MySQL到HDFS、Hive表的导入,以及DataX-web的可视化管理和任务生成。

本文介绍了DataX,阿里云DataWorks的数据集成开源版本,其用于异构数据源间的高效同步,包括JSON配置、MySQL到HDFS、Hive表的导入,以及DataX-web的可视化管理和任务生成。

2444

2444

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?