    import datetime
    import multiprocessing
    import threading
    from functools import partial

    import pandas as pd
    import requests
    from lxml import etree

    base_url = 'https://www.readnovel.com/finish?pageSize=10&pageNum={}'
    detail_base = 'https://www.readnovel.com'
    headers = {
        'Accept': '*/*',
        'Accept-Language': 'en-US,en;q=0.8',
        'Cache-Control': 'max-age=0',
        'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/48.0.2564.116 Safari/537.36',
        'Connection': 'keep-alive',
        'Referer': 'https://www.readnovel.com/'
    }


    def get_page_source_url(data, lock, url):
        # Fetch one listing page and collect the relative links to each novel's detail page.
        res = requests.get(url=url, headers=headers)
        res_text = etree.HTML(res.text.encode('utf-8'))
        detail_urls = res_text.xpath('//div[@class="book-info"]/h3/a/@href')
        parse(detail_urls, data, lock)


    def parse(detail_urls, data, lock):
        # Start one thread per detail page. The lock is not used; the original
        # left its `with lock:` variant commented out.
        threads = [threading.Thread(target=get_one, args=(detail_url, data))
                   for detail_url in detail_urls]
        for t in threads:
            t.start()
        # Wait for every thread so all results are in `data` before the worker returns.
        for t in threads:
            t.join()


    def get_one(detail_url, data):
        url = detail_base + detail_url
        res = requests.get(url=url, headers=headers)
        content = etree.HTML(res.text.encode('utf-8'))
        stats_xpath = '//div[@class="author-state"]//div[@class="total-wrap"]//li/p/text()'
        try:
            result = {
                'title': content.xpath('//div[@class="book-info"]/h1/em/text()')[0],
                'author': content.xpath('//div[@class="book-info"]/h1/a/text()')[0].split()[0],
                'word_count': content.xpath(stats_xpath)[1],
                'monthly_tickets': content.xpath('//i[@id="monthCount"]/text()')[0],
                'category': content.xpath('//span[@class="tag"]/i/text()')[-1],
                'days_writing': content.xpath(stats_xpath)[2]
            }
        except IndexError:
            # Some pages use a different layout without the author statistics block.
            print('URL needs special handling: {}'.format(url))
            result = {
                'title': content.xpath('//div[@class="book-info"]/h1/em/text()')[0],
                'author': content.xpath('//div[@class="book-info"]/h1/a/text()')[0].split()[0],
                # This layout gives only the number, so the unit '万字'
                # (ten thousand characters) is appended.
                'word_count': content.xpath('//p[@class="total"]/span/text()')[0] + '万字',
                'monthly_tickets': content.xpath('//i[@id="monthCount"]/text()')[0],
                'category': content.xpath('//span[@class="tag"]/i/text()')[-1],
                'days_writing': None
            }
        # Append exactly once, whichever layout matched.
        data.append(result)


    if __name__ == '__main__':
        print('Starting crawl...')
        start = datetime.datetime.now()
        manager = multiprocessing.Manager()
        lock = manager.Lock()
        data = manager.list()  # list shared between the worker processes
        pool = multiprocessing.Pool(processes=20)
        func = partial(get_page_source_url, data, lock)
        # One task per listing page (pages 1 to 20).
        pool.map(func, [base_url.format(i) for i in range(1, 21)])
        pool.close()
        pool.join()
        data_final = pd.DataFrame(data=list(data))
        data_final.to_csv('bookinfo.csv')
        end = datetime.datetime.now()
        print('Crawl finished: {} URLs scraped in {:.0f}s'.format(
            data_final.shape[0], (end - start).total_seconds()))
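
The script above starts one bare thread per detail page and has each thread append to a shared list. As a rough alternative sketch (not the author's method), the same fan-out can be written with the standard-library `concurrent.futures.ThreadPoolExecutor`, which caps the number of threads and waits for them to finish. `fetch_detail`, `scrape_details`, and the sample paths are hypothetical names used only for illustration, and the stub does no network access.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_detail(detail_url):
    # Hypothetical stand-in for get_one(): it returns a record instead of
    # appending to a shared list, and performs no HTTP request.
    return {'url': detail_url, 'title': 'book-' + detail_url.rsplit('/', 1)[-1]}


def scrape_details(detail_urls, max_workers=8):
    # executor.map preserves input order, and leaving the `with` block
    # waits for all worker threads to finish.
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        return list(executor.map(fetch_detail, detail_urls))


# Made-up relative paths, in the shape the listing page XPath would return.
records = scrape_details(['/book/1', '/book/2', '/book/3'])
```

Returning records from the worker function keeps the threads free of shared state, so the caller decides where the results go.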

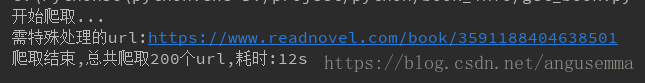

爬取结果:

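This article presents a novel-information crawler written in Python. It uses requests and lxml to collect links to novel detail pages from the listing pages of the target site. A pool of worker processes handles the listing pages, and each worker starts one thread per detail page to fetch that novel's details, including title, author, and word count. The scraped data is then saved to a CSV file.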
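
The two ends of that pipeline, generating the listing-page URLs and writing the records out as CSV, can be sketched without any network access. This is a minimal illustration that uses the standard-library `csv` module in place of pandas; `listing_urls`, `records_to_csv`, and the sample record are made up for the example.

```python
import csv
import io

BASE_URL = 'https://www.readnovel.com/finish?pageSize=10&pageNum={}'


def listing_urls(first_page=1, last_page=20):
    # One URL per listing page, both ends inclusive.
    return [BASE_URL.format(n) for n in range(first_page, last_page + 1)]


def records_to_csv(records, fieldnames):
    # Serialize a list of dicts to CSV text with a header row.
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator='\n')
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


urls = listing_urls()
# Made-up record, only to show the output shape.
sample = [{'title': 'Example Novel', 'author': 'Someone', 'word_count': '12.5'}]
csv_text = records_to_csv(sample, ['title', 'author', 'word_count'])
```

Passing `fieldnames` explicitly fixes the column order, which otherwise depends on the key order of the first record.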
