Summary:

This note records how PostgreSQL flushes pages from its buffer cache to disk.

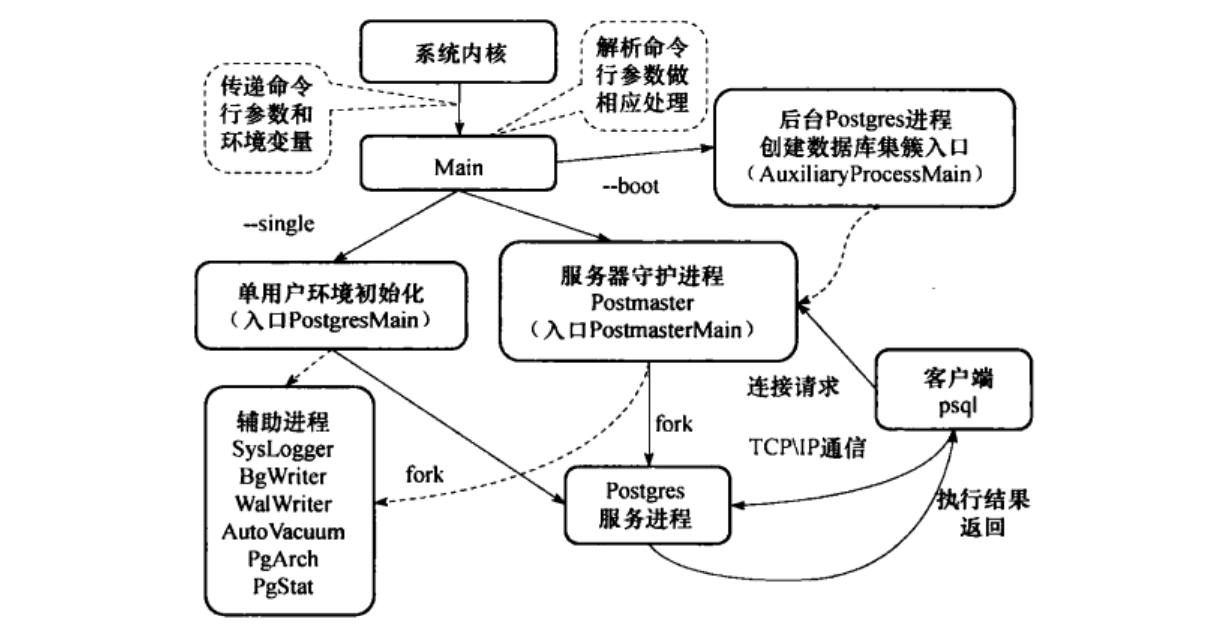

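PostgreSQL process relationships:

PostgreSQL uses a multi-process architecture in which separate child processes handle different modules. The process tree: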

kevin 419546 1 0 06:14 ? 00:00:00 /usr/local/pgsql/bin/postgres

kevin 419604 419546 0 06:26 ? 00:00:00 postgres: checkpointer

kevin 419605 419546 0 06:26 ? 00:00:00 postgres: background writer

kevin 419606 419546 0 06:26 ? 00:00:00 postgres: walwriter

kevin 419607 419546 0 06:26 ? 00:00:00 postgres: autovacuum launcher

kevin 419608 419546 0 06:26 ? 00:00:00 postgres: stats collector

kevin 419609 419546 0 06:26 ? 00:00:00 postgres: logical replication launcher

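PostgreSQL caches table and index data in memory as pages. In some cases (for example, with prepared statements) it also caches query plans, but it does not cache query results. What it caches are the data pages that were read; a page holds contiguous data, so it contains more than just the rows the query asked for.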

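The cache here is the shared buffer cache, sized by shared_buffers. This memory region can be viewed as an array of 8KB blocks (8KB being the default block size), so a block is the smallest unit of allocation. When Postgres needs a page from disk (mainly table and index data), it first searches shared_buffers, using the page's metadata, to check whether the page is already there. If it is, the lookup is a hit and the cached data is returned without any I/O. Only if it is not does Postgres read the page from disk.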
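The hit-or-miss check described above can be sketched in a few lines of C. This is not PostgreSQL's code: the real buffer manager looks pages up through a shared hash table keyed by a buffer tag, while `PageTag`, `Slot`, `pool_lookup`, and the linear scan below are hypothetical simplifications chosen only to show the idea.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define BLOCK_SIZE 8192   /* default PostgreSQL block size */
#define POOL_SLOTS 4      /* tiny pool, for illustration only */

/* Hypothetical page identity: which relation, which block. */
typedef struct {
    uint32_t rel_id;
    uint32_t block_no;
} PageTag;

/* One slot of the buffer array: identity plus the 8KB page image. */
typedef struct {
    bool    in_use;
    PageTag tag;
    char    data[BLOCK_SIZE];
} Slot;

/* Return the index of the slot holding `tag`, or -1 on a miss. */
int pool_lookup(const Slot *pool, size_t n, PageTag tag)
{
    for (size_t i = 0; i < n; i++) {
        if (pool[i].in_use &&
            pool[i].tag.rel_id == tag.rel_id &&
            pool[i].tag.block_no == tag.block_no)
            return (int) i;   /* hit: no disk I/O needed */
    }
    return -1;                /* miss: caller must read the block from disk */
}
```

A return value of -1 corresponds to the miss path, where the page has to be read from disk into a free or evicted slot.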

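Buffer allocation

Postgres is a process-based system: for every connection to the server, the Postgres main process forks a new child process (a backend) to serve it. Besides the main process, Postgres also starts a number of auxiliary processes of its own.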

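So for each connection's data request, the corresponding backend first asks the buffer cache for the page. The page is not necessarily one belonging to the table or view that the SQL queries directly; it may belong to an index or a system catalog. This is a buffer allocation request, and a decision has to be made. If the requested block is already in the cache, that is the best case: the block is "pinned" and the cached data is returned. Pinning increments the block's pin (reference) count so that it cannot be evicted while in use, and the access also raises its "usage count".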

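When a block's "usage count" is 0 and no process has it pinned, the block is considered no longer needed, and it becomes a candidate for eviction once the cache is full.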
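To make the two counters concrete, here is a minimal sketch of the bookkeeping. The struct and function names (`BufState`, `pin`, `unpin`, `is_replacement_candidate`) are hypothetical, and the cap of 5 is an assumption based on PostgreSQL's `BM_MAX_USAGE_COUNT`. The real implementation packs both counters into one atomic state word and is considerably more involved.

```c
#include <stdbool.h>

#define MAX_USAGE_COUNT 5   /* assumed cap, after PostgreSQL's BM_MAX_USAGE_COUNT */

typedef struct {
    int pin_count;    /* how many users currently hold the buffer */
    int usage_count;  /* recency hint, decayed later by the replacement sweep */
} BufState;

/* Pin: the buffer is in use and must not be evicted. */
void pin(BufState *b)
{
    b->pin_count++;
    if (b->usage_count < MAX_USAGE_COUNT)
        b->usage_count++;
}

/* Unpin: the user is done; usage_count is deliberately left alone. */
void unpin(BufState *b)
{
    if (b->pin_count > 0)
        b->pin_count--;
}

/* A buffer may be replaced only when nobody pins it and it has gone cold. */
bool is_replacement_candidate(const BufState *b)
{
    return b->pin_count == 0 && b->usage_count == 0;
}
```

Note that unpinning does not make a buffer a candidate right away: its usage count has to decay to 0 first, which is what protects recently used pages.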

In other words, buffers are evicted only when the buffers/slots are full.

Buffer eviction

Deciding which page to evict from memory and write back to disk is a classic computer science problem.

A plain LRU algorithm rarely works well in practice. LRU has to evict the least recently used page, but the state from the previous run is not recorded.

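As a compromise and substitute, the "usage count" of every page is tracked, and when space is needed, pages whose "usage count" is 0 are evicted and, if they have been modified, written back to disk. As covered later, dirty pages are also written back to disk by the background writer.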
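The usage-count scheme is commonly implemented as a clock sweep. The sketch below illustrates the idea under simplifying assumptions: `SweepBuf` and `clock_sweep` are hypothetical names, there is no locking, and the real victim selection in PostgreSQL's freelist.c handles free lists and concurrency that are omitted here.

```c
#include <stddef.h>

typedef struct {
    int pin_count;    /* > 0 means the buffer is in use */
    int usage_count;  /* decremented each time the hand passes over it */
} SweepBuf;

/*
 * Advance the clock hand until an unpinned buffer with usage_count 0 is
 * found. Every unpinned buffer that is passed over gets its usage_count
 * decremented, so frequently used buffers survive more passes.
 * Returns the victim's index, or -1 if every buffer is pinned.
 */
int clock_sweep(SweepBuf *bufs, size_t n, size_t *hand)
{
    size_t pinned_in_a_row = 0;

    while (pinned_in_a_row < n) {
        size_t idx = *hand;
        SweepBuf *b = &bufs[idx];

        *hand = (*hand + 1) % n;

        if (b->pin_count > 0) {
            pinned_in_a_row++;      /* cannot evict a pinned buffer */
            continue;
        }
        pinned_in_a_row = 0;

        if (b->usage_count == 0)
            return (int) idx;       /* cold and unpinned: evict this one */

        b->usage_count--;           /* give it another lap */
    }
    return -1;
}
```

If the chosen victim is dirty, it has to be written to disk before the slot is reused; the background writer exists largely to keep that write off the backend's critical path.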

Core functions:

SyncOneBuffer

(gdb) bt

#0 SyncOneBuffer (buf_id=8425, skip_recently_used=true, wb_context=0x7ffe2ea4cf80) at bufmgr.c:2520

#1 0x00000000008b8767 in BgBufferSync (wb_context=0x7ffe2ea4cf80) at bufmgr.c:2440

#2 0x000000000083a851 in BackgroundWriterMain () at bgwriter.c:244

#3 0x000000000056ad60 in AuxiliaryProcessMain (argc=2, argv=0x7ffe2ea4e520) at bootstrap.c:455

#4 0x000000000084b090 in StartChildProcess (type=BgWriterProcess) at postmaster.c:5481

#5 0x000000000084894a in reaper (postgres_signal_arg=17) at postmaster.c:3047

#6 <signal handler called>

#7 0x00007fa5ca7b447b in select () from /lib64/libc.so.6

#8 0x0000000000846c1f in ServerLoop () at postmaster.c:1709

#9 0x0000000000846612 in PostmasterMain (argc=1, argv=0x216ef80) at postmaster.c:1417

#10 0x0000000000756f66 in main (argc=1, argv=0x216ef80) at main.c:209

(gdb) ptype bufHdr

type = struct BufferDesc {

BufferTag tag;

int buf_id;

pg_atomic_uint32 state;

int wait_backend_pid;

int freeNext;

LWLock content_lock;

} *

(gdb) p *bufHdr

$2 = {

tag = {

rnode = {

spcNode = 0,

dbNode = 0,

relNode = 0

},

forkNum = InvalidForkNumber,

blockNum = 4294967295

},

buf_id = 8425,

state = {

value = 0

},

wait_backend_pid = 0,

freeNext = 8426,

content_lock = {

tranche = 56,

state = {

value = 536870912

},

waiters = {

head = 2147483647,

tail = 2147483647

}

}

}
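In the dump above, state.value is 0, blockNum is 4294967295 (the invalid block number), and freeNext points at the next buffer, which is what an unused buffer on the free list looks like. The sketch below decodes such a state word and applies the same checks SyncOneBuffer makes, without any locking or I/O. The bit layout (18 bits of refcount, 4 bits of usage count, flag bits above them) is an assumption modeled on buf_internals.h from roughly this PostgreSQL version and should be verified against the source tree being debugged. All `SK_`/`sk_` names are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed layout of BufferDesc.state; verify against buf_internals.h. */
#define SK_REFCOUNT_MASK    ((1U << 18) - 1)
#define SK_USAGECOUNT_MASK  0x003C0000U
#define SK_USAGECOUNT_SHIFT 18
#define SK_BM_DIRTY         (1U << 23)
#define SK_BM_VALID         (1U << 24)

/* Result bits, named after the flags in the SyncOneBuffer comment. */
#define SK_BUF_WRITTEN  0x01
#define SK_BUF_REUSABLE 0x02

uint32_t sk_refcount(uint32_t s)
{
    return s & SK_REFCOUNT_MASK;
}

uint32_t sk_usagecount(uint32_t s)
{
    return (s & SK_USAGECOUNT_MASK) >> SK_USAGECOUNT_SHIFT;
}

/* Mirror SyncOneBuffer's decisions on a state word, doing no actual write. */
int sk_sync_decision(uint32_t state, bool skip_recently_used)
{
    int result = 0;

    if (sk_refcount(state) == 0 && sk_usagecount(state) == 0)
        result |= SK_BUF_REUSABLE;
    else if (skip_recently_used)
        return result;               /* pinned or recently used: leave it */

    if (!(state & SK_BM_VALID) || !(state & SK_BM_DIRTY))
        return result;               /* clean or invalid: nothing to write */

    return result | SK_BUF_WRITTEN;  /* the real code pins and flushes here */
}
```

For the buffer in the dump, `sk_sync_decision(0, true)` yields only the reusable bit: the buffer counts toward the reusable total but nothing is written.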

/*

* SyncOneBuffer -- process a single buffer during syncing.

*

* If skip_recently_used is true, we don't write currently-pinned buffers, nor

* buffers marked recently used, as these are not replacement candidates.

*

* Returns a bitmask containing the following flag bits:

* BUF_WRITTEN: we wrote the buffer.

* BUF_REUSABLE: buffer is available for replacement, ie, it has

* pin count 0 and usage count 0.

*

* (BUF_WRITTEN could be set in error if FlushBuffer finds the buffer clean

* after locking it, but we don't care all that much.)

*

* Note: caller must have done ResourceOwnerEnlargeBuffers.

*/

static int

SyncOneBuffer(int buf_id, bool skip_recently_used, WritebackContext *wb_context)

{

BufferDesc *bufHdr = GetBufferDescriptor(buf_id);

int result = 0;

uint32 buf_state;

BufferTag tag;

ReservePrivateRefCountEntry();

/*

* Check whether buffer needs writing.

*

* We can make this check without taking the buffer content lock so long

* as we mark pages dirty in access methods *before* logging changes with

* XLogInsert(): if someone marks the buffer dirty just after our check we

* don't worry because our checkpoint.redo points before log record for

* upcoming changes and so we are not required to write such dirty buffer.

*/

buf_state = LockBufHdr(bufHdr);

if (BUF_STATE_GET_REFCOUNT(buf_state) == 0 &&

BUF_STATE_GET_USAGECOUNT(buf_state) == 0)

{

result |= BUF_REUSABLE;

}

else if (skip_recently_used)

{

/* Caller told us not to write recently-used buffers */

UnlockBufHdr(bufHdr, buf_state);

return result;

}

if (!(buf_state & BM_VALID) || !(buf_state & BM_DIRTY))

{

/* It's clean, so nothing to do */

UnlockBufHdr(bufHdr, buf_state);

return result;

}

/*

* Pin it, share-lock it, write it. (FlushBuffer will do nothing if the

* buffer is clean by the time we've locked it.)

*/

PinBuffer_Locked(bufHdr);

LWLockAcquire(BufferDescriptorGetContentLock(bufHdr), LW_SHARED);

FlushBuffer(bufHdr, NULL);

LWLockRelease(BufferDescriptorGetContentLock(bufHdr));

tag = bufHdr->tag;

UnpinBuffer(bufHdr, true);

ScheduleBufferTagForWriteback(wb_context, &tag);

return result | BUF_WRITTEN;

}

BgBufferSync

/*

* BgBufferSync -- Write out some dirty buffers in the pool.

*

* This is called periodically by the background writer process.

*

* Returns true if it's appropriate for the bgwriter process to go into

* low-power hibernation mode. (This happens if the strategy clock sweep

* has been "lapped" and no buffer allocations have occurred recently,

* or if the bgwriter has been effectively disabled by setting

* bgwriter_lru_maxpages to 0.)

*/

bool

BgBufferSync(WritebackContext *wb_context)

{

/* info obtained from freelist.c */

int strategy_buf_id;

uint32 strategy_passes;

uint32 recent_alloc;

/*

* Information saved between calls so we can determine the strategy

* point's advance rate and avoid scanning already-cleaned buffers.

*/

static bool saved_info_valid = false;

static int prev_strategy_buf_id;

static uint32 prev_strategy_passes;

static int next_to_clean;

static uint32 next_passes;

/* Moving averages of allocation rate and clean-buffer density */

static float smoothed_alloc = 0;

static float smoothed_density = 10.0;

/* Potentially these could be tunables, but for now, not */

float smoothing_samples = 16;

float scan_whole_pool_milliseconds = 120000.0;

/* Used to compute how far we scan ahead */

long strategy_delta;

int bufs_to_lap;

int bufs_ahead;

float scans_per_alloc;

int reusable_buffers_est;

int upcoming_alloc_est;

int min_scan_buffers;

/* Variables for the scanning loop proper */

int num_to_scan;

int num_written;

int reusable_buffers;

/* Variables for final smoothed_density update */

long new_strategy_delta;

uint32 new_recent_alloc;

/*

* Find out where the freelist clock sweep currently is, and how many

* buffer allocations have happened since our last call.

*/

strategy_buf_id = StrategySyncStart(&strategy_passes, &recent_alloc);

/* Report buffer alloc counts to pgstat */

BgWriterStats.m_buf_alloc += recent_alloc;

/*

* If we're not running the LRU scan, just stop after doing the stats

* stuff. We mark the saved state invalid so that we can recover sanely

* if LRU scan is turned back on later.

*/

if (bgwriter_lru_maxpages <= 0)

{

saved_info_valid = false;

return true;

}

/*

* Compute strategy_delta = how many buffers have been scanned by the

* clock sweep since last time. If first time through, assume none. Then

* see if we are still ahead of the clock sweep, and if so, how many

* buffers we could scan before we'd catch up with it and "lap" it. Note:

* weird-looking coding of xxx_passes comparisons are to avoid bogus

* behavior when the passes counts wrap around.

*/

if (saved_info_valid)

{

int32 passes_delta = strategy_passes - prev_strategy_passes;

strategy_delta = strategy_buf_id - prev_strategy_buf_id;

strategy_delta += (long) passes_delta * NBuffers;

Assert(strategy_delta >= 0);

if ((int32) (next_passes - strategy_passes) > 0)

{

/* we're one pass ahead of the strategy point */

bufs_to_lap = strategy_buf_id - next_to_clean;

#ifdef BGW_DEBUG

elog(DEBUG2, "bgwriter ahead: bgw %u-%u strategy %u-%u delta=%ld lap=%d",

next_passes, next_to_clean,

strategy_passes, strategy_buf_id,

strategy_delta, bufs_to_lap);

#endif

}

else if (next_passes == strategy_passes &&

next_to_clean >= strategy_buf_id)

{

/* on same pass, but ahead or at least not behind */

bufs_to_lap = NBuffers - (next_to_clean - strategy_buf_id);

#ifdef BGW_DEBUG

elog(DEBUG2, "bgwriter ahead: bgw %u-%u strategy %u-%u delta=%ld lap=%d",

next_passes, next_to_clean,

strategy_passes, strategy_buf_id,

strategy_delta, bufs_to_lap);

#endif

}

else

{

/*

* We're behind, so skip forward to the strategy point and start

* cleaning from there.

*/

#ifdef BGW_DEBUG

elog(DEBUG2, "bgwriter behind: bgw %u-%u strategy %u-%u delta=%ld",

next_passes, next_to_clean,

strategy_passes, strategy_buf_id,

strategy_delta);

#endif

next_to_clean = strategy_buf_id;

next_passes = strategy_passes;

bufs_to_lap = NBuffers;

}

}

else

{

/*

* Initializing at startup or after LRU scanning had been off. Always

* start at the strategy point.

*/

#ifdef BGW_DEBUG

elog(DEBUG2, "bgwriter initializing: strategy %u-%u",

strategy_passes, strategy_buf_id);

#endif

strategy_delta = 0;

next_to_clean = strategy_buf_id;

next_passes = strategy_passes;

bufs_to_lap = NBuffers;

}

/* Update saved info for next time */

prev_strategy_buf_id = strategy_buf_id;

prev_strategy_passes = strategy_passes;

saved_info_valid = true;

/*

* Compute how many buffers had to be scanned for each new allocation, ie,

* 1/density of reusable buffers, and track a moving average of that.

*

* If the strategy point didn't move, we don't update the density estimate

*/

if (strategy_delta > 0 && recent_alloc > 0)

{

scans_per_alloc = (float) strategy_delta / (float) recent_alloc;

smoothed_density += (scans_per_alloc - smoothed_density) /

smoothing_samples;

}

/*

* Estimate how many reusable buffers there are between the current

* strategy point and where we've scanned ahead to, based on the smoothed

* density estimate.

*/

bufs_ahead = NBuffers - bufs_to_lap;

reusable_buffers_est = (float) bufs_ahead / smoothed_density;

/*

* Track a moving average of recent buffer allocations. Here, rather than

* a true average we want a fast-attack, slow-decline behavior: we

* immediately follow any increase.

*/

if (smoothed_alloc <= (float) recent_alloc)

smoothed_alloc = recent_alloc;

else

smoothed_alloc += ((float) recent_alloc - smoothed_alloc) /

smoothing_samples;

/* Scale the estimate by a GUC to allow more aggressive tuning. */

upcoming_alloc_est = (int) (smoothed_alloc * bgwriter_lru_multiplier);

/*

* If recent_alloc remains at zero for many cycles, smoothed_alloc will

* eventually underflow to zero, and the underflows produce annoying

* kernel warnings on some platforms. Once upcoming_alloc_est has gone to

* zero, there's no point in tracking smaller and smaller values of

* smoothed_alloc, so just reset it to exactly zero to avoid this

* syndrome. It will pop back up as soon as recent_alloc increases.

*/

if (upcoming_alloc_est == 0)

smoothed_alloc = 0;

/*

* Even in cases where there's been little or no buffer allocation

* activity, we want to make a small amount of progress through the buffer

* cache so that as many reusable buffers as possible are clean after an

* idle period.

*

* (scan_whole_pool_milliseconds / BgWriterDelay) computes how many times

* the BGW will be called during the scan_whole_pool time; slice the

* buffer pool into that many sections.

*/

min_scan_buffers = (int) (NBuffers / (scan_whole_pool_milliseconds / BgWriterDelay));

if (upcoming_alloc_est < (min_scan_buffers + reusable_buffers_est))

{

#ifdef BGW_DEBUG

elog(DEBUG2, "bgwriter: alloc_est=%d too small, using min=%d + reusable_est=%d",

upcoming_alloc_est, min_scan_buffers, reusable_buffers_est);

#endif

upcoming_alloc_est = min_scan_buffers + reusable_buffers_est;

}

/*

* Now write out dirty reusable buffers, working forward from the

* next_to_clean point, until we have lapped the strategy scan, or cleaned

* enough buffers to match our estimate of the next cycle's allocation

* requirements, or hit the bgwriter_lru_maxpages limit.

*/

/* Make sure we can handle the pin inside SyncOneBuffer */

ResourceOwnerEnlargeBuffers(CurrentResourceOwner);

num_to_scan = bufs_to_lap;

num_written = 0;

reusable_buffers = reusable_buffers_est;

/* Execute the LRU scan */

while (num_to_scan > 0 && reusable_buffers < upcoming_alloc_est)

{

int sync_state = SyncOneBuffer(next_to_clean, true,

wb_context);

if (++next_to_clean >= NBuffers)

{

next_to_clean = 0;

next_passes++;

}

num_to_scan--;

if (sync_state & BUF_WRITTEN)

{

reusable_buffers++;

if (++num_written >= bgwriter_lru_maxpages)

{

BgWriterStats.m_maxwritten_clean++;

break;

}

}

else if (sync_state & BUF_REUSABLE)

reusable_buffers++;

}

BgWriterStats.m_buf_written_clean += num_written;

#ifdef BGW_DEBUG

elog(DEBUG1, "bgwriter: recent_alloc=%u smoothed=%.2f delta=%ld ahead=%d density=%.2f reusable_est=%d upcoming_est=%d scanned=%d wrote=%d reusable=%d",

recent_alloc, smoothed_alloc, strategy_delta, bufs_ahead,

smoothed_density, reusable_buffers_est, upcoming_alloc_est,

bufs_to_lap - num_to_scan,

num_written,

reusable_buffers - reusable_buffers_est);

#endif

/*

* Consider the above scan as being like a new allocation scan.

* Characterize its density and update the smoothed one based on it. This

* effectively halves the moving average period in cases where both the

* strategy and the background writer are doing some useful scanning,

* which is helpful because a long memory isn't as desirable on the

* density estimates.

*/

new_strategy_delta = bufs_to_lap - num_to_scan;

new_recent_alloc = reusable_buffers - reusable_buffers_est;

if (new_strategy_delta > 0 && new_recent_alloc > 0)

{

scans_per_alloc = (float) new_strategy_delta / (float) new_recent_alloc;

smoothed_density += (scans_per_alloc - smoothed_density) /

smoothing_samples;

#ifdef BGW_DEBUG

elog(DEBUG2, "bgwriter: cleaner density alloc=%u scan=%ld density=%.2f new smoothed=%.2f",

new_recent_alloc, new_strategy_delta,

scans_per_alloc, smoothed_density);

#endif

}

/* Return true if OK to hibernate */

return (bufs_to_lap == 0 && recent_alloc == 0);

}
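Two of the estimates in BgBufferSync are easier to follow with numbers plugged in. The helpers below are a standalone sketch that copies the arithmetic from the listing: `smooth_alloc` and `min_scan_buffers` are hypothetical function names, and the sample values in the usage note (16384 buffers, a 200 ms delay) are assumed defaults rather than values taken from the traced server.

```c
/*
 * Fast-attack, slow-decline average, as in the smoothed_alloc update:
 * jump straight up to a higher allocation rate, decay slowly to a lower one.
 */
float smooth_alloc(float smoothed, unsigned recent, float samples)
{
    if (smoothed <= (float) recent)
        return (float) recent;
    return smoothed + ((float) recent - smoothed) / samples;
}

/*
 * Minimum number of buffers to scan per call so that the whole pool is
 * covered within whole_pool_ms, given one call every delay_ms.
 */
int min_scan_buffers(int nbuffers, float whole_pool_ms, int delay_ms)
{
    return (int) (nbuffers / (whole_pool_ms / delay_ms));
}
```

With 16384 buffers (128MB at 8KB per block), the 120000 ms whole-pool target, and a 200 ms delay, the background writer is called 600 times per sweep window, so `min_scan_buffers(16384, 120000.0f, 200)` gives 27 buffers per call. On the smoothing side, a burst from 10 to 100 allocations is followed immediately, while a drop from 100 to 0 only decays to 93.75 after one cycle with 16 smoothing samples.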

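This article walks through how PostgreSQL's processes cooperate, focusing on the core functions SyncOneBuffer and BgBufferSync, and covers buffer allocation, the LRU-style replacement strategy, and the handling of dirty pages. By tracing buffer state and memory management, it shows how pages are flushed to disk.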
