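I. Preface:

For the detailed WebRTC media negotiation flow, see: WebRTC Media Negotiation 01: Process Overview (CSDN blog)

II. What media negotiation does:

The caller and the callee each lay out their capabilities, work out which capabilities they have in common, and pick the best common one to communicate with. The capabilities are exchanged as an SDP text string (the official demo wraps it in a JSON message). So what we need to do is:

- obtain our own capabilities (as a class object);

- serialize them and send them to the peer;

- on the receiving side, parse the string back into a class object;

- negotiate;

- use the negotiated result to configure the codecs and other engines, and start sending and receiving media streams;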

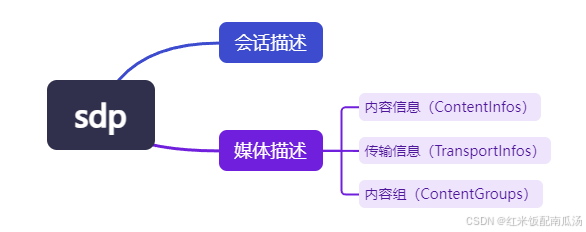
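The "find the common capability" step can be made concrete with a minimal C++ sketch that treats codec negotiation as a list intersection. The `Codec` struct and the `SameCodec`/`NegotiateCodecs` functions are hypothetical illustrations, not WebRTC APIs. Real negotiation also compares fmtp parameters and remaps payload types.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified codec capability. Real WebRTC codecs carry more
// fields (payload type, fmtp parameters, RTCP feedback, ...).
struct Codec {
  std::string name;  // e.g. "opus"
  int clockrate;     // e.g. 48000
  int channels;      // e.g. 2
};

// Treats two codecs as the same when name, clock rate and channel count
// match. (SDP codec names are case-insensitive; this sketch compares them
// exactly for brevity.)
bool SameCodec(const Codec& a, const Codec& b) {
  return a.name == b.name && a.clockrate == b.clockrate &&
         a.channels == b.channels;
}

// Returns the codecs both sides support, in the offerer's order, so the
// first entry is the one both sides would use.
std::vector<Codec> NegotiateCodecs(const std::vector<Codec>& offered,
                                   const std::vector<Codec>& supported) {
  std::vector<Codec> common;
  for (const Codec& o : offered) {
    for (const Codec& s : supported) {
      if (SameCodec(o, s)) {
        common.push_back(o);
        break;
      }
    }
  }
  return common;
}
```

For example, negotiating an offer of opus, ISAC and PCMU against a local list of PCMU and opus yields opus followed by PCMU. Keeping the offerer's order is a choice made for this sketch. An implementation may instead order the result by the answerer's own preference.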

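III. SDP class diagram:

Let's take offer creation as an example and see what the SDP class diagram looks like in WebRTC. The previous article divided it by function. From the code's point of view, it is structured as follows:

1. Structure from the code's point of view: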

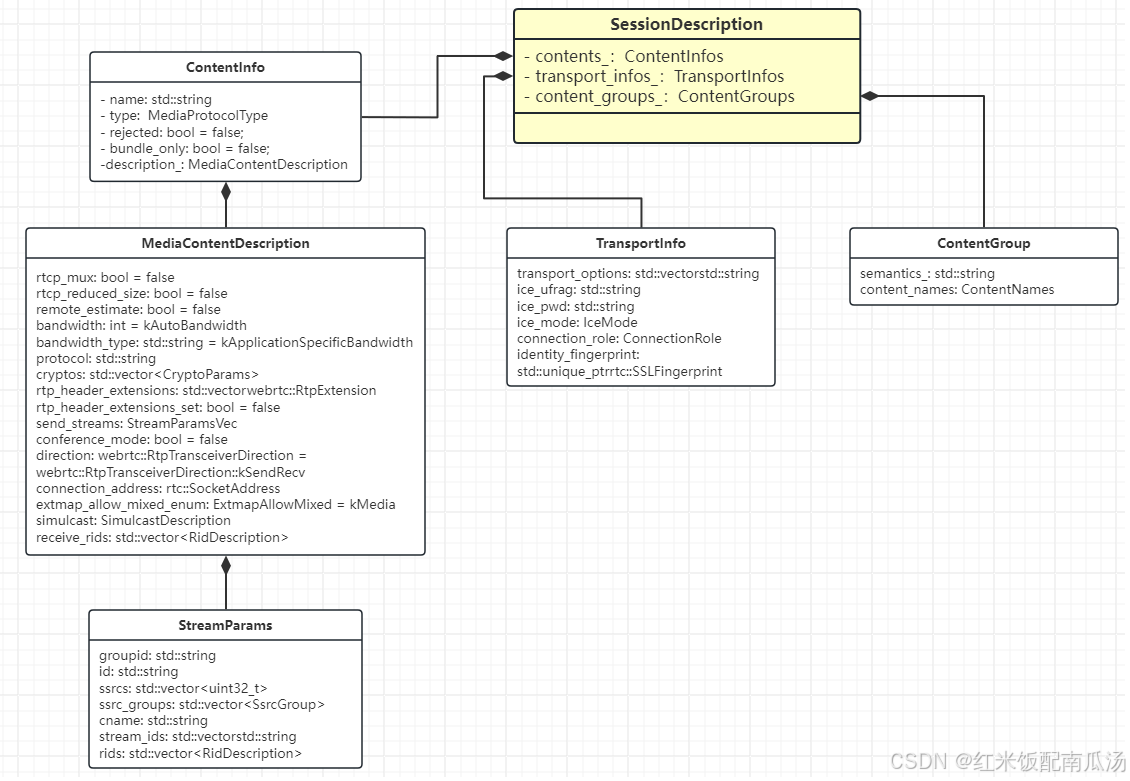

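2. Class diagram:

In other words, our goal is to produce a SessionDescription object. It is split by category into three classes, ContentInfo, TransportInfo and ContentGroup, and each of these can be broken down further:

- SessionDescription: represents one SDP; it contains the content information, the transport information and the content groups;
  - ContentInfo: describes one piece of media content; corresponds to an m-line in the SDP;
    - name: the content name, i.e. the MID of the m-line;
    - type: the protocol type (MediaProtocolType, e.g. kRtp for audio and video; the media kind itself is recorded in description);
    - rejected: whether the content was rejected during negotiation;
    - description: the more detailed information of the m-line is recorded here;
      - MediaContentDescription: corresponds to the fields of all the a-lines (attribute lines) under the m-line;
        - send_stream: corresponds to StreamParams, mainly SSRC information;
          - StreamParams: mainly SSRC information;
            - rids: a list of RidDescription entries;
              - RidDescription: describes an RTP stream identifier (an a=rid line, used for simulcast), which can restrict the payload types that stream may use;
  - TransportInfo: the transport layer; describes transport parameters such as ufrag/pwd/fingerprint;
  - ContentGroup: corresponds to a=group in the SDP;
    - semantics: BUNDLE means bundling, i.e. audio and video share one port;
    - content_name: 0 and 1 list the contents that take part in the bundle; in this example 0 is audio and 1 is video;

Note:

The three members of our SDP class, ContentInfo, TransportInfo and ContentGroup, all carry a content name, and it means the same thing in each of them (in this example 0 is audio and 1 is video). That name ties the layers of the SDP together. This makes it easy to translate the objects into SDP text and, just as easily, to parse SDP text back into objects.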

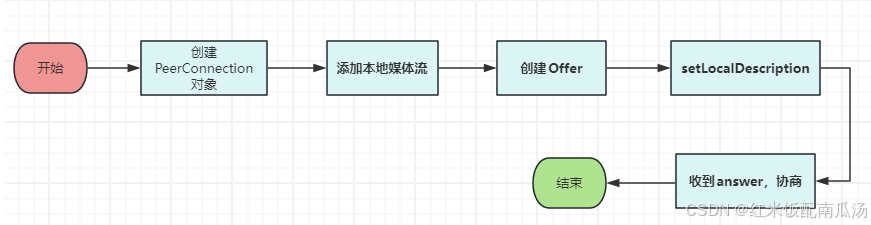
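The note above can be illustrated with a toy model of the three member lists and a function that joins them by content name (MID) while emitting SDP lines. This is a heavily simplified sketch, and several details are assumptions for illustration: the struct layouts, the `payload_types` stand-in for MediaContentDescription, and the hard-coded `UDP/TLS/RTP/SAVPF` protocol and port 9. None of this reflects the real `cricket::` class definitions.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical, heavily simplified mirror of the SDP class diagram.
struct ContentInfo {
  std::string name;                // the MID, e.g. "0"
  std::string media;               // "audio" or "video" (simplification)
  bool rejected = false;
  std::vector<int> payload_types;  // stands in for MediaContentDescription
};

struct TransportInfo {
  std::string content_name;  // same MID as the ContentInfo it belongs to
  std::string ice_ufrag;
  std::string ice_pwd;
};

struct ContentGroup {
  std::string semantics;                   // e.g. "BUNDLE"
  std::vector<std::string> content_names;  // MIDs in the group
};

struct SessionDescription {
  std::vector<ContentInfo> contents;
  std::vector<TransportInfo> transport_infos;
  std::vector<ContentGroup> groups;

  // Joins a TransportInfo to its ContentInfo through the shared MID.
  const TransportInfo* GetTransportInfoByName(const std::string& name) const {
    for (const TransportInfo& t : transport_infos)
      if (t.content_name == name) return &t;
    return nullptr;
  }
};

// Walks the three lists and emits a partial SDP body.
std::string ToSdpText(const SessionDescription& desc) {
  std::ostringstream out;
  for (const ContentGroup& g : desc.groups) {  // session-level a=group lines
    out << "a=group:" << g.semantics;
    for (const std::string& n : g.content_names) out << ' ' << n;
    out << "\r\n";
  }
  for (const ContentInfo& c : desc.contents) {
    // A rejected m-section is signaled with port 0.
    out << "m=" << c.media << ' ' << (c.rejected ? 0 : 9)
        << " UDP/TLS/RTP/SAVPF";
    for (int pt : c.payload_types) out << ' ' << pt;
    out << "\r\n";
    if (const TransportInfo* t = desc.GetTransportInfoByName(c.name)) {
      out << "a=ice-ufrag:" << t->ice_ufrag << "\r\n";
      out << "a=ice-pwd:" << t->ice_pwd << "\r\n";
    }
    out << "a=mid:" << c.name << "\r\n";
  }
  return out.str();
}
```

Every TransportInfo and every ContentGroup entry refers to a ContentInfo by the same MID. Serialization therefore only needs a lookup by name, and parsing can rebuild the same three lists from the `a=mid`, `a=ice-*` and `a=group` lines.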

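IV. Building the class relationships:

In other words, think about how to write code (classes) that maps easily onto every member of the SDP string. (Roughly speaking, a member variable of a class corresponds to a keyword on some line of the SDP.)

The flow is summarized below:

1. Call sequence in the official demo: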

// Code path: .\examples\peerconnection\client\conductor.cc
bool Conductor::InitializePeerConnection() {
  peer_connection_factory_ = webrtc::CreatePeerConnectionFactory(
      /* non-essential arguments omitted... */);
  // Create the PeerConnection object
  if (!CreatePeerConnection(/*dtls=*/true)) {
    main_wnd_->MessageBox("Error", "CreatePeerConnection failed", true);
    DeletePeerConnection();
  }
  // Add the tracks to the PeerConnection
  AddTracks();
  return peer_connection_ != nullptr;
}

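The call order is: create the factory object -> create the PeerConnection object through a factory method -> add tracks to the PeerConnection. We take this as the entry point for the analysis.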

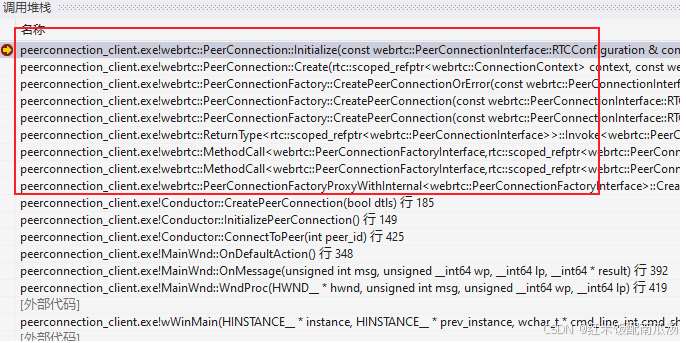

2. Creating the PeerConnection object:

CreatePeerConnection:

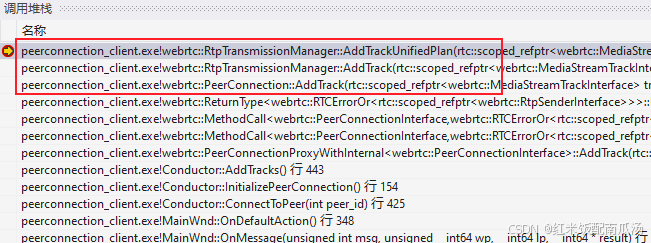

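This is the call stack I captured by running the official Windows demo. Looking at it first makes the rest easier to follow:

Entry point in the demo code: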

bool Conductor::CreatePeerConnection(bool dtls) {
  // Non-essential code omitted...
  peer_connection_ = peer_connection_factory_->CreatePeerConnection(
      config, nullptr, nullptr, this);
  return peer_connection_ != nullptr;
}

Next we enter the WebRTC code proper (not demo code):

// Code path: .\src\pc\peer_connection_factory.cc
rtc::scoped_refptr<PeerConnectionInterface>
PeerConnectionFactory::CreatePeerConnection(
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies dependencies) {
  // Create the PeerConnection
  auto result =
      CreatePeerConnectionOrError(configuration, std::move(dependencies));
  if (result.ok()) {
    return result.MoveValue();
  } else {
    return nullptr;
  }
}

// Code path: .\src\pc\peer_connection_factory.cc
RTCErrorOr<rtc::scoped_refptr<PeerConnectionInterface>>
PeerConnectionFactory::CreatePeerConnectionOrError(
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies dependencies) {
  // Non-essential code omitted...
  // Create the PeerConnection
  auto result = PeerConnection::Create(context_, options_, std::move(event_log),
                                       std::move(call), configuration,
                                       std::move(dependencies));
  if (!result.ok()) {
    return result.MoveError();
  }
  // Wrap the PeerConnection in a proxy that marshals calls onto the signaling
  // thread (and some onto the network thread); see the threading chapter
  rtc::scoped_refptr<PeerConnectionInterface> result_proxy =
      PeerConnectionProxy::Create(signaling_thread(), network_thread(),
                                  result.MoveValue());
  return result_proxy;
}

Leaving the factory, PeerConnection::Create is called:

RTCErrorOr<rtc::scoped_refptr<PeerConnection>> PeerConnection::Create(
    rtc::scoped_refptr<ConnectionContext> context,
    const PeerConnectionFactoryInterface::Options& options,
    std::unique_ptr<RtcEventLog> event_log,
    std::unique_ptr<Call> call,
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies dependencies) {
  // Non-essential code omitted...
  // The PeerConnection constructor consumes some, but not all, dependencies.
  // Construct the PeerConnection and initialize it
  rtc::scoped_refptr<PeerConnection> pc(
      new rtc::RefCountedObject<PeerConnection>(
          context, options, is_unified_plan, std::move(event_log),
          std::move(call), dependencies, dtls_enabled));
  RTCError init_error = pc->Initialize(configuration, std::move(dependencies));
  if (!init_error.ok()) {
    RTC_LOG(LS_ERROR) << "PeerConnection initialization failed";
    return init_error;
  }
  return pc;
}

At this point, the PeerConnection has been created.

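3. Adding local media streams:

AddTracks (on the web, the counterpart is getUserMedia followed by addTrack): this step is crucial, because at this point the class relationship diagram can be roughly determined.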

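Let's look at the call stack:

If you think about it, each track is one media source (audio or video), and nearly everything that follows revolves around that source. AddTrack adds the media source to the PeerConnection, and only then can the PeerConnection create the SDP text.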

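The approach is as follows:

- AddTrack creates a Sender, a Receiver and a Transceiver;

- once the Sender and Receiver are created, both are passed to the Transceiver as arguments.

This adds the media source so that the offer can be created later.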
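Here is a toy model of this AddTrack flow using simplified types. `Track`, `Sender`, `Receiver`, `Transceiver` and `ToyPeerConnection` are hypothetical names, not WebRTC classes. The sketch mirrors the kind check and the duplicate-sender check in `PeerConnection::AddTrack` below, and creates one transceiver per track. Unified Plan may also reuse an existing unused transceiver, which this sketch does not model.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical toy types standing in for the track, sender, receiver and
// transceiver classes in WebRTC's pc/ directory.
struct Track {
  std::string id;
  std::string kind;  // "audio" or "video"
};
struct Sender {
  std::shared_ptr<Track> track;  // the local track being sent
};
struct Receiver {
  std::string kind;  // the remote track is only known after negotiation
};
struct Transceiver {
  std::string kind;
  std::shared_ptr<Sender> sender;
  std::shared_ptr<Receiver> receiver;
};

class ToyPeerConnection {
 public:
  // Returns the new sender, or nullptr on error (invalid kind, or a sender
  // already exists for this track).
  std::shared_ptr<Sender> AddTrack(const std::shared_ptr<Track>& track) {
    if (track->kind != "audio" && track->kind != "video") return nullptr;
    for (const Transceiver& t : transceivers_)
      if (t.sender->track == track) return nullptr;
    auto sender = std::make_shared<Sender>(Sender{track});
    auto receiver = std::make_shared<Receiver>(Receiver{track->kind});
    // Sender and Receiver are handed to a Transceiver, which later becomes
    // one m-line in the SDP.
    transceivers_.push_back(Transceiver{track->kind, sender, receiver});
    return sender;
  }

  const std::vector<Transceiver>& transceivers() const { return transceivers_; }

 private:
  std::vector<Transceiver> transceivers_;
};
```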

Let's walk through the code with audio as the example (video is similar and is not repeated):

// Code path: .\examples\peerconnection\client\conductor.cc
void Conductor::AddTracks() {
  // Non-essential code omitted...
  // Create the audio track
  rtc::scoped_refptr<webrtc::AudioTrackInterface> audio_track(
      peer_connection_factory_->CreateAudioTrack(
          kAudioLabel, peer_connection_factory_->CreateAudioSource(
                           cricket::AudioOptions())));
  // and add the track to the PeerConnection
  auto result_or_error = peer_connection_->AddTrack(audio_track, {kStreamId});
  // The video part is similar and omitted
}

Into the WebRTC code:

// Code path: .\pc\peer_connection.cc
RTCErrorOr<rtc::scoped_refptr<RtpSenderInterface>> PeerConnection::AddTrack(
    rtc::scoped_refptr<MediaStreamTrackInterface> track,
    const std::vector<std::string>& stream_ids) {
  // Non-essential code omitted...
  // Validate the kind: only audio and video tracks are accepted
  if (!(track->kind() == MediaStreamTrackInterface::kAudioKind ||
        track->kind() == MediaStreamTrackInterface::kVideoKind)) {
    LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_PARAMETER,
                         "Track has invalid kind: " + track->kind());
  }
  if (IsClosed()) {
    LOG_AND_RETURN_ERROR(RTCErrorType::INVALID_STATE,
                         "PeerConnection is closed.");
  }
  if (rtp_manager()->FindSenderForTrack(track)) {
    LOG_AND_RETURN_ERROR(
        RTCErrorType::INVALID_PARAMETER,
        "Sender already exists for track " + track->id() + ".");
  }
  // rtp_manager manages the Senders, Receivers and Transceivers
  auto sender_or_error = rtp_manager()->AddTrack(track, stream_ids);
  if (sender_or_error.ok()) {
    sdp_handler_->UpdateNegotiationNeeded();
    stats_->AddTrack(track);
  }
  return sender_or_error;
}

// Code path: .\src\pc\rtp_transmission_manager.cc
RTCErrorOr<rtc::scoped_refptr<RtpSenderInterface>>
RtpTransmissionManager::AddTrack(
    rtc::scoped_refptr<MediaStreamTrackInterface> track,
    const std::vector<std::string>& stream_ids) {
  RTC_DCHECK_RUN_ON(signaling_thread());
  // We follow the newer Unified Plan path
  return (IsUnifiedPlan() ? AddTrackUnifiedPlan(track, stream_ids)
                          : AddTrackPlanB(track, stream_ids));
}


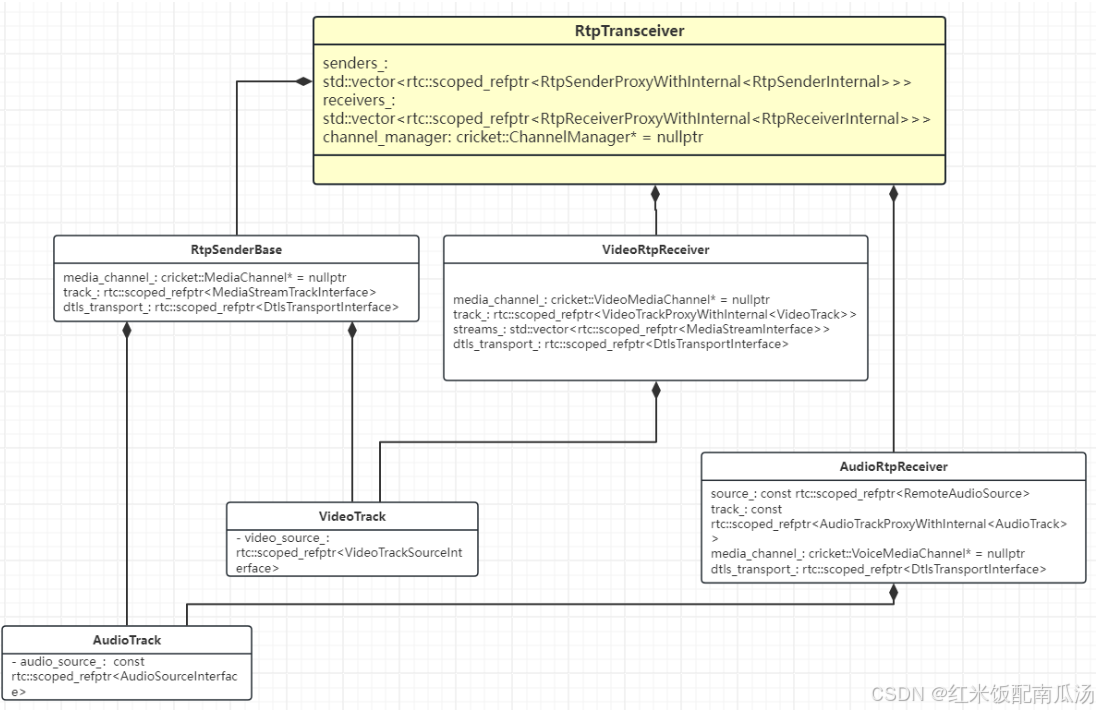

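So what is the relationship between the Sender, Receiver and Transceiver?

- In WebRTC, everything at the interface layer that involves SDP belongs to the Session layer;

- all SDP is created through the PeerConnection, which holds the transceivers; each transceiver corresponds to one m-line in the SDP;

- the three important member variables in there are the audio/video Senders, Receivers and Transceivers;

- AudioRtpSender and VideoRtpSender both inherit from RtpSenderBase;

- AudioRtpReceiver and VideoRtpReceiver are independent classes; they also contain a media_channel, which represents the codec, and a dtls_transport, which represents the transport layer;

- both the Sender and the Receiver are created in AddTrack, which is how they connect to the upper-layer track;

- so RtpTransceiver connects to the track above, the channel (codec) in the middle and the transport layer below. This is why the class diagram, and from it the SDP text, can be generated through the transceivers.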
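Because each transceiver becomes one m-line, the offer options can be pictured as a one-to-one projection of the transceiver list. The sketch below assumes a simplified transceiver state and numeric MIDs assigned in order, skipping MIDs already in use. `TransceiverState`, `MediaSectionOption` and `BuildOfferOptions` are hypothetical names. In WebRTC, the corresponding per-section options are the `session_options.media_description_options` that `MediaSessionDescriptionFactory::CreateOffer` iterates over later in this article.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical, simplified state of one transceiver.
struct TransceiverState {
  std::string kind;       // "audio" or "video"
  std::string mid;        // empty until first negotiated
  std::string direction;  // "sendrecv", "sendonly", "recvonly", "inactive"
  bool stopped = false;
};

// One option per transceiver -> one m-line per transceiver.
struct MediaSectionOption {
  std::string type;
  std::string mid;
  std::string direction;
  bool stopped;
};

// Assigns numeric MIDs ("0", "1", ...) to transceivers that lack one,
// skipping values already in use, then emits one option per transceiver.
std::vector<MediaSectionOption> BuildOfferOptions(
    std::vector<TransceiverState>& transceivers) {
  auto in_use = [&](const std::string& mid) {
    for (const TransceiverState& t : transceivers)
      if (t.mid == mid) return true;
    return false;
  };
  int next = 0;
  std::vector<MediaSectionOption> options;
  for (TransceiverState& t : transceivers) {
    if (t.mid.empty()) {
      while (in_use(std::to_string(next))) ++next;
      t.mid = std::to_string(next++);
    }
    options.push_back({t.kind, t.mid, t.direction, t.stopped});
  }
  return options;
}
```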

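4. Creating the offer:

1) Certificate generation flow:

- a certificate must be generated before the offer can be generated;

- certificate creation is started in pc->Initialize();

- once the certificate-ready event has been received, offer generation begins;

2) Certificate generation code:

Starting from PeerConnection::Initialize: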

// Code path: .\pc\peer_connection.cc
RTCError PeerConnection::Initialize(
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies dependencies) {
  // Unrelated code removed...
  // Offers and answers are ultimately handled through this handler
  sdp_handler_ =
      SdpOfferAnswerHandler::Create(this, configuration, dependencies);
  // Create the RtpTransmissionManager, which manages the Senders, Receivers
  // and Transceivers
  rtp_manager_ = std::make_unique<RtpTransmissionManager>(
      IsUnifiedPlan(), signaling_thread(), worker_thread(), channel_manager(),
      &usage_pattern_, observer_, stats_.get(), [this]() {
        RTC_DCHECK_RUN_ON(signaling_thread());
        sdp_handler_->UpdateNegotiationNeeded();
      });
  // Unrelated code removed...
  return RTCError::OK();
}

The following function is static:

// Code path: .\pc\sdp_offer_answer.cc
// Static
std::unique_ptr<SdpOfferAnswerHandler> SdpOfferAnswerHandler::Create(
    PeerConnection* pc,
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies& dependencies) {
  // Create an SdpOfferAnswerHandler for this PeerConnection, owned by a
  // smart pointer
  auto handler = absl::WrapUnique(new SdpOfferAnswerHandler(pc));
  handler->Initialize(configuration, dependencies);
  return handler;
}

// Initialization
void SdpOfferAnswerHandler::Initialize(
    const PeerConnectionInterface::RTCConfiguration& configuration,
    PeerConnectionDependencies& dependencies) {
  // Unrelated code removed...
  // Obtain a certificate from RTCConfiguration if any were provided (optional).
  rtc::scoped_refptr<rtc::RTCCertificate> certificate;
  if (!configuration.certificates.empty()) {
    // TODO(hbos,torbjorng): Decide on certificate-selection strategy instead of
    // just picking the first one. The decision should be made based on the DTLS
    // handshake. The DTLS negotiations need to know about all certificates.
    certificate = configuration.certificates[0];
  }
  // Construct a WebRtcSessionDescriptionFactory (this posts a
  // create-certificate task to the worker thread)
  webrtc_session_desc_factory_ =
      std::make_unique<WebRtcSessionDescriptionFactory>(
          signaling_thread(), channel_manager(), this, pc_->session_id(),
          pc_->dtls_enabled(), std::move(dependencies.cert_generator),
          certificate, &ssrc_generator_,
          [this](const rtc::scoped_refptr<rtc::RTCCertificate>& certificate) {
            transport_controller()->SetLocalCertificate(certificate);
          });
  // Unrelated code removed...
}

The constructor requests certificate generation:

// Code path: .\pc\webrtc_session_description_factory.cc
WebRtcSessionDescriptionFactory::WebRtcSessionDescriptionFactory(
    rtc::Thread* signaling_thread,
    cricket::ChannelManager* channel_manager,
    const SdpStateProvider* sdp_info,
    const std::string& session_id,
    bool dtls_enabled,
    std::unique_ptr<rtc::RTCCertificateGeneratorInterface> cert_generator,
    const rtc::scoped_refptr<rtc::RTCCertificate>& certificate,
    UniqueRandomIdGenerator* ssrc_generator,
    std::function<void(const rtc::scoped_refptr<rtc::RTCCertificate>&)>
        on_certificate_ready)
    : signaling_thread_(signaling_thread),
      session_desc_factory_(channel_manager,
                            &transport_desc_factory_,
                            ssrc_generator),
      // RFC 4566 suggested a Network Time Protocol (NTP) format timestamp
      // as the session id and session version. To simplify, it should be fine
      // to just use a random number as session id and start version from
      // |kInitSessionVersion|.
      session_version_(kInitSessionVersion),
      cert_generator_(dtls_enabled ? std::move(cert_generator) : nullptr),
      sdp_info_(sdp_info),
      session_id_(session_id),
      certificate_request_state_(CERTIFICATE_NOT_NEEDED),
      on_certificate_ready_(on_certificate_ready) {
  RTC_DCHECK(signaling_thread_);
  // Unrelated code removed...
  // Generate certificate.
  certificate_request_state_ = CERTIFICATE_WAITING;
  rtc::scoped_refptr<WebRtcCertificateGeneratorCallback> callback(
      new rtc::RefCountedObject<WebRtcCertificateGeneratorCallback>());
  callback->SignalRequestFailed.connect(
      this, &WebRtcSessionDescriptionFactory::OnCertificateRequestFailed);
  // Register the callback invoked when the certificate has been created
  callback->SignalCertificateReady.connect(
      this, &WebRtcSessionDescriptionFactory::SetCertificate);
  rtc::KeyParams key_params = rtc::KeyParams();
  RTC_LOG(LS_VERBOSE)
      << "DTLS-SRTP enabled; sending DTLS identity request (key type: "
      << key_params.type() << ").";
  // Request certificate. This happens asynchronously, so that the caller gets
  // a chance to connect to |SignalCertificateReady|.
  cert_generator_->GenerateCertificateAsync(key_params, absl::nullopt,
                                            callback);
}

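At this point the signaling thread's part of certificate creation is finished. The actual generation is handed to the worker thread, which calls back with a notification once the certificate is ready.

3) Offer creation code:

As described above, the signaling thread has asked the worker thread to create the certificate. When the certificate is ready, it is posted to the signaling thread's message queue, which triggers a callback on the signaling thread. The steps are:

- while the worker thread is creating the certificate, the signaling thread does not wait; it goes on with the create-offer task by adding it to create_session_description_requests_;

- after the worker thread has created the certificate and triggered the signaling thread's callback, the signaling thread runs SetCertificate;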
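The queue-until-ready pattern described above can be sketched without threads. `ToyDescriptionFactory`, `CertState` and the string stand-in for the offer are hypothetical. The sketch only models two things: the state check in `WebRtcSessionDescriptionFactory::CreateOffer` and the FIFO drain in `SetCertificate`. The cross-thread posting is left out.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <string>
#include <utility>

// Hypothetical model of the certificate-pending request queue.
enum class CertState { kNotNeeded, kWaiting, kSucceeded };

class ToyDescriptionFactory {
 public:
  using Callback = std::function<void(const std::string&)>;

  explicit ToyDescriptionFactory(bool dtls_enabled)
      : state_(dtls_enabled ? CertState::kWaiting : CertState::kNotNeeded) {}

  // "Signaling thread": run the request now, or park it until the
  // certificate arrives.
  void CreateOffer(Callback on_done) {
    if (state_ == CertState::kWaiting) {
      pending_.push(std::move(on_done));
    } else {
      on_done(BuildOffer());
    }
  }

  // Invoked when the asynchronously generated certificate is ready:
  // remember it, then drain the queued requests in FIFO order.
  void SetCertificate(const std::string& fingerprint) {
    fingerprint_ = fingerprint;
    state_ = CertState::kSucceeded;
    while (!pending_.empty()) {
      pending_.front()(BuildOffer());
      pending_.pop();
    }
  }

 private:
  std::string BuildOffer() const {
    return fingerprint_.empty() ? std::string("offer")
                                : "offer fp=" + fingerprint_;
  }

  CertState state_;
  std::string fingerprint_;
  std::queue<Callback> pending_;
};
```

The design keeps the caller's API asynchronous either way. A request made before the certificate exists completes later through the same callback, so the caller never has to know whether a certificate was pending.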

a) Start creating the offer:

// Code path: .\pc\peer_connection.cc
// Start creating the offer
void PeerConnection::CreateOffer(CreateSessionDescriptionObserver* observer,
                                 const RTCOfferAnswerOptions& options) {
  RTC_DCHECK_RUN_ON(signaling_thread());
  sdp_handler_->CreateOffer(observer, options);
}

Into SdpOfferAnswerHandler:

// Code path: .\pc\sdp_offer_answer.cc
void SdpOfferAnswerHandler::CreateOffer(
    CreateSessionDescriptionObserver* observer,
    const PeerConnectionInterface::RTCOfferAnswerOptions& options) {
  // Unrelated code omitted...
  // This is where the work happens
  this_weak_ptr->DoCreateOffer(options, observer_wrapper);
}

void SdpOfferAnswerHandler::DoCreateOffer(
    const PeerConnectionInterface::RTCOfferAnswerOptions& options,
    rtc::scoped_refptr<CreateSessionDescriptionObserver> observer) {
  // Unrelated code removed...
  // Create the offer
  webrtc_session_desc_factory_->CreateOffer(observer, options, session_options);
}

Then into:

// Code path: .\pc\webrtc_session_description_factory.cc
void WebRtcSessionDescriptionFactory::CreateOffer(
    CreateSessionDescriptionObserver* observer,
    const PeerConnectionInterface::RTCOfferAnswerOptions& options,
    const cricket::MediaSessionOptions& session_options) {
  // Non-essential code removed...
  // Wrap the create-offer request; it is queued if the certificate is not
  // ready yet, otherwise the offer is created right away
  CreateSessionDescriptionRequest request(
      CreateSessionDescriptionRequest::kOffer, observer, session_options);
  if (certificate_request_state_ == CERTIFICATE_WAITING) {
    create_session_description_requests_.push(request);
  } else {
    RTC_DCHECK(certificate_request_state_ == CERTIFICATE_SUCCEEDED ||
               certificate_request_state_ == CERTIFICATE_NOT_NEEDED);
    InternalCreateOffer(request);
  }
}

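That completes the create-offer call. The rest of the flow runs once the certificate has been created.

b) Certificate-ready callback:

When the certificate created by the worker thread arrives (i.e. the custom message queue has data), SetCertificate is triggered. SetCertificate takes the pending tasks from create_session_description_requests_, starting with the one at the front, and runs them to create the SessionDescription (gathering the audio/video information and finally building the SDP class diagram):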

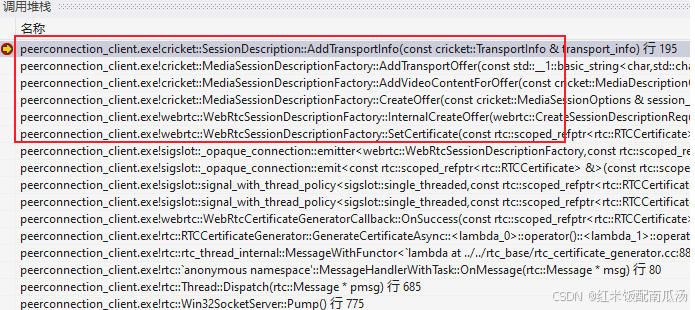

Let's look at the call stack:

Code walkthrough:

// .\pc\webrtc_session_description_factory.cc
void WebRtcSessionDescriptionFactory::OnMessage(rtc::Message* msg) {
  switch (msg->message_id) {
    // Unrelated code removed...
    case MSG_USE_CONSTRUCTOR_CERTIFICATE: {
      rtc::ScopedRefMessageData<rtc::RTCCertificate>* param =
          static_cast<rtc::ScopedRefMessageData<rtc::RTCCertificate>*>(
              msg->pdata);
      RTC_LOG(LS_INFO) << "Using certificate supplied to the constructor.";
      SetCertificate(param->data());
      delete param;
      break;
    }
  }
}

// Set the certificate, then create the offer
void WebRtcSessionDescriptionFactory::SetCertificate(
    const rtc::scoped_refptr<rtc::RTCCertificate>& certificate) {
  RTC_DCHECK(certificate);
  RTC_LOG(LS_VERBOSE) << "Setting new certificate.";
  certificate_request_state_ = CERTIFICATE_SUCCEEDED;
  on_certificate_ready_(certificate);
  transport_desc_factory_.set_certificate(certificate);
  transport_desc_factory_.set_secure(cricket::SEC_ENABLED);
  while (!create_session_description_requests_.empty()) {
    if (create_session_description_requests_.front().type ==
        CreateSessionDescriptionRequest::kOffer) {
      // Take the create-offer request at the head of the queue and create
      // the offer
      InternalCreateOffer(create_session_description_requests_.front());
    } else {
      InternalCreateAnswer(create_session_description_requests_.front());
    }
    create_session_description_requests_.pop();
  }
}

void WebRtcSessionDescriptionFactory::InternalCreateOffer(
    CreateSessionDescriptionRequest request) {
  // Non-essential code removed...
  // Build the SDP object desc
  std::unique_ptr<cricket::SessionDescription> desc =
      session_desc_factory_.CreateOffer(
          request.options, sdp_info_->local_description()
                               ? sdp_info_->local_description()->description()
                               : nullptr);
  if (!desc) {
    PostCreateSessionDescriptionFailed(request.observer,
                                       "Failed to initialize the offer.");
    return;
  }
  // Non-essential code removed (including the creation of `offer` from desc)...
  // Notify that the SessionDescription was created successfully
  PostCreateSessionDescriptionSucceeded(request.observer, std::move(offer));
}

Into MediaSessionDescriptionFactory (media_session.cc):

// Code path: .\pc\media_session.cc
std::unique_ptr<SessionDescription> MediaSessionDescriptionFactory::CreateOffer(
    const MediaSessionOptions& session_options,
    const SessionDescription* current_description) const {
  // Must have options for each existing section.
  if (current_description) {
    RTC_DCHECK_LE(current_description->contents().size(),
                  session_options.media_description_options.size());
  }
  IceCredentialsIterator ice_credentials(
      session_options.pooled_ice_credentials);
  std::vector<const ContentInfo*> current_active_contents;
  if (current_description) {
    current_active_contents =
        GetActiveContents(*current_description, session_options);
  }
  StreamParamsVec current_streams =
      GetCurrentStreamParams(current_active_contents);
  AudioCodecs offer_audio_codecs;
  VideoCodecs offer_video_codecs;
  RtpDataCodecs offer_rtp_data_codecs;
  GetCodecsForOffer(
      current_active_contents, &offer_audio_codecs, &offer_video_codecs,
      session_options.data_channel_type == DataChannelType::DCT_SCTP
          ? nullptr
          : &offer_rtp_data_codecs);
  if (!session_options.vad_enabled) {
    // If application doesn't want CN codecs in offer.
    StripCNCodecs(&offer_audio_codecs);
  }
  AudioVideoRtpHeaderExtensions extensions_with_ids =
      GetOfferedRtpHeaderExtensionsWithIds(
          current_active_contents, session_options.offer_extmap_allow_mixed,
          session_options.media_description_options);
  auto offer = std::make_unique<SessionDescription>();
  // Iterate through the media description options, matching with existing media
  // descriptions in |current_description|.
  size_t msection_index = 0;
  for (const MediaDescriptionOptions& media_description_options :
       session_options.media_description_options) {
    const ContentInfo* current_content = nullptr;
    if (current_description &&
        msection_index < current_description->contents().size()) {
      current_content = &current_description->contents()[msection_index];
      // Media type must match unless this media section is being recycled.
      RTC_DCHECK(current_content->name != media_description_options.mid ||
                 IsMediaContentOfType(current_content,
                                      media_description_options.type));
    }
    switch (media_description_options.type) {
      case MEDIA_TYPE_AUDIO:
        // Add the audio content (ContentInfo) to the offer
        if (!AddAudioContentForOffer(media_description_options, session_options,
                                     current_content, current_description,
                                     extensions_with_ids.audio,
                                     offer_audio_codecs, &current_streams,
                                     offer.get(), &ice_credentials)) {
          return nullptr;
        }
        break;
      // Unimportant code removed...
    }
    ++msection_index;
  }
  // Unimportant code removed...
}

i) Adding ContentInfo:

One of the three main members of the SDP!

// Code path: .\pc\media_session.cc
// Add a ContentInfo to the offer
bool MediaSessionDescriptionFactory::AddAudioContentForOffer(
    const MediaDescriptionOptions& media_description_options,
    const MediaSessionOptions& session_options,
    const ContentInfo* current_content,
    const SessionDescription* current_description,
    const RtpHeaderExtensions& audio_rtp_extensions,
    const AudioCodecs& audio_codecs,
    StreamParamsVec* current_streams,
    SessionDescription* desc,
    IceCredentialsIterator* ice_credentials) const {
  // Filter audio_codecs (which includes all codecs, with correctly remapped
  // payload types) based on transceiver direction.
  // With a sendrecv direction, the codecs for both directions (encoders and
  // decoders) are needed
  const AudioCodecs& supported_audio_codecs =
      GetAudioCodecsForOffer(media_description_options.direction);
  AudioCodecs filtered_codecs;
  // Check whether codec preferences have been set
  if (!media_description_options.codec_preferences.empty()) {
    // Add the codecs from the current transceiver's codec preferences.
    // They override any existing codecs from previous negotiations.
    filtered_codecs = MatchCodecPreference(
        media_description_options.codec_preferences, supported_audio_codecs);
  } else {
    // Add the codecs from current content if it exists and is not rejected nor
    // recycled. For the first offer there is no current_content (nullptr),
    // so this branch is skipped.
    if (current_content && !current_content->rejected &&
        current_content->name == media_description_options.mid) {
      RTC_CHECK(IsMediaContentOfType(current_content, MEDIA_TYPE_AUDIO));
      const AudioContentDescription* acd =
          current_content->media_description()->as_audio();
      for (const AudioCodec& codec : acd->codecs()) {
        if (FindMatchingCodec<AudioCodec>(acd->codecs(), audio_codecs, codec,
                                          nullptr)) {
          filtered_codecs.push_back(codec);
        }
      }
    }
    // Add other supported audio codecs.
    // Walk all supported codecs and append each one that matches and is not
    // already in filtered_codecs
    AudioCodec found_codec;
    for (const AudioCodec& codec : supported_audio_codecs) {
      if (FindMatchingCodec<AudioCodec>(supported_audio_codecs, audio_codecs,
                                        codec, &found_codec) &&
          !FindMatchingCodec<AudioCodec>(supported_audio_codecs,
                                         filtered_codecs, codec, nullptr)) {
        // Use the |found_codec| from |audio_codecs| because it has the
        // correctly mapped payload type.
        filtered_codecs.push_back(found_codec);
      }
    }
  }
  cricket::SecurePolicy sdes_policy =
      IsDtlsActive(current_content, current_description) ? cricket::SEC_DISABLED
                                                         : secure();
  auto audio = std::make_unique<AudioContentDescription>();
  std::vector<std::string> crypto_suites;
  GetSupportedAudioSdesCryptoSuiteNames(session_options.crypto_options,
                                        &crypto_suites);
  // The filtered codecs are passed into this function
  if (!CreateMediaContentOffer(media_description_options, session_options,
                               filtered_codecs, sdes_policy,
                               GetCryptos(current_content), crypto_suites,
                               audio_rtp_extensions, ssrc_generator_,
                               current_streams, audio.get())) {
    return false;
  }
  bool secure_transport = (transport_desc_factory_->secure() != SEC_DISABLED);
  // Set the media protocol, e.g. UDP/TLS/RTP/SAVPF
  SetMediaProtocol(secure_transport, audio.get());
  audio->set_direction(media_description_options.direction);
  // Create the ContentInfo (one of the three main members in the SDP class
  // diagram) and put the AudioContentDescription built above into it
  desc->AddContent(media_description_options.mid, MediaProtocolType::kRtp,
                   media_description_options.stopped, std::move(audio));
  // Add the transport information for the offer
  if (!AddTransportOffer(media_description_options.mid,
                         media_description_options.transport_options,
                         current_description, desc, ice_credentials)) {
    return false;
  }
  return true;
}

// CreateMediaContentOffer, called above
template <class C>
static bool CreateMediaContentOffer(
    const MediaDescriptionOptions& media_description_options,
    const MediaSessionOptions& session_options,
    const std::vector<C>& codecs,
    const SecurePolicy& secure_policy,
    const CryptoParamsVec* current_cryptos,
    const std::vector<std::string>& crypto_suites,
    const RtpHeaderExtensions& rtp_extensions,
    UniqueRandomIdGenerator* ssrc_generator,
    StreamParamsVec* current_streams,
    MediaContentDescriptionImpl<C>* offer) {
  // Add the supported codecs to the offer
  offer->AddCodecs(codecs);
  // Add the stream parameters (streams, SSRCs)
  if (!AddStreamParams(media_description_options.sender_options,
                       session_options.rtcp_cname, ssrc_generator,
                       current_streams, offer)) {
    return false;
  }
  // Fill in the rest of the MediaContentDescription; the last argument,
  // offer, is an output parameter
  return CreateContentOffer(media_description_options, session_options,
                            secure_policy, current_cryptos, crypto_suites,
                            rtp_extensions, ssrc_generator, current_streams,
                            offer);
}

// Fill in the rest of the MediaContentDescription; the last argument, offer,
// is an output parameter
static bool CreateContentOffer(
    const MediaDescriptionOptions& media_description_options,
    const MediaSessionOptions& session_options,
    const SecurePolicy& secure_policy,
    const CryptoParamsVec* current_cryptos,
    const std::vector<std::string>& crypto_suites,
    const RtpHeaderExtensions& rtp_extensions,
    UniqueRandomIdGenerator* ssrc_generator,
    StreamParamsVec* current_streams,
    MediaContentDescription* offer) {
  offer->set_rtcp_mux(session_options.rtcp_mux_enabled);
  if (offer->type() == cricket::MEDIA_TYPE_VIDEO) {
    offer->set_rtcp_reduced_size(true);
  }
  // Build the vector of header extensions with directions for this
  // media_description's options.
  // (These become the offer's RTP header extension entries.)
  RtpHeaderExtensions extensions;
  for (auto extension_with_id : rtp_extensions) {
    for (const auto& extension : media_description_options.header_extensions) {
      if (extension_with_id.uri == extension.uri) {
        // TODO(crbug.com/1051821): Configure the extension direction from
        // the information in the media_description_options extension
        // capability.
        extensions.push_back(extension_with_id);
      }
    }
  }
  offer->set_rtp_header_extensions(extensions);
  // Simulcast-related
  AddSimulcastToMediaDescription(media_description_options, offer);
  // We use DTLS by default; this block is for SDES, which carries the SRTP
  // keys in plain text inside the SDP (a=crypto lines)
  if (secure_policy != SEC_DISABLED) {
    if (current_cryptos) {
      AddMediaCryptos(*current_cryptos, offer);
    }
    if (offer->cryptos().empty()) {
      if (!CreateMediaCryptos(crypto_suites, offer)) {
        return false;
      }
    }
  }
  if (secure_policy == SEC_REQUIRED && offer->cryptos().empty()) {
    return false;
  }
  return true;
}
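The codec filtering in `AddAudioContentForOffer` can be condensed into a small standalone function. This sketch rests on a few simplifying assumptions. Codecs match on name and clock rate only. `FilterOfferCodecs` and the other names are hypothetical. The payload-type remapping that WebRTC performs through `FindMatchingCodec` is reduced to taking the entry from the supported list.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical simplified codec: payload type, name and clock rate only.
struct Codec {
  int id;  // payload type
  std::string name;
  int clockrate;
};

// Matching ignores the payload type: the same codec may carry different
// payload type numbers in different lists.
bool Matches(const Codec& a, const Codec& b) {
  return a.name == b.name && a.clockrate == b.clockrate;
}

const Codec* FindMatch(const std::vector<Codec>& list, const Codec& c) {
  for (const Codec& x : list)
    if (Matches(x, c)) return &x;
  return nullptr;
}

// 1. Non-empty preferences win outright (kept only if supported).
// 2. Otherwise keep the codecs of the previous negotiation, if any,
// 3. then append every remaining supported codec not listed yet.
std::vector<Codec> FilterOfferCodecs(const std::vector<Codec>& preferences,
                                     const std::vector<Codec>* current,
                                     const std::vector<Codec>& supported) {
  std::vector<Codec> filtered;
  if (!preferences.empty()) {
    for (const Codec& p : preferences)
      if (const Codec* s = FindMatch(supported, p)) filtered.push_back(*s);
    return filtered;
  }
  if (current != nullptr) {
    for (const Codec& c : *current)
      if (FindMatch(supported, c)) filtered.push_back(c);
  }
  for (const Codec& s : supported)
    if (!FindMatch(filtered, s)) filtered.push_back(s);
  return filtered;
}
```

On a first offer (no preferences, no previous description) this returns the supported list unchanged. On renegotiation the previously negotiated codecs move to the front, which keeps the existing choice stable.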

ii) Adding TransportInfo:

// Code path: .\pc\media_session.cc
bool MediaSessionDescriptionFactory::AddTransportOffer(
    const std::string& content_name,
    const TransportOptions& transport_options,
    const SessionDescription* current_desc,
    SessionDescription* offer_desc,
    IceCredentialsIterator* ice_credentials) const {
  if (!transport_desc_factory_)
    return false;
  const TransportDescription* current_tdesc =
      GetTransportDescription(content_name, current_desc);
  std::unique_ptr<TransportDescription> new_tdesc(
      transport_desc_factory_->CreateOffer(transport_options, current_tdesc,
                                           ice_credentials));
  if (!new_tdesc) {
    RTC_LOG(LS_ERROR) << "Failed to AddTransportOffer, content name="
                      << content_name;
    // Note: as quoted, execution continues and *new_tdesc below would
    // dereference a null pointer.
  }
  // Collect the TransportInfo
  offer_desc->AddTransportInfo(TransportInfo(content_name, *new_tdesc));
  return true;
}

// Code path: .\pc\session_description.cc
void SessionDescription::AddTransportInfo(const TransportInfo& transport_info) {
  transport_infos_.push_back(transport_info);
}
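For a TransportInfo, `transport_desc_factory_->CreateOffer` essentially has to produce ICE credentials plus the DTLS fingerprint. This sketch makes several assumptions. `ToyTransportDescription` and `CreateTransportOffer` are hypothetical names. Credentials are reused unless there is no previous description or an ICE restart was requested. The lengths 4 and 24 were chosen to satisfy the ICE minimums (ice-ufrag at least 4 characters and ice-pwd at least 22, drawn from letters, digits, `+` and `/`). `std::mt19937` is used only for brevity; real credentials should come from a cryptographically secure generator.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <string>

// Hypothetical stand-in for the transport part of one m-section.
struct ToyTransportDescription {
  std::string ice_ufrag;
  std::string ice_pwd;
  std::string fingerprint;  // e.g. "sha-256 AB:CD:..."; from the certificate
};

// ice-char = ALPHA / DIGIT / "+" / "/"
std::string RandomIceString(std::size_t length, std::mt19937& rng) {
  static const char kIceChars[] =
      "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";
  // sizeof includes the trailing '\0', hence - 2 for the last valid index.
  std::uniform_int_distribution<std::size_t> pick(0, sizeof(kIceChars) - 2);
  std::string s;
  for (std::size_t i = 0; i < length; ++i) s += kIceChars[pick(rng)];
  return s;
}

// Reuses the previous credentials unless this is the first offer or an ICE
// restart was requested; the fingerprint always reflects the current cert.
ToyTransportDescription CreateTransportOffer(
    const ToyTransportDescription* current, bool ice_restart,
    const std::string& fingerprint, std::mt19937& rng) {
  ToyTransportDescription desc;
  if (current != nullptr && !ice_restart) {
    desc.ice_ufrag = current->ice_ufrag;
    desc.ice_pwd = current->ice_pwd;
  } else {
    desc.ice_ufrag = RandomIceString(4, rng);
    desc.ice_pwd = RandomIceString(24, rng);
  }
  desc.fingerprint = fingerprint;
  return desc;
}
```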

iii) Producing the final SDP:

We are back in InternalCreateOffer, which produces the final SDP object (a JsepSessionDescription):

// Code path: pc\webrtc_session_description_factory.cc
void WebRtcSessionDescriptionFactory::InternalCreateOffer(
    CreateSessionDescriptionRequest request) {
  // Non-essential code removed...
  // Build the SDP object desc
  std::unique_ptr<cricket::SessionDescription> desc =
      session_desc_factory_.CreateOffer(
          request.options, sdp_info_->local_description()
                               ? sdp_info_->local_description()->description()
                               : nullptr);
  if (!desc) {
    PostCreateSessionDescriptionFailed(request.observer,
                                       "Failed to initialize the offer.");
    return;
  }
  // Produce the final offer SDP, i.e. a JsepSessionDescription
  auto offer = std::make_unique<JsepSessionDescription>(
      SdpType::kOffer, std::move(desc), session_id_,
      rtc::ToString(session_version_++));
  if (sdp_info_->local_description()) {
    for (const cricket::MediaDescriptionOptions& options :
         request.options.media_description_options) {
      if (!options.transport_options.ice_restart) {
        CopyCandidatesFromSessionDescription(sdp_info_->local_description(),
                                             options.mid, offer.get());
      }
    }
  }
  PostCreateSessionDescriptionSucceeded(request.observer, std::move(offer));
}

At this point, the SDP object has been created.
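The JsepSessionDescription wrapper adds the session-level identity that ends up in the SDP `o=` line. That identity is a fixed session id plus a version that is incremented for every new description (`session_version_++` above). Here is a minimal sketch, assuming the `o=- <id> <version> IN IP4 127.0.0.1` layout that WebRTC-generated offers typically show. `ToyOfferFactory` is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <utility>

// Hypothetical: owns one session id and a monotonically increasing version;
// each new description snapshots both into its origin line.
class ToyOfferFactory {
 public:
  ToyOfferFactory(std::string session_id, std::uint64_t initial_version)
      : session_id_(std::move(session_id)), session_version_(initial_version) {}

  // Origin line for the next description, e.g. "o=- 1234 2 IN IP4 127.0.0.1".
  std::string NextOriginLine() {
    return "o=- " + session_id_ + " " + std::to_string(session_version_++) +
           " IN IP4 127.0.0.1";
  }

 private:
  std::string session_id_;
  std::uint64_t session_version_;
};
```

Keeping the id fixed and bumping only the version lets the peer tell that a new offer belongs to the same session but has changed.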

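5. Setting the local description:

The entry point is PeerConnection::SetLocalDescription. Since a lot of code has been pasted above, this time I will list the key functions first and then show a little code. There are three main steps:

1) Creating the various transports:

Call stack: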

----> JsepTransport::SetLocalJsepTransportDescription

----> JsepTransportController::MaybeCreateJsepTransport

---> JsepTransportController::ApplyDescription_n // switches to the network thread internally

--> SdpOfferAnswerHandler::PushdownTransportDescription // creates the various transports (ICE, DTLS, DTLS-RTCP)

-> SdpOfferAnswerHandler::ApplyLocalDescription

-> SdpOfferAnswerHandler::DoSetLocalDescription

-> SdpOfferAnswerHandler::SetLocalDescription

-> PeerConnection::SetLocalDescription

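The steps above mainly create the transport-related objects:

- ICE: the transport paths that were gathered;

- DtlsTransport: exchanges certificates through the handshake; the keys are also obtained here;

- DtlsSrtpTransport: encrypts the data that is finally sent;

- some other transports, such as the one for application data, are not listed because they are not important here;

- ApplyDescription_n: the n stands for the network thread, i.e. the work is done on the network thread;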
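The transport stack above can be pictured as three wrapped layers. In this sketch, where all class names are hypothetical, the DTLS layer only provides keys. SRTP packets are protected with the DTLS-derived keys and then passed through to ICE unchanged, not wrapped in DTLS records, which is how DTLS-SRTP works. Real key export and encryption are replaced by string markers.

```cpp
#include <cassert>
#include <string>

// Bottom layer: stands in for the ICE transport that owns the selected
// candidate pair and puts bytes on the wire.
class ToyIceTransport {
 public:
  std::string Send(const std::string& packet) const {
    return "ice[" + packet + "]";
  }
};

// Middle layer: stands in for DtlsTransport. Its handshake yields the keys
// SRTP needs; already-protected SRTP packets pass through to ICE unchanged.
class ToyDtlsTransport {
 public:
  explicit ToyDtlsTransport(const ToyIceTransport& ice) : ice_(ice) {}
  void CompleteHandshake() { keys_ready_ = true; }
  bool keys_ready() const { return keys_ready_; }
  std::string SendSrtpPacket(const std::string& srtp) const {
    return ice_.Send(srtp);
  }

 private:
  const ToyIceTransport& ice_;
  bool keys_ready_ = false;
};

// Top layer: stands in for DtlsSrtpTransport; protects RTP with the
// DTLS-derived keys before handing it down.
class ToySrtpTransport {
 public:
  explicit ToySrtpTransport(const ToyDtlsTransport& dtls) : dtls_(dtls) {}
  // Returns false (nothing sent) until the DTLS handshake has finished.
  bool SendRtp(const std::string& rtp, std::string* wire) const {
    if (!dtls_.keys_ready()) return false;
    *wire = dtls_.SendSrtpPacket("srtp(" + rtp + ")");  // "encrypt", pass down
    return true;
  }

 private:
  const ToyDtlsTransport& dtls_;
};
```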

2) Creating the transceivers:

Call stack:

------> ChannelManager::voice_channels_ (stores the VoiceChannel that wraps the final WebRtcVoiceMediaChannel object)

--------> new WebRtcVoiceMediaChannel(engine, call) // creates a MediaChannel

-------> WebRtcVoiceEngine::CreateMediaChannel

------> ChannelManager::CreateVoiceChannel

-----> SdpOfferAnswerHandler::CreateVoiceChannel

----> SdpOfferAnswerHandler::UpdateTransceiverChannel

---> SdpOfferAnswerHandler::UpdateTransceiversAndDataChannels // creates the VoiceChannel and VideoChannel

--> SdpOfferAnswerHandler::ApplyLocalDescription // compare with where this step sits in the transport-creation call stack

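UpdateTransceiversAndDataChannels mainly sets up the transceivers (each transceiver is effectively one m-line in the SDP), including the channels that send and receive audio and video:

- CreateVoiceChannel creates the audio channel;

- which ultimately creates a WebRtcVoiceMediaChannel;

- and binds the voice_channel to that MediaChannel;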
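Here is a sketch of the per-m-section bookkeeping that this step performs. Every accepted section gets a channel, created together with its engine-level media channel, and a rejected section loses its channel. `ToyChannel`, `ToyMediaChannel`, `ToySection` and `UpdateChannels` are hypothetical names. Keying channels by MID is a simplification; in WebRTC the channel hangs off the transceiver.

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

struct ToyMediaChannel {  // engine level, e.g. WebRtcVoiceMediaChannel
  std::string engine;
};

struct ToyChannel {  // e.g. VoiceChannel / VideoChannel
  std::string mid;
  std::unique_ptr<ToyMediaChannel> media_channel;  // bound at creation time
};

struct ToySection {  // one m-section of the applied description
  std::string mid;
  std::string kind;  // "audio" or "video"
  bool rejected;
};

// Creates a channel (plus its engine-level media channel) for each accepted
// m-section that lacks one, and destroys the channels of rejected sections.
void UpdateChannels(
    const std::vector<ToySection>& sections,
    std::map<std::string, std::unique_ptr<ToyChannel>>& channels) {
  for (const ToySection& s : sections) {
    if (s.rejected) {
      channels.erase(s.mid);  // rejected m-line: tear the channel down
      continue;
    }
    if (channels.count(s.mid) != 0) continue;  // already has a channel
    auto channel = std::make_unique<ToyChannel>();
    channel->mid = s.mid;
    channel->media_channel = std::make_unique<ToyMediaChannel>(
        ToyMediaChannel{s.kind == "audio" ? "voice" : "video"});
    channels[s.mid] = std::move(channel);
  }
}
```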

3) Wiring things together:

Call stack:

-----------> std::make_unique()

----------> the AudioSendStream constructor calls voe::CreateChannelSend

---------> webrtc::AudioSendStream* Call::CreateAudioSendStream

--------> WebRtcAudioSendStream // its constructor creates an AudioSendStream (stream_ = call_->CreateAudioSendStream(config_))

-------> BaseChannel::UpdateLocalStreams_w // pushes this information into the streams on the worker thread

------> VoiceChannel::SetLocalContent_w // switches to the worker thread

-----> BaseChannel::SetLocalContent

----> SdpOfferAnswerHandler::PushdownMediaDescription // updates internal objects from the media sections of the SDP

---> SdpOfferAnswerHandler::UpdateSessionState

--> SdpOfferAnswerHandler::ApplyLocalDescription // everything starts here

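UpdateSessionState:

- this is the step where the channel created in the previous step gets associated with the codec;

- it updates the media negotiation state machine (introduced in the WebRTC Getting Started and Practice series);

- PushdownMediaDescription picks the media-related information out of the SDP and applies it;

- UpdateLocalStreams_w pushes that information into the streams on the worker thread (a stream is a concept that sits between the codec and the channel);

- ChannelSend is what our encoder is ultimately associated with; by default video uses VP8 and audio uses Opus;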
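The negotiation state machine that UpdateSessionState advances is the signaling state defined by the W3C WebRTC specification. The sketch below encodes its offer/pranswer/answer transitions, with rollback omitted. The enum and function names belong to this sketch only and are not WebRTC identifiers.

```cpp
#include <cassert>
#include <optional>

enum class SignalingState {
  kStable,
  kHaveLocalOffer,
  kHaveRemoteOffer,
  kHaveLocalPrAnswer,
  kHaveRemotePrAnswer,
};
enum class DescType { kOffer, kPrAnswer, kAnswer };
enum class Source { kLocal, kRemote };

// Returns the next signaling state, or std::nullopt if applying a
// description of this type from this source is invalid in state `s`.
std::optional<SignalingState> NextState(SignalingState s, Source src,
                                        DescType type) {
  using S = SignalingState;
  const bool local = src == Source::kLocal;
  switch (s) {
    case S::kStable:
      if (type == DescType::kOffer)
        return local ? S::kHaveLocalOffer : S::kHaveRemoteOffer;
      return std::nullopt;
    case S::kHaveLocalOffer:
      if (local && type == DescType::kOffer) return S::kHaveLocalOffer;
      if (!local && type == DescType::kPrAnswer) return S::kHaveRemotePrAnswer;
      if (!local && type == DescType::kAnswer) return S::kStable;
      return std::nullopt;
    case S::kHaveRemoteOffer:
      if (!local && type == DescType::kOffer) return S::kHaveRemoteOffer;
      if (local && type == DescType::kPrAnswer) return S::kHaveLocalPrAnswer;
      if (local && type == DescType::kAnswer) return S::kStable;
      return std::nullopt;
    case S::kHaveLocalPrAnswer:
      if (local && type == DescType::kPrAnswer) return S::kHaveLocalPrAnswer;
      if (local && type == DescType::kAnswer) return S::kStable;
      return std::nullopt;
    case S::kHaveRemotePrAnswer:
      if (!local && type == DescType::kPrAnswer) return S::kHaveRemotePrAnswer;
      if (!local && type == DescType::kAnswer) return S::kStable;
      return std::nullopt;
  }
  return std::nullopt;
}
```

For the caller in this article, SetLocalDescription(offer) moves from stable to have-local-offer, and the later SetRemoteDescription(answer) returns to stable.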

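V. The various Channels:

As we know, WebRTC as a whole is designed in layers: the device layer at the bottom, the engine layer in the middle, and the Session layer and API layer on top.

The engine layer is very large and is further split into the video engine layer, the audio engine layer and the transport layer.

The audio and video engine layers are in turn divided into many smaller layers, such as the encoding layer, which is why so many channels show up above.

See the Channel and Stream section of my article:

WebRTC Audio 04 - Key Classes

VI. The audio/video pipeline: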

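Everything so far has explained how the SDP object is generated. Once generated, it must be serialized (to JSON in the demo) and exchanged with the peer. Finally, the pipeline has to be started so that audio and video data can flow continuously.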
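As an illustration of the "serialize to JSON and exchange" step, here is a sketch that wraps an SDP string in a signaling message. The `{"type": ..., "sdp": ...}` shape is an assumption modeled on what the peerconnection client demo sends. `JsonEscape` and `ToSignalingMessage` are hypothetical helpers, and a real application would normally use a JSON library.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Minimal JSON string escaping: enough for SDP text (quotes, backslashes,
// CR/LF, tabs and other control characters).
std::string JsonEscape(const std::string& in) {
  std::string out;
  for (unsigned char c : in) {
    switch (c) {
      case '"': out += "\\\""; break;
      case '\\': out += "\\\\"; break;
      case '\n': out += "\\n"; break;
      case '\r': out += "\\r"; break;
      case '\t': out += "\\t"; break;
      default:
        if (c < 0x20) {  // other control characters -> \u00XX
          char buf[7];
          std::snprintf(buf, sizeof(buf), "\\u%04x", static_cast<unsigned>(c));
          out += buf;
        } else {
          out += static_cast<char>(c);
        }
    }
  }
  return out;
}

// Wraps an SDP blob in a message of the form
// {"type":"offer","sdp":"v=0\r\n..."}.
std::string ToSignalingMessage(const std::string& type, const std::string& sdp) {
  return "{\"type\":\"" + JsonEscape(type) + "\",\"sdp\":\"" + JsonEscape(sdp) +
         "\"}";
}
```

The receiver reverses this: it parses the JSON, takes the `sdp` field and parses that text back into a session description object.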

1. Call stack:

-------> WebRtcAudioSendStream::UpdateSendState // starts the encoder

------> WebRtcAudioSendStream::SetSource // sets the source of the WebRtcAudioSendStream and registers the stream as that source's sink

-----> WebRtcVoiceMediaChannel::SetLocalSource // finds the matching SendStream by SSRC

----> WebRtcVoiceMediaChannel::SetAudioSend // executed on the worker thread

---> AudioRtpSender::SetSend

--> RtpSenderBase::SetSsrc // base class of AudioRtpSender; this is where the encoder gets started

-> SdpOfferAnswerHandler::ApplyLocalDescription // media negotiation starts here
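The effect of `RtpSenderBase::SetSsrc` in this stack can be modeled in isolation. SSRC 0 means "no send stream", repeating the same SSRC is a no-op, and a new SSRC stops the old send before (re)starting. `ToySender` is a hypothetical sketch of that control flow, not the WebRTC class. Its `CanSend` stands in for `can_send_track()`, and its `SetSend` stands in for the step that starts the encoder.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

class ToySender {
 public:
  explicit ToySender(bool has_track) : has_track_(has_track) {}

  void SetSsrc(std::uint32_t ssrc) {
    if (stopped_ || ssrc == ssrc_) return;  // nothing to do
    if (CanSend()) ClearSend();             // stop sending on the old SSRC
    ssrc_ = ssrc;
    if (CanSend()) SetSend();               // (re)start on the new SSRC
  }

  void Stop() {
    if (CanSend()) ClearSend();
    stopped_ = true;
  }

  bool sending() const { return sending_; }
  const std::vector<std::uint32_t>& started_ssrcs() const {
    return started_ssrcs_;
  }

 private:
  // Sending needs both a track and a real (non-zero) SSRC.
  bool CanSend() const { return has_track_ && ssrc_ != 0; }
  void SetSend() {  // stands in for starting the encoder/send stream
    sending_ = true;
    started_ssrcs_.push_back(ssrc_);
  }
  void ClearSend() { sending_ = false; }

  bool has_track_;
  bool stopped_ = false;
  bool sending_ = false;
  std::uint32_t ssrc_ = 0;  // 0 == "no associated send stream"
  std::vector<std::uint32_t> started_ssrcs_;
};
```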

2. Code walkthrough:

/**

* 开始进行媒体协商

*/

RTCError SdpOfferAnswerHandler::ApplyLocalDescription(

std::unique_ptr<SessionDescriptionInterface> desc) {

// 删除非关键的代码...

// 里面会创建各种transport(创建dtls的时候,会根据呼叫方还是被呼叫方去创建)

RTCError error = PushdownTransportDescription(cricket::CS_LOCAL, type);

if (!error.ok()) {

return error;

}

if (IsUnifiedPlan()) {

// 里面会创建VoiceChannel、VideoChannel以及引擎Channel

RTCError error = UpdateTransceiversAndDataChannels(

cricket::CS_LOCAL, *local_description(), old_local_description,

remote_description());

if (!error.ok()) {

return error;

}

std::vector<rtc::scoped_refptr<RtpTransceiverInterface>> remove_list;

std::vector<rtc::scoped_refptr<MediaStreamInterface>> removed_streams;

for (const auto& transceiver : transceivers()->List()) {

if (transceiver->stopped()) {

continue;

}

```cpp
      // 2.2.7.1.1.(6-9): Set sender and receiver's transport slots.
      // Note that code paths that don't set MID won't be able to use
      // information about DTLS transports.
      if (transceiver->mid()) {
        auto dtls_transport = LookupDtlsTransportByMid(
            pc_->network_thread(), transport_controller(), *transceiver->mid());
        transceiver->internal()->sender_internal()->set_transport(
            dtls_transport);
        transceiver->internal()->receiver_internal()->set_transport(
            dtls_transport);
      }

      const ContentInfo* content =
          FindMediaSectionForTransceiver(transceiver, local_description());
      if (!content) {
        continue;
      }
      const MediaContentDescription* media_desc = content->media_description();
      // 2.2.7.1.6: If description is of type "answer" or "pranswer", then run
      // the following steps:
      if (type == SdpType::kPrAnswer || type == SdpType::kAnswer) {
        // 2.2.7.1.6.1: If direction is "sendonly" or "inactive", and
        // transceiver's [[FiredDirection]] slot is either "sendrecv" or
        // "recvonly", process the removal of a remote track for the media
        // description, given transceiver, removeList, and muteTracks.
        if (!RtpTransceiverDirectionHasRecv(media_desc->direction()) &&
            (transceiver->internal()->fired_direction() &&
             RtpTransceiverDirectionHasRecv(
                 *transceiver->internal()->fired_direction()))) {
          ProcessRemovalOfRemoteTrack(transceiver, &remove_list,
                                      &removed_streams);
        }
        // 2.2.7.1.6.2: Set transceiver's [[CurrentDirection]] and
        // [[FiredDirection]] slots to direction.
        transceiver->internal()->set_current_direction(media_desc->direction());
        transceiver->internal()->set_fired_direction(media_desc->direction());
      }
    }
    auto observer = pc_->Observer();
    for (const auto& transceiver : remove_list) {
      observer->OnRemoveTrack(transceiver->receiver());
    }
    for (const auto& stream : removed_streams) {
      observer->OnRemoveStream(stream);
    }
  } else {
    // Media channels will be created only when offer is set. These may use new
    // transports just created by PushdownTransportDescription.
    if (type == SdpType::kOffer) {
      // TODO(bugs.webrtc.org/4676) - Handle CreateChannel failure, as new local
      // description is applied. Restore back to old description.
      RTCError error = CreateChannels(*local_description()->description());
      if (!error.ok()) {
        return error;
      }
    }
    // Remove unused channels if MediaContentDescription is rejected.
    RemoveUnusedChannels(local_description()->description());
  }

  // Update the negotiation state machine, the media streams and the codecs
  // (this creates some of the encoder-related classes).
  error = UpdateSessionState(type, cricket::CS_LOCAL,
                             local_description()->description());
  if (!error.ok()) {
    return error;
  }

  // Non-essential code omitted...

  if (IsUnifiedPlan()) {
    for (const auto& transceiver : transceivers()->List()) {
      if (transceiver->stopped()) {
        continue;
      }
      const ContentInfo* content =
          FindMediaSectionForTransceiver(transceiver, local_description());
      if (!content) {
        continue;
      }
      cricket::ChannelInterface* channel = transceiver->internal()->channel();
      if (content->rejected || !channel || channel->local_streams().empty()) {
        // 0 is a special value meaning "this sender has no associated send
        // stream". Need to call this so the sender won't attempt to configure
        // a no longer existing stream and run into DCHECKs in the lower
        // layers.
        // SetSsrc() is implemented in RtpSenderBase, the base class of
        // AudioRtpSender; with a valid SSRC it is what starts the encoder.
        transceiver->internal()->sender_internal()->SetSsrc(0);
      } else {
        // Get the StreamParams from the channel which could generate SSRCs.
        const std::vector<StreamParams>& streams = channel->local_streams();
        transceiver->internal()->sender_internal()->set_stream_ids(
            streams[0].stream_ids());
        transceiver->internal()->sender_internal()->SetSsrc(
            streams[0].first_ssrc());
      }
    }

    // Non-essential code omitted...
  }
```
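The removal rule in step 2.2.7.1.6.1 is easy to misread, so here is a minimal, self-contained sketch of just that predicate. `Direction`, `HasRecv()` and `ShouldRemoveRemoteTrack()` are hypothetical stand-ins written for illustration. The real code uses `webrtc::RtpTransceiverDirection`, which may have more values, together with `RtpTransceiverDirectionHasRecv()` and an optional `fired_direction()`.

```cpp
#include <cassert>
#include <optional>

// Hypothetical stand-in for webrtc::RtpTransceiverDirection; only the four
// SDP directions are modelled here.
enum class Direction { kSendRecv, kSendOnly, kRecvOnly, kInactive };

// True if the direction includes receiving (the idea behind
// RtpTransceiverDirectionHasRecv()).
bool HasRecv(Direction d) {
  return d == Direction::kSendRecv || d == Direction::kRecvOnly;
}

// Step 2.2.7.1.6.1: when an answer/pranswer is applied locally, the remote
// track is removed if the negotiated direction no longer receives but the
// transceiver previously fired a receiving direction.
bool ShouldRemoveRemoteTrack(Direction negotiated,
                             std::optional<Direction> fired) {
  return !HasRecv(negotiated) && fired.has_value() && HasRecv(*fired);
}
```

A transceiver that has never fired a direction (`std::nullopt`) never triggers a removal. This matches the `fired_direction() &&` guard in the original condition.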

```cpp
/**
 * RtpSenderBase is the base class of AudioRtpSender. SetSsrc() calls the
 * virtual SetSend(), and that call is what starts the encoder.
 */
void RtpSenderBase::SetSsrc(uint32_t ssrc) {
  TRACE_EVENT0("webrtc", "RtpSenderBase::SetSsrc");
  if (stopped_ || ssrc == ssrc_) {
    return;
  }
  // If we are already sending with a particular SSRC, stop sending.
  if (can_send_track()) {
    ClearSend();
    RemoveTrackFromStats();
  }
  ssrc_ = ssrc;
  if (can_send_track()) {
    SetSend();
    AddTrackToStats();
  }
  // Non-essential code omitted...
}
```
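The following toy model shows why `SetSsrc()` is safe to call repeatedly. It assumes, in the spirit of `can_send_track()`, that sending requires both a track and a non-zero SSRC. `ToySender` and its counters are hypothetical; they only count the points where the real code calls `ClearSend()` and `SetSend()`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical toy model of the RtpSenderBase::SetSsrc() control flow.
// SSRC 0 means "no associated send stream".
class ToySender {
 public:
  explicit ToySender(bool has_track) : has_track_(has_track) {}

  void SetSsrc(std::uint32_t ssrc) {
    if (stopped_ || ssrc == ssrc_) return;     // nothing changes
    if (can_send_track()) ++clear_send_calls;  // tear down the old SSRC first
    ssrc_ = ssrc;
    if (can_send_track()) ++set_send_calls;    // start with the new SSRC
  }

  void Stop() { stopped_ = true; }

  int set_send_calls = 0;
  int clear_send_calls = 0;

 private:
  bool can_send_track() const { return has_track_ && ssrc_ != 0; }

  bool has_track_;
  bool stopped_ = false;
  std::uint32_t ssrc_ = 0;
};
```

Changing the SSRC therefore always stops the old send path before starting the new one. `SetSsrc(0)`, which is used for rejected or channel-less m-lines, only stops it.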

```cpp
void AudioRtpSender::SetSend() {
  // Non-essential code omitted (including the declaration of the
  // cricket::AudioOptions `options` used below)...

  // |track_->enabled()| hops to the signaling thread, so call it before we hop
  // to the worker thread or else it will deadlock.
  bool track_enabled = track_->enabled();
  // Hop to the worker thread and run SetAudioSend() there.
  bool success = worker_thread_->Invoke<bool>(RTC_FROM_HERE, [&] {
    return voice_media_channel()->SetAudioSend(ssrc_, track_enabled, &options,
                                               sink_adapter_.get());
  });
  if (!success) {
    RTC_LOG(LS_ERROR) << "SetAudioSend: ssrc is incorrect: " << ssrc_;
  }
}
```
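`worker_thread_->Invoke<bool>(...)` runs the lambda on the worker thread and blocks the calling thread until it returns. The minimal sketch below reproduces that pattern with standard C++ threads. `Worker` is a hypothetical class, not `rtc::Thread`.

It also illustrates the comment about `track_->enabled()`. The caller is parked inside `Invoke()` until the job finishes, so a job that in turn waited on the caller's thread could never finish. Reading the value first and capturing it avoids that.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <future>
#include <memory>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical single worker thread with a blocking Invoke(), illustrating
// the idea behind worker_thread_->Invoke<bool>(...).
class Worker {
 public:
  Worker() : thread_([this] { Run(); }) {}
  ~Worker() {
    {
      std::lock_guard<std::mutex> lock(mu_);
      done_ = true;
    }
    cv_.notify_one();
    thread_.join();
  }
  Worker(const Worker&) = delete;
  Worker& operator=(const Worker&) = delete;

  // Runs `f` on the worker thread; the caller blocks until it has finished.
  template <typename T>
  T Invoke(std::function<T()> f) {
    // shared_ptr keeps the task alive until the worker is done with it.
    auto task = std::make_shared<std::packaged_task<T()>>(std::move(f));
    std::future<T> result = task->get_future();
    {
      std::lock_guard<std::mutex> lock(mu_);
      tasks_.push([task] { (*task)(); });
    }
    cv_.notify_one();
    return result.get();  // the calling thread waits here
  }

  std::thread::id id() const { return thread_.get_id(); }

 private:
  void Run() {
    for (;;) {
      std::function<void()> job;
      {
        std::unique_lock<std::mutex> lock(mu_);
        cv_.wait(lock, [this] { return done_ || !tasks_.empty(); });
        if (tasks_.empty()) return;  // shutting down and nothing left to run
        job = std::move(tasks_.front());
        tasks_.pop();
      }
      job();
    }
  }

  std::mutex mu_;
  std::condition_variable cv_;
  std::queue<std::function<void()>> tasks_;
  bool done_ = false;
  std::thread thread_;  // declared last: starts after the members above exist
};
```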

```cpp
// Runs on the worker thread (invoked from AudioRtpSender::SetSend()).
bool WebRtcVoiceMediaChannel::SetAudioSend(uint32_t ssrc,
                                           bool enable,
                                           const AudioOptions* options,
                                           AudioSource* source) {
  RTC_DCHECK_RUN_ON(worker_thread_);
  // TODO(solenberg): The state change should be fully rolled back if any one of
  // these calls fail.
  // Find the SendStream for this SSRC and attach the audio source to it.
  if (!SetLocalSource(ssrc, source)) {
    return false;
  }
  if (!MuteStream(ssrc, !enable)) {
    return false;
  }
  if (enable && options) {
    return SetOptions(*options);
  }
  return true;
}
```
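`SetAudioSend()` is a fail-fast sequence. The first step that returns `false` aborts the rest, and, as the TODO admits, earlier steps are not rolled back. The hypothetical toy model below records which steps actually run. `ToyVoiceChannel`, `fail_at` and `trace` are illustrative names only.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical toy model of WebRtcVoiceMediaChannel::SetAudioSend():
// `fail_at` injects a failure, `trace` records the steps that ran.
struct ToyVoiceChannel {
  std::string fail_at;
  std::vector<std::string> trace;

  bool Step(const std::string& name) {
    trace.push_back(name);
    return name != fail_at;
  }

  bool SetAudioSend(bool enable, bool has_options) {
    if (!Step("SetLocalSource")) return false;  // attach source to the stream
    if (!Step("MuteStream")) return false;      // real call: MuteStream(ssrc, !enable)
    if (enable && has_options) return Step("SetOptions");
    return true;  // disabled or no options: nothing more to apply
  }
};
```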

```cpp
/**
 * Find the SendStream matching the SSRC and attach (or clear) its source.
 */
bool WebRtcVoiceMediaChannel::SetLocalSource(uint32_t ssrc,
                                             AudioSource* source) {
  auto it = send_streams_.find(ssrc);
  if (it == send_streams_.end()) {
    if (source) {
      // Return an error if trying to set a valid source with an invalid ssrc.
      RTC_LOG(LS_ERROR) << "SetLocalSource failed with ssrc " << ssrc;
      return false;
    }
    // The channel likely has gone away, do nothing.
    return true;
  }
  if (source) {
    // Set the WebRtcAudioSendStream's source and register the stream as the
    // source's sink, i.e. the destination of the source's output.
    it->second->SetSource(source);
  } else {
    it->second->ClearSource();
  }
  return true;
}
```
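The lookup rules in `SetLocalSource()` are asymmetric and worth spelling out. The sketch below is a hypothetical simplification that stores plain structs in a `std::map` keyed by SSRC. The real `send_streams_` holds pointers to `WebRtcAudioSendStream`, hence the `it->second->` calls above.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

struct ToySource {};  // hypothetical stand-in for AudioSource

// Hypothetical stand-in for WebRtcAudioSendStream: only tracks its source.
struct ToySendStream {
  ToySource* source = nullptr;
};

// Unknown SSRC with a real source is an error. Unknown SSRC with a null
// source is accepted (the stream has probably gone away already). A known
// SSRC attaches or clears the source.
bool SetLocalSource(std::map<std::uint32_t, ToySendStream>& send_streams,
                    std::uint32_t ssrc, ToySource* source) {
  auto it = send_streams.find(ssrc);
  if (it == send_streams.end()) {
    return source == nullptr;
  }
  it->second.source = source;  // SetSource() or ClearSource()
  return true;
}
```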

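```cpp
// WebRtcAudioSendStream::SetSource(): set the stream's source and register
// the stream as that source's sink (the destination of its output).
void SetSource(AudioSource* source) {
  RTC_DCHECK_RUN_ON(&worker_thread_checker_);
  RTC_DCHECK(source);
  if (source_) {
    RTC_DCHECK(source_ == source);
    return;
  }
  source->SetSink(this);
  source_ = source;
  // Start the encoder (UpdateSendState() starts the stream if sending is enabled).
  UpdateSendState();
}
```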
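`source->SetSink(this)` is an observer registration: once it has run, every buffer the source produces is pushed into the send stream. The sketch below shows that wiring with a hypothetical, simplified `Sink` interface. The real `AudioSource::Sink::OnData()` receives raw audio plus format parameters, not a vector.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical, simplified sink interface.
class Sink {
 public:
  virtual ~Sink() = default;
  virtual void OnData(const std::vector<std::int16_t>& samples) = 0;
};

// Hypothetical capture source: pushes every buffer to the registered sink.
class ToySource {
 public:
  void SetSink(Sink* sink) { sink_ = sink; }
  void Capture(const std::vector<std::int16_t>& samples) {
    if (sink_ != nullptr) sink_->OnData(samples);  // no sink yet: data dropped
  }

 private:
  Sink* sink_ = nullptr;
};

// Hypothetical send stream following the shape of SetSource(): register as
// the source's sink, remember the source, then re-evaluate the send state.
class ToySendStream : public Sink {
 public:
  void SetSource(ToySource* source) {
    if (source_ != nullptr) return;  // already attached
    source->SetSink(this);
    source_ = source;
    started_ = true;  // stand-in for UpdateSendState() with sending enabled
  }

  void OnData(const std::vector<std::int16_t>& samples) override {
    if (started_) samples_encoded += samples.size();  // "encoding" = counting
  }

  std::size_t samples_encoded = 0;

 private:
  ToySource* source_ = nullptr;
  bool started_ = false;
};
```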

```cpp
// Code path: .\src\media\engine\webrtc_voice_engine.cc
void UpdateSendState() {
  RTC_DCHECK_RUN_ON(&worker_thread_checker_);
  RTC_DCHECK(stream_);
  RTC_DCHECK_EQ(1UL, rtp_parameters_.encodings.size());
  if (send_ && source_ != nullptr && rtp_parameters_.encodings[0].active) {
    stream_->Start();
  } else {  // !send || source_ = nullptr
    stream_->Stop();
  }
}
```
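The start condition in `UpdateSendState()` is a plain conjunction of three flags. Attaching a source is therefore not enough on its own: sending must also have been requested, and the single encoding must be active. Below is a hypothetical reduction; `StreamAction` and `DecideSendState()` are illustrative names.

```cpp
#include <cassert>

enum class StreamAction { kStart, kStop };

// Hypothetical reduction of UpdateSendState(): start only when sending was
// requested (send_), a source is attached (source_ != nullptr) and the single
// encoding is active (rtp_parameters_.encodings[0].active).
StreamAction DecideSendState(bool send, bool has_source, bool encoding_active) {
  return (send && has_source && encoding_active) ? StreamAction::kStart
                                                 : StreamAction::kStop;
}
```

Whichever condition becomes true last is what actually triggers `stream_->Start()`. This is why `SetSource()` re-evaluates the state immediately after attaching the source.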

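VII. Summary:

In one sentence: obtain the SDP information, hand it down to each sub-layer so that each one builds its own objects, and then start the audio/video pipeline.

Media negotiation ties together essentially all of WebRTC's code, so there is a great deal of it, and it is easy to drift away from the main path while reading. For that reason, many sub-functions were not expanded here. Follow the key functions and keep reading on your own!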
