1. Set up the environment

conda create -n gpu_umap_env -c conda-forge -c rapidsai -c nvidia \
    cuml=24.02 python=3.10 cudatoolkit=11.7 \
    -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/ \
    -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/ \
    -c https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/r/

2. Activate the environment

conda activate gpu_umap_env

3. Install the umap library

pip install umap-learn
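
Before running the benchmark, it can help to confirm that both packages are visible from the activated environment. The following is a minimal sketch and not part of the original steps: the helper name `check_packages` is hypothetical, and it only checks that the modules can be located, not that a GPU is actually usable.

```python
import importlib.util


def check_packages(names):
    """Return {module_name: bool} telling whether each top-level module can be located."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # "cuml" comes from the conda command; "umap" is the import name of umap-learn.
    for module, found in check_packages(["cuml", "umap"]).items():
        print(f"{module}: {'found' if found else 'MISSING'}")
```

If `cuml` is reported as missing, the test script in the next step still runs, but only the CPU branch is exercised.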

4. Run the test case

import numpy as np

import time

# GPU 加速相关

try:

from cuml import UMAP as GPU_UMAP

import cupy as cp

gpu_available = True

except ImportError:

gpu_available = False

# CPU 版 UMAP

from umap import UMAP as CPU_UMAP

# 构造高维数据

n_samples = 10000

n_features = 512

print(f"\nGenerating synthetic data: shape=({n_samples}, {n_features})")

X_cpu = np.random.rand(n_samples, n_features)

# ----------------------------

# CPU 版测试

# ----------------------------

print("\nRunning CPU UMAP...")

cpu_start = time.time()

umap_cpu = CPU_UMAP(n_components=2, random_state=42)

embedding_cpu = umap_cpu.fit_transform(X_cpu)

cpu_time = time.time() - cpu_start

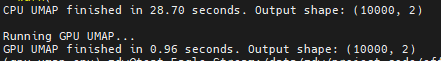

print(f"CPU UMAP finished in {cpu_time:.2f} seconds. Output shape: {embedding_cpu.shape}")

# ----------------------------

# GPU 版测试(如果可用)

# ----------------------------

if gpu_available:

print("\nRunning GPU UMAP...")

X_gpu = cp.asarray(X_cpu) # 转为 GPU 数据

gpu_start = time.time()

umap_gpu = GPU_UMAP(n_components=2, random_state=42)

embedding_gpu = umap_gpu.fit_transform(X_gpu)

embedding_gpu_np = cp.asnumpy(embedding_gpu) # 可选:转为 numpy 显示

gpu_time = time.time() - gpu_start

print(f"GPU UMAP finished in {gpu_time:.2f} seconds. Output shape: {embedding_gpu_np.shape}")

else:

print("\n⚠️ GPU UMAP not available. Please install RAPIDS cuML.")

In this test, the GPU version of UMAP ran nearly 30 times faster than the CPU version!
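
A single wall-clock measurement can be skewed by one-time startup costs: umap-learn compiles its Numba kernels on first use, and the first GPU call has to initialize CUDA. The sketch below shows one way to make the comparison more robust, with untimed warm-up calls followed by several timed repeats. The helper names `best_time` and `speedup` are hypothetical and not taken from the original script, and the sleep-based workloads are stand-ins so the example runs without a GPU.

```python
import time


def best_time(fn, repeats=3, warmup=1):
    """Call fn() `warmup` times untimed, then return the fastest of `repeats` timed calls (seconds)."""
    for _ in range(warmup):
        fn()
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        timings.append(time.perf_counter() - start)
    return min(timings)


def speedup(cpu_seconds, gpu_seconds):
    """Ratio of CPU time to GPU time; 30.0 means the GPU run was 30x faster."""
    return cpu_seconds / gpu_seconds


if __name__ == "__main__":
    # Stand-in workloads; in the benchmark these would be calls such as
    # lambda: umap_cpu.fit_transform(X_cpu)
    slow = lambda: time.sleep(0.02)
    fast = lambda: time.sleep(0.005)
    print(f"speedup: {speedup(best_time(slow), best_time(fast)):.1f}x")
```

Taking the minimum over repeats is a design choice that reduces noise from other processes; reporting the median instead would be equally reasonable.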
