    self.learning_rate = tf.train.exponential_decay(
        self.initial_learning_rate,
        self.global_step,
        self.decay_steps,
        self.decay_rate,
        staircase=True,
        name='learning_rate')

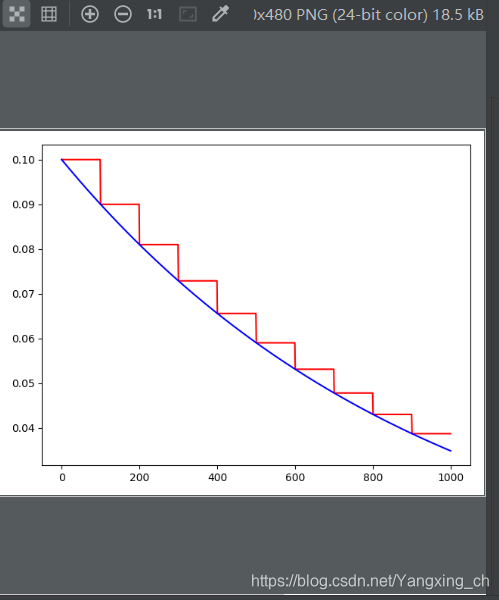

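The training code uses learning rate decay: the learning rate changes with the number of training steps. It can change at every step, or only once every fixed interval of steps. In the example above, with staircase set to True, the learning rate is multiplied by decay_rate each time decay_steps steps have passed, so after k such intervals it equals the initial learning rate times decay_rate to the power k. The code below plots both behaviours.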
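To make the rule concrete, here is a minimal plain-Python sketch of the formula documented for `tf.train.exponential_decay`, namely `initial_lr * decay_rate ** (global_step / decay_steps)`, where the exponent is floored when `staircase=True`. The helper name `exponential_decay` and the three constants are assumptions chosen for illustration; they are not values from `yolo.config`.

```python
import math


def exponential_decay(initial_lr, global_step, decay_steps, decay_rate, staircase=False):
    """Plain-Python version of the documented exponential-decay formula."""
    exponent = global_step / decay_steps
    if staircase:
        # Floor the exponent: the rate only changes once every decay_steps steps.
        exponent = math.floor(exponent)
    return initial_lr * decay_rate ** exponent


# Hypothetical values, for illustration only (not taken from yolo.config).
INITIAL_LR = 0.1
DECAY_STEPS = 100
DECAY_RATE = 0.5

for step in (0, 50, 100, 150, 200):
    stepped = exponential_decay(INITIAL_LR, step, DECAY_STEPS, DECAY_RATE, staircase=True)
    smooth = exponential_decay(INITIAL_LR, step, DECAY_STEPS, DECAY_RATE, staircase=False)
    print(f"step={step:3d}  staircase={stepped:.5f}  continuous={smooth:.5f}")
```

The two modes agree whenever the step is an exact multiple of `DECAY_STEPS`; between those points the staircase value stays flat while the continuous value keeps falling.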

    import tensorflow as tf
    import numpy as np
    import matplotlib.pyplot as plt
    import yolo.config as cfg


    class LearningRate(object):
        def __init__(self):
            self.init_learningrate = cfg.LEARNING_RATE
            self.decay_rate = cfg.DECAY_RATE
            self.global_steps = 1000
            self.decay_steps = cfg.DECAY_STEPS
            # Step counter; its value is supplied through feed_dict below
            self.global_ = tf.Variable(tf.constant(0))
            # Decay the learning rate once every decay_steps steps
            self.learning_rate1 = tf.train.exponential_decay(
                self.init_learningrate, self.global_, self.decay_steps,
                self.decay_rate, staircase=True, name='learning_rate1')
            # Decay the learning rate at every step
            self.learning_rate2 = tf.train.exponential_decay(
                self.init_learningrate, self.global_, self.decay_steps,
                self.decay_rate, staircase=False, name='learning_rate2')


    T = []  # learning rates with staircase=True
    F = []  # learning rates with staircase=False


    def main():
        with tf.Session() as sess:
            a = LearningRate()
            for i in range(a.global_steps):
                F_c = sess.run(a.learning_rate2, feed_dict={a.global_: i})
                F.append(F_c)
                T_c = sess.run(a.learning_rate1, feed_dict={a.global_: i})
                T.append(T_c)
        plt.figure(1)
        # Red: staircase decay; blue: per-step decay
        plt.plot(range(a.global_steps), T, 'r-')
        plt.plot(range(a.global_steps), F, 'b-')
        plt.show()


    if __name__ == '__main__':
        main()

Result:

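This article describes learning rate decay in TensorFlow, covering both per-step decay and decay at fixed step intervals, and uses a code example to show how the learning rate is adjusted dynamically during training to improve training results.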
