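Today, starting from the raw data, we find the three most popular movies.

I measure a movie's popularity by how many user reviews it has received. Each input line is a JSON record, which the mappers below deserialize into the following CountTen class: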

import org.apache.hadoop.io.WritableComparable;
import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

// One review record. Jackson maps the JSON properties movie, timeStamp, rate and uid
// onto the setters of the same name.
public class CountTen implements WritableComparable<CountTen> {

    private String movie;
    private String timeStamp;
    private Integer rate;
    private String uid;

    public String getMovie() {
        return movie;
    }

    public void setMovie(String movie) {
        this.movie = movie;
    }

    public String getTimeStamp() {
        return timeStamp;
    }

    public void setTimeStamp(String timeStamp) {
        this.timeStamp = timeStamp;
    }

    public Integer getRate() {
        return rate;
    }

    public void setRate(Integer rate) {
        this.rate = rate;
    }

    public String getUid() {
        return uid;
    }

    public void setUid(String uid) {
        this.uid = uid;
    }

    // Orders records by movie id, descending.
    @Override
    public int compareTo(CountTen o) {
        return o.getMovie().compareTo(this.movie);
    }

    // Only the movie field is serialized. In the jobs below CountTen is used only as a
    // Jackson target inside the mapper, never as a MapReduce key or value, so these
    // Writable methods are not actually exercised.
    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeUTF(this.movie);
    }

    @Override
    public void readFields(DataInput dataInput) throws IOException {
        this.movie = dataInput.readUTF();
    }
}

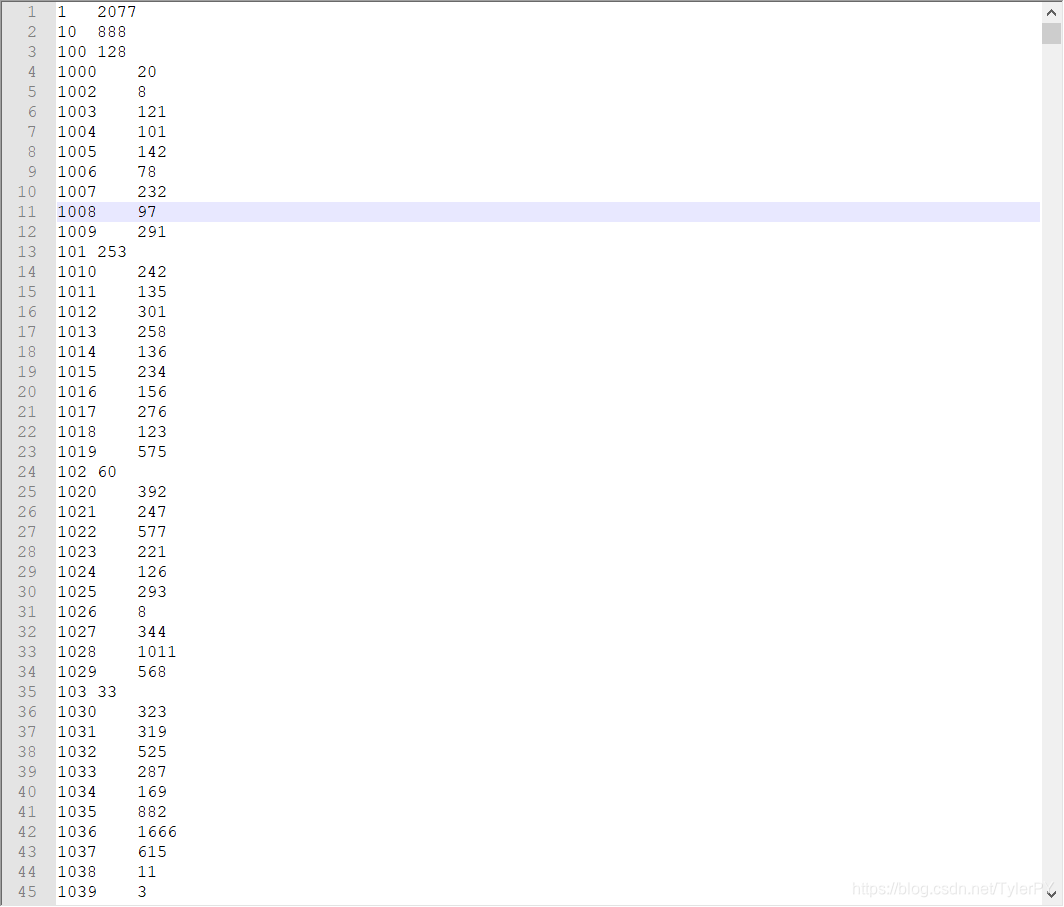

Step 1: count the total number of reviews for each movie in the raw data.

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.codehaus.jackson.map.ObjectMapper;
import java.io.IOException;

public class Woedount {

    // Mapper: parse each JSON line into a CountTen and emit (movie, 1).
    public static class CountMap extends Mapper<LongWritable, Text, Text, IntWritable> {

        ObjectMapper objectMapper = new ObjectMapper();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String word = value.toString();
            CountTen contTen = objectMapper.readValue(word, CountTen.class);
            context.write(new Text(contTen.getMovie()), new IntWritable(1));
        }
    }

    // Reducer: add up the 1s for each movie to get its review count.
    public static class CountRaduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int count = 0;
            for (IntWritable v : values) {
                count += v.get();
            }
            context.write(key, new IntWritable(count));
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        conf.set("yarn.resourcemanager.hostname", "192.168.72.110");
        conf.set("fs.defaultFS", "hdfs://192.168.72.110:9000/");

        // Pass conf to the job so that the settings above take effect.
        Job job = Job.getInstance(conf);
        job.setJarByClass(Woedount.class);
        job.setMapperClass(CountMap.class);
        job.setReducerClass(CountRaduce.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // waitForCompletion() submits the job itself and waits for it to finish.
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}

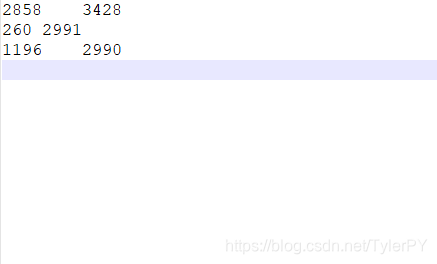
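To make the data flow of Step 1 concrete without a cluster, here is a minimal in-memory sketch. It assumes the movie ids have already been pulled out of the JSON lines, and the class name ReviewCountSketch and the sample ids are hypothetical. The "map" phase emits a 1 per record, the "shuffle" groups the 1s by movie (a TreeMap stands in for the sorted reducer input), and the "reduce" phase sums each group.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class ReviewCountSketch {

    // In-memory imitation of Step 1: movie id per record in, movie -> review count out.
    public static Map<String, Integer> countReviews(List<String> movieIds) {
        // "Map" + "shuffle": emit (movie, 1) and group the 1s by movie key.
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String movie : movieIds) {
            grouped.computeIfAbsent(movie, k -> new ArrayList<>()).add(1);
        }
        // "Reduce": sum the values of each group, like CountRaduce does.
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> e : grouped.entrySet()) {
            int sum = 0;
            for (int one : e.getValue()) {
                sum += one;
            }
            counts.put(e.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        // Hypothetical movie ids extracted from six review records.
        List<String> movies = Arrays.asList("1193", "661", "1193", "914", "1193", "661");
        System.out.println(countReviews(movies)); // {1193=3, 661=2, 914=1}
    }
}
```

Summing the values instead of counting loop iterations gives the same result when every value is 1. It also stays correct if a combiner is later added to pre-aggregate the 1s on the map side.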

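Step 2: take the output of Step 1, where each line has the form movie<TAB>count (TextOutputFormat separates key and value with a tab by default), sort it by count, and pick out the most popular movies.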

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import java.io.IOException;
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

public class WordSort2 {

    // Input: "movie\tcount" lines from Step 1.
    // Output key: count, value: movie
    public static class WordSort2Map extends Mapper<LongWritable, Text, IntWritable, Text> {

        // Created once outside map() and reused for every record, instead of calling
        // new on every write, which reduces object allocation.
        Text v = new Text();
        IntWritable k = new IntWritable();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String[] split = value.toString().split("\t");
            String movie = split[0];
            String count = split[1];
            k.set(Integer.parseInt(count));
            v.set(movie);
            context.write(k, v);
        }
    }

    // Input key: count, value: movie
    // Output key: movie, value: count
    public static class WordSort2Reduce extends Reducer<IntWritable, Text, Text, IntWritable> {

        // A TreeMap keeps its entries sorted by key (here: count, descending), which makes
        // map.pollFirstEntry() below convenient: it removes and returns one entry, so the
        // map shrinks by one on every call.
        // Limitation: only one movie is kept per count, so movies that tie on a count are dropped.
        TreeMap<IntWritable, Text> map;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            map = new TreeMap<IntWritable, Text>(new Comparator<IntWritable>() {
                @Override
                public int compare(IntWritable o1, IntWritable o2) {
                    return o2.compareTo(o1); // descending by count
                }
            });
        }

        @Override
        protected void reduce(IntWritable key, Iterable<Text> values, Context context) throws IOException, InterruptedException {
            // Only the first movie with this count is kept (see the limitation above).
            String movie = values.iterator().next().toString();
            int count = key.get();
            map.put(new IntWritable(count), new Text(movie));
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            // Emit at most 3 entries; pollFirstEntry() returns null once the map is empty.
            for (int i = 0; i < 3 && !map.isEmpty(); i++) {
                Map.Entry<IntWritable, Text> entry = map.pollFirstEntry();
                IntWritable count = entry.getKey();
                Text movie = entry.getValue();
                context.write(movie, count);
            }
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        conf.set("yarn.resourcemanager.hostname", "192.168.72.110");
        conf.set("fs.defaultFS", "hdfs://192.168.72.110:9000/");

        // Create the job only after conf is filled in: Job.getInstance(conf) copies it.
        Job job = Job.getInstance(conf);
        job.setJarByClass(WordSort2.class);
        job.setMapperClass(WordSort2Map.class);
        job.setReducerClass(WordSort2Reduce.class);
        // The top-3 logic in cleanup() only sees all counts if there is a single reducer.
        job.setNumReduceTasks(1);
        job.setMapOutputKeyClass(IntWritable.class);
        job.setMapOutputValueClass(Text.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        // FileInputFormat.setInputPaths(job,new Path("D:\\eclipse\\wc\\input"));
        // FileOutputFormat.setOutputPath(job,new Path("D:\\eclipse\\wc\\output"));

        // waitForCompletion() submits the job itself and waits for it to finish.
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
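Keying a TreeMap<IntWritable, Text> by count means two movies with the same count cannot both be stored. The sketch below is a plain-Java variant, not the author's code, that keeps a list of movies per count. It also shows the pollFirstEntry() behaviour described in the comments above. The class name TieSafeTopN and the sample data are hypothetical. When ties straddle the cut-off, this version truncates to exactly n lines. Including all tied movies would be an equally valid policy.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class TieSafeTopN {

    // Returns up to n "movie\tcount" lines, highest count first.
    // Storing a List of movies per count keeps every movie that shares a count,
    // instead of letting a later put() overwrite the earlier one.
    public static List<String> topN(Map<String, Integer> counts, int n) {
        // Reverse order: the largest count is the first entry.
        TreeMap<Integer, List<String>> byCount = new TreeMap<>(Collections.reverseOrder());
        for (Map.Entry<String, Integer> e : counts.entrySet()) {
            byCount.computeIfAbsent(e.getValue(), c -> new ArrayList<>()).add(e.getKey());
        }
        List<String> result = new ArrayList<>();
        while (result.size() < n) {
            // Removes and returns the largest remaining count; null once the map is empty.
            Map.Entry<Integer, List<String>> entry = byCount.pollFirstEntry();
            if (entry == null) {
                break;
            }
            for (String movie : entry.getValue()) {
                if (result.size() == n) {
                    break; // ties that straddle the cut-off are truncated
                }
                result.add(movie + "\t" + entry.getKey());
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical Step 1 output: movie id -> review count (A and C tie on 5).
        Map<String, Integer> counts = new TreeMap<>();
        counts.put("A", 5);
        counts.put("B", 7);
        counts.put("C", 5);
        counts.put("D", 2);
        for (String line : topN(counts, 3)) {
            System.out.println(line); // B 7, then A 5, then C 5 (tab-separated)
        }
    }
}
```

With the TreeMap<IntWritable, Text> approach, the same input would lose one of A or C and report D as third.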

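Combining both steps in one class: the reducer counts each movie's reviews and keeps the counts in a TreeMap, so a single job replaces the two above.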

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.codehaus.jackson.map.ObjectMapper;
import java.io.IOException;
import java.util.Comparator;
import java.util.Map;
import java.util.TreeMap;

public class UserSortTopN {

    // Mapper: parse each JSON line and emit (movie, 1), as in Step 1.
    public static class UserSortTopNMap extends Mapper<LongWritable, Text, Text, IntWritable> {

        ObjectMapper objectMapper = new ObjectMapper();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            String line = value.toString();
            CountTen countTen = objectMapper.readValue(line, CountTen.class);
            String movie = countTen.getMovie();
            context.write(new Text(movie), new IntWritable(1));
        }
    }

    // Reducer: count each movie's reviews, keep the largest counts in a TreeMap,
    // and emit the top 3 in cleanup() once every movie has been seen.
    public static class UserSortTopNReduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        // Sorted by count, descending. Only one movie is kept per count, so a movie that
        // ties with an earlier one on its count overwrites it.
        TreeMap<IntWritable, Text> map;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            map = new TreeMap<IntWritable, Text>(new Comparator<IntWritable>() {
                @Override
                public int compare(IntWritable o1, IntWritable o2) {
                    return o2.compareTo(o1); // descending by count
                }
            });
        }

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int count = 0;
            for (IntWritable v : values) {
                count += v.get();
            }
            // Copy the key: Hadoop reuses the same Text object across reduce() calls.
            map.put(new IntWritable(count), new Text(key));
            // Keep only the 3 largest counts so memory use stays bounded.
            if (map.size() > 3) {
                map.pollLastEntry();
            }
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            // Emit at most 3 entries; pollFirstEntry() returns null once the map is empty.
            for (int i = 0; i < 3 && !map.isEmpty(); i++) {
                Map.Entry<IntWritable, Text> entry = map.pollFirstEntry();
                IntWritable count = entry.getKey();
                Text movie = entry.getValue();
                context.write(movie, count);
            }
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        conf.set("yarn.resourcemanager.hostname", "192.168.72.110");
        conf.set("fs.defaultFS", "hdfs://192.168.72.110:9000/");

        // Create the job only after conf is filled in: Job.getInstance(conf) copies it.
        Job job = Job.getInstance(conf);
        job.setJarByClass(UserSortTopN.class);
        job.setMapperClass(UserSortTopNMap.class);
        job.setReducerClass(UserSortTopNReduce.class);
        // A single reducer sees every movie, so its top 3 is the global top 3.
        job.setNumReduceTasks(1);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));
        // FileInputFormat.setInputPaths(job,new Path("D:\\eclipse\\wc\\input"));
        // FileOutputFormat.setOutputPath(job,new Path("D:\\eclipse\\wc\\output"));

        // waitForCompletion() submits the job itself and waits for it to finish.
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
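The combined job gives a global top 3 only because a single reducer sees every movie. If the job were run with several reducers, for example via job.setNumReduceTasks(k), each reducer would write only its own local top 3. These local results could then be merged in one cheap final step. Because the default hash partitioner sends all records of a movie to the same reducer, every local count is complete. Ties aside, each movie in the global top n is also in the top n of its own reducer. The sketch below shows only that merge in plain Java. The class name MergeLocalTopN and the sample data are hypothetical, and reading the reducers' output files is left out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MergeLocalTopN {

    // Merges hypothetical per-reducer top-n results (movie -> count) into a global top n,
    // returned as "movie\tcount" lines.
    public static List<String> mergeTopN(List<Map<String, Integer>> locals, int n) {
        List<Map.Entry<String, Integer>> all = new ArrayList<>();
        for (Map<String, Integer> local : locals) {
            all.addAll(local.entrySet());
        }
        // Highest count first; ties broken by movie id so the result is deterministic.
        all.sort((a, b) -> {
            int byCount = Integer.compare(b.getValue(), a.getValue());
            return byCount != 0 ? byCount : a.getKey().compareTo(b.getKey());
        });
        List<String> result = new ArrayList<>();
        for (int i = 0; i < Math.min(n, all.size()); i++) {
            result.add(all.get(i).getKey() + "\t" + all.get(i).getValue());
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical local top-3 results from two reducers.
        Map<String, Integer> reducer0 = new TreeMap<>();
        reducer0.put("A", 9);
        reducer0.put("B", 4);
        reducer0.put("C", 3);
        Map<String, Integer> reducer1 = new TreeMap<>();
        reducer1.put("D", 7);
        reducer1.put("E", 4);
        reducer1.put("F", 1);
        System.out.println(mergeTopN(Arrays.asList(reducer0, reducer1), 3));
    }
}
```

The merge step only handles n × k entries, where k is the number of reducers. That makes it cheap compared with the counting itself.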

Hadoop Top 3 Most Popular Movies

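This post presents a movie popularity ranking built with Hadoop MapReduce. It measures each movie's popularity by counting its user reviews, then selects the three most popular movies.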