Today we group JSON rating data by user and extract each user's three highest-rated records.

The UserRateTop class

import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

// One rating record. Implementing WritableComparable lets Hadoop serialize it
// between the map and reduce phases and lets us sort it by rating.
public class UserRateTop implements WritableComparable<UserRateTop> {

    private String movie;
    private String timeStamp;
    private Integer rate;
    private String uid;

    public String getMovie() {
        return movie;
    }

    public void setMovie(String movie) {
        this.movie = movie;
    }

    public String getTimeStamp() {
        return timeStamp;
    }

    public void setTimeStamp(String timeStamp) {
        this.timeStamp = timeStamp;
    }

    public Integer getRate() {
        return rate;
    }

    public void setRate(Integer rate) {
        this.rate = rate;
    }

    public String getUid() {
        return uid;
    }

    public void setUid(String uid) {
        this.uid = uid;
    }

    @Override
    public String toString() {
        return "UserRateTop{" +
                "movie='" + movie + '\'' +
                ", timeStamp='" + timeStamp + '\'' +
                ", rate=" + rate +
                ", uid='" + uid + '\'' +
                '}';
    }

    // Descending order by rate: the highest rating sorts first.
    @Override
    public int compareTo(UserRateTop o) {
        return o.getRate().compareTo(this.rate);
    }

    // Fields must be written in the same order in which readFields reads them.
    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeUTF(this.movie);
        dataOutput.writeInt(this.rate);
        dataOutput.writeUTF(this.timeStamp);
        dataOutput.writeUTF(this.uid);
    }

    @Override
    public void readFields(DataInput dataInput) throws IOException {
        this.movie = dataInput.readUTF();
        this.rate = dataInput.readInt();
        this.timeStamp = dataInput.readUTF();
        this.uid = dataInput.readUTF();
    }
}
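The `write` and `readFields` methods above only work if both sides agree on the field order. As a minimal sketch of that contract, the following plain-JDK class round-trips a record through `DataOutputStream` and `DataInputStream`. `RatingRecord` is a hypothetical stand-in for `UserRateTop` that does not depend on Hadoop, and the sample values are made up for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical plain-JDK stand-in for UserRateTop; it does not implement Hadoop's Writable.
public class RatingRecord implements Comparable<RatingRecord> {
    String movie;
    int rate;
    String timeStamp;
    String uid;

    public RatingRecord() {}

    public RatingRecord(String movie, int rate, String timeStamp, String uid) {
        this.movie = movie;
        this.rate = rate;
        this.timeStamp = timeStamp;
        this.uid = uid;
    }

    // Writes the fields in a fixed order: movie, rate, timeStamp, uid.
    public void write(DataOutput out) throws IOException {
        out.writeUTF(movie);
        out.writeInt(rate);
        out.writeUTF(timeStamp);
        out.writeUTF(uid);
    }

    // Reads the fields back in exactly the order write() produced them.
    public void readFields(DataInput in) throws IOException {
        movie = in.readUTF();
        rate = in.readInt();
        timeStamp = in.readUTF();
        uid = in.readUTF();
    }

    // Descending by rate, matching the ordering used by UserRateTop.
    @Override
    public int compareTo(RatingRecord o) {
        return Integer.compare(o.rate, this.rate);
    }

    @Override
    public String toString() {
        return movie + "|" + rate + "|" + timeStamp + "|" + uid;
    }

    // Serializes a record to bytes and reads it back into a fresh instance.
    public static RatingRecord roundTrip(RatingRecord original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (DataOutputStream out = new DataOutputStream(bytes)) {
                original.write(out);
            }
            RatingRecord copy = new RatingRecord();
            try (DataInputStream in =
                     new DataInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                copy.readFields(in);
            }
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // Sample values are illustrative only.
        System.out.println(roundTrip(new RatingRecord("m1", 5, "1000", "u1")));
    }
}
```

If the read order differed from the write order (for example, reading `rate` before `movie`), the bytes would be misinterpreted, which is why the two methods are best kept side by side.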

The MapReduce class

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;
import org.codehaus.jackson.map.ObjectMapper;

import java.io.IOException;
import java.util.ArrayList;
import java.util.Collections;

public class UserRateSort {

    public static class UserMap extends Mapper<LongWritable, Text, Text, UserRateTop> {

        ObjectMapper objectMapper = new ObjectMapper();

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // Each input line is one JSON object; parse it and emit it keyed by user ID.
            String line = value.toString();
            UserRateTop userRateTop = objectMapper.readValue(line, UserRateTop.class);
            context.write(new Text(userRateTop.getUid()), userRateTop);
        }
    }

    public static class UserReduce extends Reducer<Text, UserRateTop, UserRateTop, NullWritable> {

        @Override
        protected void reduce(Text key, Iterable<UserRateTop> values, Context context) throws IOException, InterruptedException {
            ArrayList<UserRateTop> userRateTops = new ArrayList<UserRateTop>();
            for (UserRateTop value : values) {
                // Hadoop reuses the same value object on every iteration, so copy the
                // fields into a new UserRateTop. Otherwise every list entry would point
                // at the same object and hold the user's last record.
                UserRateTop top = new UserRateTop();
                top.setMovie(value.getMovie());
                top.setRate(value.getRate());
                top.setTimeStamp(value.getTimeStamp());
                top.setUid(value.getUid());
                userRateTops.add(top);
            }
            // compareTo sorts in descending order of rate.
            Collections.sort(userRateTops);
            // A user may have fewer than three ratings, so cap the loop at the list size.
            for (int i = 0; i < Math.min(3, userRateTops.size()); i++) {
                context.write(userRateTops.get(i), NullWritable.get());
            }
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        // Set the cluster addresses before creating the Job: Job.getInstance(conf)
        // copies the configuration, so later changes to conf would not reach the job.
        conf.set("yarn.resourcemanager.hostname", "192.168.72.110");
        conf.set("fs.defaultFS", "hdfs://192.168.72.110:9000/");
        Job job = Job.getInstance(conf);

        job.setJarByClass(UserRateSort.class);
        job.setMapperClass(UserMap.class);
        job.setReducerClass(UserReduce.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(UserRateTop.class);
        job.setOutputKeyClass(UserRateTop.class);
        job.setOutputValueClass(NullWritable.class);

        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TextOutputFormat.class);

        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // waitForCompletion submits the job itself, so a separate job.submit() is not needed.
        boolean b = job.waitForCompletion(true);
        System.exit(b ? 0 : 1);
    }
}
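The reason the reducer copies each value before storing it can be reproduced without a cluster. The sketch below is plain JDK code under stated assumptions: `Rating` is a hypothetical stand-in for `UserRateTop`, and `reusing` imitates the way the reduce-side iterator refills one shared object on each step. It also caps the output at the list size, since a fixed loop bound of 3 throws `IndexOutOfBoundsException` for a user with fewer than three ratings.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

public class ReuseDemo {

    // Hypothetical mutable holder standing in for UserRateTop.
    public static class Rating implements Comparable<Rating> {
        String movie;
        int rate;

        Rating copy() {
            Rating r = new Rating();
            r.movie = movie;
            r.rate = rate;
            return r;
        }

        // Descending by rate.
        @Override
        public int compareTo(Rating o) {
            return Integer.compare(o.rate, rate);
        }
    }

    // Imitates the reducer's value iterator: one shared instance, refilled on each next().
    public static Iterable<Rating> reusing(String[] movies, int[] rates) {
        Rating shared = new Rating();
        return () -> new Iterator<Rating>() {
            int i = 0;

            @Override
            public boolean hasNext() {
                return i < movies.length;
            }

            @Override
            public Rating next() {
                if (!hasNext()) {
                    throw new NoSuchElementException();
                }
                shared.movie = movies[i];
                shared.rate = rates[i];
                i++;
                return shared;
            }
        };
    }

    // Copies each value, sorts descending by rate, and returns at most n movie names.
    public static List<String> topMovies(Iterable<Rating> values, int n) {
        List<Rating> copies = new ArrayList<>();
        for (Rating v : values) {
            copies.add(v.copy());
        }
        Collections.sort(copies);
        List<String> out = new ArrayList<>();
        for (int i = 0; i < Math.min(n, copies.size()); i++) {
            out.add(copies.get(i).movie);
        }
        return out;
    }

    // Stores the shared reference without copying, to show the pitfall.
    public static List<String> withoutCopy(Iterable<Rating> values) {
        List<Rating> refs = new ArrayList<>();
        for (Rating v : values) {
            refs.add(v);
        }
        List<String> out = new ArrayList<>();
        for (Rating r : refs) {
            out.add(r.movie);
        }
        return out;
    }

    public static void main(String[] args) {
        // Illustrative sample data only.
        String[] movies = {"a", "b", "c", "d"};
        int[] rates = {2, 5, 3, 4};
        System.out.println(topMovies(reusing(movies, rates), 3));
        System.out.println(withoutCopy(reusing(movies, rates)));
    }
}
```

Without the copy, every list entry refers to the same object, so all entries show the last record that was read. With the copy, sorting and truncation behave as intended.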

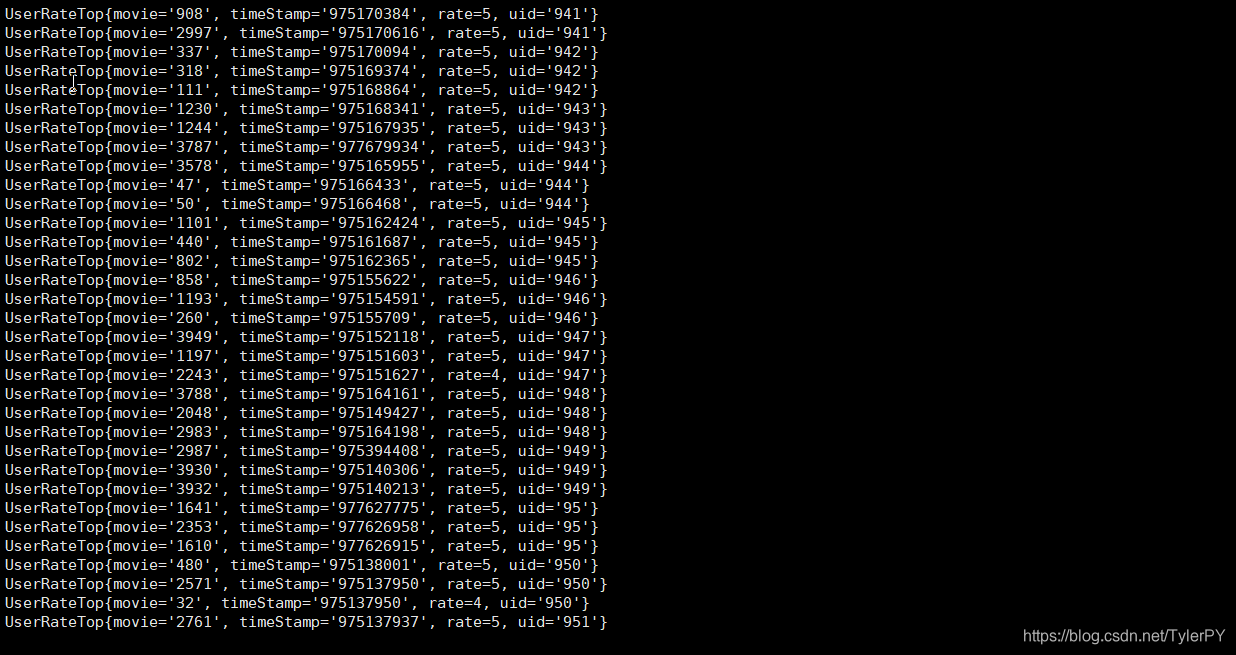

将其打包上传到虚拟机执行,结果如下。

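This article described a way to process JSON data with MapReduce in order to find each user's three highest-rated movies. A custom UserRateTop class stores the rating information and implements the WritableComparable interface so that records can be sorted. The map phase reads the JSON data and groups it by user ID; the reduce phase sorts each user's rating records and outputs the top three.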
