kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.ConsumerConfig.371] - ConsumerConfig values: \n\tallow.auto.create.topics = true\n\tauto.commit.interval.ms = 5000\n\tauto.include.jmx.reporter = true\n\tauto.offset.reset = latest\n\tbootstrap.servers = [7.192.149.62:9092, 7.192.148.38:9092, 7.192.149.235:9092, 7.192.148.14:9092, 7.192.148.143:9092, 7.192.148.141:9092, 7.192.148.70:9092, 7.192.149.111:9092, 7.192.150.201:9092, 7.192.149.92:9092]\n\tcheck.crcs = true\n\tclient.dns.lookup = use_all_dns_ips\n\tclient.id = consumer-apigc-consumer-98757-112564\n\tclient.rack = \n\tconnections.max.idle.ms = 540000\n\tdefault.api.timeout.ms = 60000\n\tenable.auto.commit = false\n\tenable.metrics.push = true\n\texclude.internal.topics = true\n\tfetch.max.bytes = 52428800\n\tfetch.max.wait.ms = 60000\n\tfetch.min.bytes = 1\n\tgroup.id = apigc-consumer-98757\n\tgroup.instance.id = null\n\tgroup.protocol = classic\n\tgroup.remote.assignor = null\n\theartbeat.interval.ms = 3000\n\tinterceptor.classes = []\n\tinternal.leave.group.on.close = true\n\tinternal.throw.on.fetch.stable.offset.unsupported = false\n\tisolation.level = read_uncommitted\n\tkey.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n\tmax.partition.fetch.bytes = 1048576\n\tmax.poll.interval.ms = 600\n\tmax.poll.records = 1000\n\tmetadata.max.age.ms = 300000\n\tmetadata.recovery.strategy = none\n\tmetric.reporters = []\n\tmetrics.num.samples = 2\n\tmetrics.recording.level = INFO\n\tmetrics.sample.window.ms = 30000\n\tpartition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor, class org.apache.kafka.clients.consumer.CooperativeStickyAssignor]\n\treceive.buffer.bytes = 65536\n\treconnect.backoff.max.ms = 1000\n\treconnect.backoff.ms = 50\n\trequest.timeout.ms = 70000\n\tretry.backoff.max.ms = 1000\n\tretry.backoff.ms = 100\n\tsasl.client.callback.handler.class = null\n\tsasl.jaas.config = null\n\tsasl.kerberos.kinit.cmd = 
/usr/bin/kinit\n\tsasl.kerberos.min.time.before.relogin = 60000\n\tsasl.kerberos.service.name = null\n\tsasl.kerberos.ticket.renew.jitter = 0.05\n\tsasl.kerberos.ticket.renew.window.factor = 0.8\n\tsasl.login.callback.handler.class = null\n\tsasl.login.class = null\n\tsasl.login.connect.timeout.ms = null\n\tsasl.login.read.timeout.ms = null\n\tsasl.login.refresh.buffer.seconds = 300\n\tsasl.login.refresh.min.period.seconds = 60\n\tsasl.login.refresh.window.factor = 0.8\n\tsasl.login.refresh.window.jitter = 0.05\n\tsasl.login.retry.backoff.max.ms = 10000\n\tsasl.login.retry.backoff.ms = 100\n\tsasl.mechanism = GSSAPI\n\tsasl.oauthbearer.clock.skew.seconds = 30\n\tsasl.oauthbearer.expected.audience = null\n\tsasl.oauthbearer.expected.issuer = null\n\tsasl.oauthbearer.header.urlencode = false\n\tsasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100\n\tsasl.oauthbearer.jwks.endpoint.url = null\n\tsasl.oauthbearer.scope.claim.name = scope\n\tsasl.oauthbearer.sub.claim.name = sub\n\tsasl.oauthbearer.token.endpoint.url = null\n\tsecurity.protocol = PLAINTEXT\n\tsecurity.providers = null\n\tsend.buffer.bytes = 131072\n\tsession.timeout.ms = 60000\n\tsocket.connection.setup.timeout.max.ms = 30000\n\tsocket.connection.setup.timeout.ms = 10000\n\tssl.cipher.suites = null\n\tssl.enabled.protocols = [TLSv1.2]\n\tssl.endpoint.identification.algorithm = https\n\tssl.engine.factory.class = null\n\tssl.key.password = null\n\tssl.keymanager.algorithm = SunX509\n\tssl.keystore.certificate.chain = null\n\tssl.keystore.key = null\n\tssl.keystore.location = null\n\tssl.keystore.password = null\n\tssl.keystore.type = JKS\n\tssl.protocol = TLSv1.2\n\tssl.provider = null\n\tssl.secure.random.implementation = null\n\tssl.trustmanager.algorithm = PKIX\n\tssl.truststore.certificates = null\n\tssl.truststore.location = null\n\tssl.truststore.password = 
null\n\tssl.truststore.type = JKS\n\tvalue.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n

[2025-12-11 00:01:00.555] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.common.telemetry.internals.KafkaMetricsCollector.270] - initializing Kafka metrics collector

[2025-12-11 00:01:00.557] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.125] - Kafka version: 3.9.1

[2025-12-11 00:01:00.557] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.126] - Kafka commitId: f745dfdcee2b9851

[2025-12-11 00:01:00.557] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.127] - Kafka startTimeMs: 1765382460557

[2025-12-11 00:01:00.557] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ClassicKafkaConsumer.481] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Subscribed to topic(s): idiag-udp-800-stat

[2025-12-11 00:01:00.578] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.ConsumerConfig.371] - ConsumerConfig values: \n\tallow.auto.create.topics = true\n\tauto.commit.interval.ms = 5000\n\tauto.include.jmx.reporter = true\n\tauto.offset.reset = latest\n\tbootstrap.servers = [7.192.149.62:9092, 7.192.148.38:9092, 7.192.149.235:9092, 7.192.148.14:9092, 7.192.148.143:9092, 7.192.148.141:9092, 7.192.148.70:9092, 7.192.149.111:9092, 7.192.150.201:9092, 7.192.149.92:9092]\n\tcheck.crcs = true\n\tclient.dns.lookup = use_all_dns_ips\n\tclient.id = consumer-apigc-consumer-98757-112565\n\tclient.rack = \n\tconnections.max.idle.ms = 540000\n\tdefault.api.timeout.ms = 60000\n\tenable.auto.commit = false\n\tenable.metrics.push = true\n\texclude.internal.topics = true\n\tfetch.max.bytes = 52428800\n\tfetch.max.wait.ms = 60000\n\tfetch.min.bytes = 1\n\tgroup.id = apigc-consumer-98757\n\tgroup.instance.id = null\n\tgroup.protocol = classic\n\tgroup.remote.assignor = null\n\theartbeat.interval.ms = 3000\n\tinterceptor.classes = []\n\tinternal.leave.group.on.close = true\n\tinternal.throw.on.fetch.stable.offset.unsupported = false\n\tisolation.level = read_uncommitted\n\tkey.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n\tmax.partition.fetch.bytes = 1048576\n\tmax.poll.interval.ms = 600\n\tmax.poll.records = 1000\n\tmetadata.max.age.ms = 300000\n\tmetadata.recovery.strategy = none\n\tmetric.reporters = []\n\tmetrics.num.samples = 2\n\tmetrics.recording.level = INFO\n\tmetrics.sample.window.ms = 30000\n\tpartition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor, class org.apache.kafka.clients.consumer.CooperativeStickyAssignor]\n\treceive.buffer.bytes = 65536\n\treconnect.backoff.max.ms = 1000\n\treconnect.backoff.ms = 50\n\trequest.timeout.ms = 70000\n\tretry.backoff.max.ms = 1000\n\tretry.backoff.ms = 100\n\tsasl.client.callback.handler.class = null\n\tsasl.jaas.config = 
null\n\tsasl.kerberos.kinit.cmd = /usr/bin/kinit\n\tsasl.kerberos.min.time.before.relogin = 60000\n\tsasl.kerberos.service.name = null\n\tsasl.kerberos.ticket.renew.jitter = 0.05\n\tsasl.kerberos.ticket.renew.window.factor = 0.8\n\tsasl.login.callback.handler.class = null\n\tsasl.login.class = null\n\tsasl.login.connect.timeout.ms = null\n\tsasl.login.read.timeout.ms = null\n\tsasl.login.refresh.buffer.seconds = 300\n\tsasl.login.refresh.min.period.seconds = 60\n\tsasl.login.refresh.window.factor = 0.8\n\tsasl.login.refresh.window.jitter = 0.05\n\tsasl.login.retry.backoff.max.ms = 10000\n\tsasl.login.retry.backoff.ms = 100\n\tsasl.mechanism = GSSAPI\n\tsasl.oauthbearer.clock.skew.seconds = 30\n\tsasl.oauthbearer.expected.audience = null\n\tsasl.oauthbearer.expected.issuer = null\n\tsasl.oauthbearer.header.urlencode = false\n\tsasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100\n\tsasl.oauthbearer.jwks.endpoint.url = null\n\tsasl.oauthbearer.scope.claim.name = scope\n\tsasl.oauthbearer.sub.claim.name = sub\n\tsasl.oauthbearer.token.endpoint.url = null\n\tsecurity.protocol = PLAINTEXT\n\tsecurity.providers = null\n\tsend.buffer.bytes = 131072\n\tsession.timeout.ms = 60000\n\tsocket.connection.setup.timeout.max.ms = 30000\n\tsocket.connection.setup.timeout.ms = 10000\n\tssl.cipher.suites = null\n\tssl.enabled.protocols = [TLSv1.2]\n\tssl.endpoint.identification.algorithm = https\n\tssl.engine.factory.class = null\n\tssl.key.password = null\n\tssl.keymanager.algorithm = SunX509\n\tssl.keystore.certificate.chain = null\n\tssl.keystore.key = null\n\tssl.keystore.location = null\n\tssl.keystore.password = null\n\tssl.keystore.type = JKS\n\tssl.protocol = TLSv1.2\n\tssl.provider = null\n\tssl.secure.random.implementation = null\n\tssl.trustmanager.algorithm = PKIX\n\tssl.truststore.certificates = null\n\tssl.truststore.location = 
null\n\tssl.truststore.password = null\n\tssl.truststore.type = JKS\n\tvalue.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n

[2025-12-11 00:01:00.578] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.common.telemetry.internals.KafkaMetricsCollector.270] - initializing Kafka metrics collector

[2025-12-11 00:01:00.580] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.125] - Kafka version: 3.9.1

[2025-12-11 00:01:00.580] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.126] - Kafka commitId: f745dfdcee2b9851

[2025-12-11 00:01:00.580] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.127] - Kafka startTimeMs: 1765382460580

[2025-12-11 00:01:00.580] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ClassicKafkaConsumer.481] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Subscribed to topic(s): T_gm_instance

[2025-12-11 00:01:00.583] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.Metadata.365] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Cluster ID: qO4zHm1-Tj-XzNnkbzMBPQ

[2025-12-11 00:01:00.584] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.937] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Discovered group coordinator 7.192.148.38:9092 (id: 2147483646 rack: null)

[2025-12-11 00:01:00.584] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:01:01.649] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.Metadata.365] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Cluster ID: qO4zHm1-Tj-XzNnkbzMBPQ

[2025-12-11 00:01:01.650] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.937] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Discovered group coordinator 7.192.148.38:9092 (id: 2147483646 rack: null)

[2025-12-11 00:01:01.650] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:01:05.579] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.666] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Successfully joined group with generation Generation{generationId=2895172, memberId='consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146', protocol='range'}

[2025-12-11 00:01:05.579] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.666] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Successfully joined group with generation Generation{generationId=2895172, memberId='consumer-apigc-consumer-98757-112565-eaac94b3-e477-4f01-9707-cbac065c041b', protocol='range'}

[2025-12-11 00:01:05.585] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.664] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Finished assignment for group at generation 2895172: {consumer-apigc-consumer-98757-112902-de6bd48e-6f45-48a9-ba11-660715428234=Assignment(partitions=[idiag-udp-800-stat-2, idiag-udp-800-stat-3]), consumer-apigc-consumer-98757-1-db6078ce-d505-43c5-80ad-d0e6d164d58d=Assignment(partitions=[idiag-udp-800-subapp-stat-0, idiag-udp-800-subapp-stat-1]), consumer-apigc-consumer-98757-112564-929082bc-0882-4835-b7a3-f15ad5de08c8=Assignment(partitions=[idiag-udp-800-stat-0, idiag-udp-800-stat-1]), consumer-apigc-consumer-98757-112903-facff737-fea5-43ab-9db9-383115d4834c=Assignment(partitions=[T_gm_instance-2]), consumer-apigc-consumer-98757-112565-eaac94b3-e477-4f01-9707-cbac065c041b=Assignment(partitions=[T_gm_instance-0, T_gm_instance-1]), consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146=Assignment(partitions=[idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3])}

[2025-12-11 00:01:05.592] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.843] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Successfully synced group in generation Generation{generationId=2895172, memberId='consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146', protocol='range'}

[2025-12-11 00:01:05.592] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.843] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Successfully synced group in generation Generation{generationId=2895172, memberId='consumer-apigc-consumer-98757-112565-eaac94b3-e477-4f01-9707-cbac065c041b', protocol='range'}

[2025-12-11 00:01:05.593] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.324] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Notifying assignor about the new Assignment(partitions=[idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3])

[2025-12-11 00:01:05.593] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.324] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Notifying assignor about the new Assignment(partitions=[T_gm_instance-0, T_gm_instance-1])

[2025-12-11 00:01:05.593] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.k.c.consumer.internals.ConsumerRebalanceListenerInvoker.58] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Adding newly assigned partitions: idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3

[2025-12-11 00:01:05.593] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.k.c.consumer.internals.ConsumerRebalanceListenerInvoker.58] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Adding newly assigned partitions: T_gm_instance-0, T_gm_instance-1

[2025-12-11 00:01:05.593] [kafka-topic2-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.internals.ConsumerUtils.209] - Setting offset for partition idiag-udp-800-subapp-stat-2 to the committed offset FetchPosition{offset=734291379, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=Optional[7.192.148.38:9092 (id: 1 rack: cn-south-3d###115acd0f76614ae1a730ee8722f6a95a)], epoch=absent}}

[2025-12-11 00:01:05.593] [kafka-topic2-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.internals.ConsumerUtils.209] - Setting offset for partition idiag-udp-800-subapp-stat-3 to the committed offset FetchPosition{offset=733888674, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=Optional[7.192.148.14:9092 (id: 3 rack: cn-south-3a###3a28bd93d53547aa9c109199039e5edd)], epoch=absent}}

[2025-12-11 00:01:05.593] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.internals.ConsumerUtils.209] - Setting offset for partition T_gm_instance-1 to the committed offset FetchPosition{offset=27759090, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=Optional[7.192.148.141:9092 (id: 5 rack: cn-south-3b###197dfeb3f3b84540a4c1e06f954302dc)], epoch=absent}}

[2025-12-11 00:01:05.594] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.internals.ConsumerUtils.209] - Setting offset for partition T_gm_instance-0 to the committed offset FetchPosition{offset=27774533, offsetEpoch=Optional.empty, currentLeader=LeaderAndEpoch{leader=Optional[7.192.148.14:9092 (id: 3 rack: cn-south-3a###3a28bd93d53547aa9c109199039e5edd)], epoch=absent}}

[2025-12-11 00:01:05.681] [kafka-topic2-task-pool-0~289] [INFO] [--] [c.h.it.gaia.apigc.service.task.KafkaTopic2DataConsumeService.208] - save apiInvokeDataDOList size:95 to topic2 tbl a.

[2025-12-11 00:01:06.053] [kafka-topic2-task-pool-0~289] [INFO] [--] [c.h.it.gaia.apigc.service.task.KafkaTopic2DataConsumeService.208] - save apiInvokeDataDOList size:600 to topic2 tbl a.

[2025-12-11 00:01:06.176] [kafka-topic1-task-pool-0~289] [INFO] [--] [SqlLog@com.huawei.125] - Cost 525ms | com.huawei.it.gaia.apigc.infrastructure.mapper.IInvokeRelationMapper.insertInstanceMapping | printing sql is not allowed

[2025-12-11 00:01:06.176] [kafka-topic1-task-pool-0~289] [INFO] [--] [c.huawei.it.gaia.apigc.service.task.KafkaDataConsumeService.313] - insert instanceMappingDOList size:10968

[2025-12-11 00:01:06.292] [kafka-coordinator-heartbeat-thread | apigc-consumer-98757~289] [WARN] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1148] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] consumer poll timeout has expired. This means the time between subsequent calls to poll() was longer than the configured max.poll.interval.ms, which typically implies that the poll loop is spending too much time processing messages. You can address this either by increasing max.poll.interval.ms or by reducing the maximum size of batches returned in poll() with max.poll.records.

[2025-12-11 00:01:06.292] [kafka-coordinator-heartbeat-thread | apigc-consumer-98757~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1174] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Member consumer-apigc-consumer-98757-112565-eaac94b3-e477-4f01-9707-cbac065c041b sending LeaveGroup request to coordinator 7.192.148.38:9092 (id: 2147483646 rack: null) due to consumer poll timeout has expired.

[2025-12-11 00:01:06.294] [kafka-coordinator-heartbeat-thread | apigc-consumer-98757~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1056] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Resetting generation and member id due to: consumer pro-actively leaving the group

[2025-12-11 00:01:06.294] [kafka-coordinator-heartbeat-thread | apigc-consumer-98757~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1103] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Request joining group due to: consumer pro-actively leaving the group

[2025-12-11 00:01:06.353] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.666] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Successfully joined group with generation Generation{generationId=2895172, memberId='consumer-apigc-consumer-98757-112564-929082bc-0882-4835-b7a3-f15ad5de08c8', protocol='range'}

[2025-12-11 00:01:06.358] [kafka-coordinator-heartbeat-thread | apigc-consumer-98757~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.867] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] SyncGroup failed: The group began another rebalance. Need to re-join the group. Sent generation was Generation{generationId=2895172, memberId='consumer-apigc-consumer-98757-112564-929082bc-0882-4835-b7a3-f15ad5de08c8', protocol='range'}

[2025-12-11 00:01:06.358] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1103] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Request joining group due to: rebalance failed due to 'The group is rebalancing, so a rejoin is needed.' (RebalanceInProgressException)

[2025-12-11 00:01:06.358] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:01:07.353] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1271] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Failing OffsetCommit request since the consumer is not part of an active group

[2025-12-11 00:01:07.354] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.k.c.consumer.internals.ConsumerRebalanceListenerInvoker.106] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Lost previously assigned partitions T_gm_instance-0, T_gm_instance-1

[2025-12-11 00:01:07.354] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1056] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Resetting generation and member id due to: consumer pro-actively leaving the group

[2025-12-11 00:01:07.354] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1103] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Request joining group due to: consumer pro-actively leaving the group

[2025-12-11 00:01:07.357] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.FetchSessionHandler.556] - [Consumer clientId=consumer-apigc-consumer-98757-112565, groupId=apigc-consumer-98757] Node 3 sent an invalid full fetch response with extraPartitions=(T_gm_instance-0), response=(T_gm_instance-0)

[2025-12-11 00:01:07.357] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.685] - Metrics scheduler closed

[2025-12-11 00:01:07.357] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.689] - Closing reporter org.apache.kafka.common.metrics.JmxReporter

[2025-12-11 00:01:07.357] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.689] - Closing reporter org.apache.kafka.common.telemetry.internals.ClientTelemetryReporter

[2025-12-11 00:01:07.358] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.695] - Metrics reporters closed

[2025-12-11 00:01:07.359] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.89] - App info kafka.consumer for consumer-apigc-consumer-98757-112565 unregistered

[2025-12-11 00:01:07.360] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1174] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Member consumer-apigc-consumer-98757-112564-929082bc-0882-4835-b7a3-f15ad5de08c8 sending LeaveGroup request to coordinator 7.192.148.38:9092 (id: 2147483646 rack: null) due to the consumer is being closed

[2025-12-11 00:01:07.360] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1056] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Resetting generation and member id due to: consumer pro-actively leaving the group

[2025-12-11 00:01:07.361] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1103] - [Consumer clientId=consumer-apigc-consumer-98757-112564, groupId=apigc-consumer-98757] Request joining group due to: consumer pro-actively leaving the group

[2025-12-11 00:01:08.581] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1103] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Request joining group due to: group is already rebalancing

[2025-12-11 00:01:08.581] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.k.c.consumer.internals.ConsumerRebalanceListenerInvoker.80] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Revoke previously assigned partitions idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3

[2025-12-11 00:01:08.581] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:01:08.700] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.666] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Successfully joined group with generation Generation{generationId=2895173, memberId='consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146', protocol='range'}

[2025-12-11 00:01:08.701] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.685] - Metrics scheduler closed

[2025-12-11 00:01:08.701] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.689] - Closing reporter org.apache.kafka.common.metrics.JmxReporter

[2025-12-11 00:01:08.701] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.689] - Closing reporter org.apache.kafka.common.telemetry.internals.ClientTelemetryReporter

[2025-12-11 00:01:08.701] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.metrics.Metrics.695] - Metrics reporters closed

[2025-12-11 00:01:08.702] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.664] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Finished assignment for group at generation 2895173: {consumer-apigc-consumer-98757-112902-39ef1421-662a-4e3f-a94a-d3c0df2bb0b2=Assignment(partitions=[idiag-udp-800-stat-2, idiag-udp-800-stat-3]), consumer-apigc-consumer-98757-1-db6078ce-d505-43c5-80ad-d0e6d164d58d=Assignment(partitions=[idiag-udp-800-subapp-stat-0, idiag-udp-800-subapp-stat-1]), consumer-apigc-consumer-98757-112564-929082bc-0882-4835-b7a3-f15ad5de08c8=Assignment(partitions=[idiag-udp-800-stat-0, idiag-udp-800-stat-1]), consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146=Assignment(partitions=[idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3])}

[2025-12-11 00:01:08.702] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.867] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] SyncGroup failed: The group began another rebalance. Need to re-join the group. Sent generation was Generation{generationId=2895173, memberId='consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146', protocol='range'}

[2025-12-11 00:01:08.702] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.1103] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Request joining group due to: rebalance failed due to 'The group is rebalancing, so a rejoin is needed.' (RebalanceInProgressException)

[2025-12-11 00:01:08.702] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:01:08.702] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.89] - App info kafka.consumer for consumer-apigc-consumer-98757-112564 unregistered

[2025-12-11 00:01:08.703] [kafka-topic1-task-pool-0~289] [ERROR] [--] [c.huawei.it.gaia.apigc.service.task.KafkaDataConsumeService.159] - error during consume data from kafka and task stop!.

[2025-12-11 00:02:00.562] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.ConsumerConfig.371] - ConsumerConfig values: \n\tallow.auto.create.topics = true\n\tauto.commit.interval.ms = 5000\n\tauto.include.jmx.reporter = true\n\tauto.offset.reset = latest\n\tbootstrap.servers = [7.192.149.62:9092, 7.192.148.38:9092, 7.192.149.235:9092, 7.192.148.14:9092, 7.192.148.143:9092, 7.192.148.141:9092, 7.192.148.70:9092, 7.192.149.111:9092, 7.192.150.201:9092, 7.192.149.92:9092]\n\tcheck.crcs = true\n\tclient.dns.lookup = use_all_dns_ips\n\tclient.id = consumer-apigc-consumer-98757-112566\n\tclient.rack = \n\tconnections.max.idle.ms = 540000\n\tdefault.api.timeout.ms = 60000\n\tenable.auto.commit = false\n\tenable.metrics.push = true\n\texclude.internal.topics = true\n\tfetch.max.bytes = 52428800\n\tfetch.max.wait.ms = 60000\n\tfetch.min.bytes = 1\n\tgroup.id = apigc-consumer-98757\n\tgroup.instance.id = null\n\tgroup.protocol = classic\n\tgroup.remote.assignor = null\n\theartbeat.interval.ms = 3000\n\tinterceptor.classes = []\n\tinternal.leave.group.on.close = true\n\tinternal.throw.on.fetch.stable.offset.unsupported = false\n\tisolation.level = read_uncommitted\n\tkey.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n\tmax.partition.fetch.bytes = 1048576\n\tmax.poll.interval.ms = 600\n\tmax.poll.records = 1000\n\tmetadata.max.age.ms = 300000\n\tmetadata.recovery.strategy = none\n\tmetric.reporters = []\n\tmetrics.num.samples = 2\n\tmetrics.recording.level = INFO\n\tmetrics.sample.window.ms = 30000\n\tpartition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor, class org.apache.kafka.clients.consumer.CooperativeStickyAssignor]\n\treceive.buffer.bytes = 65536\n\treconnect.backoff.max.ms = 1000\n\treconnect.backoff.ms = 50\n\trequest.timeout.ms = 70000\n\tretry.backoff.max.ms = 1000\n\tretry.backoff.ms = 100\n\tsasl.client.callback.handler.class = null\n\tsasl.jaas.config = 
null\n\tsasl.kerberos.kinit.cmd = /usr/bin/kinit\n\tsasl.kerberos.min.time.before.relogin = 60000\n\tsasl.kerberos.service.name = null\n\tsasl.kerberos.ticket.renew.jitter = 0.05\n\tsasl.kerberos.ticket.renew.window.factor = 0.8\n\tsasl.login.callback.handler.class = null\n\tsasl.login.class = null\n\tsasl.login.connect.timeout.ms = null\n\tsasl.login.read.timeout.ms = null\n\tsasl.login.refresh.buffer.seconds = 300\n\tsasl.login.refresh.min.period.seconds = 60\n\tsasl.login.refresh.window.factor = 0.8\n\tsasl.login.refresh.window.jitter = 0.05\n\tsasl.login.retry.backoff.max.ms = 10000\n\tsasl.login.retry.backoff.ms = 100\n\tsasl.mechanism = GSSAPI\n\tsasl.oauthbearer.clock.skew.seconds = 30\n\tsasl.oauthbearer.expected.audience = null\n\tsasl.oauthbearer.expected.issuer = null\n\tsasl.oauthbearer.header.urlencode = false\n\tsasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100\n\tsasl.oauthbearer.jwks.endpoint.url = null\n\tsasl.oauthbearer.scope.claim.name = scope\n\tsasl.oauthbearer.sub.claim.name = sub\n\tsasl.oauthbearer.token.endpoint.url = null\n\tsecurity.protocol = PLAINTEXT\n\tsecurity.providers = null\n\tsend.buffer.bytes = 131072\n\tsession.timeout.ms = 60000\n\tsocket.connection.setup.timeout.max.ms = 30000\n\tsocket.connection.setup.timeout.ms = 10000\n\tssl.cipher.suites = null\n\tssl.enabled.protocols = [TLSv1.2]\n\tssl.endpoint.identification.algorithm = https\n\tssl.engine.factory.class = null\n\tssl.key.password = null\n\tssl.keymanager.algorithm = SunX509\n\tssl.keystore.certificate.chain = null\n\tssl.keystore.key = null\n\tssl.keystore.location = null\n\tssl.keystore.password = null\n\tssl.keystore.type = JKS\n\tssl.protocol = TLSv1.2\n\tssl.provider = null\n\tssl.secure.random.implementation = null\n\tssl.trustmanager.algorithm = PKIX\n\tssl.truststore.certificates = null\n\tssl.truststore.location = 
null\n\tssl.truststore.password = null\n\tssl.truststore.type = JKS\n\tvalue.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n

[2025-12-11 00:02:00.562] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.common.telemetry.internals.KafkaMetricsCollector.270] - initializing Kafka metrics collector

[2025-12-11 00:02:00.564] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.125] - Kafka version: 3.9.1

[2025-12-11 00:02:00.564] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.126] - Kafka commitId: f745dfdcee2b9851

[2025-12-11 00:02:00.564] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.127] - Kafka startTimeMs: 1765382520564

[2025-12-11 00:02:00.565] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ClassicKafkaConsumer.481] - [Consumer clientId=consumer-apigc-consumer-98757-112566, groupId=apigc-consumer-98757] Subscribed to topic(s): idiag-udp-800-stat

[2025-12-11 00:02:00.585] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.consumer.ConsumerConfig.371] - ConsumerConfig values: \n\tallow.auto.create.topics = true\n\tauto.commit.interval.ms = 5000\n\tauto.include.jmx.reporter = true\n\tauto.offset.reset = latest\n\tbootstrap.servers = [7.192.149.62:9092, 7.192.148.38:9092, 7.192.149.235:9092, 7.192.148.14:9092, 7.192.148.143:9092, 7.192.148.141:9092, 7.192.148.70:9092, 7.192.149.111:9092, 7.192.150.201:9092, 7.192.149.92:9092]\n\tcheck.crcs = true\n\tclient.dns.lookup = use_all_dns_ips\n\tclient.id = consumer-apigc-consumer-98757-112567\n\tclient.rack = \n\tconnections.max.idle.ms = 540000\n\tdefault.api.timeout.ms = 60000\n\tenable.auto.commit = false\n\tenable.metrics.push = true\n\texclude.internal.topics = true\n\tfetch.max.bytes = 52428800\n\tfetch.max.wait.ms = 60000\n\tfetch.min.bytes = 1\n\tgroup.id = apigc-consumer-98757\n\tgroup.instance.id = null\n\tgroup.protocol = classic\n\tgroup.remote.assignor = null\n\theartbeat.interval.ms = 3000\n\tinterceptor.classes = []\n\tinternal.leave.group.on.close = true\n\tinternal.throw.on.fetch.stable.offset.unsupported = false\n\tisolation.level = read_uncommitted\n\tkey.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n\tmax.partition.fetch.bytes = 1048576\n\tmax.poll.interval.ms = 600\n\tmax.poll.records = 1000\n\tmetadata.max.age.ms = 300000\n\tmetadata.recovery.strategy = none\n\tmetric.reporters = []\n\tmetrics.num.samples = 2\n\tmetrics.recording.level = INFO\n\tmetrics.sample.window.ms = 30000\n\tpartition.assignment.strategy = [class org.apache.kafka.clients.consumer.RangeAssignor, class org.apache.kafka.clients.consumer.CooperativeStickyAssignor]\n\treceive.buffer.bytes = 65536\n\treconnect.backoff.max.ms = 1000\n\treconnect.backoff.ms = 50\n\trequest.timeout.ms = 70000\n\tretry.backoff.max.ms = 1000\n\tretry.backoff.ms = 100\n\tsasl.client.callback.handler.class = null\n\tsasl.jaas.config = 
null\n\tsasl.kerberos.kinit.cmd = /usr/bin/kinit\n\tsasl.kerberos.min.time.before.relogin = 60000\n\tsasl.kerberos.service.name = null\n\tsasl.kerberos.ticket.renew.jitter = 0.05\n\tsasl.kerberos.ticket.renew.window.factor = 0.8\n\tsasl.login.callback.handler.class = null\n\tsasl.login.class = null\n\tsasl.login.connect.timeout.ms = null\n\tsasl.login.read.timeout.ms = null\n\tsasl.login.refresh.buffer.seconds = 300\n\tsasl.login.refresh.min.period.seconds = 60\n\tsasl.login.refresh.window.factor = 0.8\n\tsasl.login.refresh.window.jitter = 0.05\n\tsasl.login.retry.backoff.max.ms = 10000\n\tsasl.login.retry.backoff.ms = 100\n\tsasl.mechanism = GSSAPI\n\tsasl.oauthbearer.clock.skew.seconds = 30\n\tsasl.oauthbearer.expected.audience = null\n\tsasl.oauthbearer.expected.issuer = null\n\tsasl.oauthbearer.header.urlencode = false\n\tsasl.oauthbearer.jwks.endpoint.refresh.ms = 3600000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.max.ms = 10000\n\tsasl.oauthbearer.jwks.endpoint.retry.backoff.ms = 100\n\tsasl.oauthbearer.jwks.endpoint.url = null\n\tsasl.oauthbearer.scope.claim.name = scope\n\tsasl.oauthbearer.sub.claim.name = sub\n\tsasl.oauthbearer.token.endpoint.url = null\n\tsecurity.protocol = PLAINTEXT\n\tsecurity.providers = null\n\tsend.buffer.bytes = 131072\n\tsession.timeout.ms = 60000\n\tsocket.connection.setup.timeout.max.ms = 30000\n\tsocket.connection.setup.timeout.ms = 10000\n\tssl.cipher.suites = null\n\tssl.enabled.protocols = [TLSv1.2]\n\tssl.endpoint.identification.algorithm = https\n\tssl.engine.factory.class = null\n\tssl.key.password = null\n\tssl.keymanager.algorithm = SunX509\n\tssl.keystore.certificate.chain = null\n\tssl.keystore.key = null\n\tssl.keystore.location = null\n\tssl.keystore.password = null\n\tssl.keystore.type = JKS\n\tssl.protocol = TLSv1.2\n\tssl.provider = null\n\tssl.secure.random.implementation = null\n\tssl.trustmanager.algorithm = PKIX\n\tssl.truststore.certificates = null\n\tssl.truststore.location = 
null\n\tssl.truststore.password = null\n\tssl.truststore.type = JKS\n\tvalue.deserializer = class org.apache.kafka.common.serialization.StringDeserializer\n

[2025-12-11 00:02:00.585] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.common.telemetry.internals.KafkaMetricsCollector.270] - initializing Kafka metrics collector

[2025-12-11 00:02:00.587] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.125] - Kafka version: 3.9.1

[2025-12-11 00:02:00.587] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.126] - Kafka commitId: f745dfdcee2b9851

[2025-12-11 00:02:00.587] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.common.utils.AppInfoParser.127] - Kafka startTimeMs: 1765382520587

[2025-12-11 00:02:00.588] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ClassicKafkaConsumer.481] - [Consumer clientId=consumer-apigc-consumer-98757-112567, groupId=apigc-consumer-98757] Subscribed to topic(s): T_gm_instance

[2025-12-11 00:02:00.590] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.Metadata.365] - [Consumer clientId=consumer-apigc-consumer-98757-112567, groupId=apigc-consumer-98757] Cluster ID: qO4zHm1-Tj-XzNnkbzMBPQ

[2025-12-11 00:02:00.591] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.937] - [Consumer clientId=consumer-apigc-consumer-98757-112567, groupId=apigc-consumer-98757] Discovered group coordinator 7.192.148.38:9092 (id: 2147483646 rack: null)

[2025-12-11 00:02:00.592] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-112567, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:02:01.644] [kafka-topic1-task-pool-0~289] [INFO] [--] [org.apache.kafka.clients.Metadata.365] - [Consumer clientId=consumer-apigc-consumer-98757-112566, groupId=apigc-consumer-98757] Cluster ID: qO4zHm1-Tj-XzNnkbzMBPQ

[2025-12-11 00:02:01.645] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.937] - [Consumer clientId=consumer-apigc-consumer-98757-112566, groupId=apigc-consumer-98757] Discovered group coordinator 7.192.148.38:9092 (id: 2147483646 rack: null)

[2025-12-11 00:02:01.645] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.605] - [Consumer clientId=consumer-apigc-consumer-98757-112566, groupId=apigc-consumer-98757] (Re-)joining group

[2025-12-11 00:02:08.701] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.666] - [Consumer clientId=consumer-apigc-consumer-98757-112566, groupId=apigc-consumer-98757] Successfully joined group with generation Generation{generationId=2895174, memberId='consumer-apigc-consumer-98757-112566-4ee0cee5-aa8b-4658-9ea1-58a7f4386d1b', protocol='range'}

[2025-12-11 00:02:08.701] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.666] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Successfully joined group with generation Generation{generationId=2895174, memberId='consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146', protocol='range'}

[2025-12-11 00:02:08.705] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.664] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Finished assignment for group at generation 2895174: {consumer-apigc-consumer-98757-112905-f111440a-1007-4008-a792-ea7ebb68cdda=Assignment(partitions=[T_gm_instance-2]), consumer-apigc-consumer-98757-112567-e694f1c9-a263-4558-adc9-ffeb2f3770ac=Assignment(partitions=[T_gm_instance-0, T_gm_instance-1]), consumer-apigc-consumer-98757-1-db6078ce-d505-43c5-80ad-d0e6d164d58d=Assignment(partitions=[idiag-udp-800-subapp-stat-0, idiag-udp-800-subapp-stat-1]), consumer-apigc-consumer-98757-112904-df25af77-1df0-42f7-9d14-181f20aa18d0=Assignment(partitions=[idiag-udp-800-stat-2, idiag-udp-800-stat-3]), consumer-apigc-consumer-98757-112566-4ee0cee5-aa8b-4658-9ea1-58a7f4386d1b=Assignment(partitions=[idiag-udp-800-stat-0, idiag-udp-800-stat-1]), consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146=Assignment(partitions=[idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3])}

[2025-12-11 00:02:08.708] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.843] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Successfully synced group in generation Generation{generationId=2895174, memberId='consumer-apigc-consumer-98757-2-bb905e91-d34a-4780-86fa-e451d39ef146', protocol='range'}

[2025-12-11 00:02:08.708] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.843] - [Consumer clientId=consumer-apigc-consumer-98757-112566, groupId=apigc-consumer-98757] Successfully synced group in generation Generation{generationId=2895174, memberId='consumer-apigc-consumer-98757-112566-4ee0cee5-aa8b-4658-9ea1-58a7f4386d1b', protocol='range'}

[2025-12-11 00:02:08.708] [kafka-topic2-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordinator.324] - [Consumer clientId=consumer-apigc-consumer-98757-2, groupId=apigc-consumer-98757] Notifying assignor about the new Assignment(partitions=[idiag-udp-800-subapp-stat-2, idiag-udp-800-subapp-stat-3])

[2025-12-11 00:02:08.708] [kafka-topic1-task-pool-0~289] [INFO] [--] [o.a.kafka.clients.consumer.internals.ConsumerCoordina

Can you find what the problem is here, and which statement is the error?
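Every line in the pasted excerpt is at INFO level, so there is no literal error statement to point to. One value in the ConsumerConfig dump does stand out: `max.poll.interval.ms = 600`, against Kafka's documented default of 300000. As a rough way to check this kind of thing, here is a minimal Python sketch that pulls the key/value pairs out of one `ConsumerConfig values:` line and flags a very low poll interval. The function names, the `SAMPLE` excerpt, and the 10-second threshold are assumptions made for illustration, not part of Kafka or of the original post.

```python
# Kafka's documented default for max.poll.interval.ms (5 minutes).
DEFAULT_MAX_POLL_INTERVAL_MS = 300_000
# Hypothetical threshold chosen for this sketch only: flag anything under 10 s.
MIN_REASONABLE_POLL_INTERVAL_MS = 10_000

# Shortened excerpt of the first log line above. The separators are the
# literal two-character sequences backslash-n and backslash-t, as pasted.
SAMPLE = (
    r"[2025-12-11 00:02:00.562] [kafka-topic1-task-pool-0~289] [INFO] [--] "
    r"[org.apache.kafka.clients.consumer.ConsumerConfig.371] - ConsumerConfig values: "
    r"\n\tallow.auto.create.topics = true\n\tclient.rack = "
    r"\n\theartbeat.interval.ms = 3000\n\tmax.poll.interval.ms = 600"
    r"\n\tmax.poll.records = 1000\n\tsession.timeout.ms = 60000\n"
)


def parse_consumer_config(line):
    """Return a dict of the 'key = value' pairs in one ConsumerConfig log line."""
    _, _, dump = line.partition("ConsumerConfig values:")
    # Turn the literal escape sequences into real newlines and tabs.
    dump = dump.replace("\\n", "\n").replace("\\t", "\t")
    config = {}
    for entry in dump.splitlines():
        key, sep, value = entry.partition("=")
        if sep:  # skip fragments that contain no '=' at all
            config[key.strip()] = value.strip()
    return config


def flag_suspicious(config):
    """Return human-readable warnings for settings that look unusual."""
    warnings = []
    raw = config.get("max.poll.interval.ms")
    if raw is not None and raw.isdigit():
        value = int(raw)
        if value < MIN_REASONABLE_POLL_INTERVAL_MS:
            warnings.append(
                f"max.poll.interval.ms = {value} ms "
                f"(default {DEFAULT_MAX_POLL_INTERVAL_MS} ms); "
                f"max.poll.records = {config.get('max.poll.records', '?')}"
            )
    return warnings


if __name__ == "__main__":
    for warning in flag_suspicious(parse_consumer_config(SAMPLE)):
        print(warning)
```

If the application takes longer than `max.poll.interval.ms` between two `poll()` calls, the consumer is treated as failed and leaves the group, which triggers a rebalance. With 600 ms and up to 1000 records per poll, that seems easy to hit. The high `generationId=2895174` and the client id suffixes such as `112566` would be consistent with consumers repeatedly rejoining, though the excerpt alone does not prove it.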

最新发布

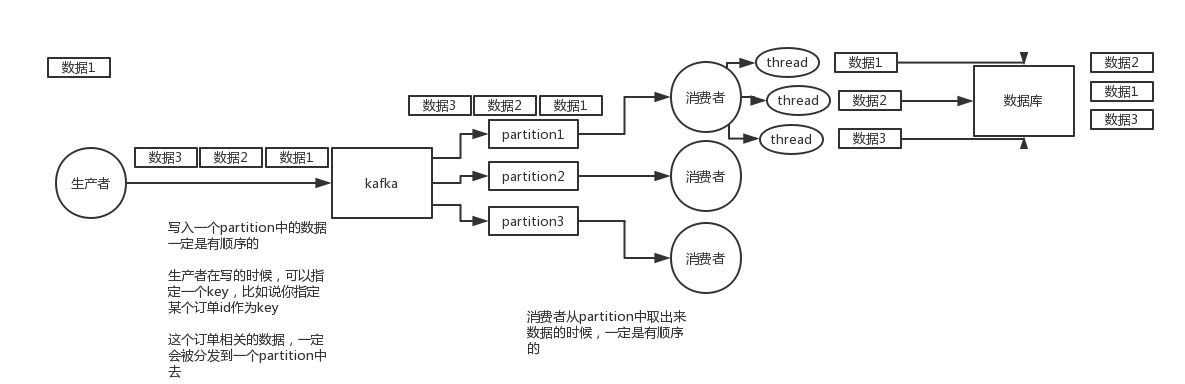

本文围绕Kafka展开,介绍了其消息顺序消费的方法,如通过partitionKey保证顺序;阐述了pull与push原理及优缺点;分析了数据丢失、积压和倾斜的情况及解决办法;还对比了Kafka与RabbitMQ、ZeroMQ、Redis等MQ的优缺点,展现了Kafka的特性。

本文围绕Kafka展开,介绍了其消息顺序消费的方法,如通过partitionKey保证顺序;阐述了pull与push原理及优缺点;分析了数据丢失、积压和倾斜的情况及解决办法;还对比了Kafka与RabbitMQ、ZeroMQ、Redis等MQ的优缺点,展现了Kafka的特性。

432

432
