Deep learning attracts lots of attention

You have probably already seen many exciting deep learning results. The number of deep learning applications keeps growing; for example, its use at Google has grown steadily over the years.

The history of deep learning: ups and downs

1958: Perceptron (a linear model). AI seems to have arrived in reality.
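To make "linear model" concrete, here is a minimal perceptron sketch in Python. It assumes the classic error-driven update rule (on a mistake, add or subtract the input to the weights) and a step activation; the function names and the AND/OR examples are illustrative, not taken from the lecture.

```python
# Minimal perceptron sketch: a linear model with a step activation.
def predict(w, b, x):
    """Output 1 if w . x + b > 0, else 0."""
    s = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if s > 0 else 0

def train_perceptron(data, epochs=20, lr=1.0):
    """data: list of (features, label) pairs with label in {0, 1}."""
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in data:
            err = y - predict(w, b, x)  # -1, 0 or +1
            if err != 0:
                # Move the decision boundary toward classifying x correctly
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:  # every example is classified correctly
            break
    return w, b

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # → [0, 0, 0, 1]
```

Because the model is linear, it can only learn labels that a single line (hyperplane) can separate, such as AND here.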

1969: The perceptron has limitations. Someone claimed that a perceptron could distinguish trucks from tanks, but it was later found that the truck pictures and the tank pictures had been taken on rainy and sunny days, respectively. The perceptron was only discriminating by luminance (brightness).

1980s: Multi-layer perceptron. Not significantly different from today's DNNs.

1986: Backpropagation. Usually, more than 3 hidden layers did not help.
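Backpropagation is the chain rule applied layer by layer, from the output back toward the input. The sketch below assumes a hypothetical network with one sigmoid hidden layer, a single linear output and a squared-error loss (the notes specify none of these), and checks the backpropagated gradients against central finite differences.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Hidden layer h = sigmoid(W1 x + b1), scalar output y = w2 . h + b2."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(wji * xi for wji, xi in zip(row, x)) + bj)
         for row, bj in zip(W1, b1)]
    y = sum(wj * hj for wj, hj in zip(w2, h)) + b2
    return h, y

def loss(params, x, t):
    """Squared error for a single example with target t."""
    _, y = forward(params, x)
    return 0.5 * (y - t) ** 2

def backprop(params, x, t):
    """Gradient of the loss w.r.t. every parameter, via the chain rule."""
    W1, b1, w2, b2 = params
    h, y = forward(params, x)
    dy = y - t                                    # dL/dy
    dw2 = [dy * hj for hj in h]                   # dL/dw2_j = dL/dy * h_j
    db2 = dy                                      # dL/db2
    # Back through the output weights and the sigmoid (sigma' = h * (1 - h))
    dz = [dy * wj * hj * (1.0 - hj) for wj, hj in zip(w2, h)]
    dW1 = [[dzj * xi for xi in x] for dzj in dz]  # dL/dW1_ji = dz_j * x_i
    db1 = list(dz)
    return dW1, db1, dw2, db2

def numeric_dW1(params, x, t, j, i, eps=1e-6):
    """Central finite difference for one first-layer weight (for checking)."""
    W1 = params[0]
    orig = W1[j][i]
    W1[j][i] = orig + eps
    plus = loss(params, x, t)
    W1[j][i] = orig - eps
    minus = loss(params, x, t)
    W1[j][i] = orig                               # restore the weight
    return (plus - minus) / (2 * eps)

random.seed(0)
n_in, n_hid = 2, 3
params = ([[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hid)],
          [0.0] * n_hid,
          [random.uniform(-1, 1) for _ in range(n_hid)],
          0.0)
x, t = [0.5, -1.0], 1.0
dW1, db1, dw2, db2 = backprop(params, x, t)
print(dW1[0][0], numeric_dW1(params, x, t, 0, 0))  # the two values should agree closely
```

A gradient-descent training step would then subtract a learning rate times each gradient from the corresponding parameter; the same backward pass extends to more layers by repeating the "through the weights, through the activation" step.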

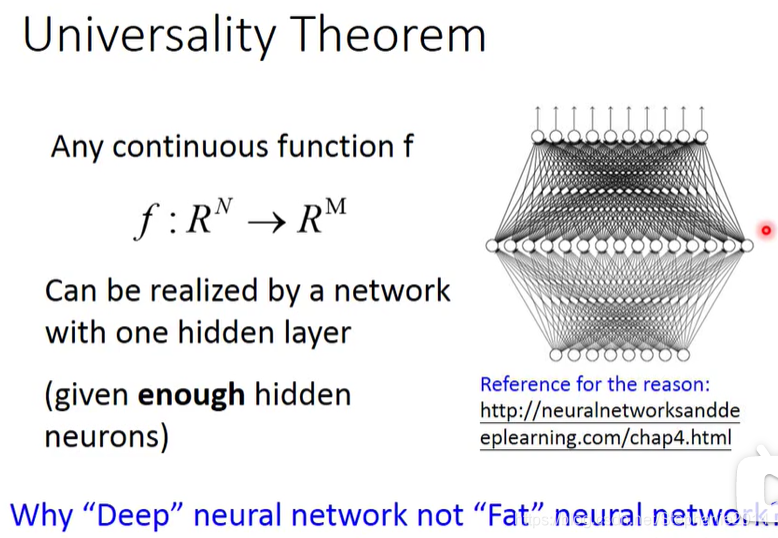

1989: One hidden layer is "good enough", so why go deep? (The effectiveness of neural networks was questioned, and neural networks got a bad reputation.)

One way to redeem the reputation of neural networks was to change the name, from "neural network" to "deep learning" (changing the name has great power).

2006: RBM initialization (a breakthrough). Researchers thought it worked because it seemed so powerful. But after many experiments, they found that RBM initialization is complicated and not very useful. Still, it drew great attention to deep learning, like the stone in the "stone soup" folk tale: the stone itself adds nothing, but it gets everyone to contribute.

2009: GPU

2011: Starts to become popular in speech recognition

2012: Wins the ILSVRC image competition

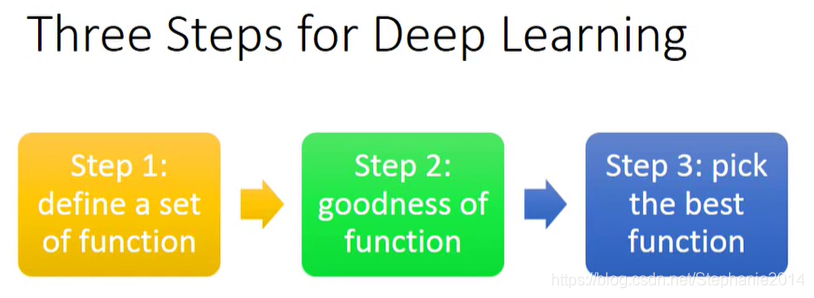

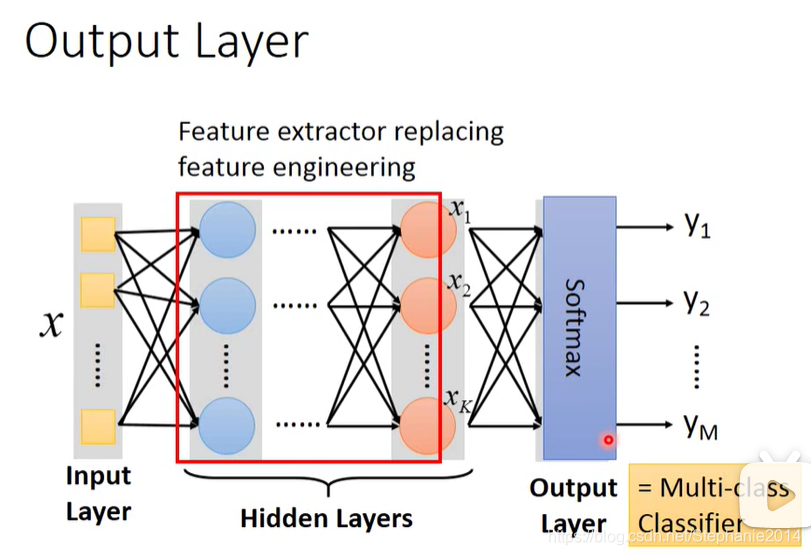

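Function set: a neural network structure defines a set of functions, and each choice of weights and biases picks one function from that set.

You need to decide the network structure so that a good function is in your function set.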
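The idea that a structure defines a function set can be sketched as follows, assuming a fully connected feedforward network with sigmoid activations in every layer (the notes do not fix the activation). `structure` lists hypothetical layer sizes; two different random parameter sets give two different functions from the same set, and changing `structure` changes the set itself.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(layer_sizes, seed=0):
    """One (weights, biases) pair per layer; layer_sizes is the structure."""
    rng = random.Random(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        W = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        b = [rng.uniform(-1, 1) for _ in range(n_out)]
        params.append((W, b))
    return params

def network(params, x):
    """Fully connected feedforward pass: a <- sigmoid(W a + b), layer by layer."""
    a = list(x)
    for W, b in params:
        a = [sigmoid(sum(wij * aj for wij, aj in zip(row, a)) + bi)
             for row, bi in zip(W, b)]
    return a

# Hypothetical structure: 2 inputs, two hidden layers of 3 neurons, 2 outputs
structure = [2, 3, 3, 2]
f1 = init_params(structure, seed=1)  # one function from the set
f2 = init_params(structure, seed=2)  # another function from the same set
x = [1.0, -1.0]
print(network(f1, x))
print(network(f2, x))
```

Training then means searching within this set for the parameters that give the best function.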

Q: How many layers? How many neurons in each layer? A: Trial and error, plus intuition and experience.

Q: Can the structure be determined automatically?

A: To some extent, e.g., Evolutionary Artificial Neural Networks.

Q: Can we design the network structure?

A: Yes, for example, the Convolutional Neural Network (CNN).

Toolkits

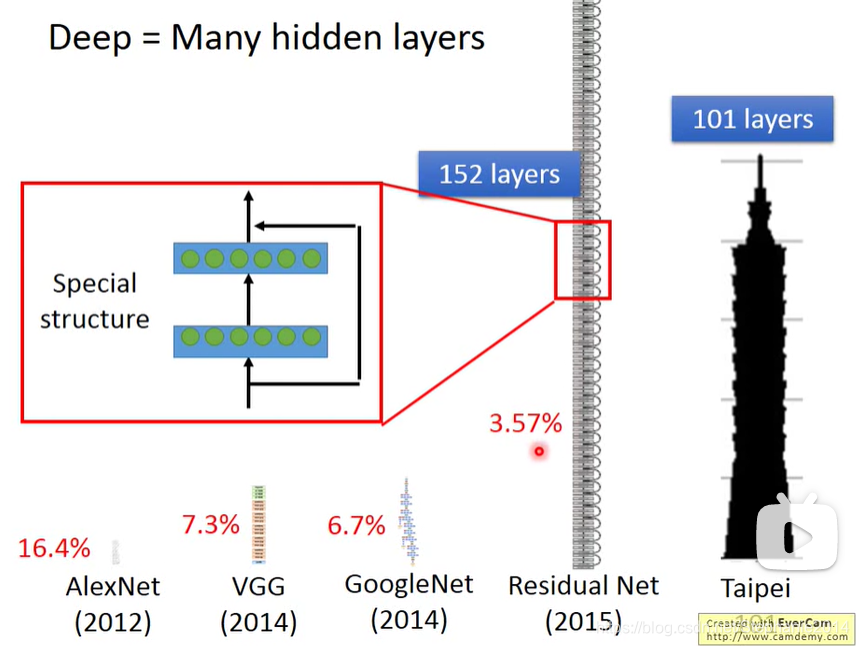

Deeper is better?

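This article outlines the history of deep learning, from the perceptron in 1958 to modern deep neural networks. Deep learning has gone through ups and downs, such as the multi-layer perceptron in the 1980s, backpropagation in 1986, and RBM initialization in 2006. With the use of GPUs and the win in the ILSVRC competition in 2012, deep learning gradually became mainstream. Although structure design still relies on experience and trial and error, automatic network structure design and evolutionary algorithms are also being developed. Today, deep learning is widely used in fields such as speech recognition and image recognition, and it continues to attract attention.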
