I. Dataset preparation

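- Dataset download: use torchvision, PyTorch's toolkit for images and video, to download the MNIST training and test images directly.

- torchvision: provides commonly used datasets, model architectures, and image transforms, with tools for tasks such as image classification, semantic segmentation, object detection, instance segmentation, keypoint detection, and video classification.

- The images are stored as raw bytes in binary files, not as viewable image files.

- During training and testing, the data is loaded with torch.utils.data.DataLoader.

- To view the images, convert the files to .jpg images and .txt label files with PyTorch's own tools (see the code in the article linked at the end of this post).

- Preprocessing: convert each image to a tensor with torchvision.transforms.ToTensor(), which scales pixel values to [0, 1]; normalizing with transforms.Normalize is optional.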
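The raw bytes mentioned above follow the IDX layout used by MNIST: a big-endian header (a magic number, then one 32-bit size per dimension) followed by one unsigned byte per pixel. The sketch below parses that layout with the standard library only. It assumes the downloaded .gz archive has already been decompressed (for example with Python's gzip module), and `parse_idx_images` is a hypothetical helper, not the conversion code from the linked article.

```python
import struct

def parse_idx_images(buf: bytes):
    """Parse an uncompressed IDX image file (the layout of MNIST's *-images-idx3-ubyte files)."""
    # Header: magic number, image count, rows, cols; all big-endian unsigned 32-bit ints.
    magic, count, rows, cols = struct.unpack_from(">IIII", buf, 0)
    if magic != 2051:  # 0x00000803: unsigned-byte data with 3 dimensions
        raise ValueError(f"not an IDX3 image file (magic={magic})")
    pixels = buf[16:]  # pixel data starts right after the 16-byte header
    images = []
    for n in range(count):
        start = n * rows * cols
        flat = pixels[start:start + rows * cols]
        # Split the flat run of bytes into rows of ints (0..255).
        images.append([list(flat[r * cols:(r + 1) * cols]) for r in range(rows)])
    return images

# Synthetic example: two 2x3 "images" packed the same way as MNIST.
header = struct.pack(">IIII", 2051, 2, 2, 3)
data = bytes([0, 128, 255, 10, 20, 30, 1, 2, 3, 4, 5, 6])
imgs = parse_idx_images(header + data)
print(imgs[0])  # [[0, 128, 255], [10, 20, 30]]
```

Each parsed image is a list of pixel rows, which could then be written out as a grayscale image with any imaging library.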

II. Define the network

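Network structure: input layer -> convolution layer -> activation function -> pooling -> fully connected layer

With the ReLU activation function:

- With max pooling, applying the activation before or after pooling gives identical results.

- With average pooling, the two orders generally give different results; applying the activation before pooling preserves more useful features, because negative responses are zeroed before averaging instead of cancelling out positive ones.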
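The ordering claim can be checked on a single pooling window. In this minimal sketch a plain Python list stands in for one window of a feature map (an illustrative assumption), and `relu`, `max_pool`, and `avg_pool` are hypothetical helper names.

```python
def relu(v):
    return max(0.0, v)

def max_pool(window):
    return max(window)

def avg_pool(window):
    return sum(window) / len(window)

window = [-3.0, 1.0, -1.0, 2.0]  # one hypothetical pooling window

# Max pooling: ReLU is monotonic non-decreasing, so both orders agree.
relu_then_max = max_pool([relu(v) for v in window])
max_then_relu = relu(max_pool(window))

# Average pooling: if ReLU comes second, negative values cancel positive ones first.
relu_then_avg = avg_pool([relu(v) for v in window])
avg_then_relu = relu(avg_pool(window))

print(relu_then_max, max_then_relu)  # 2.0 2.0
print(relu_then_avg, avg_then_relu)  # 0.75 0.0
```

Because the max-pooling orders always agree, the code section can place ReLU after MaxPool2d without changing the result.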

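Convolution layer computation:

- Output feature map size: (W_in - KernelSize + 2*Padding)/Stride + 1, rounded down, where W_in is the input width (the same formula gives the height)

- Input layer: Cin = number of image channels, Cout = number of filters in this layer

- Other layers: Cin = number of filters in the previous layer, Cout = number of filters in this layer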
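As a worked example of the size formula, the sketch below traces one 28×28 MNIST image through the layers of MyCNN from the code section, which is where the `20*4*4` input size of its fully connected layer comes from. It assumes pooling follows the same formula with kernel size 2 and stride 2, matching PyTorch's MaxPool2d(2), whose stride defaults to the kernel size. `conv_output_size` is a hypothetical helper name.

```python
def conv_output_size(w_in, kernel_size, stride=1, padding=0):
    """Output width (or height) of a convolution or pooling layer, rounded down."""
    return (w_in - kernel_size + 2 * padding) // stride + 1

size = 28                                               # MNIST images are 28x28
size = conv_output_size(size, kernel_size=5)            # conv1: 28 -> 24
size = conv_output_size(size, kernel_size=2, stride=2)  # max pool: 24 -> 12
size = conv_output_size(size, kernel_size=5)            # conv2: 12 -> 8
size = conv_output_size(size, kernel_size=2, stride=2)  # max pool: 8 -> 4
print(size, 20 * size * size)  # 4 320
```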

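Parameter count:

- Convolution layer: Cout*(KernelW*KernelH*Cin) + Cout (weights plus one bias per filter)

- Pooling layer: no learnable parameters

- Fully connected layer: in_features*out_features + out_features (weights plus one bias per output)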
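Applying these formulas to MyCNN gives its total parameter count. The helper names below are hypothetical; the total should agree with `sum(p.numel() for p in model.parameters())` computed in PyTorch.

```python
def conv_params(c_in, c_out, k_w, k_h):
    # Cout filters, each with k_w*k_h*c_in weights, plus one bias per filter
    return c_out * (k_w * k_h * c_in) + c_out

def fc_params(in_features, out_features):
    # Weight matrix plus one bias per output unit
    return in_features * out_features + out_features

conv1 = conv_params(1, 10, 5, 5)   # layer1 convolution
conv2 = conv_params(10, 20, 5, 5)  # layer2 convolution
fc = fc_params(20 * 4 * 4, 10)     # fully connected layer
total = conv1 + conv2 + fc         # pooling and ReLU add no parameters
print(conv1, conv2, fc, total)  # 260 5020 3210 8490
```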

Input layer: a single-channel 28×28 grayscale MNIST image (Cin = 1)

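Convolution layer:

- Kernel size: smaller is usually better, since it reduces the number of parameters, and a stack of several small kernels can produce the same output size as one large kernel (for example, two 3×3 convolutions versus one 5×5 convolution)

- Number of kernels: equals the number of output channels; more kernels extract more kinds of features. It is often a power of two (2^n)

- Number of convolution layers: determined by experimentation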
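A quick check of the kernel-size point: the sketch compares one 5×5 convolution with two stacked 3×3 convolutions on a 28×28 input, counting weights only (biases ignored). The channel count C = 10 is an arbitrary illustrative choice, kept equal for input and output, and the helper names are hypothetical.

```python
def conv_output_size(w_in, kernel_size, stride=1, padding=0):
    return (w_in - kernel_size + 2 * padding) // stride + 1

def conv_weights(c_in, c_out, k):
    return c_out * k * k * c_in  # weights only, biases ignored

C = 10  # hypothetical channel count for input and output
w = 28

# One 5x5 convolution
one_5x5_size = conv_output_size(w, 5)
one_5x5_weights = conv_weights(C, C, 5)

# Two stacked 3x3 convolutions
two_3x3_size = conv_output_size(conv_output_size(w, 3), 3)
two_3x3_weights = 2 * conv_weights(C, C, 3)

print(one_5x5_size, two_3x3_size)        # 24 24
print(one_5x5_weights, two_3x3_weights)  # 2500 1800
```

Both options shrink the map from 28 to 24, but the stacked 3×3 version uses 18 instead of 25 weights per input/output channel pair and adds an extra layer where a nonlinearity can be inserted.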

III. Define the loss and optimizer

IV. Code

# Import packages
import torch
from torch.utils.data import DataLoader  # iterates over a dataset in mini-batches
import torchvision
import torch.optim as optim

PATH = "D:/softwareDocument/CNN/Data/"  # directory where the dataset is stored
ROOT = "D:/softwareDocument/CNN/model.pt"  # file the trained model is saved to
BATCH_SIZE = 64
EPOCHS = 3
LEARNING_RATE = 0.001
MOMENTUM = 0.9
RANDOM_SEED = 1

torch.manual_seed(RANDOM_SEED)

# 1. Dataset preparation
# Download
# transform = torchvision.transforms.Compose([
#     torchvision.transforms.ToTensor(),
#     torchvision.transforms.Normalize(mean=[0.5], std=[0.5]),
# ])
train_data = torchvision.datasets.MNIST(
    root=PATH,  # save location
    train=True,  # True: training set
    transform=torchvision.transforms.ToTensor(),
    download=True,
)
test_data = torchvision.datasets.MNIST(
    root=PATH,  # save location
    train=False,  # False: test set
    transform=torchvision.transforms.ToTensor(),
    download=True,
)  # after downloading, four .gz archives appear (training/test images and labels)

# Preprocessing: wrap the datasets in DataLoaders that yield mini-batches
train_load = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)
test_load = DataLoader(dataset=test_data, batch_size=BATCH_SIZE, shuffle=False)  # no shuffling needed for evaluation

# 2. Define the network structure
class MyCNN(torch.nn.Module):
    def __init__(self):
        super(MyCNN, self).__init__()
        # 1x28x28 -> conv 5x5 -> 10x24x24 -> max pool 2 -> 10x12x12
        self.layer1 = torch.nn.Sequential(
            torch.nn.Conv2d(in_channels=1, out_channels=10, kernel_size=5, stride=1, padding=0),
            torch.nn.MaxPool2d(2),
            torch.nn.ReLU(),
        )
        # 10x12x12 -> conv 5x5 -> 20x8x8 -> max pool 2 -> 20x4x4
        self.layer2 = torch.nn.Sequential(
            torch.nn.Conv2d(in_channels=10, out_channels=20, kernel_size=5, stride=1, padding=0),
            torch.nn.MaxPool2d(2),
            torch.nn.ReLU(),
        )
        self.fc = torch.nn.Linear(20 * 4 * 4, 10)  # 320 flattened features -> 10 digit classes

    def forward(self, x):
        layer1_out = self.layer1(x)
        layer2_out = self.layer2(layer1_out)
        layer2_out = layer2_out.view(x.size(0), -1)  # flatten to (batch, 320)
        out = self.fc(layer2_out)
        return out

model = MyCNN()

# 3. Define the loss and optimizer
loss_func = torch.nn.CrossEntropyLoss()  # applies log-softmax internally, so forward() returns raw logits
optimizer = optim.SGD(model.parameters(), lr=LEARNING_RATE, momentum=MOMENTUM)

# 4. Training
train_loss = []
for epoch in range(EPOCHS):
    model.train()
    running_loss = 0.0
    for i, (batch_x, batch_y) in enumerate(train_load):
        optimizer.zero_grad()  # clear the gradients left over from the previous step
        out = model(batch_x)
        loss = loss_func(out, batch_y)
        loss.backward()  # backpropagation: compute the gradients
        optimizer.step()  # update the parameters
        running_loss += loss.item()
        if (i + 1) % 100 == 0:  # report the average loss over the last 100 batches
            print('[%d,%5d] loss:%.3f' % (epoch + 1, i + 1, running_loss / 100))
            train_loss.append(running_loss / 100)
            running_loss = 0.0
print('Finished Training')

# Save the model weights (reload with model.load_state_dict(torch.load(ROOT)))
torch.save(model.state_dict(), ROOT)

# 5. Testing: a single pass over the test set
model.eval()
correct = 0
total = 0
with torch.no_grad():
    for test_x, test_y in test_load:
        out = model(test_x)
        pred = torch.max(out, 1)[1]  # index of the largest logit = predicted digit
        correct += (pred == test_y).sum().item()
        total += test_y.size(0)
print('accuracy:\t', correct / total)
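Because the script above needs the MNIST download, the following sketch checks the network on random tensors instead. It repeats MyCNN in compact form so the block runs on its own; the random batch and labels are stand-ins rather than MNIST data, so the untrained model's predictions are meaningless and only the shapes and counts matter.

```python
import torch

# Compact copy of MyCNN (same layers as the script above) so this block is self-contained.
class MyCNN(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.layer1 = torch.nn.Sequential(
            torch.nn.Conv2d(1, 10, kernel_size=5), torch.nn.MaxPool2d(2), torch.nn.ReLU())
        self.layer2 = torch.nn.Sequential(
            torch.nn.Conv2d(10, 20, kernel_size=5), torch.nn.MaxPool2d(2), torch.nn.ReLU())
        self.fc = torch.nn.Linear(20 * 4 * 4, 10)

    def forward(self, x):
        x = self.layer2(self.layer1(x))
        return self.fc(x.view(x.size(0), -1))  # flatten to (batch, 320)

torch.manual_seed(1)
model = MyCNN()
fake_batch = torch.rand(8, 1, 28, 28)     # random stand-in for 8 MNIST images
fake_labels = torch.randint(0, 10, (8,))  # random stand-in labels

with torch.no_grad():
    out = model(fake_batch)
pred = out.argmax(dim=1)                          # predicted class per image
batch_correct = (pred == fake_labels).sum().item()  # same accuracy counting as the test loop
n_params = sum(p.numel() for p in model.parameters())

print(out.shape)  # torch.Size([8, 10])
print(n_params)   # 8490
```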

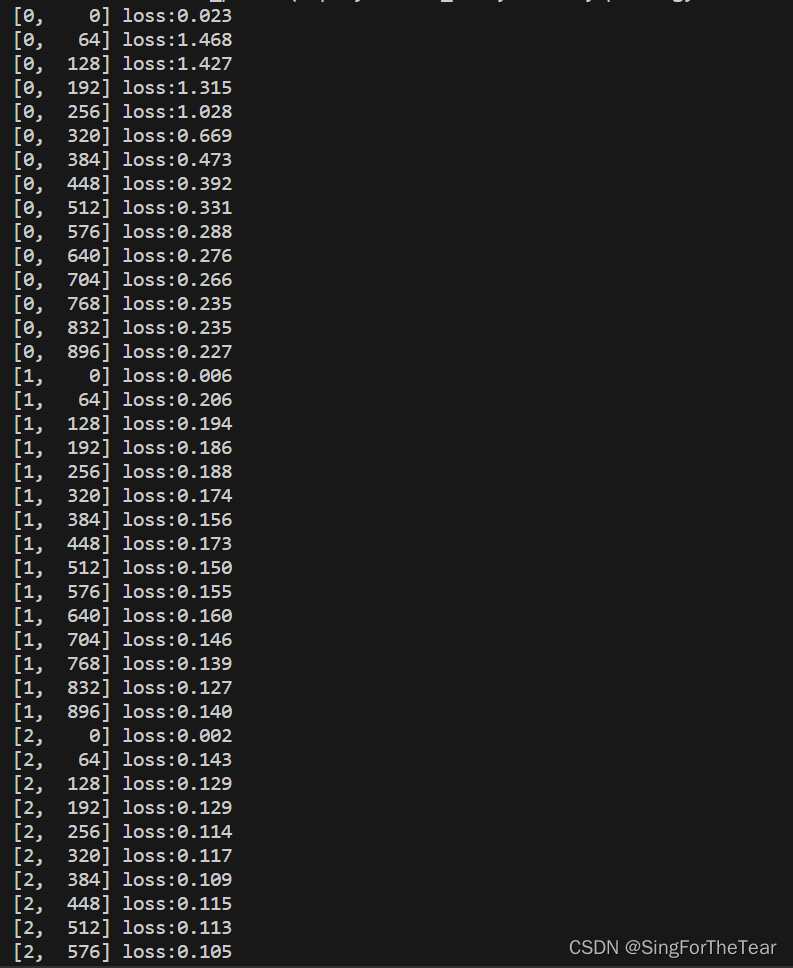

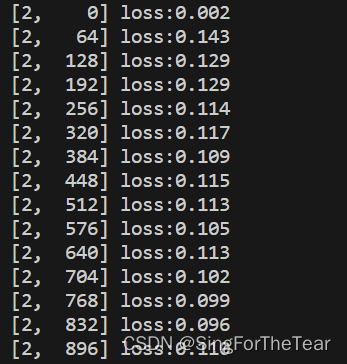

训练:

测试:
