准备阶段:

1.Python2.7

2.gensim模块下载,在dos窗口下执行pip install gensim

开始阶段:

1.从维基百科下载语料,大概1.45G左右https://dumps.wikimedia.org/zhwiki/latest/zhwiki-latest-pages-articles.xml.bz2

将**.xml.bz2文件转换为txt文档

代码如下

# -*- coding: utf-8 -*- import logging import os.path import sys import warnings warnings.filterwarnings(action='ignore', category=UserWarning, module='gensim') reload(sys) # Python2.5 初始化后会删除 sys.setdefaultencoding 这个方法,我们需要重新载入 sys.setdefaultencoding('utf-8') from gensim.corpora import WikiCorpus if __name__ == '__main__': program = os.path.basename(sys.argv[0]) logger = logging.getLogger(program) logging.basicConfig(format='%(asctime)s: %(levelname)s: %(message)s') logging.root.setLevel(level=logging.INFO) logger.info("running %s" % ' '.join(sys.argv)) if len(sys.argv) < 3: print (globals()['__doc__'] % locals()) sys.exit(1) inp, outp = sys.argv[1:3] space = " " i = 0 output = open(outp, 'w') wiki = WikiCorpus(inp, lemmatize=False, dictionary={}) for text in wiki.get_texts(): output.write(space.join(text)+'\n') i = i + 1 if (i % 10000 == 0): logger.info("Saved " + str(i) + " articles") output.close() logger.info("Finished Saved " + str(i) + " articles")

将代码放在刚下载的语料数据路径下,在dos窗口命令下,找到该文件路径,然后用python XXX.py zh-wiki-latest-pages-articles.xml.bz2 wiki.zh.txt命令运行XXX.py文件

2.处理过的文件简体繁体不分,所以需要繁简转换来将txt文件进行简体转换,此时用opencc进行繁简转换,在下载页下载相应的opencchttps://bintray.com/package/files/byvoid/opencc/OpenCC 然后解压到刚刚你的txt文件的路径下,打开文件夹,运行一下opencc.exe然后再次从dos窗口进入到当前文件夹,使用命令opencc -i wiki.zh.text -o wiki.zh.text.sim -c t2s.json

将文件繁简转换

3.将处理好的文件进行结巴分词,需要安装结巴分词,pip install jieba代码如下

# coding:utf-8 import jieba import sys reload(sys) sys.setdefaultencoding('utf-8') # 创建停用词list def stopwordslist(filepath): stopwords = [line.strip() for line in open(filepath, 'r').readlines()] #对文章的每一行作为一个元组对象存进去 return stopwords # 对句子进行分词 def seg_sentence(sentence): stopwords = stopwordslist('stopwords.txt') # 这里加载停用词的路径 outstr = '' for word in sentence: if word not in stopwords: if word != '\t': outstr += word return outstr inputs = open('wiki.jieba.txt', 'r') outputs = open('output.txt', 'w') for line in inputs: line_seg = seg_sentence(line) # 这里的返回值是字符串 outputs.write(line_seg +'\n') outputs.close() inputs.close()

4.将分好词的进行模型训练,这一步时间比较长

#!/usr/bin/env python #-*- coding:utf-8 -*- import logging import os.path import sys import multiprocessing from gensim.corpora import WikiCorpus from gensim.models import Word2Vec from gensim.models.word2vec import LineSentence if __name__ == '__main__': program = os.path.basename(sys.argv[0]) logger = logging.getLogger(program) logging.basicConfig(format='%(asctime)s: %(levelname)s: %(message)s') logging.root.setLevel(level=logging.INFO) logger.info("running %s" % ' '.join(sys.argv)) # check and process input arguments if len(sys.argv) < 4: print globals()['__doc__'] % locals() sys.exit(1) inp, outp1, outp2 = sys.argv[1:4] model = Word2Vec(LineSentence(inp), size=400, window=5, min_count=5, workers=multiprocessing.cpu_count()) model.save(outp1) model.save_word2vec_format(outp2, binary=False)

会生成如下的几个文件最主要的是**txt.model和**.vectors

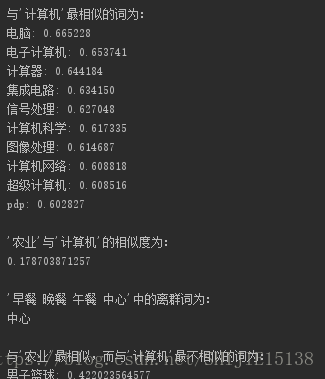

5,最后就是进行模型的检验了

# encoding:utf8 import gensim if __name__ == '__main__': model = gensim.models.Word2Vec.load('wiki.zh.txt.model') word1 = u'农业' word2 = u'计算机' if word1 in model: print (u"'%s'的词向量为: " % word1) print (model[word1]) else: print (u'单词不在字典中!') result = model.most_similar(word2) print (u"\n与'%s'最相似的词为: " % word2) for e in result: print ('%s: %f' % (e[0], e[1])) print (u"\n'%s'与'%s'的相似度为: " % (word1, word2)) print (model.similarity(word1, word2)) print (u"\n'早餐 晚餐 午餐 中心'中的离群词为: ") print (model.doesnt_match(u"早餐 晚餐 午餐 中心".split())) print (u"\n与'%s'最相似,而与'%s'最不相似的词为: " % (word1, word2)) temp = (model.most_similar(positive=[u'篮球'], negative=[u'计算机'], topn=1)) print ('%s: %s' % (temp[0][0], temp[0][1]))

到这里,我们就完成了!!!

832

832

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?