MGR简介

基于传统异步复制和半同步复制的缺陷——数据的一致性问题无法保证,MySQL官方在5.7.17版本正式推出组复制(MySQL Group Replication,简称MGR),以插件形式提供,实现了分布式下数据的最终一致性。

MGR特点如下: 1)高一致性,基于原生复制及paxos协议的组复制技术,并以插件的方式提供,提供一致数据安全保证; 2)高容错性,只要不是大多数节点坏掉就可以继续工作,有自动检测机制,当不同节点产生资源争用冲突时,不会出现错误,按照先到者优先原则进行处 理,并且内置了自动化脑裂防护机制; 3)高扩展性,节点的新增和移除都是自动的,新节点加入后,会自动从其他节点上同步状态,直到新节点和其他节点保持一致,如果某节点被移除了,其他节点自动更新组信息,自动维护新的组信息; 4)高灵活性,有单主模式和多主模式,在同一个group内,不允许两种模式同时存在。在单主模式下,主节点宕机时,会自动重新选择新的master,当旧的master恢复加入后,新master不会发生改变。所有更新操作都在主节点上进行;在多主模式下,所有的server成员都可以同时接受更新。 5) 由若干个节点共同组成一个复制组,一个事务的提交,必须经过组内大多数节点(N/2+1)决议并通过,才能得以提交。

wget https://downloads.mysql.com/archives/get/p/23/file/mysql-8.0.28-1.el7.x86_64.rpm-bundle.tar

MGR主要限制条件

-

仅支持InnoDB表,并且每张表一定要有一个主键,或者非Null+唯一键

-

必须打开GTID特性,二进制日志格式必须设置为ROW

-

不支持复制过滤,如果有节点设置了复制过滤,将影响节点间决议的达成。

-

不支持超大事务。

-

MGR忽略表锁和命名锁,在MGR中lock tables. unlock tables. get_lock. release_lock等这些表锁和命名锁将被忽略。

-

多主模式中,默认不支持Serializable隔离级别。

-

多主模式下,对同一个对象进行并发的有冲突的ddl和dml操作导致这种冲突在部分成员节点中无法检测到,最终可能导致数据不一致。

-

多主模式下可能导致死锁,比如select…for update在不同节点执行,由于多节点锁无法共享,很容易导致死锁。

其他等限制参考官方文档:

MySQL :: MySQL 8.0 Reference Manual :: 18.3.1 Group Replication Requirements

一、创建3台MySQL环境

1.1 申请3台linux主机

| 主机 | ip地址 | 操作系统版本 |

|---|---|---|

| mg1 | 192.168.2.112 | redhat 7 |

| mg2 | 192.168.2.34 | redhat 7 |

| mg3 | 192.168.2.170 | redhat 7 |

1.2. 安装mysql

services:

mysql:

image: mysql:8.0.28

container_name: mysql

environment:

MYSQL_ROOT_PASSWORD: Hbis@123

command: --default-authentication-plugin=mysql_native_password

ports:

- "3308:3306"

- "33061:33061"

network_mode: host

volumes:

- ./mysql_data:/var/lib/mysql

- ./my.cnf:/etc/mysql/conf.d/mysql.cnf

healthcheck:

test: [ "CMD", "mysqladmin" ,"ping", "-h", "localhost" ]

interval: 5s

timeout: 10s

retries: 20

proxysql:

image: proxysql/proxysql:latest

container_name: proxysql

ports:

- "6032:6032" # 管理端口

- "6033:6033" # 应用连接端口

volumes:

- ./proxysql.cnf:/etc/proxysql.cnf二、修改MySQL参数

--mgr1

cat > /etc/my.cnf <<"EOF"

[mysqld]

user=mysql

port=3308

character_set_server=utf8mb4

secure_file_priv=

server-id = 1

default-time-zone = '+8:00'

log_timestamps = SYSTEM

log-bin =

binlog_format=row

binlog_checksum=NONE

skip-name-resolve

log_slave_updates = 1

gtid-mode=ON

enforce-gtid-consistency=on

default_authentication_plugin=mysql_native_password

max_allowed_packet = 500M

lower_case_table_names = 1

sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION

expire_logs_days= 60

max_connections = 20000

innodb_buffer_pool_size=1024M

slow_query_log = ON

#slow_query_log_file=/usr/local/mysql/data/mysql-slow.log

#安装mysql_replication引擎前提

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log=lhrmgr31-relay-bin

#同步算法,官网建议设置该参数使用 XXHASH64 算法

transaction_write_set_extraction=XXHASH64

#集群的uuid,组名必须是一个uuid

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

#是否随着服务启动集群,这里设置否

loose-group_replication_start_on_boot=OFF

#集群本机端口,和服务端口不同,以本机端口 33061 接受来自组中成员的传入连接

loose-group_replication_local_address= "192.168.2.112:33061"

#集群包含的所有节点

loose-group_replication_group_seeds= "192.168.2.112:33061,192.168.2.34:33061,192.168.2.170:33061"

#是否设置为主节点,当创建集群时其他加入的节点都以该节点为目标加入集群

loose-group_replication_bootstrap_group=OFF

#设置白名单

loose-group_replication_ip_whitelist="192.168.2.112,192.168.2.34,192.168.2.170"

report_host=192.168.2.112

report_port=3308

EOF

-- mgr2

cat > /etc/my.cnf <<"EOF"

[mysqld]

user=mysql

port=3308

character_set_server=utf8mb4

secure_file_priv=

server-id = 2

default-time-zone = '+8:00'

log_timestamps = SYSTEM

log-bin =

binlog_format=row

binlog_checksum=NONE

skip-name-resolve

log_slave_updates = 1

gtid-mode=ON

enforce-gtid-consistency=on

default_authentication_plugin=mysql_native_password

max_allowed_packet = 500M

lower_case_table_names = 1

sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION

expire_logs_days= 60

max_connections = 20000

innodb_buffer_pool_size=1024M

slow_query_log = ON

#slow_query_log_file=/usr/local/mysql/data/mysql-slow.log

#安装mysql_replication引擎前提

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log=lhrmgr31-relay-bin

#同步算法,官网建议设置该参数使用 XXHASH64 算法

transaction_write_set_extraction=XXHASH64

#集群的uuid,组名必须是一个uuid

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

#是否随着服务启动集群,这里设置否

loose-group_replication_start_on_boot=OFF

#集群本机端口,和服务端口不同,以本机端口 33061 接受来自组中成员的传入连接

loose-group_replication_local_address= "192.168.2.34:33061"

#集群包含的所有节点

loose-group_replication_group_seeds= "192.168.2.112:33061,192.168.2.34:33061,192.168.2.170:33061"

#是否设置为主节点,当创建集群时其他加入的节点都以该节点为目标加入集群

loose-group_replication_bootstrap_group=OFF

#设置白名单

loose-group_replication_ip_whitelist="192.168.2.112,192.168.2.34,192.168.2.170"

report_host=192.168.2.34

report_port=3308

EOF

-- mgr3

cat > /etc/my.cnf <<"EOF"

[mysqld]

user=mysql

port=3308

character_set_server=utf8mb4

secure_file_priv=

server-id = 3

default-time-zone = '+8:00'

log_timestamps = SYSTEM

log-bin =

binlog_format=row

binlog_checksum=NONE

skip-name-resolve

log_slave_updates = 1

gtid-mode=ON

enforce-gtid-consistency=on

default_authentication_plugin=mysql_native_password

max_allowed_packet = 500M

lower_case_table_names = 1

sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION

expire_logs_days= 60

max_connections = 20000

innodb_buffer_pool_size=1024M

slow_query_log = ON

#slow_query_log_file=/usr/local/mysql/data/mysql-slow.log

#安装mysql_replication引擎前提

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log=lhrmgr31-relay-bin

#同步算法,官网建议设置该参数使用 XXHASH64 算法

transaction_write_set_extraction=XXHASH64

#集群的uuid,组名必须是一个uuid

loose-group_replication_group_name="aaaaaaaa-aaaa-aaaa-aaaa-aaaaaaaaaaaa"

#是否随着服务启动集群,这里设置否

loose-group_replication_start_on_boot=OFF

#集群本机端口,和服务端口不同,以本机端口 33061 接受来自组中成员的传入连接

loose-group_replication_local_address= "192.168.2.170:33061"

#集群包含的所有节点

loose-group_replication_group_seeds= "192.168.2.112:33061,192.168.2.34:33061,192.168.2.170:33061"

#是否设置为主节点,当创建集群时其他加入的节点都以该节点为目标加入集群

loose-group_replication_bootstrap_group=OFF

#设置白名单

loose-group_replication_ip_whitelist="192.168.2.112,192.168.2.34,192.168.2.170"

report_host=192.168.2.170

report_port=3308

EOF三、重启MySQL环境

查看MySQL的主机名、server_id和server_uuid

[root@mgr1 ~]# mysql -uroot -proot123 -e "select @@hostname,@@server_id,@@server_uuid"

mysql: [Warning] Using a password on the command line interface can be insecure.

+------------+-------------+--------------------------------------+

| @@hostname | @@server_id | @@server_uuid |

+------------+-------------+--------------------------------------+

| mgr1 | 1 | 63f351cc-cdf1-11ed-a386-0242ac48000b |

+------------+-------------+--------------------------------------+

[root@mgr2 /]# mysql -uroot -proot123 -e "select @@hostname,@@server_id,@@server_uuid"

mysql: [Warning] Using a password on the command line interface can be insecure.

+------------+-------------+--------------------------------------+

| @@hostname | @@server_id | @@server_uuid |

+------------+-------------+--------------------------------------+

| mgr2 | 2 | 3401b339-cdf1-11ed-9b3f-0242ac48000c |

+------------+-------------+--------------------------------------+

[root@mgr3 /]# mysql -uroot -proot123 -e "select @@hostname,@@server_id,@@server_uuid"

mysql: [Warning] Using a password on the command line interface can be insecure.

+------------+-------------+--------------------------------------+

| @@hostname | @@server_id | @@server_uuid |

+------------+-------------+--------------------------------------+

| mgr3 | 3 | 32b2fe4a-cdf1-11ed-989c-0242ac48000d |

+------------+-------------+--------------------------------------+四、安装MGR插件(所有节点执行)

mysql> install plugin group_replication soname 'group_replication.so';

Query OK, 0 rows affected (0.08 sec

MySQL [(none)]> show plugins;

+---------------------------------+----------+--------------------+----------------------+---------+

| Name | Status | Type | Library | License |

+---------------------------------+----------+--------------------+----------------------+---------+

...................................

| group_replication | ACTIVE | GROUP REPLICATION | group_replication.so | GPL |

+---------------------------------+----------+--------------------+----------------------+---------+

46 rows in set (0.01 sec)五、设置复制账号(所有节点执行)

-- 创建账号不记录binlog

mysql> SET SQL_LOG_BIN=0;

Query OK, 0 rows affected (0.00 sec)

mysql> CREATE USER repl@'%' IDENTIFIED BY 'repl';

Query OK, 0 rows affected (0.02 sec)

mysql> GRANT REPLICATION SLAVE ON *.* TO repl@'%';

Query OK, 0 rows affected (0.00 sec)

mysql> FLUSH PRIVILEGES;

Query OK, 0 rows affected (0.01 sec)

mysql> SET SQL_LOG_BIN=1;

Query OK, 0 rows affected (0.01 sec)

mysql> change replication source to source_user='repl', source_password='repl' for channel 'group_replication_recovery';

Query OK, 0 rows affected, 2 warnings (0.10 sec)六、启动MGR单主模式

6.1 启动MGR

-- 只在主库(192.168.2.112)上执行

mysql> set global group_replication_bootstrap_group=on;

Query OK, 0 rows affected (0.00 sec)

mysql> start group_replication;

Query OK, 0 rows affected, 1 warning (1.16 sec)

mysql> set global group_replication_bootstrap_group=off;

Query OK, 0 rows affected (0.02 sec)

-- 查看mgr组信息

select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)6.2 其他节点加入MGR

-- 在从库(192.168.2.170,192.168.2.34)上执行(必须先清理事务,否则加入组失败)

mysql> start group_replication;

ERROR 3092 (HY000): The server is not configured properly to be an active member of the group. Please see more details on error log.

mysql> reset master;

Query OK, 0 rows affected (0.15 sec)

mysql> start group_replication;

Query OK, 0 rows affected, 1 warning (4.01 sec)

-- 再次查看MGR组信息

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)结论:可以看到,3个节点状态为online,192.168.2.112为PRIMARY主节点,只有主节点可以写入,其他节点只读,MGR单主模式搭建成功,单主也是默认模式。

– 其他SECONDARY节点,执行写操作报错:

mysql> create database test;

ERROR 1290 (HY000): The MySQL server is running with the --super-read-only option so it cannot execute this statement七、测试同步

在主节点上创建测试数据,然后在其它节点查询:

-- 创建测试库

mysql> create database testdb;

Query OK, 1 row affected (0.09 sec)

-- 创建测试表,并插入测试数据

mysql> CREATE TABLE testdb.`t1` ( `id` int(11) NOT NULL AUTO_INCREMENT, `name` varchar(100) DEFAULT NULL, PRIMARY KEY (`id`) ) ENGINE=InnoDB;

Query OK, 0 rows affected, 1 warning (0.12 sec)

mysql> insert into testdb.t1 values (1,'tony');

Query OK, 1 row affected (0.09 sec)

mysql> insert into testdb.t1 values (2,'lion');

Query OK, 1 row affected (0.12 sec)

mysql> insert into testdb.t1 values (3,'jack');

Query OK, 1 row affected (0.05 sec)

mysql> select * from testdb.t1;

+----+------+

| id | name |

+----+------+

| 1 | tony |

| 2 | lion |

| 3 | jack |

+----+------+

3 rows in set (0.01 sec)

-- 其他2个节点查询出来的结果一样

mysql> select * from testdb.t1;

+----+------+

| id | name |

+----+------+

| 1 | tony |

| 2 | lion |

| 3 | jack |

+----+------+

3 rows in set (0.04 sec)八、多主和单主模式切换

7.1 查询当前模式

mysql> show variables like '%group_replication_single_primary_mode%';

+---------------------------------------+-------+

| Variable_name | Value |

+---------------------------------------+-------+

| group_replication_single_primary_mode | ON |

+---------------------------------------+-------+

1 row in set (0.08 sec)

mysql> SELECT @@group_replication_single_primary_mode;

+-----------------------------------------+

| @@group_replication_single_primary_mode |

+-----------------------------------------+

| 1 |

+-----------------------------------------+

1 row in set (0.00 sec)结论:参数group_replication_single_primary_mode为ON,表示单主模式。

7.2 函数实现多主和单主在线切换

函数切换:从MySQL 8.0.13开始,可以使用函数进行在线修改MGR模式,不需要重启组复制。

-- 单主切多主

select group_replication_switch_to_multi_primary_mode();

-- 多主切单主,入参需要传入主库的server_uuid

select group_replication_switch_to_single_primary_mode('@@server_uuid') ;7.2.1 单主切多主模式

mysql> select @@group_replication_single_primary_mode;

+-----------------------------------------+

| @@group_replication_single_primary_mode |

+-----------------------------------------+

| 1 |

+-----------------------------------------+

1 row in set (0.01 sec)

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)

-- 单主切多主

mysql> select group_replication_switch_to_multi_primary_mode();

+--------------------------------------------------+

| group_replication_switch_to_multi_primary_mode() |

+--------------------------------------------------+

| Mode switched to multi-primary successfully. |

+--------------------------------------------------+

1 row in set (1.38 sec)

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)

mysql> select @@group_replication_single_primary_mode;

+-----------------------------------------+

| @@group_replication_single_primary_mode |

+-----------------------------------------+

| 0 |

+-----------------------------------------+

1 row in set (0.02 sec)结论:所有成员角色都变为PRIMARY主节点,成功切换成多主模式。

7.2.2 多主切单主模式

-- 重新切换成单主模式,主节点选择mgr1

mysql> select @@group_replication_single_primary_mode;

+-----------------------------------------+

| @@group_replication_single_primary_mode |

+-----------------------------------------+

| 0 |

+-----------------------------------------+

1 row in set (0.02 sec)

mysql> select @@server_uuid;

+--------------------------------------+

| @@server_uuid |

+--------------------------------------+

| 63f351cc-cdf1-11ed-a386-0242ac48000b |

+--------------------------------------+

1 row in set (0.00 sec)

mysql> select group_replication_switch_to_single_primary_mode('63f351cc-cdf1-11ed-a386-0242ac48000b') ;

+-----------------------------------------------------------------------------------------+

| group_replication_switch_to_single_primary_mode('63f351cc-cdf1-11ed-a386-0242ac48000b') |

+-----------------------------------------------------------------------------------------+

| Mode switched to single-primary successfully. |

+-----------------------------------------------------------------------------------------+

1 row in set (0.59 sec)

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)

mysql> select @@group_replication_single_primary_mode;

+-----------------------------------------+

| @@group_replication_single_primary_mode |

+-----------------------------------------+

| 1 |

+-----------------------------------------+

1 row in set (0.00 sec)结论:成功切换成单主模式,主节点选择mgr1。

九 模拟主节点宕机

– 如果是其中一个SECONDARY宕机,则不影响集群运行,这里模拟主节点宕机。

9.1 查询当前MGR成员状态

mysql> select @@group_replication_single_primary_mode;

+-----------------------------------------+

| @@group_replication_single_primary_mode |

+-----------------------------------------+

| 1 |

+-----------------------------------------+

1 row in set (0.00 sec)

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)结论:当前为单主模式,所有成员都是online,主节点为mgr1。

9.2 模拟主节点宕机

[root@monitor mysql-cluster]# docker-compose down

[+] Running 3/3

✔ Container mysql Removed 7.7s

✔ Container proxysql Removed 0.8s

✔ Network mysql-cluster_default Removed 9.3 再次查看集群状态

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

2 rows in set (0.00 sec)结论:原主节点为mgr1状态变为UNREACHABLE,随后被剔除,主节点变为mgr3。

当进行自动主库选举时会按照下列优先级进行:

1.低版本优先,当组复制中同时存在高版本和低版本MySQL时,低版本会被选举为主库(向下兼容)。

2.如果有多个低版本,则会参考group_replication_member_weight(0-100)变量的值,默认为50,数字越大优先级越高。

3.如果版本,group_replication_member_weight都一样,最后考虑的因素是UUID的排序,UUID最小的选举为主库。

mysql> show variables like 'group_replication_member_weight';

+---------------------------------+-------+

| Variable_name | Value |

+---------------------------------+-------+

| group_replication_member_weight | 50 |

+---------------------------------+-------+

1 row in set (0.00 sec)9.4 新的主节点上操作

mysql> insert into testdb.t1 values (4,'leo');

Query OK, 1 row affected (0.00 sec)

mysql> insert into testdb.t1 values (5,'Aaron');

Query OK, 1 row affected (0.00 sec)

mysql> select * from testdb.t1;

+----+-------+

| id | name |

+----+-------+

| 1 | tony |

| 2 | lion |

| 3 | jack |

| 4 | leo |

| 5 | Aaron |

+----+-------+

5 rows in set (0.00 sec)

-- 其他节点查询结果一致

mysql> select * from testdb.t1;

+----+-------+

| id | name |

+----+-------+

| 1 | tony |

| 2 | lion |

| 3 | jack |

| 4 | leo |

| 5 | Aaron |

+----+-------+

5 rows in set (0.00 sec)9.5 原主节点重新启动

[root@monitor mysql-cluster]# docker exec -it mysql mysql -uroot -pHbis@123

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 405

Server version: 8.0.28 MySQL Community Server - GPL

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> SHOW VARIABLES LIKE 'bind_address';

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| bind_address | * |

mysql> select * from performance_schema.replication_group_members;

+---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | | | NULL | OFFLINE | | | |

+---------------------------+-----------+-------------+-------------+--------------+-------------+----------------+-----------------

1 row in set (0.03 sec)

-- 以备库角色加入原有的MGR群

mysql> start group_replication;

Query OK, 0 rows affected, 1 warning (20.74 sec)

mysql> select * from performance_schema.replication_group_members;

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| CHANNEL_NAME | MEMBER_ID | MEMBER_HOST | MEMBER_PORT | MEMBER_STATE | MEMBER_ROLE | MEMBER_VERSION | MEMBER_COMMUNICATION_STACK |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

| group_replication_applier | b6876345-8c95-11f0-8f09-fa163ee831ba | 192.168.2.112 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

| group_replication_applier | bb7db288-8c95-11f0-b3c8-fa163eb1e28d | 192.168.2.170 | 3308 | ONLINE | PRIMARY | 8.0.28 | XCom |

| group_replication_applier | be7b73a2-8c95-11f0-85a9-fa163ea70aac | 192.168.2.34 | 3308 | ONLINE | SECONDARY | 8.0.28 | XCom |

+---------------------------+--------------------------------------+---------------+-------------+--------------+-------------+----------------+----------------------------+

3 rows in set (0.00 sec)

mysql> select * from testdb.t1;

+----+-------+

| id | name |

+----+-------+

| 1 | tony |

| 2 | lion |

| 3 | jack |

| 4 | leo |

| 5 | Aaron |

+----+-------+

5 rows in set (0.02 sec)结论:原主节点为mgr1 以备库角色加入到集群,并且新主节点的操作也同步到同步到mgr1中。

注意: 原主节点为mgr1 启动后,不要以主节点的形式,加入到MGR集群中,无法成功加入到mgr集群,甚至会因为一些错误操作,需要重新初始化该节点,才能正常加入集群。所以,当原来的主节点宕机后,注意检查参数loose-group_replication_start_on_boot、loose-group_replication_bootstrap_group,建议参数文件中都设置成OFF。

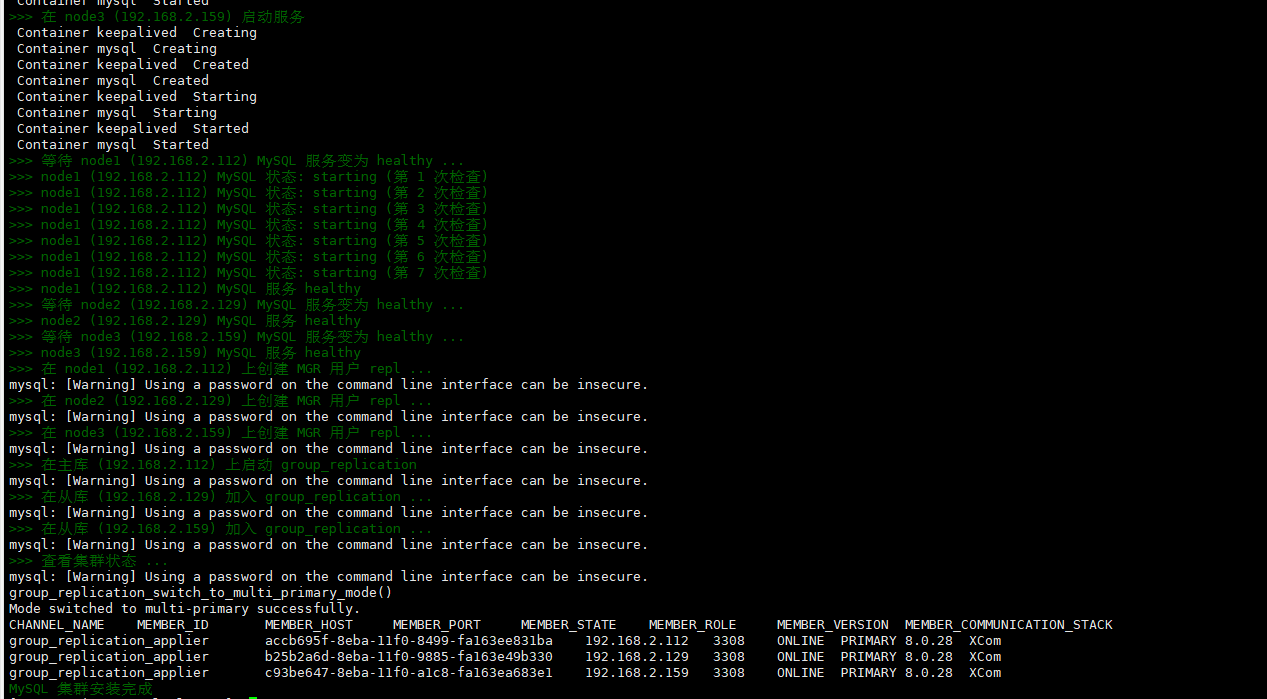

十、docker-compose快速安装脚本部署

install.sh

#!/bin/bash

this_script_dir=$(cd $(dirname ${BASH_SOURCE[0]}) && pwd)

cd $this_script_dir

source ./.env

set -e

function showMsg(){ echo -e "\033[32m$1\033[0m"; }

function showErr(){ echo -e "\033[31m$1\033[0m"; }

# 2. 节点与对应变量

declare -A NODES=(

[node1]="$MYSQL_NODE1_IP"

[node2]="$MYSQL_NODE2_IP"

[node3]="$MYSQL_NODE3_IP"

)

declare -A STATES=(

[node1]="MASTER"

[node2]="BACKUP"

[node3]="BACKUP"

)

declare -A IDs=(

[node1]="150"

[node2]="120"

[node3]="100"

)

# 端口检查函数

check_port() {

local PORT=$1

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "ss -lnt | awk '{print \$4}' | grep -q ':${PORT}\$'" \

&& { showErr ">>> $NODE 上端口 $PORT 已被占用"; exit 1; } \

|| showMsg ">>> $NODE 上端口 $PORT 可用"

done

}

# 拷贝镜像并加载到所有节点

load_image() {

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

showMsg ">>> 在 $NODE 创建目录 /opt/mysql-install/"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "mkdir -p /opt/mysql-install"

showMsg ">>> 拷贝 mysql.tar.gz keepalived.tar.gz 到 $NODE"

scp -o StrictHostKeyChecking=no mysql.tar.gz "$SSH_USER@$IP":/opt/mysql-install//mysql.tar.gz

scp -o StrictHostKeyChecking=no keepalived.tar.gz "$SSH_USER@$IP":/opt/mysql-install//keepalived.tar.gz

showMsg ">>> 在 $NODE 加载 mysql 镜像"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "docker load -i /opt/mysql-install/mysql.tar.gz"

showMsg ">>> 在 $NODE 加载 keepalived 镜像"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "docker load -i /opt/mysql-install/keepalived.tar.gz"

done

}

# 渲染配置文件

render_config() {

# 生成临时目录

TMP_DIR=$(mktemp -d)

trap "rm -rf $TMP_DIR" EXIT

# 修改配置文件

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

ID=${IDs[$NODE]}

STATE=${STATES[$NODE]}

showMsg ">>> 正在处理 $NODE ($IP) ..."

sed \

-e "s/MYSQL_PORT/$MYSQL_PORT/g" \

-e "s/STATE/$STATE/g" \

-e "s/MYSQL_NODE1_IP/$MYSQL_NODE1_IP/g" \

-e "s/MYSQL_NODE2_IP/$MYSQL_NODE2_IP/g" \

-e "s/MYSQL_NODE3_IP/$MYSQL_NODE3_IP/g" \

"conf/mysql-${NODE}.cnf" > "$TMP_DIR/my.cnf"

# 渲染 docker-compose.yml

cat > "$TMP_DIR/docker-compose.yml" <<EOF

services:

mysql:

image: mysql:8.0.28

container_name: mysql

environment:

MYSQL_ROOT_PASSWORD: $ROOT_PWD

volumes:

- ./mysql_data:/var/lib/mysql

- ./my.cnf:/etc/mysql/conf.d/mysql.cnf

network_mode: host

healthcheck:

test: [ "CMD", "mysqladmin" ,"ping", "-h", "localhost" ]

interval: 5s

timeout: 10s

retries: 20

keepalived:

image: keepalived

container_name: keepalived

restart: always

network_mode: host

cap_add:

- NET_ADMIN

- NET_BROADCAST

- NET_RAW

environment:

KEEPALIVED_INTERFACE: "$NIC"

KEEPALIVED_PASSWORD: "123456"

KEEPALIVED_STATE: "$STATE"

KEEPALIVED_PRIORITY: "$ID"

KEEPALIVED_UNICAST_PEERS: "#PYTHON2BASH:['$MYSQL_NODE1_IP','$MYSQL_NODE2_IP','$MYSQL_NODE3_IP']"

KEEPALIVED_VIRTUAL_IPS: "$VIP"

EOF

# 远程创建目录

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "mkdir -p /opt/mysql-install/"

# 拷贝文件

scp "$TMP_DIR/my.cnf" "$SSH_USER@$IP:/opt/mysql-install/"

scp "$TMP_DIR/docker-compose.yml" "$SSH_USER@$IP:/opt/mysql-install/"

#scp "./.env" "$SSH_USER@$IP:/opt/mysql-install/"

done

}

start_service(){

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

showMsg ">>> 在 $NODE ($IP) 启动服务"

ssh -o StrictHostKeyChecking=no "${SSH_USER}@${IP}" "docker-compose -f /opt/mysql-install/docker-compose.yml up -d"

done

}

init_mgr() {

# 1. 所有节点:创建 repl 用户

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

showMsg ">>> 在 $NODE ($IP) 上创建 MGR 用户 repl ..."

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "

docker exec mysql mysql -uroot -p$ROOT_PWD -e \"

set sql_log_bin=0;

create user if not exists 'repl'@'%' identified by 'repl';

grant replication slave on *.* to 'repl'@'%';

flush privileges;

set sql_log_bin=1;

change replication source to source_user='repl', source_password='repl' for channel 'group_replication_recovery';

\" "

done

# 2. 主库初始化

MASTER_IP=${NODES[node1]}

showMsg ">>> 在主库 ($MASTER_IP) 上启动 group_replication "

ssh -o StrictHostKeyChecking=no "$SSH_USER@$MASTER_IP" "

docker exec mysql mysql -uroot -p$ROOT_PWD -e \"

set global group_replication_bootstrap_group=on;

start group_replication;

set global group_replication_bootstrap_group=off;

\" "

# 3. 从库加入集群

for NODE in node2 node3; do

IP=${NODES[$NODE]}

showMsg ">>> 在从库 ($IP) 加入 group_replication ..."

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "

docker exec mysql mysql -uroot -p$ROOT_PWD -e \"

reset master;

start group_replication;

\" "

done

sleep 5

# 4. 在主库查看集群成员

showMsg ">>> 查看集群状态 ..."

ssh -o StrictHostKeyChecking=no "$SSH_USER@$MASTER_IP" "

docker exec mysql mysql -uroot -p$ROOT_PWD -e \"

select group_replication_switch_to_multi_primary_mode();

select * from performance_schema.replication_group_members;

\" "

}

wait_mysql_healthy() {

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

showMsg ">>> 等待 $NODE ($IP) MySQL 服务变为 healthy ..."

# 最多等待 300 秒

for i in {1..60}; do

STATUS=$(ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" \

"docker inspect --format='{{json .State.Health.Status}}' mysql 2>/dev/null" | tr -d '"')

if [ "$STATUS" == "healthy" ]; then

showMsg ">>> $NODE ($IP) MySQL 服务 healthy"

break

else

showMsg ">>> $NODE ($IP) MySQL 状态: $STATUS (第 $i 次检查)"

sleep 5

fi

if [ $i -eq 60 ]; then

showErr ">>> $NODE ($IP) MySQL 在 300 秒内未变为 healthy,退出!"

exit 1

fi

done

done

}

main() {

check_port $MYSQL_PORT

load_image

render_config

start_service

wait_mysql_healthy

sleep 3

init_mgr

showMsg "MySQL 集群安装完成"

}

main "$@"#!/bin/bash

this_script_dir=$(cd $(dirname ${BASH_SOURCE[0]}) && pwd)

cd $this_script_dir

source ./.env

set -e

function showMsg(){ echo -e "\033[32m$1\033[0m"; }

function showErr(){ echo -e "\033[31m$1\033[0m"; }

# 节点列表(跟部署脚本保持一致)

declare -A NODES=(

[node1]="$MYSQL_NODE1_IP"

[node2]="$MYSQL_NODE2_IP"

[node3]="$MYSQL_NODE3_IP"

)

uninstall_node(){

local NODE=$1

local IP=$2

showMsg ">>> [$NODE] 停止并删除容器"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "cd /opt/mysql-install && docker-compose down || true"

showMsg ">>> [$NODE] 删除容器残留"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "docker rm -f mysql keepalived >/dev/null 2>&1 || true"

showMsg ">>> [$NODE] 删除挂载目录 /opt/mysql-install"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "rm -rf /opt/mysql-install"

showMsg ">>> [$NODE] 删除 mysql/keepalived 镜像"

ssh -o StrictHostKeyChecking=no "$SSH_USER@$IP" "docker rmi -f mysql:8.0.28 keepalived >/dev/null 2>&1 || true"

}

main(){

for NODE in "${!NODES[@]}"; do

IP=${NODES[$NODE]}

if [ -z "$IP" ]; then

showErr "[$NODE] 没有配置 IP,跳过"

continue

fi

uninstall_node "$NODE" "$IP"

done

showMsg "MySQL 集群卸载完成"

}

main "$@"MYSQL_NODE1_IP=192.168.2.112

MYSQL_NODE2_IP=192.168.2.129

MYSQL_NODE3_IP=192.168.2.159

VIP=192.168.2.164 # keepalived的vip,需根据实际规划填写

NIC=eth0 # 安装机器的内网ip地址所属网卡名称

MYSQL_PORT=3308

SSH_USER=root

REPL_USER=repl

REPL_PWD=repl123

ROOT_PWD=Hbis@123

918

918

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?