The environment is as follows:

OS: Ubuntu 24.04.3 LTS

Three machines form a cluster with one master node and two worker nodes.

Kubernetes version: 1.34

Docker version: 29.0

| Role | IP |
|---|---|
| master | 192.168.200.201 |
| node1 | 192.168.200.202 |
| node2 | 192.168.200.203 |

Make sure the clocks on all machines are in sync and that every machine has a different hostname.
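As a rough sketch of that preparation, the helper below sets the hostname and turns on NTP time sync on one machine. The function names `hostname_for_ip` and `prepare_host` are made up for this example, and the IP-to-name mapping simply mirrors the table above; adjust both to your own hosts.

```shell
# hostname_for_ip IP: hypothetical helper that maps this guide's IPs to role names.
hostname_for_ip() {
  case "$1" in
    192.168.200.201) echo master ;;
    192.168.200.202) echo node1 ;;
    192.168.200.203) echo node2 ;;
    *) return 1 ;;
  esac
}

# prepare_host IP: set a unique hostname and enable NTP synchronization.
prepare_host() {
  local name
  name="$(hostname_for_ip "$1")" || { echo "unknown IP: $1" >&2; return 1; }
  sudo hostnamectl set-hostname "$name"
  sudo timedatectl set-ntp true
}

# Example (run on each machine with its own IP):
#   prepare_host 192.168.200.202
```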

1 Set up the Kubernetes cluster

Note: steps 1.1 through 1.4 must be run on all three hosts.

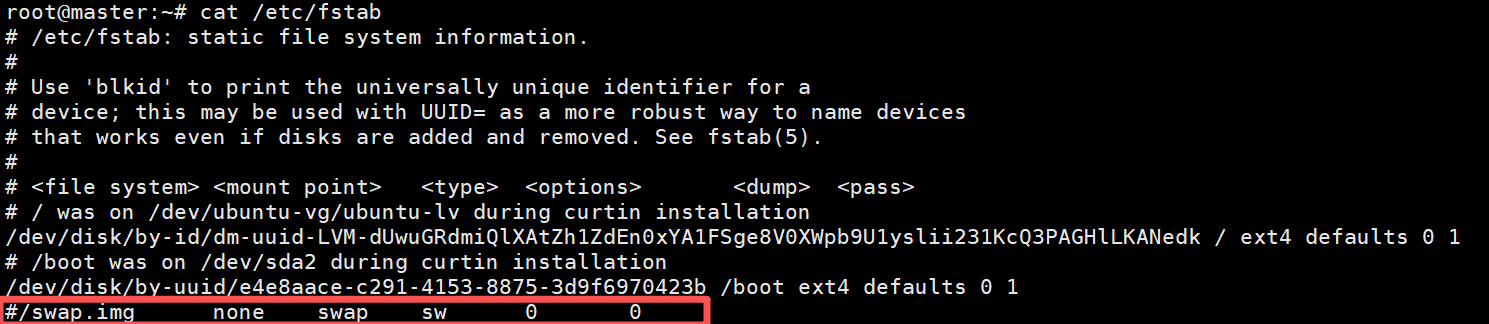

1.1 Disable swap

In /etc/fstab, comment out the swap mount entries.
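If you would rather script the fstab edit than do it by hand, something like the following could work. It is only a sketch: `comment_out_swap` is a name invented here, and it assumes the usual fstab layout where the third field is the filesystem type.

```shell
# comment_out_swap: read fstab content on stdin and write it to stdout with
# every active swap entry commented out. Lines that are already comments are
# passed through unchanged.
comment_out_swap() {
  awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }'
}

# Example (keeps a backup first):
#   sudo cp /etc/fstab /etc/fstab.bak
#   comment_out_swap < /etc/fstab.bak | sudo tee /etc/fstab >/dev/null
```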

Then run:

swapoff -a

1.2 Configure the br_netfilter kernel module

sudo modprobe br_netfilter
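Note that `modprobe` only loads the module until the next reboot. systemd loads the modules listed in /etc/modules-load.d/*.conf at boot, so one way to make this persistent is sketched below. The function name `persist_module` and the file name `k8s.conf` are choices made for this example, not something the original setup requires.

```shell
# persist_module MODULE [DIR]: write a modules-load.d entry so that MODULE is
# loaded at boot. DIR defaults to /etc/modules-load.d (writing there needs root).
persist_module() {
  local module="$1" dir="${2:-/etc/modules-load.d}"
  printf '%s\n' "$module" > "$dir/k8s.conf"
}

# Example (as root):
#   persist_module br_netfilter
```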

#写入内核参数配置文件(已包含 flannel 所需的桥接转发参数)

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv4.ip_forward = 1

EOF

# 生效内核参数(无需重启,立即生效)

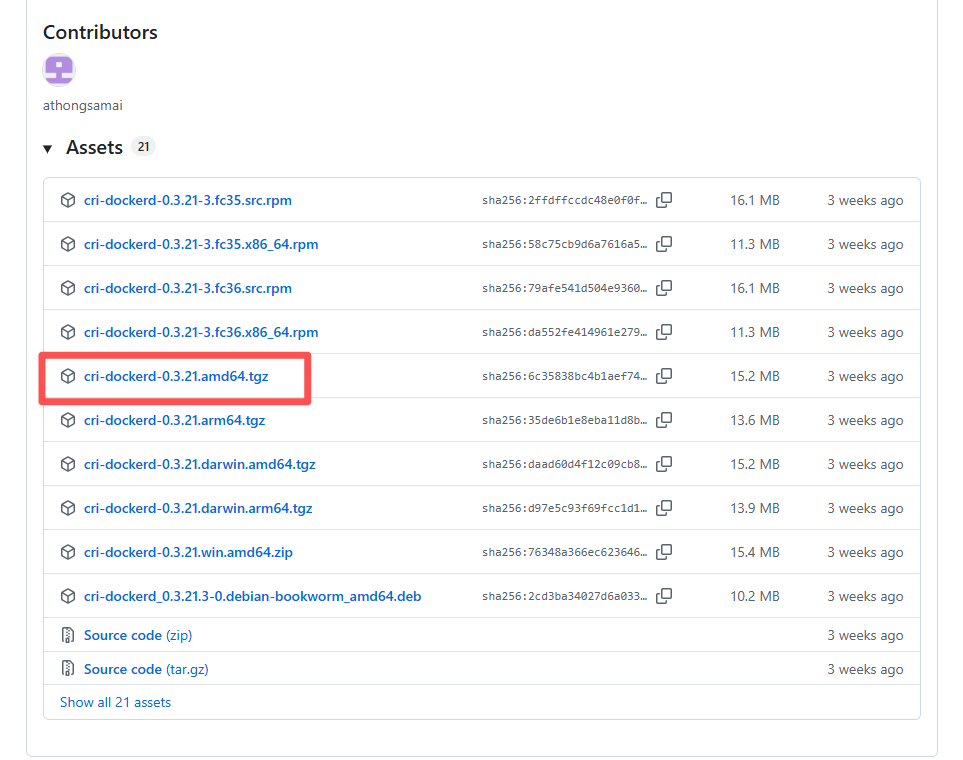

sudo sysctl --system1.3 安装cri-docker

Release packages: https://github.com/Mirantis/cri-dockerd/releases

After downloading, extract the archive and install the binary into /usr/bin:

tar xvf cri-dockerd-0.3.21.amd64.tgz
install -o root -g root -m 0755 ./cri-dockerd/cri-dockerd /usr/bin/cri-dockerd

Create the unit file /etc/systemd/system/cri-docker.service:

sudo tee /etc/systemd/system/cri-docker.service <<-'EOF'
[Unit]
Description=CRI Interface for Docker Application Container Engine
Documentation=https://docs.mirantis.com
After=network-online.target firewalld.service docker.service
Wants=network-online.target
Requires=cri-docker.socket
[Service]
Type=notify
ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutSec=0
RestartSec=2
Restart=always
StartLimitBurst=3
StartLimitInterval=60s
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process
[Install]
WantedBy=multi-user.target
EOF

Create the socket unit file /etc/systemd/system/cri-docker.socket:

sudo tee /etc/systemd/system/cri-docker.socket <<-'EOF'
[Unit]
Description=CRI Docker Socket for the API
PartOf=cri-docker.service
[Socket]
ListenStream=%t/cri-dockerd.sock
SocketMode=0660
SocketUser=root
SocketGroup=docker
[Install]
WantedBy=sockets.target
EOF

Start cri-docker:

systemctl daemon-reload
systemctl enable --now cri-docker.socket

1.4 Install the packages

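- kubeadm: bootstraps the cluster

- kubelet: runs on every node in the cluster and starts pods and containers

- kubectl: the command-line tool for talking to the cluster

We install from a mirror in China. The commands below install Kubernetes 1.34; to install a different version, change the version number in the commands.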

apt-get update && apt-get install -y apt-transport-https
curl -fsSL https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.34/deb/Release.key | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
echo "deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://mirrors.aliyun.com/kubernetes-new/core/stable/v1.34/deb/ /" | tee /etc/apt/sources.list.d/kubernetes.list
apt-get update
apt-get install -y kubelet kubeadm kubectl
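After the install it can be worth pinning the three packages so that a routine `apt-get upgrade` does not move the cluster to a new version unexpectedly, and confirming that the installed kubeadm matches the minor version you intended. The sketch below assumes you run it as root; the function names are invented for this example.

```shell
# k8s_minor VERSION: reduce a version such as "v1.34.2" to "1.34".
k8s_minor() {
  local v="${1#v}"                       # drop a leading "v" if present
  printf '%s\n' "$v" | cut -d. -f1,2     # keep major.minor only
}

# hold_k8s_packages: stop apt from upgrading the Kubernetes packages automatically.
hold_k8s_packages() {
  apt-mark hold kubelet kubeadm kubectl
}

# check_kubeadm_minor WANT: succeed only if the installed kubeadm is version WANT.x.
check_kubeadm_minor() {
  local want="$1" got
  got="$(k8s_minor "$(kubeadm version -o short)")"
  [ "$got" = "$want" ]
}

# Example:
#   hold_k8s_packages
#   check_kubeadm_minor 1.34 && echo "kubeadm is 1.34.x"
```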

1.5 Configure kubeadm on the master node

Create the file kubeadm_init.yaml (change the IP to that of your own master node):

sudo tee ./kubeadm_init.yaml <<-'EOF'

apiVersion: kubeadm.k8s.io/v1beta4

bootstrapTokens:

- groups:

- system:bootstrappers:kubeadm:default-node-token

token: abcdef.0123456789abcdef

ttl: 24h0m0s

usages:

- signing

- authentication

kind: InitConfiguration

localAPIEndpoint:

advertiseAddress: 192.168.200.201 #更改为自己的masterIP

bindPort: 6443

nodeRegistration:

criSocket: unix:///var/run/cri-dockerd.sock

imagePullPolicy: IfNotPresent

taints: null

---

apiVersion: kubeadm.k8s.io/v1beta4

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

etcd:

local:

dataDir: /var/lib/etcd

imageRepository: registry.aliyuncs.com/google_containers

kind: ClusterConfiguration

kubernetesVersion: 1.34.2 #k8s版本号

networking:

dnsDomain: cluster.local

serviceSubnet: 10.96.0.0/12

podSubnet: 10.244.0.0/16

scheduler: {}

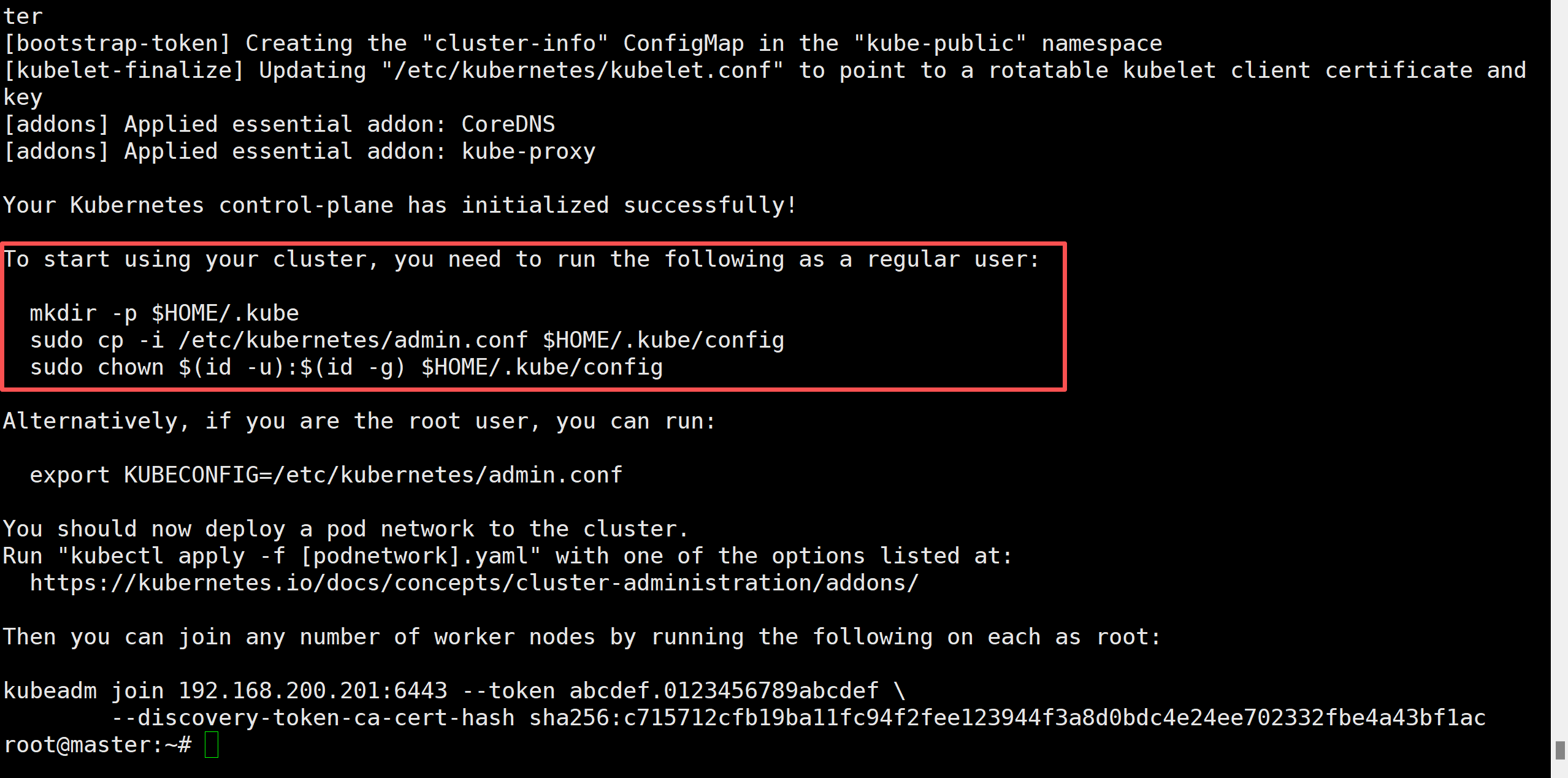

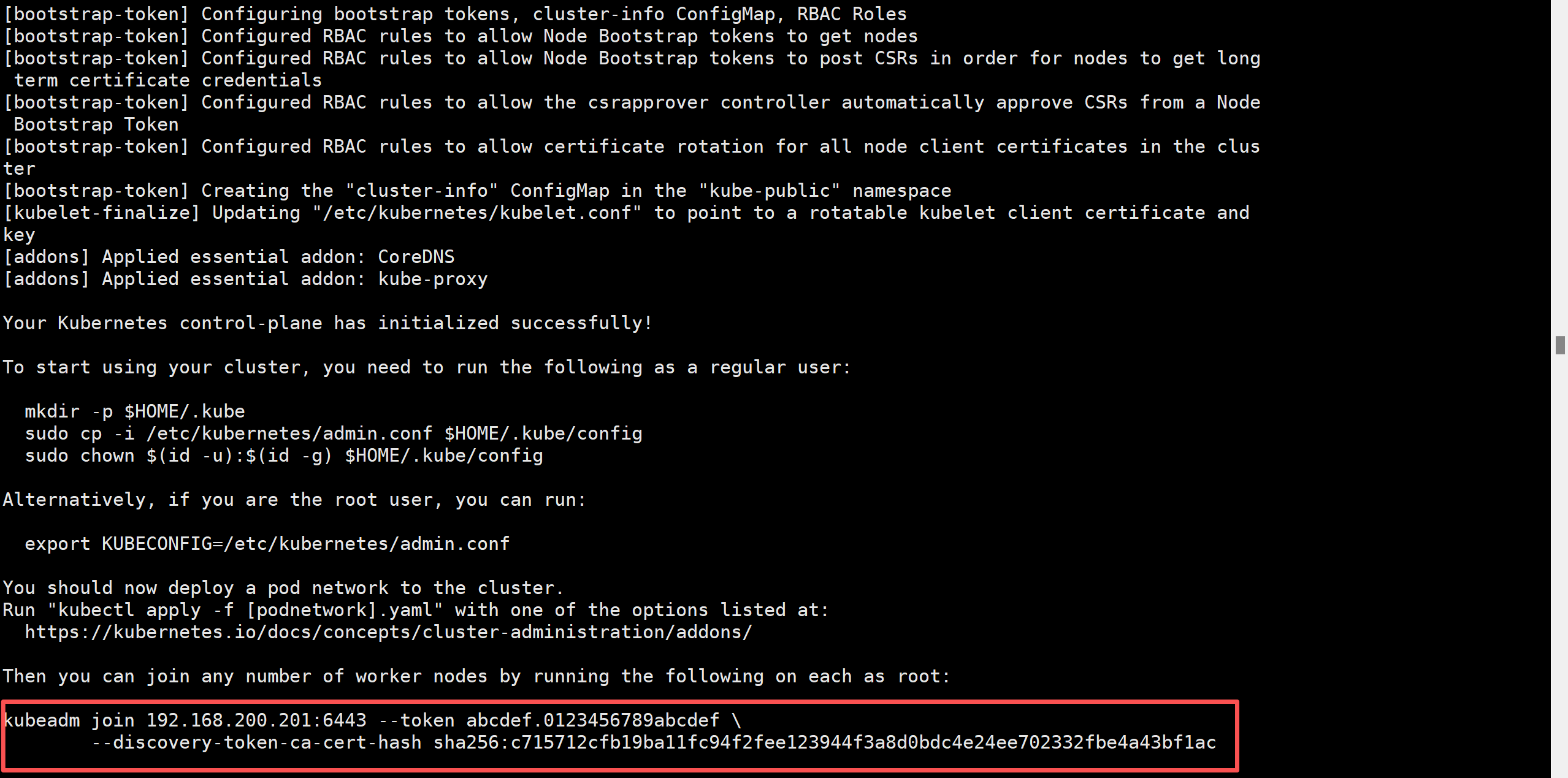

EOF1.6 在master上初始化k8s集群

kubeadm init --config ./kubeadm_init.yaml

After it succeeds, copy the config file to the indicated directory as the output instructs:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

1.7 Configure the cluster network on the master

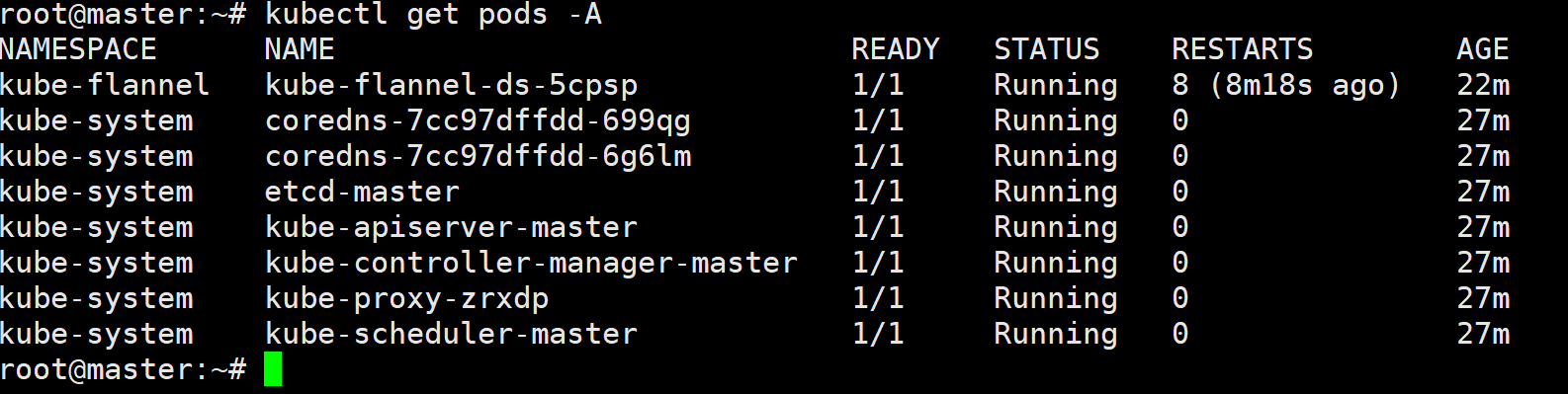

Here we use Flannel as the pod network:

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

Then check that the CoreDNS pods are in the Running state:

kubectl get pods -A

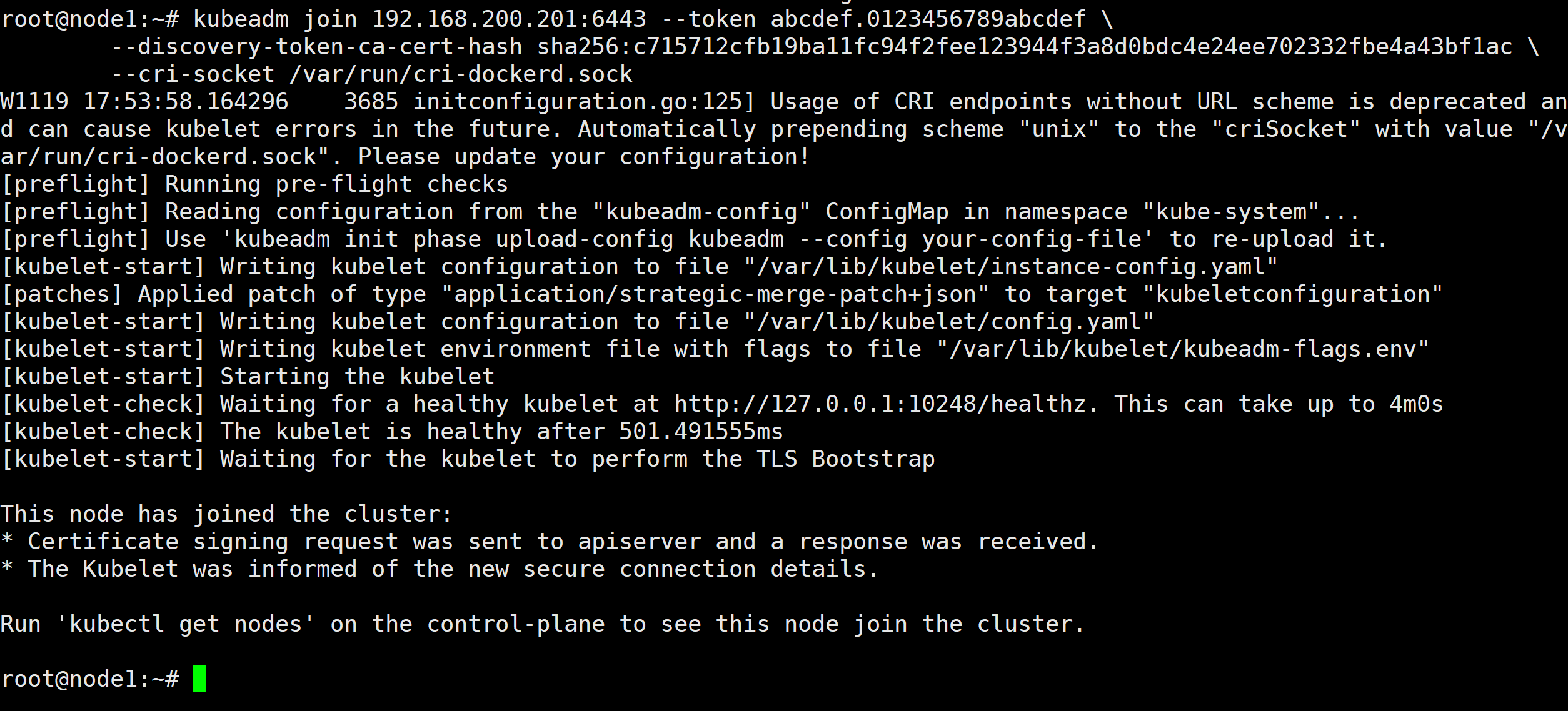
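Scanning the full pod list by eye gets tedious, so here is a small filter that prints only the pods that are not yet up. It is a coarse sketch: `pods_not_running` is a name made up for this example, and it looks only at the STATUS column, so a pod that is Running but not fully Ready will not be reported.

```shell
# pods_not_running: read `kubectl get pods -A --no-headers` output on stdin
# (columns: NAMESPACE NAME READY STATUS ...) and print "namespace/name status"
# for every pod whose status is neither Running nor Completed.
pods_not_running() {
  awk 'NF >= 4 && $4 != "Running" && $4 != "Completed" { print $1 "/" $2, $4 }'
}

# Example:
#   kubectl get pods -A --no-headers | pods_not_running
```

Empty output means every pod reports Running or Completed.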

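1.8 Join the worker nodes to the cluster

Run the following command on both worker nodes.

Two container runtime endpoints exist on each node (containerd, which is installed as a dependency of Docker, and the cri-dockerd we installed manually), so we have to tell kubeadm to use the cri-dockerd socket. Use the IP and hash that belong to your own master.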

kubeadm join 192.168.200.201:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:c715712cfb19ba11fc94f2fee123944f3a8d0bdc4e24ee702332fbe4a43bf1ac \
  --cri-socket unix:///var/run/cri-dockerd.sock # selects the cri-dockerd runtime endpoint
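If you no longer have the `kubeadm init` output, the hash can be recomputed on the master from the cluster CA certificate. The sketch below uses the openssl pipeline from the kubeadm documentation and assumes the default certificate path and an RSA CA key. `ca_cert_hash` and `join_command` are names invented for this example. Alternatively, `kubeadm token create --print-join-command` on the master prints a fresh join command, to which you would still append the `--cri-socket` flag.

```shell
# ca_cert_hash [CERT]: print the sha256 hash of the CA public key (run on the master).
ca_cert_hash() {
  local cert="${1:-/etc/kubernetes/pki/ca.crt}"
  openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'
}

# join_command HOST TOKEN HASH: assemble the join command for a worker node,
# pointing kubeadm at the cri-dockerd socket.
join_command() {
  printf 'kubeadm join %s:6443 --token %s --discovery-token-ca-cert-hash sha256:%s --cri-socket unix:///var/run/cri-dockerd.sock\n' "$1" "$2" "$3"
}

# Example (on the master):
#   join_command 192.168.200.201 abcdef.0123456789abcdef "$(ca_cert_hash)"
```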

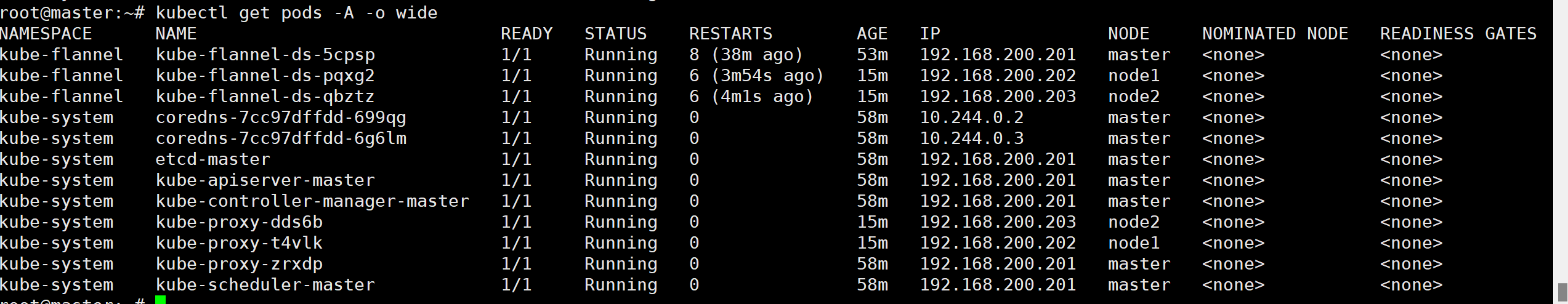

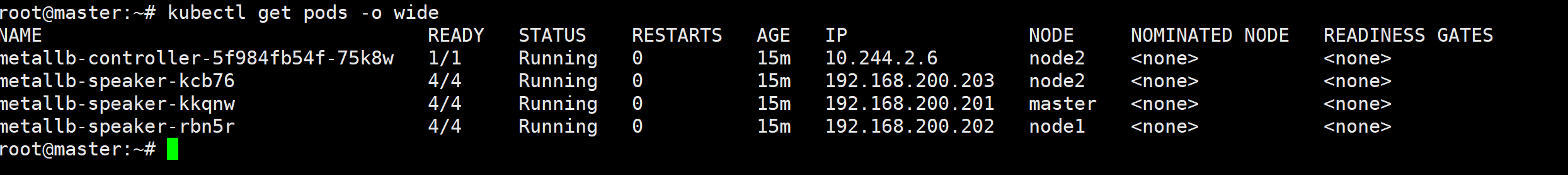

On the master, check whether the pods are Running:

kubectl get pods -A -o wide

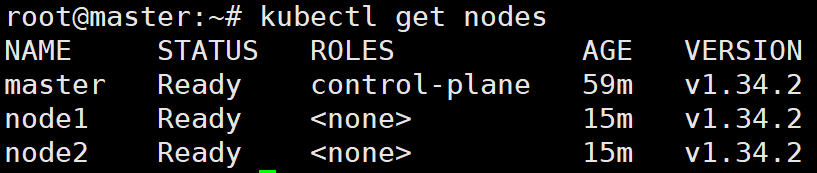

List all nodes in the cluster:

kubectl get nodes

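2 Install Kubernetes add-ons

With the cluster up, we still need to install four components:

- helm: a package manager, similar to apt

- dashboard: a web UI that gives a more direct view of the resources in the cluster

- nginx ingress: the entry point for traffic into the cluster, comparable to an nginx sitting in front of the whole cluster

- metallb: lets Services of type LoadBalancer bind to LAN IP addresses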

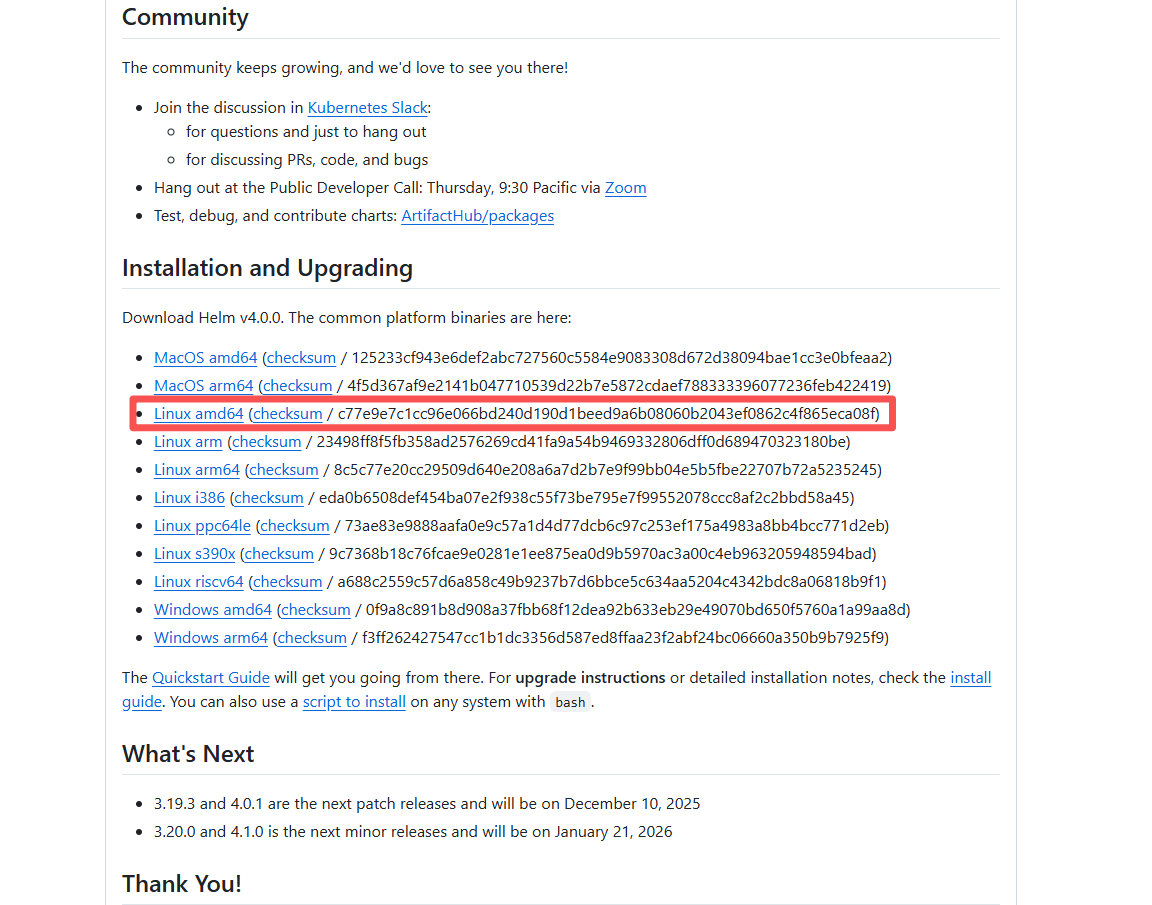

2.1 helm

Download the latest release package and upload it to the master node, then unpack and install it:

tar xvf helm-v4.0.0-linux-amd64.tar.gz
mv linux-amd64/helm /usr/bin/

2.2 dashboard

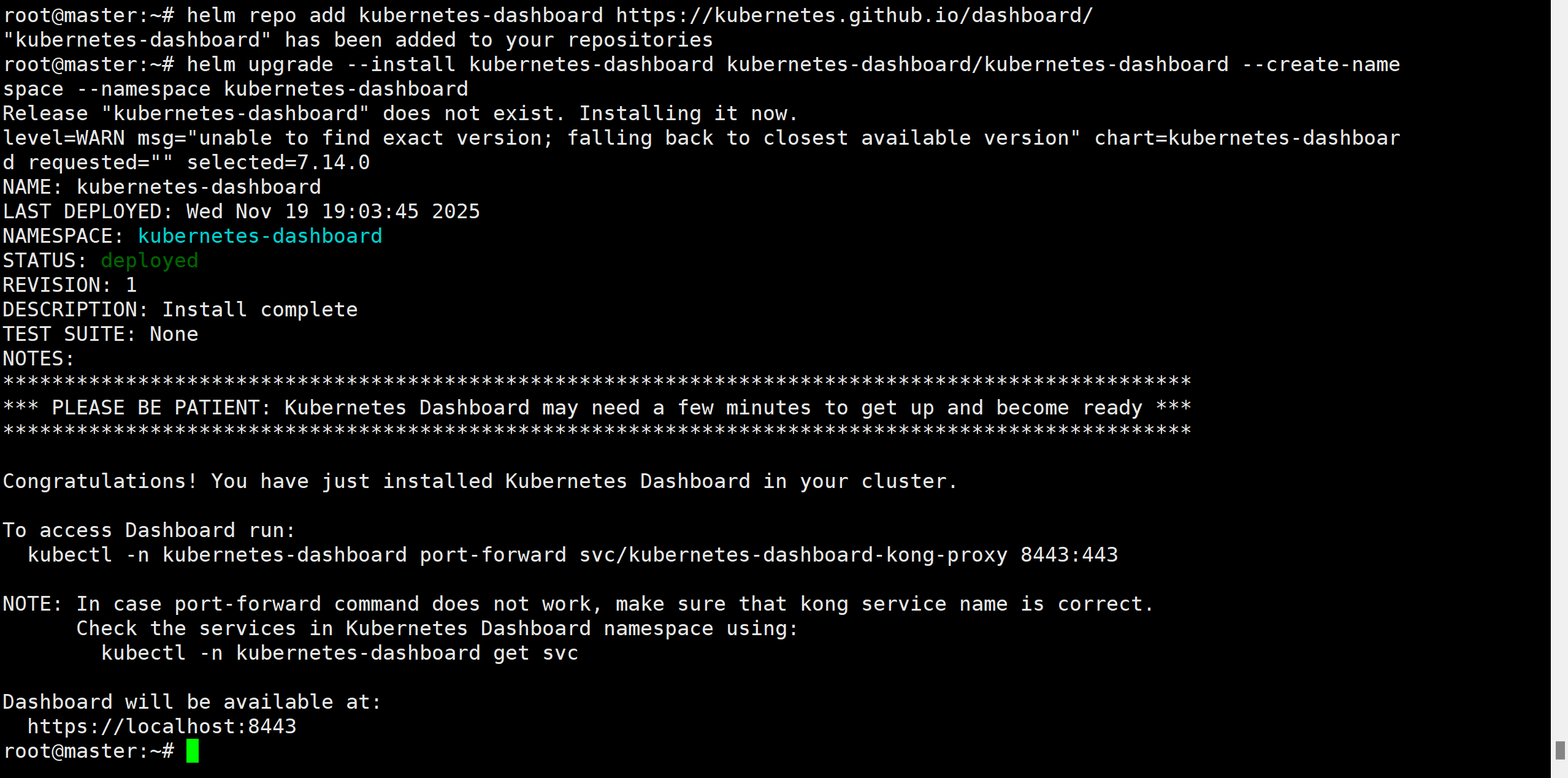

Here we install it with helm:

# Add the repository
helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

# Install or upgrade the dashboard
helm upgrade --install kubernetes-dashboard kubernetes-dashboard/kubernetes-dashboard --create-namespace --namespace kubernetes-dashboard

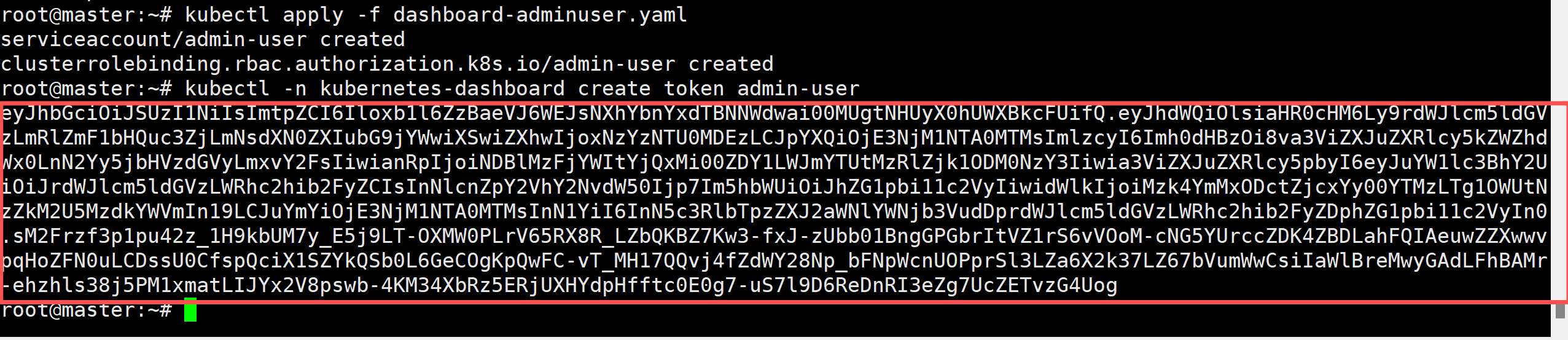

Create a service account. Create the file dashboard-adminuser.yaml:

sudo tee ./dashboard-adminuser.yaml <<-'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

Apply it to the cluster:

kubectl apply -f dashboard-adminuser.yaml

Create a token for logging in to the web UI:

kubectl -n kubernetes-dashboard create token admin-user

The content in the red box is the token; copy it and keep it for later.

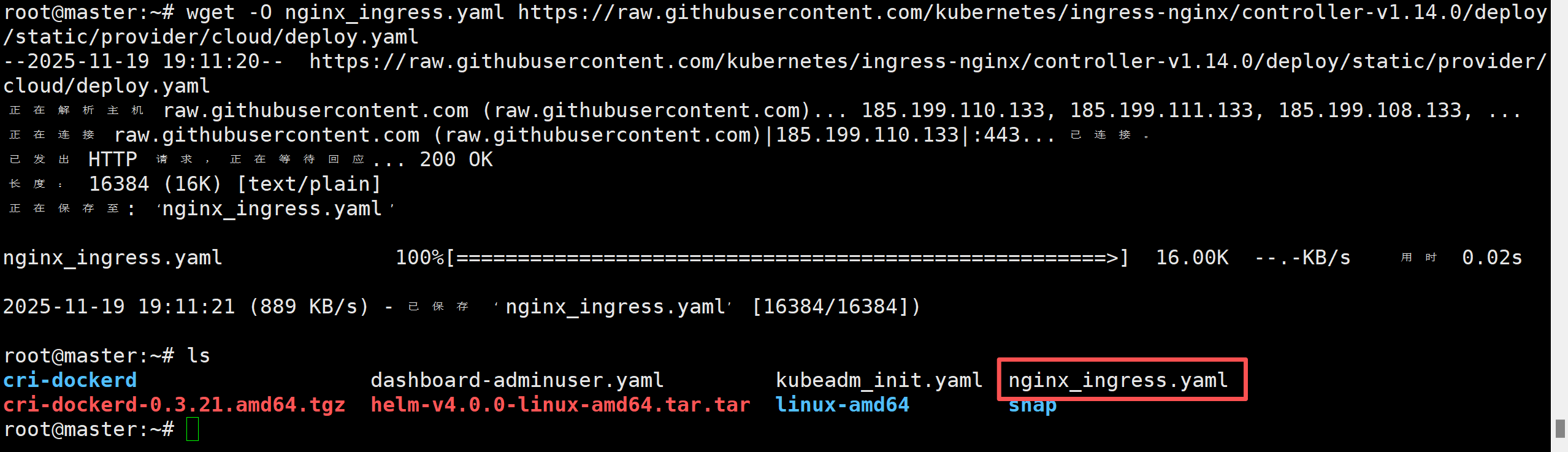

2.3 ingress

Project page: kubernetes/ingress-nginx on GitHub (Ingress NGINX Controller for Kubernetes)

Download the YAML manifest:

wget -O nginx_ingress.yaml https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.14.0/deploy/static/provider/cloud/deploy.yaml

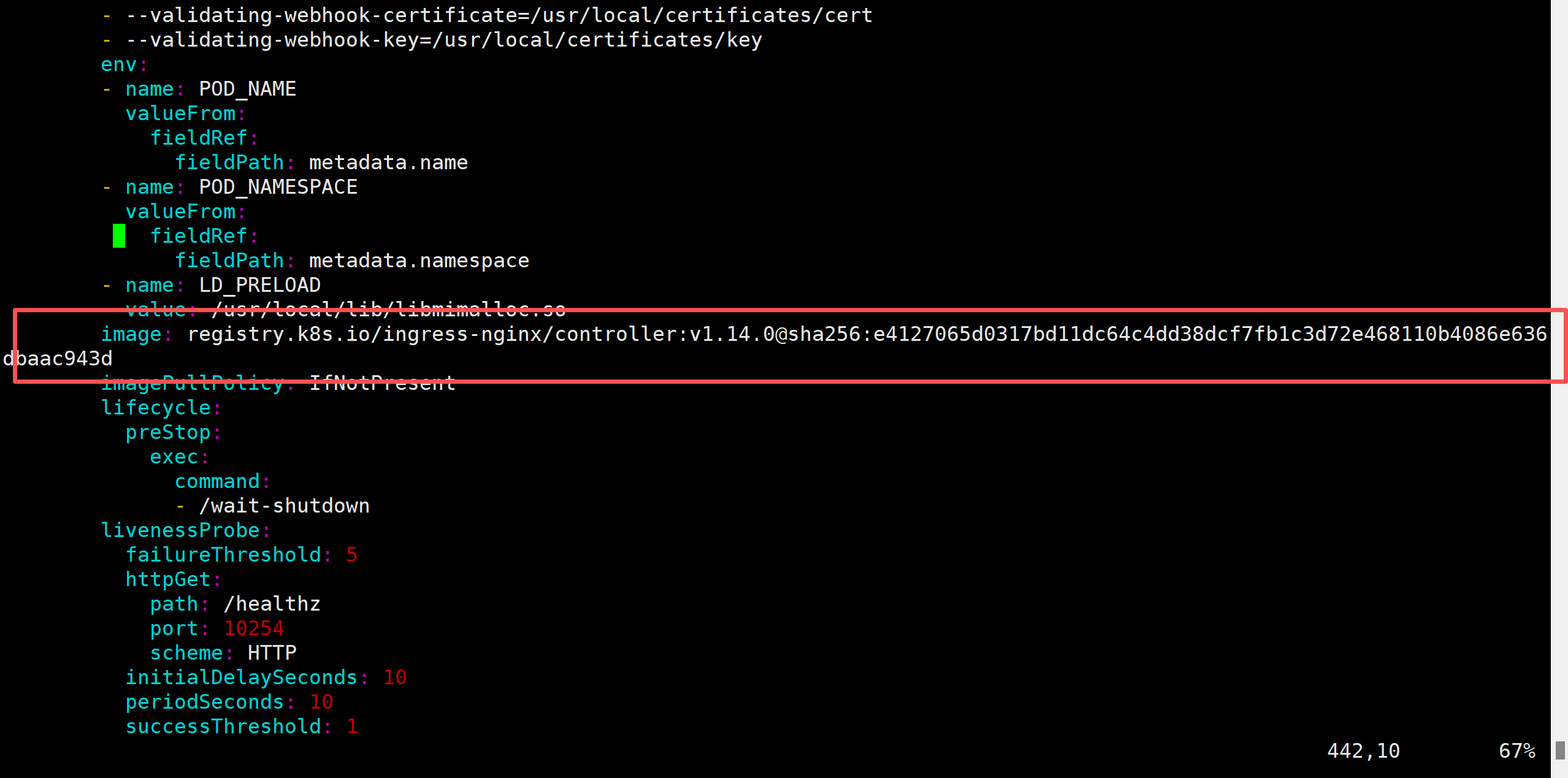

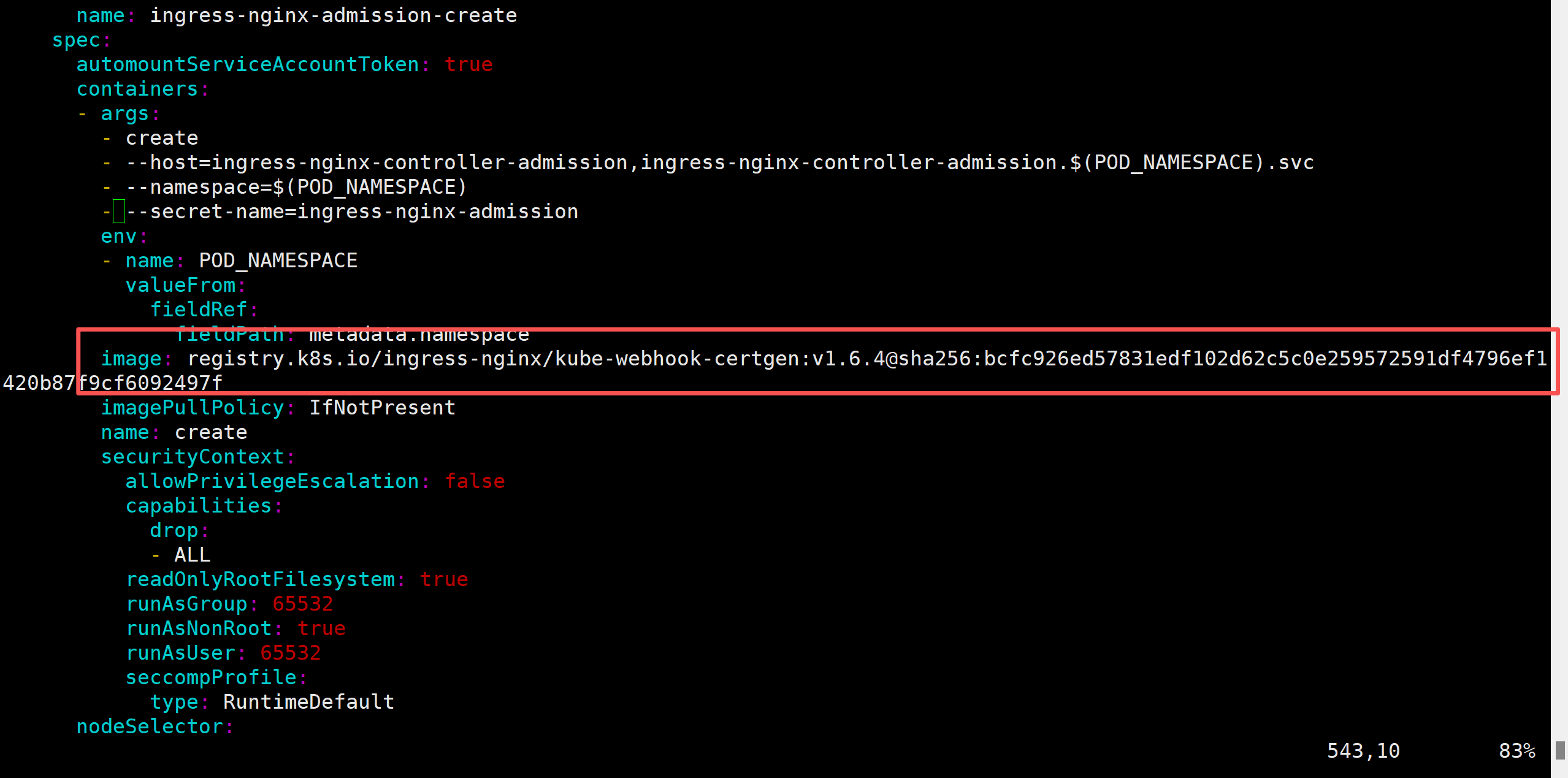

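The manifest pulls its images from a registry outside China (registry.k8s.io), so here we switch them to a mirror in China.

First note the two image version numbers in the file.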
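Rather than scrolling through the manifest, the two image references can be pulled out with grep. This is a minimal sketch: `list_ingress_images` is a name chosen for this example, and the pattern assumes the images live under registry.k8s.io/ingress-nginx/ with tags of the form vX.Y.Z, as in the manifest downloaded above.

```shell
# list_ingress_images FILE: print the unique registry.k8s.io/ingress-nginx image
# references (name:tag, with any @sha256 digest stripped) found in a manifest.
list_ingress_images() {
  grep -oE 'registry\.k8s\.io/ingress-nginx/[a-z-]+:v[0-9]+(\.[0-9]+)*' "$1" | sort -u
}

# Example:
#   list_ingress_images nginx_ingress.yaml
```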

Then set the tags of the mirror images to those two version numbers:

sed -i "s|registry.k8s.io/ingress-nginx/controller:v.*|registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.14.0|" nginx_ingress.yaml
sed -i "s|registry.k8s.io/ingress-nginx/kube-webhook-certgen:v.*|registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.6.4|" nginx_ingress.yaml

Apply it to the cluster:

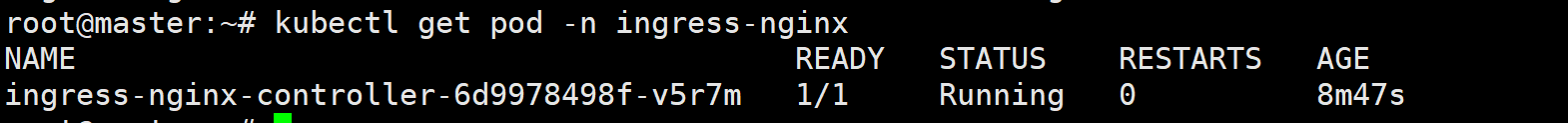

kubectl apply -f nginx_ingress.yaml

Check the pod status:

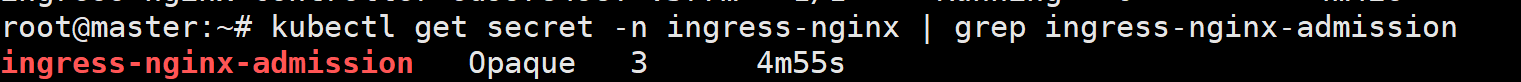

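kubectl get pod -n ingress-nginx

The install has succeeded once the controller pod is Running. The admission pods disappear after they finish; you can confirm that their secret exists with: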

kubectl get secret -n ingress-nginx | grep ingress-nginx-admission

2.4 MetalLB

Here we install it with helm:

# Add the repo
helm repo add metallb https://metallb.github.io/metallb

# Install
helm install metallb metallb/metallb

Check the pod status:

kubectl get pods

创建配置模板meatllb.yaml

#使用闲置IP地址池

sudo tee ./meatllb.yaml <<-'EOF'

apiVersion: metallb.io/v1beta1

kind: IPAddressPool

metadata:

name: first-pool

spec:

addresses:

- 192.168.200.233-192.168.200.253

---

apiVersion: metallb.io/v1beta1

kind: L2Advertisement

metadata:

name: example

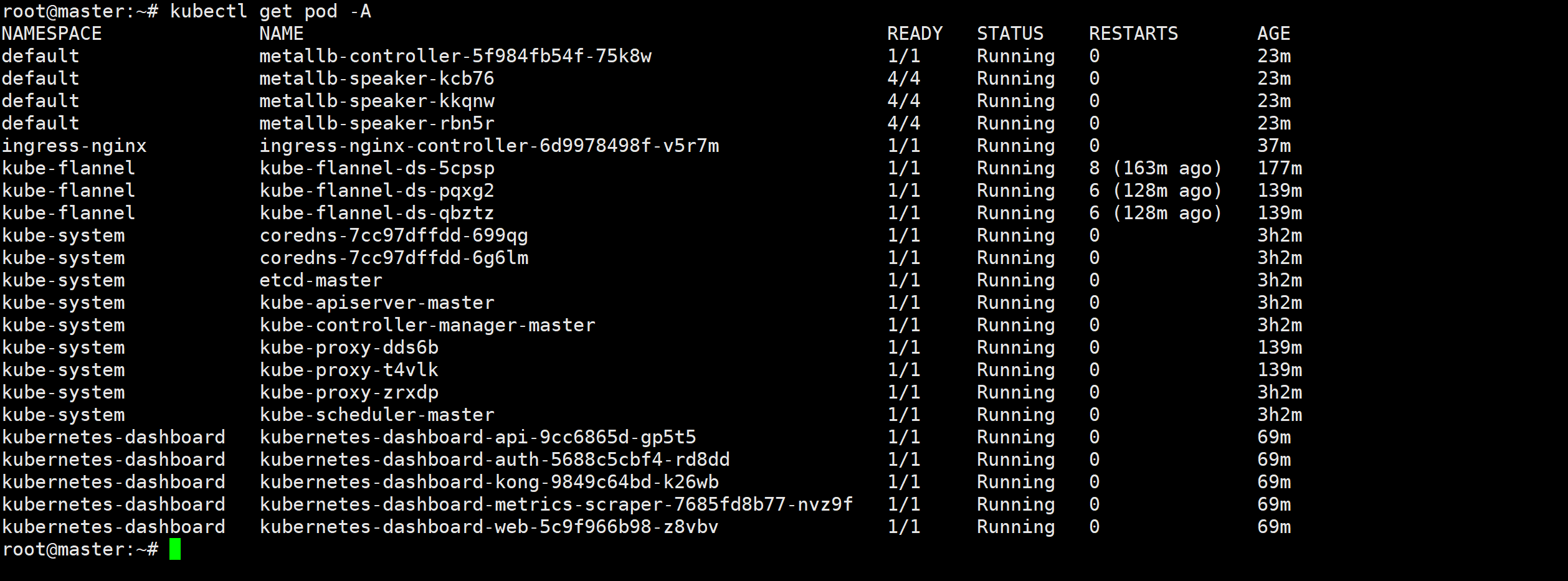

EOF查看所有节点,目前四个组件已运行成功

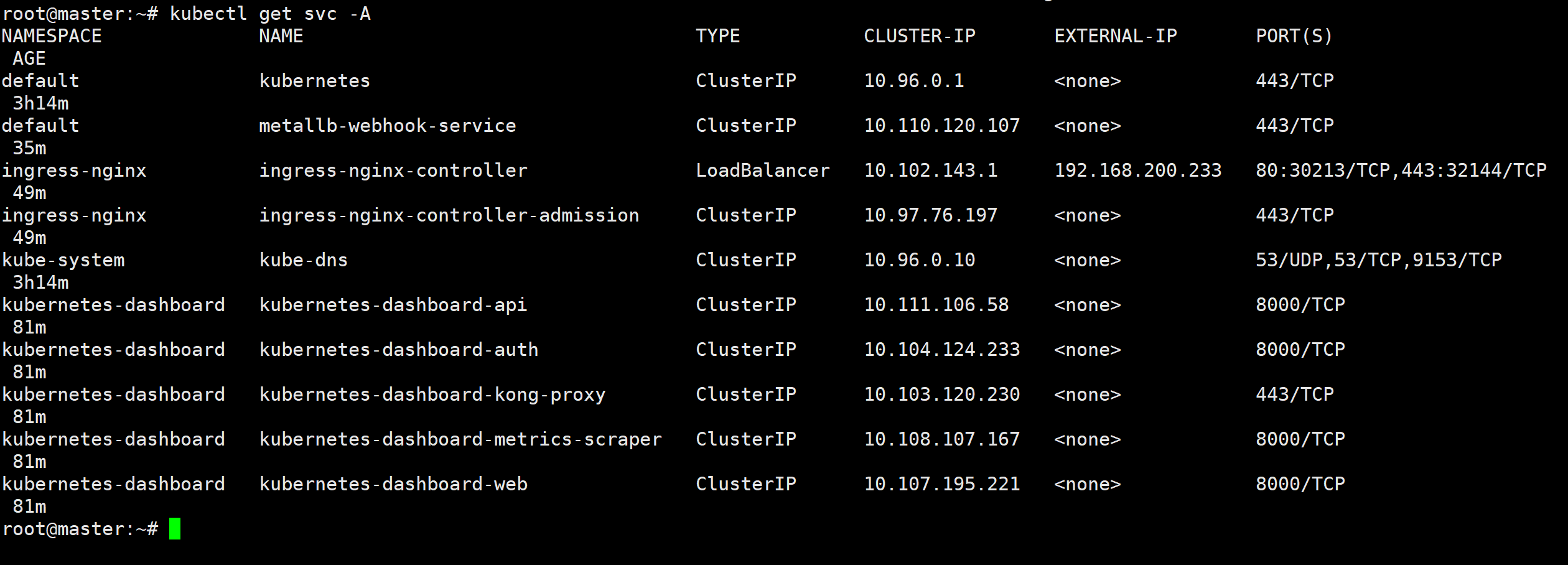

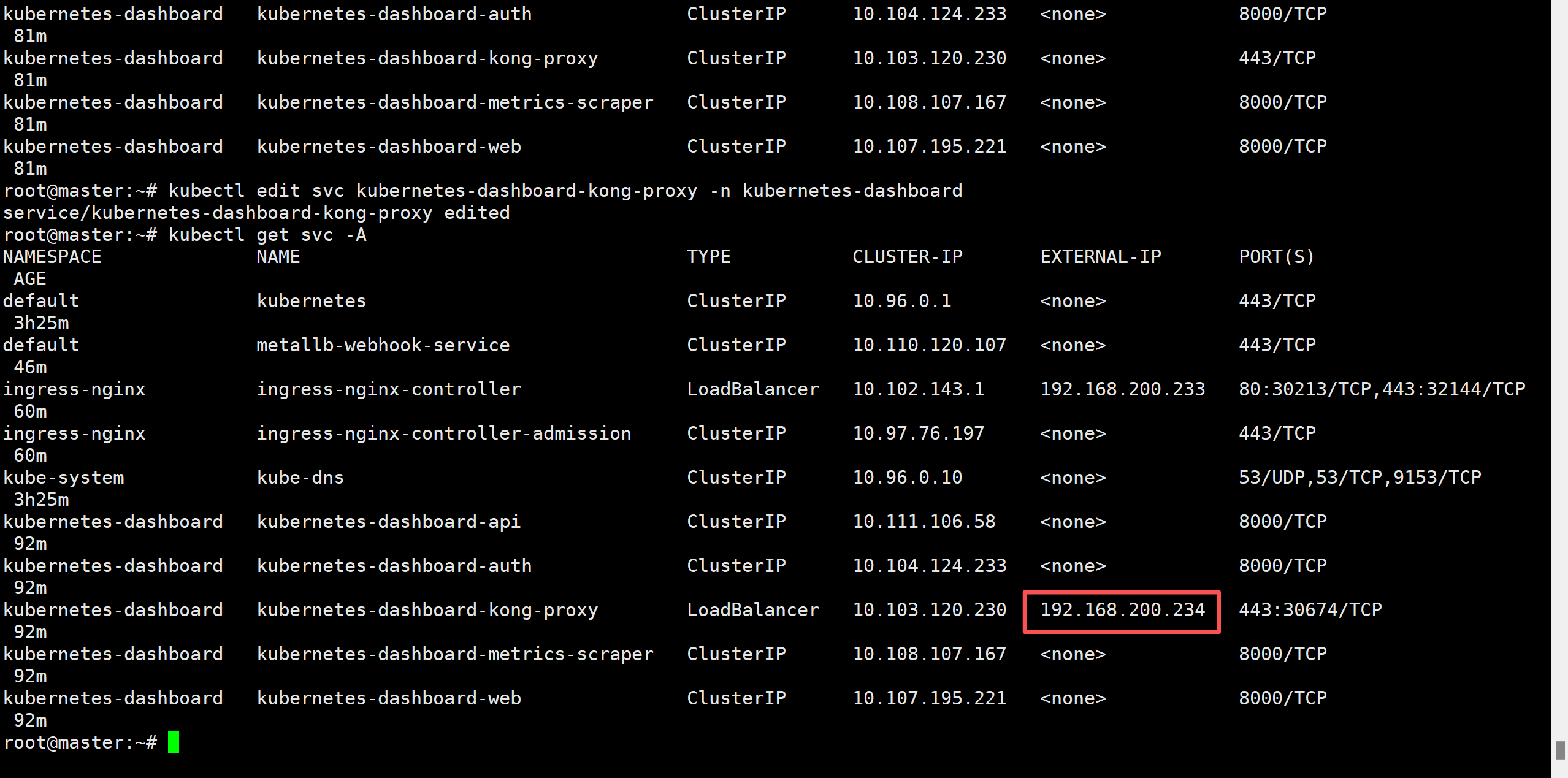

List the services:

kubectl get svc -A

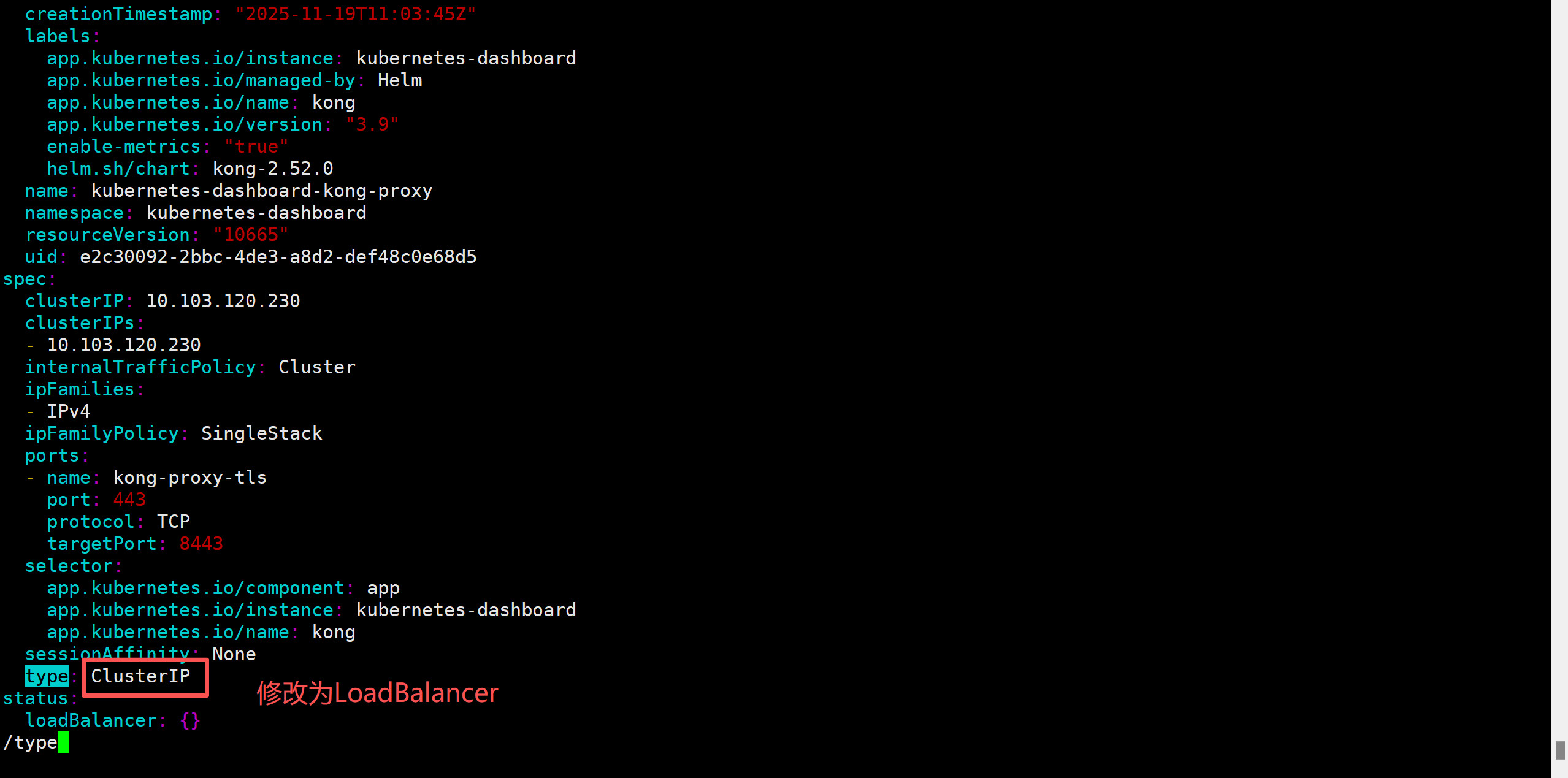

We need to change the Service type of kubernetes-dashboard-kong-proxy to LoadBalancer:

kubectl edit svc kubernetes-dashboard-kong-proxy -n kubernetes-dashboard
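`kubectl edit` opens an interactive editor. If you want the same change in a script, `kubectl patch` can set the type directly. The sketch below is one way to wrap it; `service_type_patch` and `set_service_type` are names invented for this example.

```shell
# service_type_patch TYPE: print a patch body that sets spec.type to TYPE.
service_type_patch() {
  printf '{"spec":{"type":"%s"}}\n' "$1"
}

# set_service_type NAMESPACE SERVICE TYPE: apply that patch to a Service.
set_service_type() {
  kubectl patch svc "$2" -n "$1" -p "$(service_type_patch "$3")"
}

# Example:
#   set_service_type kubernetes-dashboard kubernetes-dashboard-kong-proxy LoadBalancer
```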

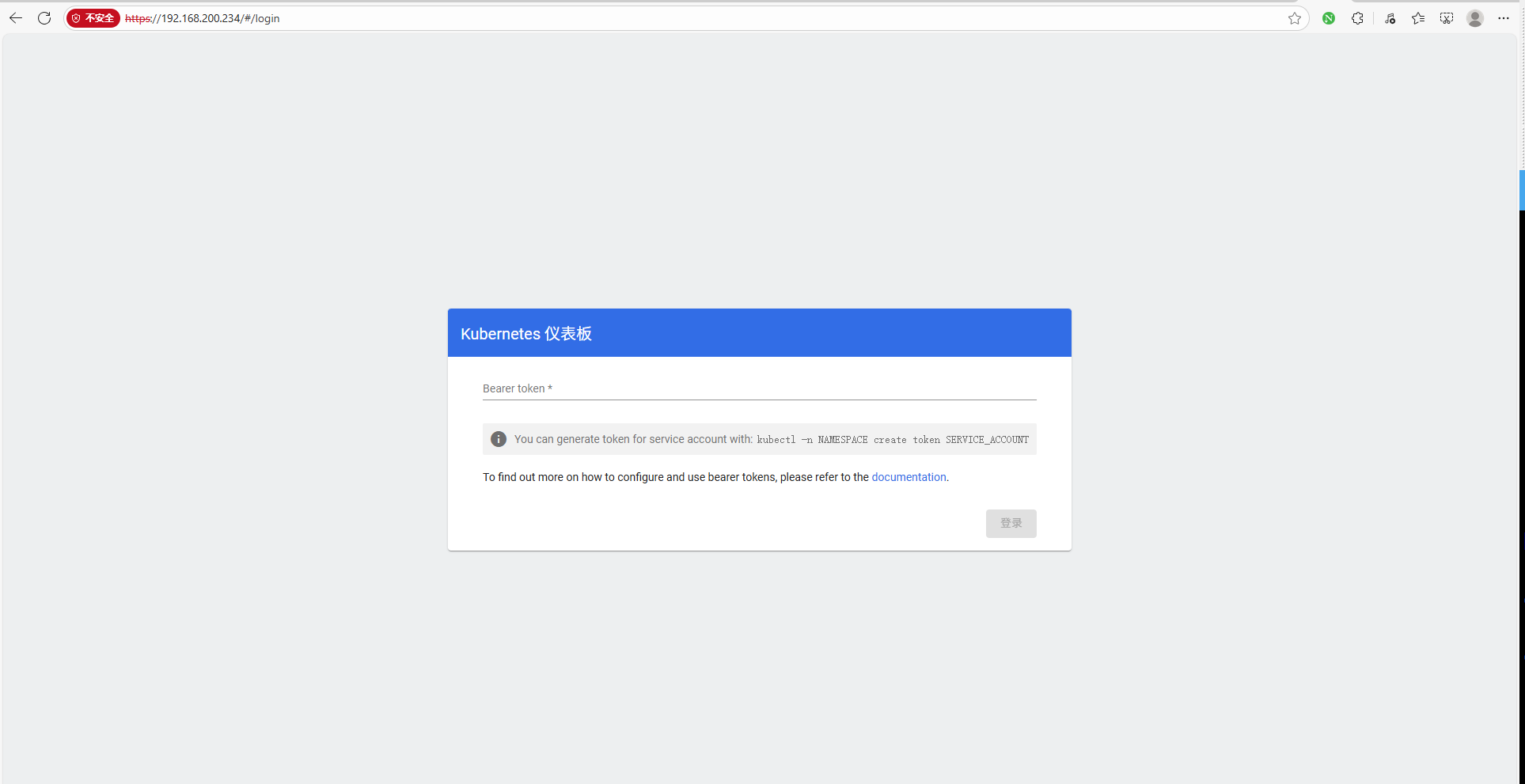

The service now exposes an IP from the address pool. Open that address on port 443 to verify.

Paste in the token saved earlier (or generate a new one):

kubectl -n kubernetes-dashboard create token admin-user

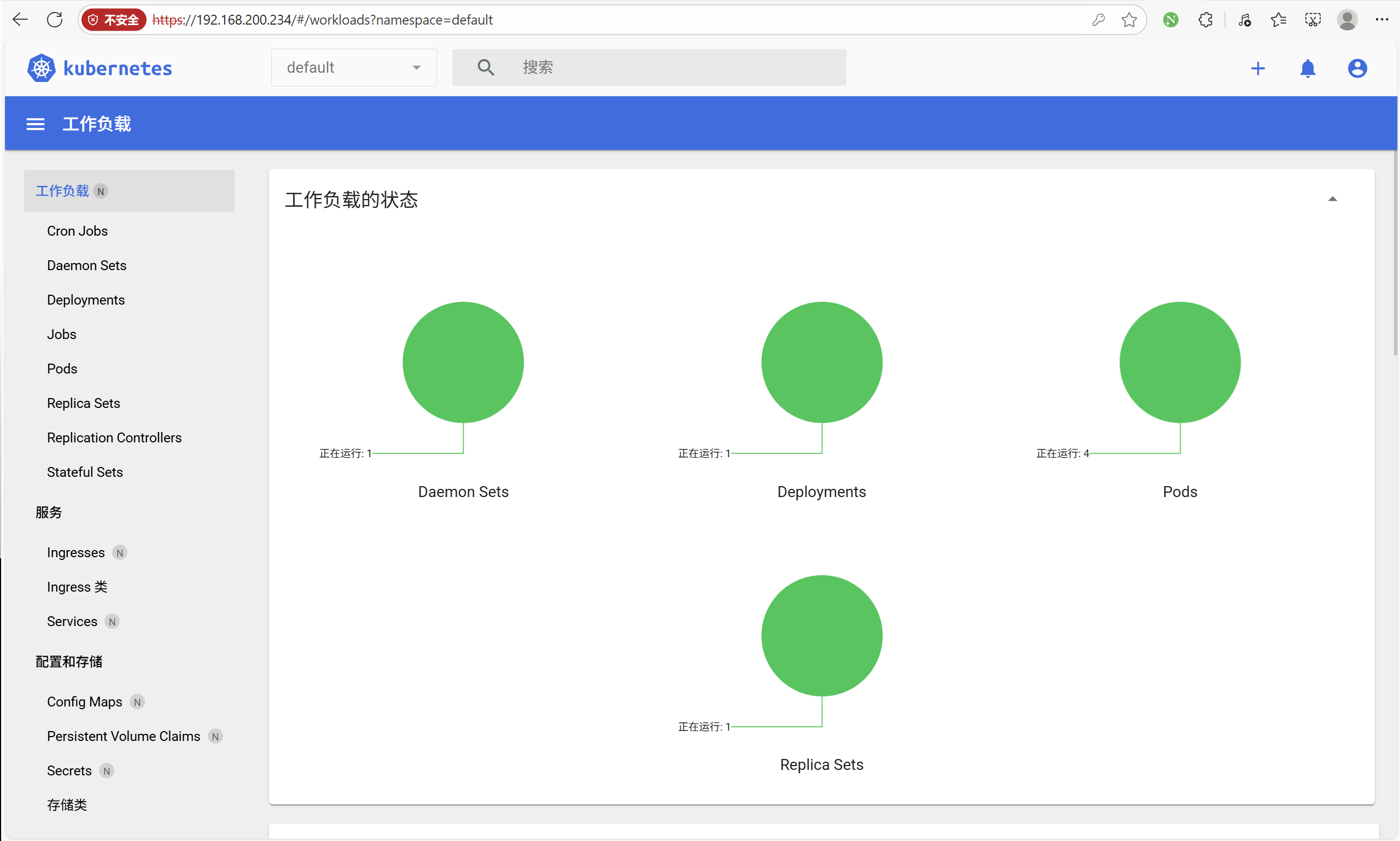

The dashboard login succeeds. The same approach applies to ingress-nginx.

The basic Kubernetes environment is now deployed.
