Cloud-based LLMOps platforms are convenient, but they ignore the biggest risks for enterprises. Here’s how a private, open-source approach changes the game.

The term “LLMOps” is everywhere. It promises to bring order to the chaotic world of large language models, streamlining everything from data preparation and fine-tuning to deployment and monitoring. But as enterprises rush to adopt these practices, they often overlook the elephant in the room: Where are your AI assets actually located?

For many, the default answer is the cloud, using convenient SaaS platforms. While this model is great for getting started, it introduces fundamental risks that no serious enterprise can afford to ignore. Handing over your most proprietary data, your custom-trained models, and your core intellectual property to a third-party cloud environment creates unavoidable vulnerabilities in security, compliance, and cost control.

True enterprise-grade LLMOps requires a different approach — one that puts control back where it belongs: in your hands.

The Hidden Risks of a Cloud-Only LLMOps Strategy

Relying solely on external, cloud-based platforms for your LLMOps pipeline isn’t just a technical choice; it’s a business risk. Here’s why:

- Data Sovereignty and Security: Your data is your ultimate competitive advantage. For industries like finance, healthcare, and government, sending sensitive customer or operational data to an external server for fine-tuning is a compliance nightmare and a major security risk.

- Vendor Lock-In: Once your models, datasets, and workflows are deeply integrated into a specific SaaS platform, migrating away becomes technically complex and prohibitively expensive. You become dependent on their pricing, feature roadmap, and terms of service.

- Spiraling and Unpredictable Costs: Pay-as-you-go pricing is hard to forecast. Charges for GPU compute, model storage, and especially data egress (moving data out of the cloud) scale with usage rather than with a fixed budget, so a heavy month of fine-tuning or data movement can strain your AI budget.

- Limited Customization: SaaS platforms offer a standardized environment. Deeply integrating models with your existing on-premise data warehouses, legacy systems, or bespoke security protocols is often difficult, if not impossible.
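
To make the cost point concrete, here is a minimal back-of-the-envelope sketch in Python. The rates and usage figures are hypothetical placeholders, not quotes from any provider; the only point is that the egress line item can dominate the change in a bill when data movement grows.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CloudRates:
    """Hypothetical pay-as-you-go prices in USD; substitute your provider's real rates."""
    gpu_hour: float          # price per GPU-hour
    storage_gb_month: float  # price per GB stored per month
    egress_gb: float         # price per GB transferred out of the cloud


def monthly_cost(rates: CloudRates, gpu_hours: float, stored_gb: float,
                 egress_gb: float) -> dict[str, float]:
    """Break one month's bill into compute, storage, and egress components."""
    costs = {
        "compute": gpu_hours * rates.gpu_hour,
        "storage": stored_gb * rates.storage_gb_month,
        "egress": egress_gb * rates.egress_gb,
    }
    costs["total"] = sum(costs.values())
    return costs


# Illustrative numbers only (assumptions, not vendor pricing).
rates = CloudRates(gpu_hour=2.50, storage_gb_month=0.02, egress_gb=0.09)

# Same compute and storage; only the amount of data moved out of the cloud differs.
quiet = monthly_cost(rates, gpu_hours=400, stored_gb=2_000, egress_gb=500)
busy = monthly_cost(rates, gpu_hours=400, stored_gb=2_000, egress_gb=20_000)

for label, bill in (("quiet", quiet), ("busy", busy)):
    print(label, {name: round(value, 2) for name, value in bill.items()})
```

Plugging in your own rates and a few usage scenarios gives a rough range for the monthly bill before you commit a pipeline to a platform.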


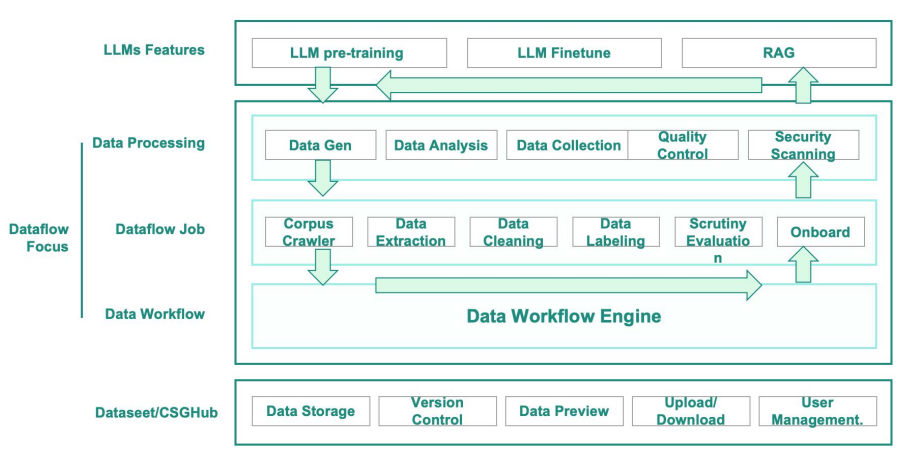

The Solution: CSGHub, Your On-Premise LLMOps Foundation

To address these challenges, you need a platform designed for privacy, security, and control. This is exactly why we built CSGHub, the open-source (Apache 2.0) core of the OpenCSG ecosystem.

Think of CSGHub as a private data center for all your AI assets. It’s an on-premise, enterprise-grade alternative to platforms like Hugging Face, built specifically for the demands of secure LLMOps.

Here’s how CSGHub provides a solid foundation for your on-premise LLMOps strategy:

- Deploy Anywhere, Control Everything: Install CSGHub within your own firewall, on your own servers, or in your private cloud. Your models and data never leave your trusted environment, which supports your compliance and data-sovereignty requirements.

- Unified Repository for AI Assets: CSGHub acts as the central, version-controlled repository for your entire AI lifecycle. Securely store, manage, and track your foundation models, fine-tuned checkpoints, datasets, and prompts in one place.

- Open-Source and Auditable: Trust is paramount. Because CSGHub is fully open-source, your security teams can audit the code to ensure it meets your standards. You have the freedom to customize and extend the platform without any vendor constraints.

- Hybrid-Ready with Multi-Source Sync: You don’t have to live in isolation. CSGHub can securely sync models from public hubs (like Hugging Face or ModelScope) into your private instance. This gives you access to the latest open-source innovations while maintaining a secure, controlled internal environment.
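
A controlled sync usually implies some policy about what is allowed in. The sketch below is not CSGHub's API or configuration format; it is a hypothetical, plain-Python illustration of an allowlist check a team might run before mirroring a public repository into a private instance. The names `SyncPolicy` and `may_sync`, the repository IDs, and the license list are all assumptions made for the example.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class SyncPolicy:
    """Hypothetical allowlist describing what may be mirrored internally."""
    allowed_sources: frozenset[str]         # public hubs the team trusts
    allowed_repo_patterns: tuple[str, ...]  # glob patterns over "org/name" IDs
    allowed_licenses: frozenset[str]        # lowercase license identifiers


def may_sync(policy: SyncPolicy, source: str, repo_id: str, license_id: str) -> bool:
    """Return True only if the source, repository, and license are all allowlisted."""
    return (
        source in policy.allowed_sources
        and any(fnmatchcase(repo_id, pattern) for pattern in policy.allowed_repo_patterns)
        and license_id.lower() in policy.allowed_licenses
    )


# Example policy with placeholder organization names.
policy = SyncPolicy(
    allowed_sources=frozenset({"huggingface", "modelscope"}),
    allowed_repo_patterns=("example-org/*",),
    allowed_licenses=frozenset({"apache-2.0", "mit"}),
)

candidates = [
    ("huggingface", "example-org/example-model", "Apache-2.0"),
    ("huggingface", "other-org/other-model", "Apache-2.0"),
    ("unknown-hub", "example-org/example-model", "MIT"),
]
for source, repo_id, license_id in candidates:
    verdict = "sync" if may_sync(policy, source, repo_id, license_id) else "skip"
    print(f"{source}:{repo_id} -> {verdict}")
```

Keeping the policy as data rather than scattered conditionals lets a security team review and version it alongside the models it governs.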


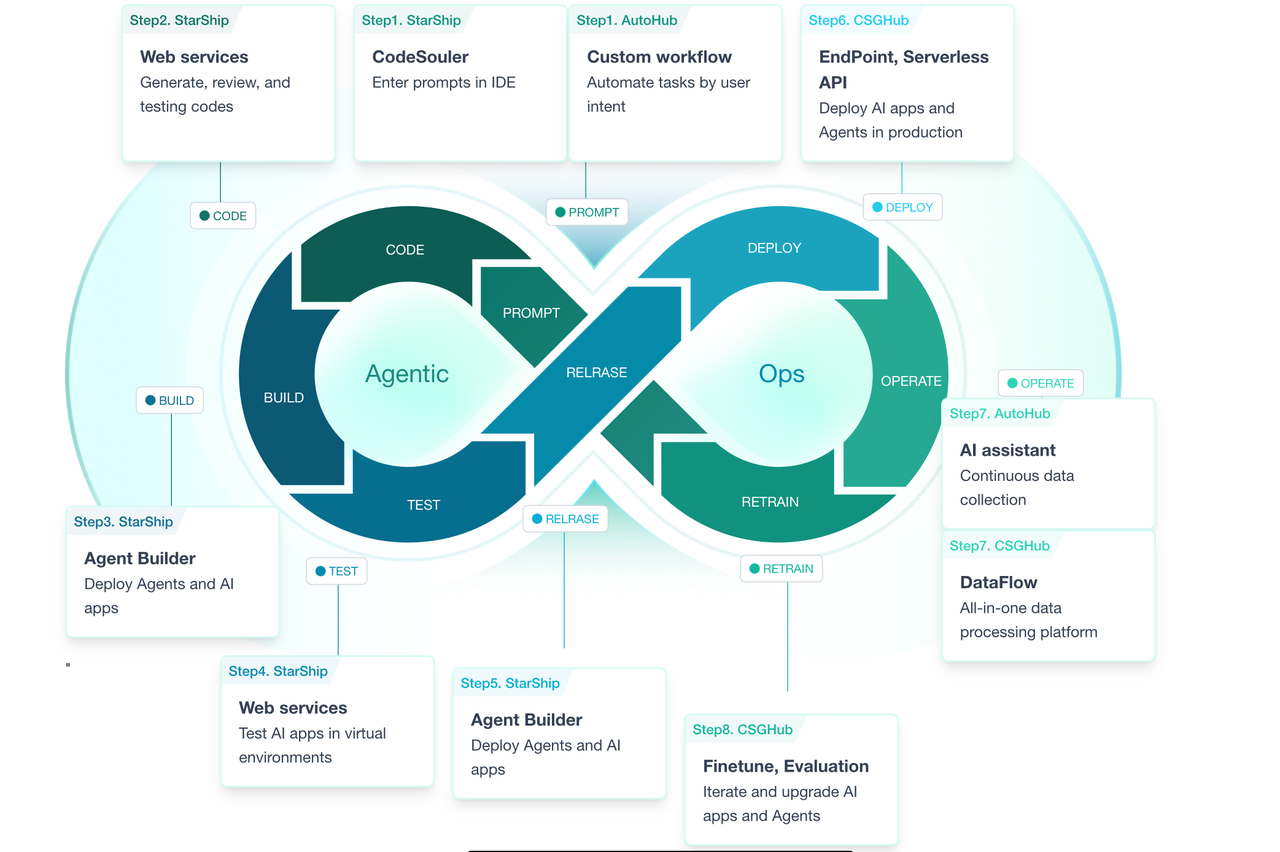

From Secure LLMOps to Value-Driven AgenticOps

A robust on-premise LLMOps pipeline is the critical first step. But it’s a means to an end. The ultimate goal is to create intelligent agents and applications that drive business value.

This is where our AgenticOps methodology comes in. With the secure foundation provided by CSGHub, our agent development platform, CSGShip, can leverage these on-premise models and data to build, test, and deploy powerful AI agents that automate complex workflows — all within your secure ecosystem.

Take Back Control of Your AI Future

Don’t let the convenience of the cloud compromise your company’s future. A successful long-term AI strategy is built on a foundation of control, security, and ownership. By adopting an on-premise LLMOps approach with OpenCSG, you can innovate confidently, knowing your most valuable assets are protected.

It’s time to address the elephant in the room.

Discover how CSGHub can power your on-premise LLMOps: https://www.opencsg.com/

Explore the CSGHub open-source project on GitHub: https://github.com/OpenCSGs/csghub
