import re
from collections import Counter

import jieba
import matplotlib.pyplot as plt
from wordcloud import WordCloud

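def load_stopwords(filepath):
    """Load the stopword list (tolerant of a missing or unreadable file)."""
    try:
        with open(filepath, 'r', encoding='utf-8') as f:
            stopwords = [line.strip() for line in f if line.strip()]
        print(f"Loaded {len(stopwords)} stopwords")
        if not stopwords:
            # An empty file is not an error, but nothing will be filtered
            print(f"Warning: {filepath} is empty, so no stopwords will be filtered")
        return set(stopwords)
    except (OSError, UnicodeDecodeError) as e:
        print(f"Failed to load stopword list: {e}; using an empty stopword list")
        return set()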

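def preprocess_text(text, stopwords):
    """Preprocess the text (safer cleaning logic)."""
    # Keep Chinese characters, common punctuation and newlines (avoid over-cleaning)
    text = re.sub(r'[^\u4e00-\u9fa5,。、;:!?()【】"\'\n]', '', text)

    # Check the cleaned text (a string of only newlines also counts as empty)
    if not text.strip():
        raise ValueError("Text is empty after cleaning! Check the contents of the source file")

    # Segment with jieba, then drop stopwords and single-character tokens
    words = jieba.lcut(text)
    valid_words = [word for word in words if word not in stopwords and len(word) > 1]
    print(f"Tokens after segmentation: {len(words)}, after filtering: {len(valid_words)}")
    return valid_words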

def generate_wordcloud(word_freq, output_file):
    """Generate the word cloud (with error handling)."""
    if not word_freq:
        raise ValueError("Word-frequency data is empty; cannot generate a word cloud")

    try:
        wc = WordCloud(
            font_path='simhei.ttf',  # a font with Chinese glyphs is required; make sure this file exists
            background_color='white',
            width=1000,
            height=800,
            max_words=200,
        )
        # word_freq is a list of (word, count) pairs from Counter.most_common()
        wc.generate_from_frequencies(dict(word_freq))

        plt.figure(figsize=(12, 10))
        plt.imshow(wc, interpolation='bilinear')
        plt.axis('off')
        plt.savefig(output_file, dpi=300, bbox_inches='tight')
        plt.show()
        print(f"Word cloud saved to {output_file}")
    except Exception as e:
        print(f"Failed to generate word cloud: {e}")

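def main():
    # File configuration
    report_file = 'government_report.txt'
    stopwords_file = 'stopwords.txt'

    # 1. Read the report and check its contents
    try:
        with open(report_file, 'r', encoding='utf-8') as f:
            report_text = f.read()
    except UnicodeDecodeError:
        print("UTF-8 decoding failed, trying GBK...")
        with open(report_file, 'r', encoding='gbk') as f:
            report_text = f.read()
    except FileNotFoundError:
        print(f"File not found: {report_file}")
        return
    # Important: verify the file contents (runs for both UTF-8 and GBK input)
    print(f"First 100 characters of the file:\n{report_text[:100]}...")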

    # 2. Load the stopword list
    stopwords = load_stopwords(stopwords_file)

    # 3. Preprocess and segment
    try:
        words = preprocess_text(report_text, stopwords)
        word_freq = Counter(words).most_common(50)
        if not word_freq:
            raise ValueError("No valid hot words; check whether the stopword list filters too much or the text is malformed")

        # 4. Print the hot words
        print("\nTop 50 hot words:")
        for i, (word, count) in enumerate(word_freq, 1):
            print(f"{i:>2}. {word}: {count}")

        # 5. Generate the word cloud
        generate_wordcloud(word_freq, 'report_wordcloud.png')

    except Exception as e:
        print(f"Processing failed: {e}")
        print("Debugging suggestions:")
        print("1. Check that government_report.txt contains valid Chinese text")
        print("2. Check whether stopwords.txt filters too much (e.g. it contains common words)")
        print("3. Try loosening the text cleaning (edit the regex in preprocess_text())")

if __name__ == '__main__':
    main()
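The sketch below shows what the cleaning regex and the filter in `preprocess_text()` actually do, without needing jieba or wordcloud installed. It reuses the same character class and the same `len(word) > 1` rule. `SAMPLE_TOKENS` is a hand-written stand-in for `jieba.lcut()` output, an assumption made only for illustration. One thing it makes visible: the regex also strips digits and Latin letters, so terms such as "GDP" or "5.2%" never reach the counter.

```python
import re
from collections import Counter

# Same character class as preprocess_text(): keep CJK ideographs,
# a few punctuation marks and newlines; remove everything else.
CLEAN_PATTERN = re.compile(r'[^\u4e00-\u9fa5,。、;:!?()【】"\'\n]')


def clean(text):
    """Apply only the regex cleaning step."""
    return CLEAN_PATTERN.sub('', text)


def filter_tokens(tokens, stopwords):
    """Same filter as preprocess_text(): drop stopwords and 1-character tokens."""
    return [t for t in tokens if t not in stopwords and len(t) > 1]


# Hypothetical segmentation result standing in for jieba.lcut(...)
SAMPLE_TOKENS = ["经济", "发展", "的", "经济", "增长", "我们", "发展", "经济", ","]
SAMPLE_STOPWORDS = {"我们"}

print(clean("2024年 GDP增长5.2%,经济发展稳中向好。"))  # 年增长,经济发展稳中向好。

top = Counter(filter_tokens(SAMPLE_TOKENS, SAMPLE_STOPWORDS)).most_common(2)
print(top)  # [('经济', 3), ('发展', 2)]
```

With an empty stopword set, only the length rule applies, so a word like "我们" would be counted as a hot word.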

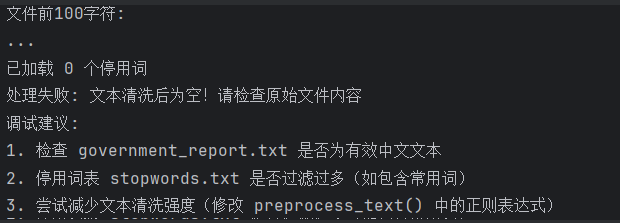

Because the stopword file (`stopwords.txt`) was empty, the output shows 0 stopwords loaded.
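An empty `stopwords.txt` makes `load_stopwords()` return an empty set, so only the single-character rule removes anything. The minimal sketch below reproduces both cases with temporary files. It also falls back to a small built-in list when the file is missing or empty. `load_stopwords_with_fallback` and `DEFAULT_STOPWORDS` are hypothetical names, and the fallback words are illustrative assumptions, not a standard stopword list.

```python
import os
import tempfile

# Hypothetical fallback list for illustration only; a real project would
# ship a complete Chinese stopword file instead.
DEFAULT_STOPWORDS = {"我们", "一个", "以及", "进行"}


def load_stopwords_with_fallback(filepath, fallback=DEFAULT_STOPWORDS):
    """Read one stopword per line; use the fallback if the file is missing or empty."""
    try:
        with open(filepath, 'r', encoding='utf-8') as f:
            words = {line.strip() for line in f if line.strip()}
    except (OSError, UnicodeDecodeError):
        words = set()
    if not words:
        print(f"{filepath}: 0 stopwords loaded, using {len(fallback)} fallback words")
        return set(fallback)  # return a copy so callers cannot mutate the default
    return words


with tempfile.TemporaryDirectory() as tmp:
    # Case 1: an empty file, as in the run above
    empty_path = os.path.join(tmp, "stopwords.txt")
    open(empty_path, 'w', encoding='utf-8').close()
    result_empty = load_stopwords_with_fallback(empty_path)

    # Case 2: a populated file; blank lines are skipped
    full_path = os.path.join(tmp, "stopwords_full.txt")
    with open(full_path, 'w', encoding='utf-8') as f:
        f.write("的\n了\n\n我们\n")
    result_full = load_stopwords_with_fallback(full_path)

print(sorted(result_full))
```

Whether a silent fallback is preferable to failing loudly is a design choice. For one-off analysis, the printed warning is usually enough to notice the problem.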
