1. Why Use EFK

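The EFK Stack gives developers a complete and flexible log management system. By combining Elasticsearch for storage and search, Fluentd for collection and processing, and Kibana for display and analysis, developers can collect, store, search, analyze, and visualize logs in one pipeline. Compared with other log management solutions, the EFK Stack offers good performance with low resource consumption. Lightweight collectors such as Fluentd and Filebeat in particular make it a good fit for resource-constrained environments or for situations that call for rapid deployment.

- ElasticSearch: Elasticsearch is a distributed search and analytics engine built on Lucene that can quickly store, search, and analyze large volumes of data. It is suited to building real-time analytics applications and provides full-text search, structured search, analytics, and distributed, multi-tenant capabilities.

- Fluentd: Fluentd is an open-source data collector that gathers log data in real time from many sources (applications, servers, message queues, and so on). It supports a wide range of input and output plugins, so it integrates easily into different environments. Fluentd works well in containerized environments, especially alongside container orchestrators such as Kubernetes, where it can discover container logs automatically and enrich them with metadata about the workload that produced them.

- Kibana: Kibana is a visualization and analysis platform built on Elasticsearch that provides a rich set of charts and visualizations. Users can search, analyze, and visualize log data in Kibana to better understand an application's runtime state and log output.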

2. Version Information

Kubernetes version: 1.28.14

ElasticSearch version: 7.2.0

Fluentd version: 1.16

Kibana version: 7.2.0

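3. Deploying ES

Write a YAML manifest that creates the ES Service, and use a StatefulSet (the workload type for stateful applications) to create the ES cluster. NFS-backed PVs store the ES data.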
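Every manifest in this walkthrough targets the `k8s-logging` namespace. As a minimal sketch, assuming that namespace does not yet exist in the cluster, it could be created first with a manifest like this:

```yaml
# Assumption: the k8s-logging namespace has not been created yet.
apiVersion: v1
kind: Namespace
metadata:
  name: k8s-logging
```

Applying this before the other manifests avoids "namespace not found" errors when the Service and StatefulSet are created.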

# Create the headless Service for ES
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: k8s-logging
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node
---
# Deploy ES with a StatefulSet
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: k8s-logging
spec:
  serviceName: elasticsearch
  replicas: 2
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      # Allow scheduling onto control-plane nodes
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: "NoSchedule"
      containers:
        - name: elasticsearch
          image: 192.168.119.150/k8s_repository/elasticsearch:7.2.0
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: esdata
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            # Use the pod name (es-cluster-0, es-cluster-1) as the node name
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.elasticsearch.k8s-logging.svc.cluster.local,es-cluster-1.elasticsearch.k8s-logging.svc.cluster.local"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
            - name: TZ
              value: "Asia/Shanghai"
      initContainers:
        # Give the elasticsearch user (UID 1000) ownership of the data directory
        - name: fix-permissions
          image: 192.168.119.150/myrepo/busybox:stable-glibc
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: esdata
              mountPath: /usr/share/elasticsearch/data
        # Raise the node's mmap count limit, which ES requires
        - name: increase-vm-max-map
          image: 192.168.119.150/myrepo/busybox:stable-glibc
          imagePullPolicy: IfNotPresent
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        # Note: a ulimit set here applies only to this init container's shell;
        # it is not inherited by the elasticsearch container.
        - name: increase-fd-ulimit
          image: 192.168.119.150/myrepo/busybox:stable-glibc
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: esdata
      spec:
        accessModes: [ "ReadWriteOnce" ]
        resources:
          requests:
            storage: 1Gi
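The StatefulSet requests one 1Gi `ReadWriteOnce` claim per replica through `volumeClaimTemplates`, and the text mentions NFS-backed PVs without showing them. The following is a sketch of one such PV, not the author's actual manifest. The PV name, NFS server address, and export path are placeholders, and it assumes the cluster has no default StorageClass, so that a claim with no `storageClassName` can bind to a statically created PV.

```yaml
# Sketch of a static NFS PersistentVolume for one ES replica.
# The name, server, and path are hypothetical placeholders.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: es-pv-0
spec:
  capacity:
    storage: 1Gi                      # matches the request in volumeClaimTemplates
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # keep the data if the claim is deleted
  nfs:
    server: nfs.example.internal      # placeholder NFS server
    path: /exports/es/0               # placeholder export path
```

With `replicas: 2`, a second PV with its own name and export path would be needed so that both `esdata-es-cluster-0` and `esdata-es-cluster-1` can bind.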

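4. Deploying Kibana

Kibana is a stateless application and does not need to persist data, so it is deployed with a Deployment. A Service and an Ingress are created alongside it to expose it.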

# Kibana Service
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: k8s-logging
  labels:
    app: kibana
spec:
  type: NodePort
  ports:
    - port: 5601
  selector:
    app: kibana
---
# Kibana Ingress
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana-ingress
  namespace: k8s-logging
spec:
  ingressClassName: nginx
  rules:
    - host: www.mykibana.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: kibana
                port:
                  number: 5601
---
# Kibana Deployment
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: k8s-logging
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      # Requires a node that carries the label node=master
      nodeSelector:
        node: master
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: "NoSchedule"
      containers:
        - name: kibana
          image: 192.168.119.150/k8s_repository/kibana:7.2.0
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            # Kibana 7.x reads ELASTICSEARCH_HOSTS; ELASTICSEARCH_URL was removed in 7.0
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch.k8s-logging.svc.cluster.local:9200
          ports:
            - containerPort: 5601
          volumeMounts:
            # Use the host's Asia/Shanghai zone file as the container's local time
            - name: tzdata
              mountPath: /etc/localtime
      volumes:
        - name: tzdata
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
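Kibana can take a while to start and connect to Elasticsearch, and the Ingress may route requests to it before it is ready. One possible refinement, shown here as a sketch rather than part of the original deployment, is a readiness probe on the kibana container. It assumes Kibana's status endpoint at `/api/status` is reachable on port 5601 without authentication; the delay and period values are illustrative guesses.

```yaml
# Fragment: would sit under the kibana container in the Deployment spec.
# Timing values are assumptions and may need tuning.
readinessProbe:
  httpGet:
    path: /api/status
    port: 5601
  initialDelaySeconds: 30
  periodSeconds: 10
```

Until the probe succeeds, the pod is left out of the Service endpoints, so the Ingress does not send traffic to it.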

5. Deploying Fluentd

First, create Fluentd's main configuration file.

# ConfigMap holding Fluentd's main configuration file
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
  name: fluentd-es-config-main
  namespace: k8s-logging
data:
  fluent.conf: |-
    # Discard Fluentd's own internal events
    <match fluent.**>
      @type null
    </match>
    # Load every sub-configuration file mounted under config.d
    @include /fluentd/etc/config.d/*.conf

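Next, create Fluentd's sub-configuration file. It sets the timestamp format, enables the kubernetes_metadata plugin (which looks up the Kubernetes information for the pod named in each log record and adds it to the record), and configures Logstash-format output.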

# ConfigMap holding Fluentd's sub-configuration files
apiVersion: v1
kind: ConfigMap
metadata:
  name: fluentd-config
  namespace: k8s-logging
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      # Timestamp format
      time_format %Y-%m-%dT%H:%M:%S.%L%z
      tag raw.kubernetes.*
      format json
      read_from_head false
    </source>
    # Merge multi-line exception stack traces into a single record
    <match raw.kubernetes.**>
      @id raw.kubernetes
      @type detect_exceptions
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
  output.conf: |-
    <filter kubernetes.**>
      # Use the kubernetes_metadata plugin to add Kubernetes information to each record
      @type kubernetes_metadata
    </filter>
    <match **>
      @id elasticsearch
      @type elasticsearch
      @log_level info
      include_tag_key true
      hosts es-cluster-0.elasticsearch:9200,es-cluster-1.elasticsearch:9200
      # Write to daily logstash-YYYY.MM.DD indices
      logstash_format true
      request_timeout 30s
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>
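The `<source>` block above parses each line as JSON, which matches the Docker json-file log driver. If the nodes run containerd or CRI-O instead (an assumption, since the source does not state the runtime), each line in /var/log/containers has the form `<time> <stream> <tag> <message>` and is not JSON. The fragment below is a hedged sketch of an alternative `<source>` for that case, using Fluentd's built-in regexp parser. The expression and time format follow the commonly used CRI log pattern and should be checked against real log lines before use.

```yaml
# Sketch only: alternative containers.input.conf for CRI-format logs.
# Assumes the node runtime writes "<time> <stdout|stderr> <P|F> <message>" lines.
data:
  containers.input.conf: |-
    <source>
      @id fluentd-containers.log
      @type tail
      path /var/log/containers/*.log
      pos_file /var/log/es-containers.log.pos
      tag raw.kubernetes.*
      read_from_head false
      <parse>
        @type regexp
        expression /^(?<time>.+) (?<stream>stdout|stderr)( (?<logtag>.))? (?<log>.*)$/
        time_format %Y-%m-%dT%H:%M:%S.%N%:z
      </parse>
    </source>
    # keep the existing <match raw.kubernetes.**> block here
```

The parsed record still exposes `log` and `stream` fields, so the `detect_exceptions` settings (`message log`, `stream stream`) would not need to change.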

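Fluentd continuously tails the container logs under /var/log/containers and ships them to ES for storage. It is deployed as a DaemonSet so that every node runs a Fluentd pod that collects logs. RBAC objects are also created to give the Fluentd pods permission to query Kubernetes data.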

# ServiceAccount used by the Fluentd pods
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd-es
  namespace: k8s-logging
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
# Read-only access to namespaces and pods, needed by kubernetes_metadata
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
  - apiGroups:
      - ""
    resources:
      - "namespaces"
      - "pods"
    verbs:
      - "get"
      - "watch"
      - "list"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: fluentd-es
  labels:
    k8s-app: fluentd-es
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
  - kind: ServiceAccount
    name: fluentd-es
    namespace: k8s-logging
    apiGroup: ""
roleRef:
  kind: ClusterRole
  name: fluentd-es
  # Stated explicitly rather than relying on the API server's default
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-es
  namespace: k8s-logging
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    k8s-app: fluentd-es
spec:
  selector:
    matchLabels:
      k8s-app: fluentd-es
  template:
    metadata:
      labels:
        k8s-app: fluentd-es
    spec:
      securityContext: {}
      serviceAccountName: fluentd-es
      # Also run on control-plane nodes so their logs are collected
      tolerations:
        - key: "node-role.kubernetes.io/control-plane"
          operator: "Exists"
          effect: "NoSchedule"
      containers:
        - name: fluentd
          image: 192.168.119.150/k8s_repository/fluentd-kubernetes-daemonset:v1.16
          imagePullPolicy: IfNotPresent
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch.k8s-logging.svc.cluster.local"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "http"
            - name: FLUENTD_SYSTEMD_CONF
              value: "disable"
            - name: TZ
              value: "Asia/Shanghai"
            - name: FLUENT_CONTAINER_TAIL_PARSER_TYPE
              value: "cri"
            - name: FLUENT_CONTAINER_TAIL_PARSER_TIME_FORMAT
              value: "%Y-%m-%dT%H:%M:%S.%L%z"
          resources:
            limits:
              memory: 512Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            # Sub-configuration files
            - name: config-volume
              mountPath: /fluentd/etc/config.d
            # Main configuration file, mounted over the image's fluent.conf
            - name: config-volume-main
              mountPath: /fluentd/etc/fluent.conf
              subPath: fluent.conf
      terminationGracePeriodSeconds: 30
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - configMap:
            defaultMode: 420   # octal 0644
            name: fluentd-config
          name: config-volume
        - configMap:
            defaultMode: 420   # octal 0644
            items:
              - key: fluent.conf
                path: fluent.conf
            name: fluentd-es-config-main
          name: config-volume-main

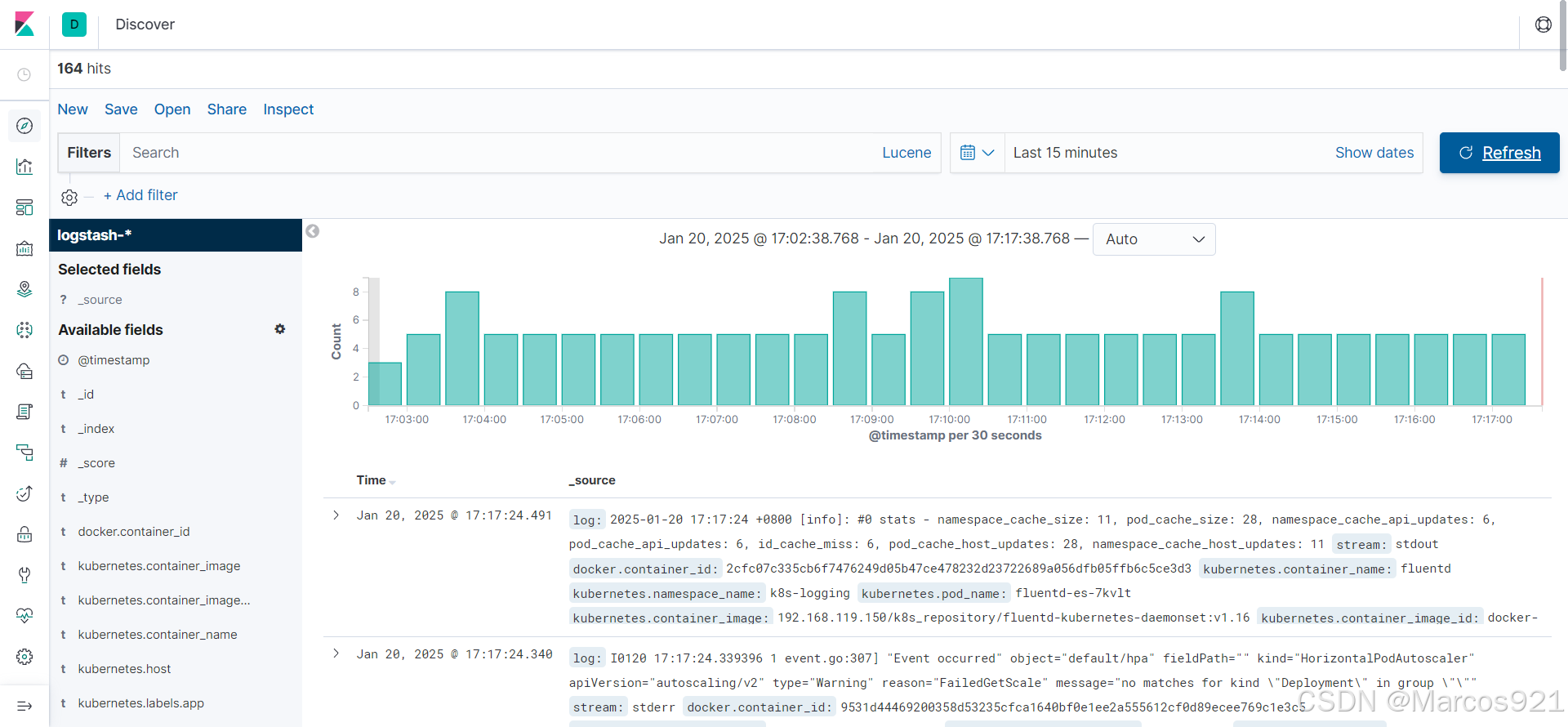

6. Viewing Data in Kibana

Open the Ingress host defined earlier to reach the Kibana web interface.

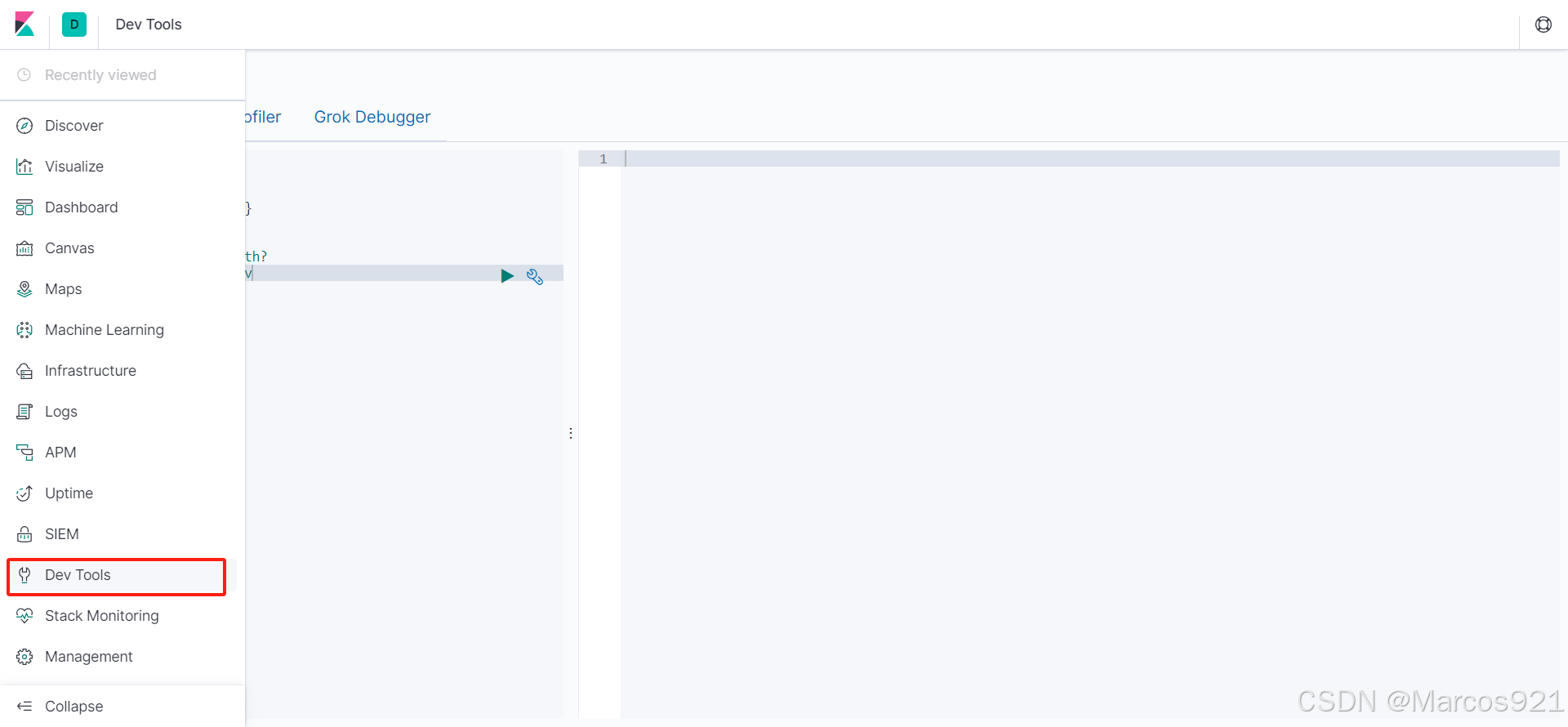

In the web interface, click Dev Tools in the sidebar to test whether Fluentd is shipping logs.

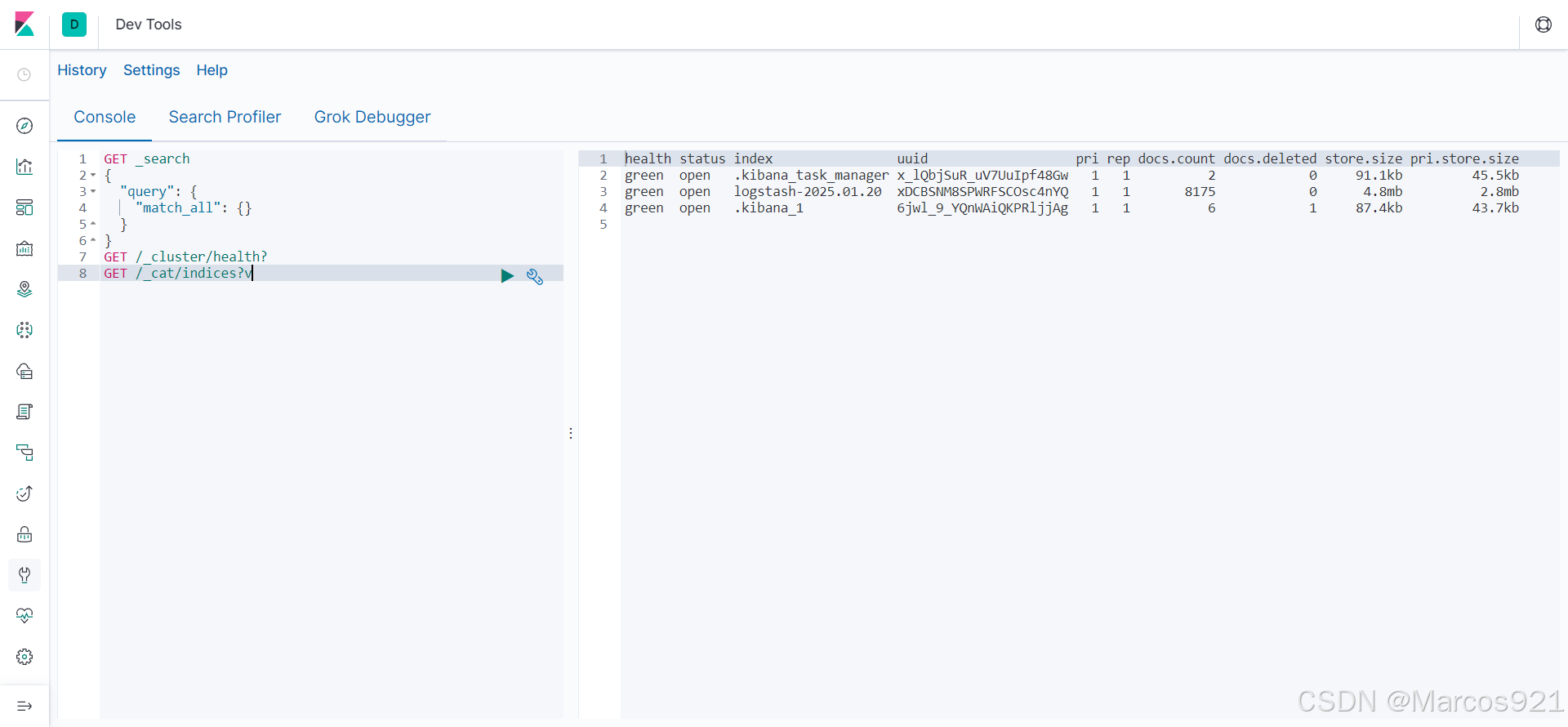

Run GET /_cat/indices?v. If indices whose names start with logstash appear, the Fluentd configuration is working.

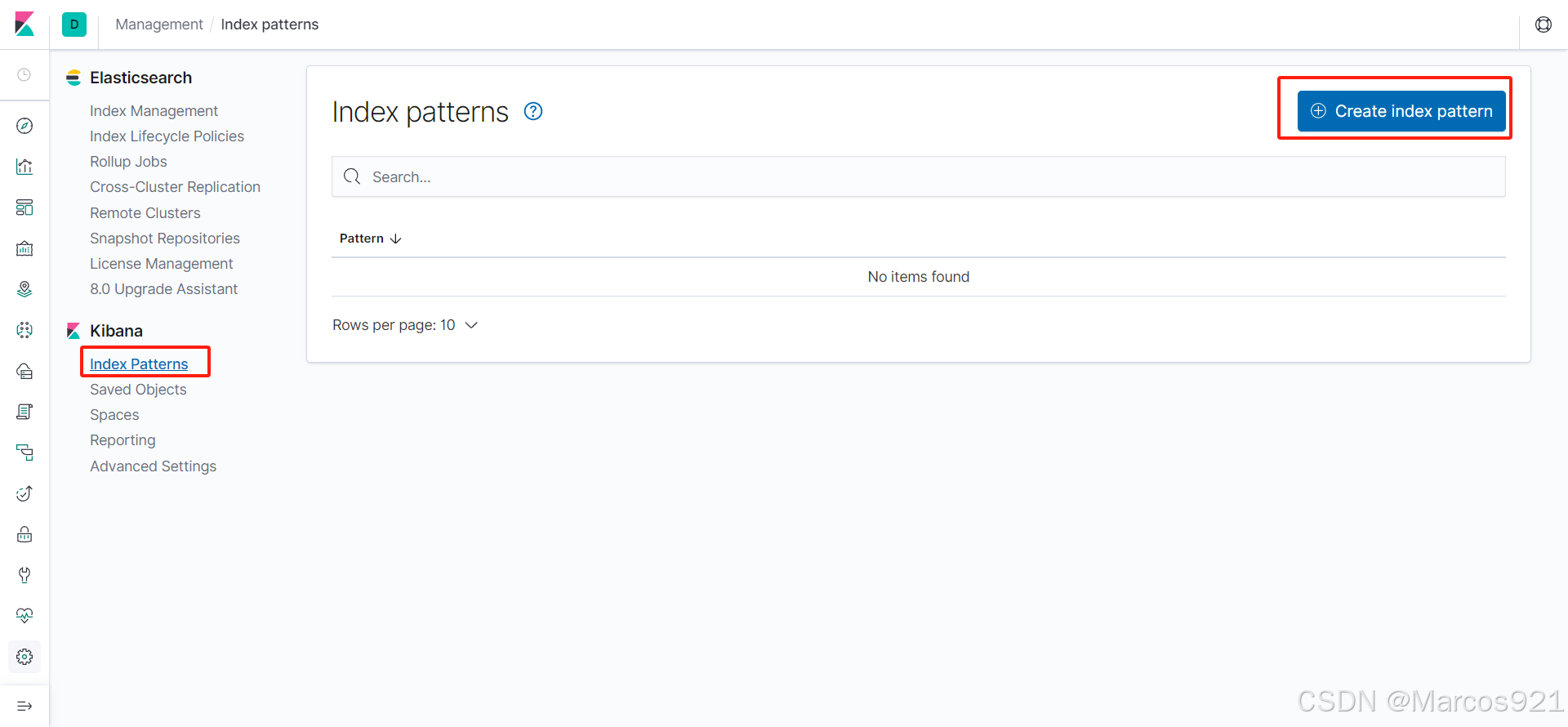

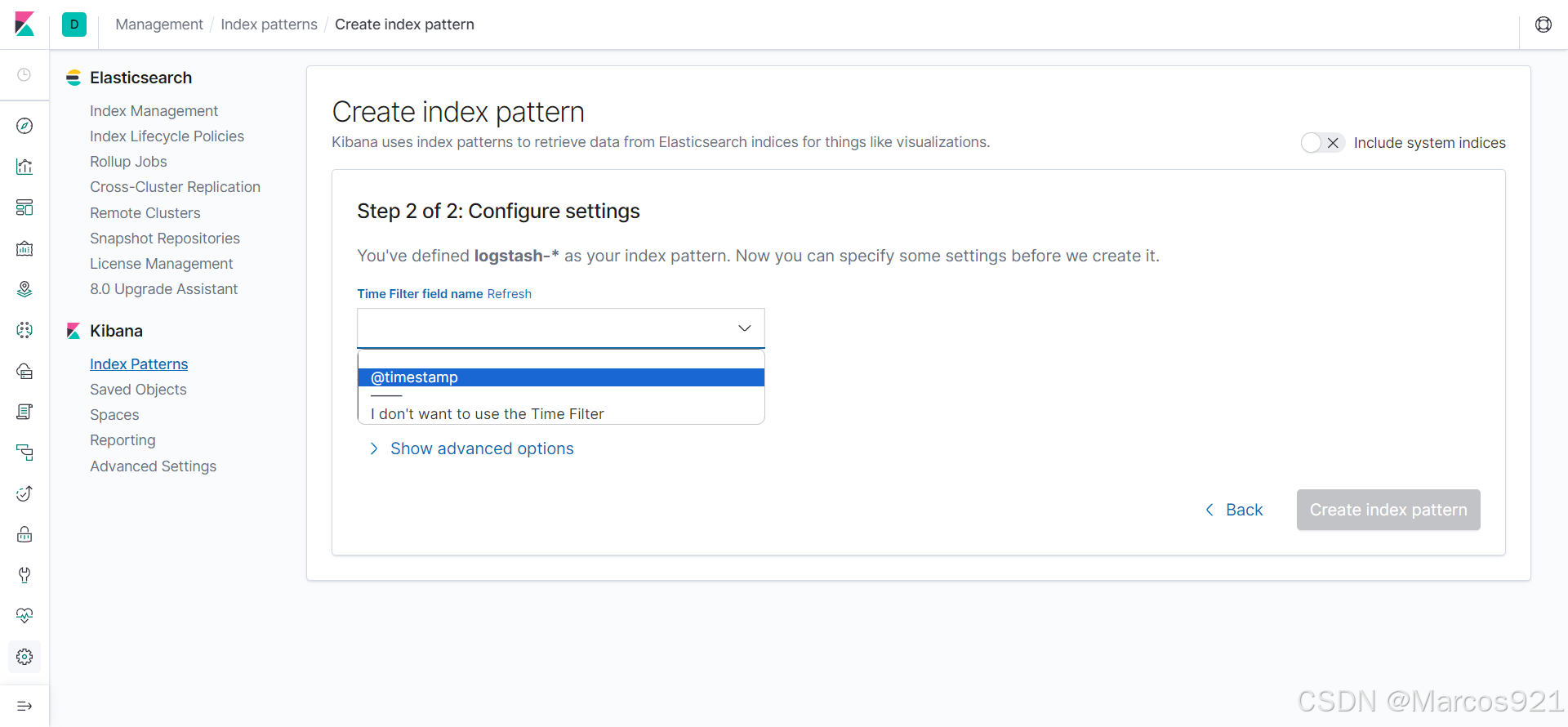

To display the data in Kibana, you also need to configure an index pattern: go to Management / Index Patterns and create one.

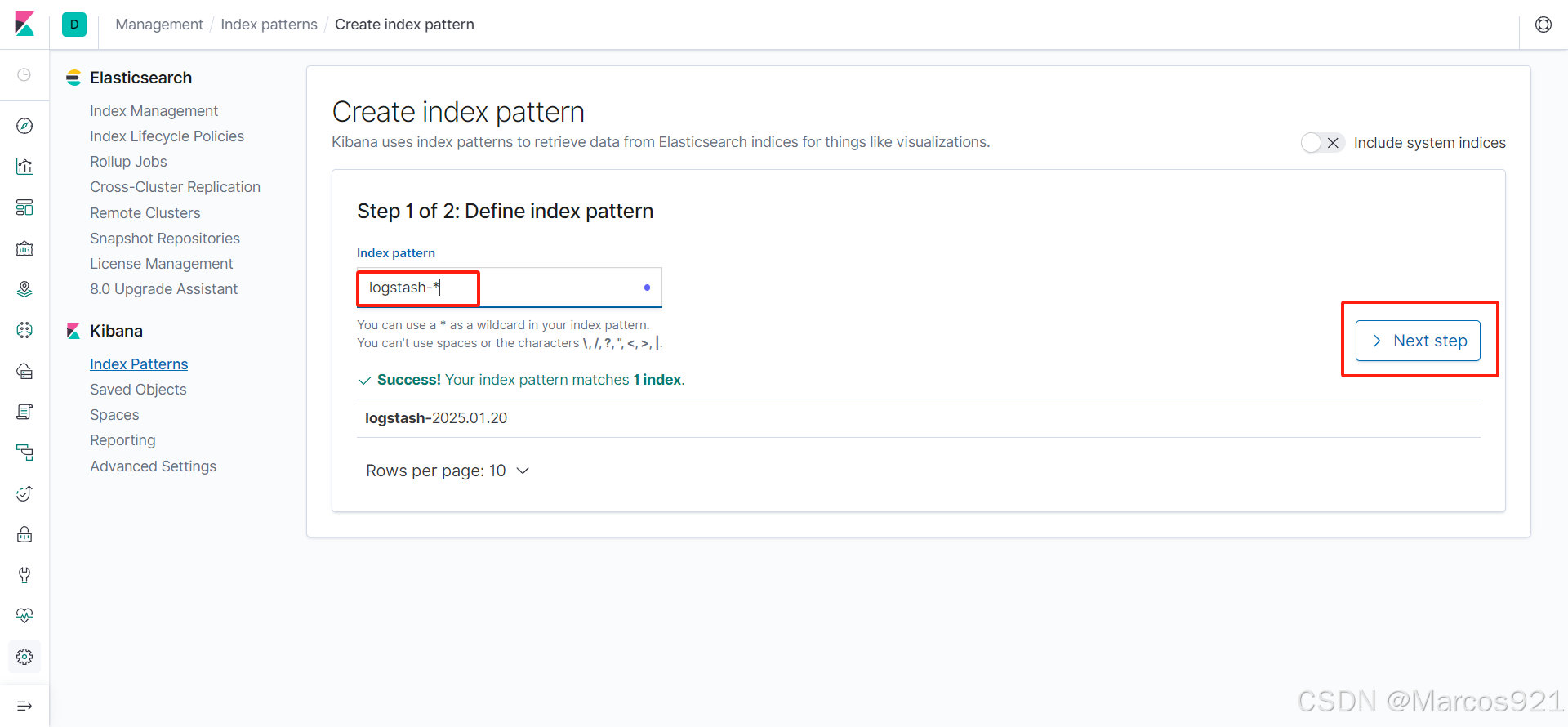

Enter logstash-* and click Next step.

For the time filter, select @timestamp, then click Create index pattern.

Go to the Discover page, where the log data is now displayed.
