A brief overview of canbus

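In the Apollo project, the main job of the canbus module is to receive the commands published by the Control module and translate them into CAN frames exchanged with the vehicle's ECUs. It then collects the feedback for those commands and publishes it as vehicle chassis information (Chassis_detail).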
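To make the two directions concrete, here is a minimal sketch of what "translating a command into a CAN frame" and "parsing feedback from a CAN frame" usually mean at the byte level. A physical value is scaled to a raw integer and packed into the 8-byte payload, and the reverse is done for feedback. The frame IDs (0x110, 0x120), the 0.01 scale factor, the little-endian layout, and the names `CanFrame`, `EncodeThrottle`, and `DecodeSpeed` are all hypothetical assumptions for illustration. They are not taken from Apollo or from any real vehicle's protocol.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical CAN frame: an ID plus an 8-byte payload.
struct CanFrame {
  uint32_t id = 0;
  std::array<uint8_t, 8> data{};
};

// Hypothetical signal layouts (not from any real vehicle protocol):
//   0x110 throttle command: uint16 little-endian in bytes 0-1, 0.01 %/bit, range [0, 100]
//   0x120 speed feedback:   uint16 little-endian in bytes 0-1, 0.01 km/h per bit
constexpr uint32_t kThrottleCmdId = 0x110;
constexpr uint32_t kSpeedFbId = 0x120;
constexpr double kFactor = 0.01;

// Command direction: physical value -> raw integer -> payload bytes.
CanFrame EncodeThrottle(double throttle_pct) {
  throttle_pct = std::fmin(std::fmax(throttle_pct, 0.0), 100.0);  // clamp to valid range
  const auto raw = static_cast<uint16_t>(std::lround(throttle_pct / kFactor));
  CanFrame frame;
  frame.id = kThrottleCmdId;
  frame.data[0] = static_cast<uint8_t>(raw & 0xFF);         // low byte first
  frame.data[1] = static_cast<uint8_t>((raw >> 8) & 0xFF);  // then high byte
  return frame;
}

// Feedback direction: payload bytes -> raw integer -> physical value.
// Returns false for frames this sketch does not know how to decode.
bool DecodeSpeed(const CanFrame& frame, double* speed_kph) {
  if (frame.id != kSpeedFbId) return false;
  const auto raw = static_cast<uint16_t>(frame.data[0] | (frame.data[1] << 8));
  *speed_kph = raw * kFactor;
  return true;
}
```

For example, a 25.5 % throttle command becomes the raw value 2550 (0x09F6), sent as bytes `F6 09`. Feeding the same bytes back under the feedback ID decodes to roughly 25.5 km/h.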

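First, what is Chassis_detail? The file apollo_cidi/modules/canbus/proto/chassis_detail.proto holds information such as braking, steering, and acceleration. Chassis information matters in both directions:

- Commands issued by the control module are first translated in the canbus module and then sent over the CAN bus to the vehicle's control units.
- canbus reads data from the CAN bus, parses it into chassis information, and publishes it. This step is called chassis feedback. It lets the control side see how the issued commands were actually carried out.

This process is the topic of this post.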

message ChassisDetail {

enum Type {

QIRUI_EQ_15 = 0;

CHANGAN_RUICHENG = 1;

}

optional Type car_type = 1; // car type

optional BasicInfo basic = 2; // basic info

optional Safety safety = 3; // safety

optional Gear gear = 4; // gear

optional Ems ems = 5; // engine manager system

optional Esp esp = 6; // Electronic Stability Program

optional Gas gas = 7; // gas pedal

optional Epb epb = 8; // Electronic parking brake

optional Brake brake = 9; // brake pedal

optional Deceleration deceleration = 10; // deceleration

optional VehicleSpd vehicle_spd = 11; // vehicle speed

optional Eps eps = 12; // Electronic Power Steering

optional Light light = 13; // Light

optional Battery battery = 14; // Battery info

optional CheckResponseSignal check_response = 15;

optional License license = 16; // License info

optional Surround surround = 17; // Surround information

optional Gem gem = 18;

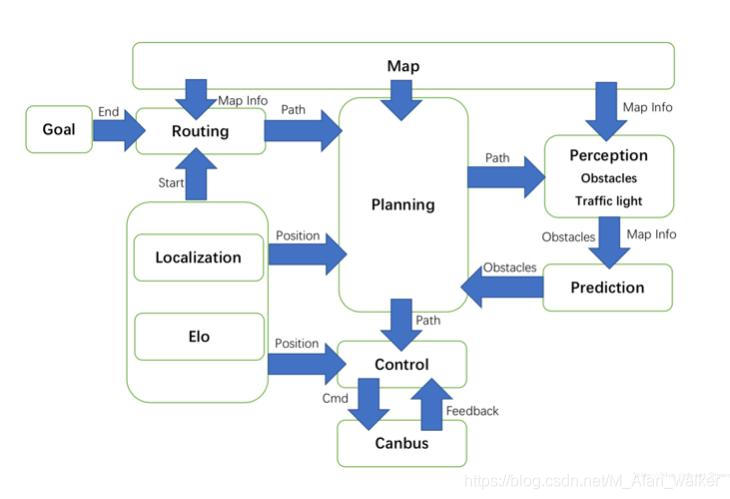

}看一下apollo的整体框架图

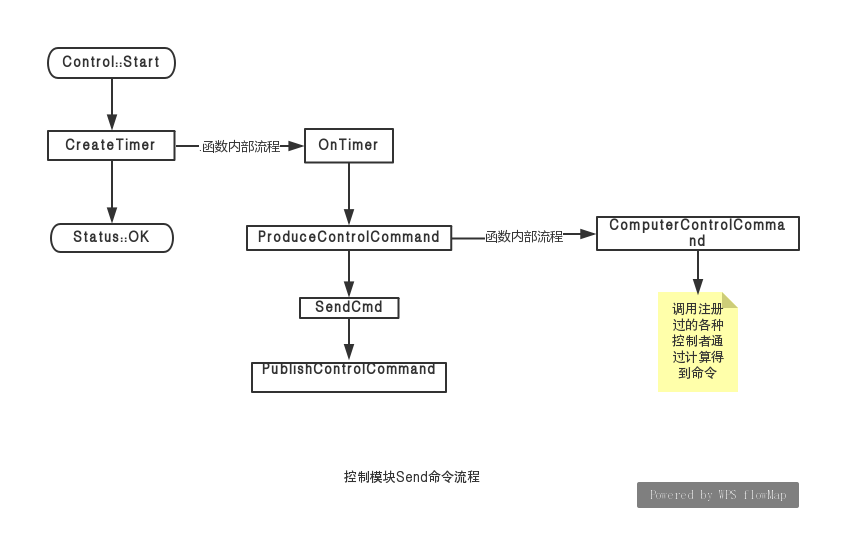

Control module: issuing the Command

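I'll walk through this part with a flowchart. Apollo modules exchange messages through ROS. Here, PublishControlCommand publishes the command computed by the controller. Later we will see that canbus.cc has a callback dedicated to receiving this command.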
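The publish/subscribe pattern described here can be sketched in a few lines. One side publishes a command on a topic, and every callback registered on that topic is invoked with the message. In this minimal in-process stand-in, `Topic`, `CanbusNode`, and the simplified `ControlCommand` struct are hypothetical. This is not the ROS API or Apollo's AdapterManager, and real ROS delivery is asynchronous and goes across processes.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical, heavily simplified ControlCommand (Apollo's real one is a protobuf message).
struct ControlCommand {
  double throttle = 0.0;
  double brake = 0.0;
  double steering_target = 0.0;
};

// Minimal in-process publish/subscribe channel standing in for a ROS topic.
template <typename Msg>
class Topic {
 public:
  using Callback = std::function<void(const Msg&)>;

  void Subscribe(Callback cb) { callbacks_.push_back(std::move(cb)); }

  // Delivers the message synchronously to every subscriber, in registration order.
  void Publish(const Msg& msg) const {
    for (const auto& cb : callbacks_) cb(msg);
  }

 private:
  std::vector<Callback> callbacks_;
};

// Hypothetical receiver playing the role of the canbus side.
class CanbusNode {
 public:
  void OnControlCommand(const ControlCommand& cmd) {
    last_cmd_ = cmd;
    ++received_;
  }
  int received() const { return received_; }
  const ControlCommand& last_cmd() const { return last_cmd_; }

 private:
  ControlCommand last_cmd_;
  int received_ = 0;
};
```

The control side only knows the topic, not who listens to it. This is why the canbus module can be swapped or tested independently of the control module.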

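Every module in Apollo is brought up in essentially the same way. If this is unclear, see the post "Apollo Planning module source code analysis". Once you understand one module, the others are much easier to learn. Here I want to point out the timer (OnTimer): the module creates a timer that publishes control commands at a fixed rate. So what is that rate?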

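In apollo_cidi/modules/control/conf/lincoln.pb.txt, control_period is 0.01 s. This means the control module publishes control_command at 1 / 0.01 s = 100 Hz. The same conversion applies to the other periods below: chassis_period of 0.01 s corresponds to 100 Hz, and trajectory_period of 0.1 s to 10 Hz.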
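The period-to-rate conversion and the fixed-rate timer described above can be sketched as follows. `PeriodToHz` and `RunFixedRate` are hypothetical helpers, not Apollo code. The loop uses `std::this_thread::sleep_until` on an absolute deadline, so per-tick overrun does not accumulate into drift. This is one common way to build such a timer, not necessarily how Apollo's OnTimer is implemented.

```cpp
#include <cassert>
#include <chrono>
#include <cmath>
#include <functional>
#include <thread>

// Convert a period in seconds (e.g. control_period: 0.01) to a rate in Hz.
double PeriodToHz(double period_s) {
  assert(period_s > 0.0);
  return 1.0 / period_s;
}

// Call `on_timer` once per `period_s` seconds, `ticks` times.
// Deadlines are absolute (next += period), so timing error does not build up.
void RunFixedRate(double period_s, int ticks, const std::function<void()>& on_timer) {
  using Clock = std::chrono::steady_clock;
  const auto period = std::chrono::duration_cast<Clock::duration>(
      std::chrono::duration<double>(period_s));
  auto next = Clock::now();
  for (int i = 0; i < ticks; ++i) {
    on_timer();  // e.g. compute and publish one control command
    next += period;
    std::this_thread::sleep_until(next);
  }
}
```

With control_period = 0.01, `PeriodToHz` gives 100 Hz. `RunFixedRate(0.01, 100, publish)` would call a hypothetical `publish` function 100 times over roughly one second.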

```
control_period: 0.01
trajectory_period: 0.1
chassis_period: 0.01
```

Canbus module: parsing the Command

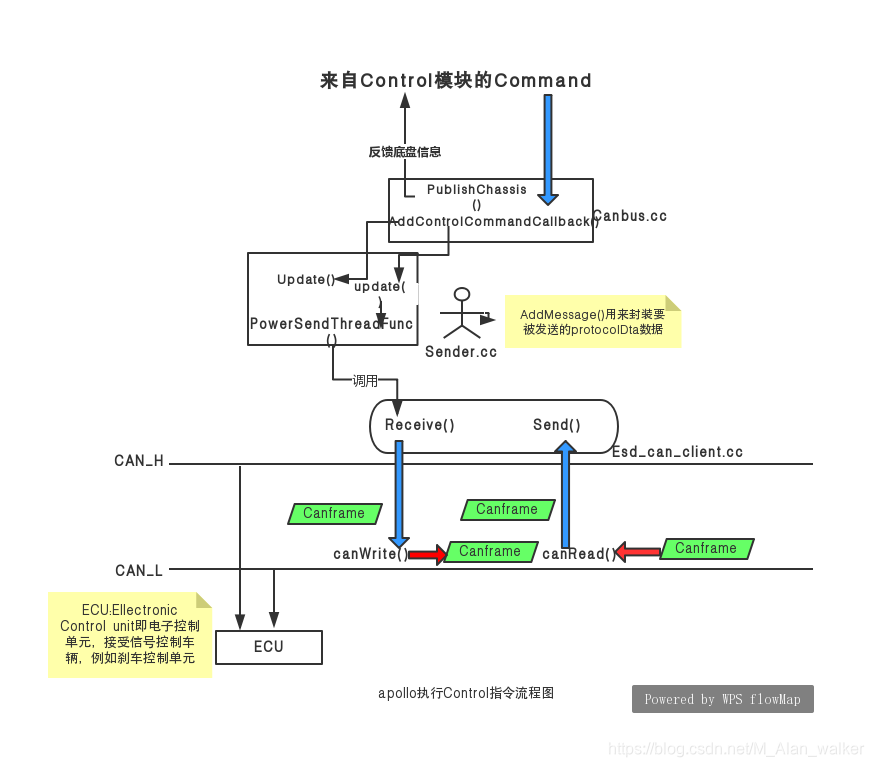

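The Apollo project contains two canbus source trees:

- modules/canbus mainly implements Vehicle control.
- modules/driver/canbus is the main module for data communication.

This post focuses on how canbus handles the Command, so I won't analyze these two source trees in detail. If you have questions, see this post: https://blog.youkuaiyun.com/davidhopper/article/details/79176505. Module initialization is roughly the same across Apollo's modules, and that post is also how I learned the canbus module. Now to the main topic, handling the Command. First, take a look at the flowchart I drew.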

One more recommended post: https://blog.youkuaiyun.com/liu3612162/article/details/81981388

A quick summary of what each component of the canbus module does:

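CanClient communicates with the CAN card, receiving and sending messages, while MessageManager parses the messages. CanReceiver schedules the work of these two objects. The code that drives CanClient and MessageManager lives in CanReceiver's RecvThreadFunc method.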
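The division of labor described above can be sketched as one pass of a receive loop. The client produces a batch of raw frames, and the receiver hands each frame to the message manager for parsing. `FakeCanClient`, `SimpleMessageManager`, and `ReceiveOnce` are hypothetical stand-ins whose interfaces differ from Apollo's CanClient and MessageManager. The fake client drains an in-memory queue instead of reading a CAN card. The real RecvThreadFunc repeats this on a dedicated thread until it is stopped.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <map>
#include <vector>

struct CanFrame {
  uint32_t id;
  std::array<uint8_t, 8> data;
};

// Hypothetical CanClient stand-in: frames are injected by hand, not read from hardware.
class FakeCanClient {
 public:
  void Inject(const CanFrame& f) { rx_.push_back(f); }

  // Move up to `max_frames` pending frames into `out`; returns how many were moved.
  std::size_t Receive(std::vector<CanFrame>* out, std::size_t max_frames) {
    std::size_t n = 0;
    while (!rx_.empty() && n < max_frames) {
      out->push_back(rx_.front());
      rx_.pop_front();
      ++n;
    }
    return n;
  }

 private:
  std::deque<CanFrame> rx_;
};

// Hypothetical MessageManager stand-in: remembers the latest payload per CAN ID.
class SimpleMessageManager {
 public:
  void Parse(const CanFrame& f) {
    latest_[f.id] = f.data;
    ++parsed_;
  }
  std::size_t parsed() const { return parsed_; }
  bool Has(uint32_t id) const { return latest_.count(id) > 0; }

 private:
  std::map<uint32_t, std::array<uint8_t, 8>> latest_;
  std::size_t parsed_ = 0;
};

// One iteration in the spirit of CanReceiver::RecvThreadFunc:
// pull a batch from the client, then hand every frame to the manager.
std::size_t ReceiveOnce(FakeCanClient* client, SimpleMessageManager* manager) {
  std::vector<CanFrame> batch;
  const std::size_t n = client->Receive(&batch, 10);
  for (const auto& f : batch) manager->Parse(f);
  return n;
}
```

Keeping I/O (client) and decoding (manager) separate means the manager can be tested with injected frames, as above, without any CAN hardware.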

1. Registering the callback in canbus.cc

In the Init() function of modules/canbus/canbus.cc:

```cpp
if (!FLAGS_receive_guardian) {
  // Subscribe to control commands; each one is handled by Canbus::OnControlCommand.
  AdapterManager::AddControlCommandCallback(&Canbus::OnControlCommand, this);
} else {
  AdapterManag
```
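The registration call above passes a member-function pointer together with `this`, so the callback can later be invoked on the right Canbus object. Here is a minimal sketch of how a registry can store and invoke such a pair. `CallbackRegistry`, `CanbusSketch`, and the one-field `ControlCommand` are hypothetical and are not Apollo's AdapterManager or Canbus. The `receive_guardian` parameter is a plain bool standing in for the gflag, and the else branch is not modeled.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical one-field command, for illustration only.
struct ControlCommand {
  double throttle = 0.0;
};

// Hypothetical registry with the same call shape as
// AddControlCommandCallback(&Canbus::OnControlCommand, this).
class CallbackRegistry {
 public:
  template <class T>
  void AddControlCommandCallback(void (T::*fn)(const ControlCommand&), T* obj) {
    // Bind the member-function pointer to the object inside a lambda.
    callbacks_.push_back([fn, obj](const ControlCommand& cmd) { (obj->*fn)(cmd); });
  }
  void Dispatch(const ControlCommand& cmd) const {
    for (const auto& cb : callbacks_) cb(cmd);
  }

 private:
  std::vector<std::function<void(const ControlCommand&)>> callbacks_;
};

class CanbusSketch {
 public:
  // Mirrors the first branch of Init(): subscribe only when receive_guardian is false.
  void Init(CallbackRegistry* registry, bool receive_guardian) {
    if (!receive_guardian) {
      registry->AddControlCommandCallback(&CanbusSketch::OnControlCommand, this);
    }
  }
  void OnControlCommand(const ControlCommand& cmd) {
    last_throttle_ = cmd.throttle;
    ++count_;
  }
  int count() const { return count_; }
  double last_throttle() const { return last_throttle_; }

 private:
  int count_ = 0;
  double last_throttle_ = 0.0;
};
```

Capturing `this` means the registry must not outlive the object. In a long-running node that is acceptable because both live for the whole process.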
