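I. Install KubeEdge

1 On both the master node and the edge node

1.1 My IP addresses and how to change the hostname

1. My cluster has one master and one worker; one master with several workers is better.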

| Node | IP address |
|---|---|
| Master | 192.168.120.99 |
| Worker (edge) | 192.168.120.100 |

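2. Changing the hostnames is recommended:

# set the hostname of the cloud and edge nodes respectively
# on the master
sudo hostnamectl set-hostname master
# on the edge node
sudo hostnamectl set-hostname edge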

1.2 Install Docker

1. Install

sudo apt-get -y update
sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common
# add the repository's GPG key; without it apt will not trust packages from this repository
curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
# add the repository that Docker is downloaded from
sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
# install Docker (docker.io is Ubuntu's own package; the repository above provides docker-ce)
sudo apt-get -y install docker.io
docker --version

2. Change the configuration and restart Docker

sudo gedit /etc/docker/daemon.json

(1). On the master node, add:

{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://reg-mirror.qiniu.com",
    "https://quay-mirror.qiniu.com"
  ],
  "exec-opts": ["native.cgroupdriver=systemd"]
}

(2). On the edge node, add the following, which sets the cgroup driver to cgroupfs:

{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://reg-mirror.qiniu.com",
    "https://quay-mirror.qiniu.com"
  ],
  "exec-opts": ["native.cgroupdriver=cgroupfs"]
}
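The two daemon.json files above differ only in the cgroup driver, so one template can produce either. A minimal POSIX shell sketch; write_daemon_json is a hypothetical helper name, and the mirror list is copied unchanged from above:

```shell
# Hypothetical helper: write_daemon_json <cgroup-driver> [output-path]
# Writes the daemon.json shown above with the given cgroup driver:
# "systemd" on the master, "cgroupfs" on the edge node.
write_daemon_json() {
  local driver="$1" out="${2:-/etc/docker/daemon.json}"
  case "$driver" in
    systemd|cgroupfs) ;;
    *) echo "unsupported cgroup driver: $driver" >&2; return 1 ;;
  esac
  # Unquoted EOF so that ${driver} is expanded inside the heredoc.
  cat > "$out" <<EOF
{
  "registry-mirrors": [
    "https://dockerhub.azk8s.cn",
    "https://reg-mirror.qiniu.com",
    "https://quay-mirror.qiniu.com"
  ],
  "exec-opts": ["native.cgroupdriver=${driver}"]
}
EOF
}
```

For example, on the master run write_daemon_json systemd /tmp/daemon.json and then sudo cp /tmp/daemon.json /etc/docker/daemon.json; on the edge node pass cgroupfs instead. Writing to a temporary file first avoids having to run the shell function itself under sudo.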

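(3). Restart

After changing the Docker configuration, restart Docker:

sudo systemctl daemon-reload
sudo systemctl restart docker

If KubeEdge is already installed, also restart cloudcore on the master and edgecore on the edge node:

sudo systemctl restart cloudcore
sudo systemctl restart edgecore

Check the Docker cgroup driver after the change:

docker info | grep Cgroup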
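To compare the reported driver with the one each node should use, the Cgroup Driver line can be extracted from the docker info output. A sketch with hypothetical helper names; expect_cgroup_driver assumes the Docker daemon is running:

```shell
# Hypothetical helper: reads `docker info` output on stdin and prints the
# value of its "Cgroup Driver:" line (systemd or cgroupfs).
cgroup_driver_from_info() {
  awk -F': ' '/^[ \t]*Cgroup Driver:/ { print $2; exit }'
}

# Hypothetical helper: expect_cgroup_driver <expected-driver>
# Fails with a message if Docker reports a different cgroup driver.
expect_cgroup_driver() {
  local expected="$1" actual
  actual=$(docker info 2>/dev/null | cgroup_driver_from_info)
  if [ "$actual" != "$expected" ]; then
    echo "cgroup driver is '${actual:-unknown}', expected '$expected'" >&2
    return 1
  fi
  echo "cgroup driver OK: $actual"
}
```

Run expect_cgroup_driver systemd on the master and expect_cgroup_driver cgroupfs on the edge node. docker info --format '{{.CgroupDriver}}' prints the same value directly.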

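1.3 Download the KubeEdge release files

I use version 1.10.0.

First download keadm-v1.10.0-linux-amd64.tar.gz and kubeedge-v1.10.0-linux-amd64.tar.gz to the host machine from the releases page:

https://github.com/kubeedge/kubeedge/releases

When you reach step 2.4.1, copy these two files into the corresponding directory of the virtual machine with Xftp (or another tool).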

2 On the master node

2.1 kubelet, kubeadm, kubectl

Run the following as root:

apt-get update && apt-get install -y apt-transport-https
# download the signing key of the mirror
curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
# add the Kubernetes mirror
cat <<EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
apt-get update
apt-get install -y kubelet=1.21.5-00 kubeadm=1.21.5-00 kubectl=1.21.5-00

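Initialize the cluster

Only --apiserver-advertise-address needs to be changed, to the IP address of the master. Note that --pod-network-cidr must match the Network value in the flannel configuration in 2.2 (10.244.0.0/16).

kubeadm init \
  --apiserver-advertise-address=192.168.120.99 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version v1.21.5 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16

At the end of its output, kubeadm init recommends the following commands; run them. The first three configure kubectl for a regular user, and the export line is the alternative when working as root:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
export KUBECONFIG=/etc/kubernetes/admin.conf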
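The service CIDR and the pod CIDR passed to kubeadm init should not overlap. A minimal POSIX shell sketch of that check, treating IPv4 addresses as 32-bit integers; the helper names are hypothetical, and IPv6 as well as octets with leading zeros are not handled:

```shell
# Hypothetical helper: ip_to_int <a.b.c.d> prints the address as an integer.
ip_to_int() {
  local ip="$1" a b c d
  a=${ip%%.*}; ip=${ip#*.}
  b=${ip%%.*}; ip=${ip#*.}
  c=${ip%%.*}; d=${ip#*.}
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: cidr_range <a.b.c.d/len> prints "first last" as integers.
cidr_range() {
  local ip="${1%/*}" len="${1#*/}" base size
  base=$(ip_to_int "$ip")
  size=$(( 1 << (32 - len) ))
  base=$(( base & ~(size - 1) & 0xFFFFFFFF ))   # align to the block start
  echo "$base $(( base + size - 1 ))"
}

# Hypothetical helper: cidrs_overlap <cidr1> <cidr2>
# Succeeds (exit status 0) if the two blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # first1 last1 first2 last2
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}
```

With the values used here, cidrs_overlap 10.96.0.0/12 10.244.0.0/16 returns a non-zero status, i.e. the ranges 10.96.0.0 to 10.111.255.255 and 10.244.0.0 to 10.244.255.255 are disjoint.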

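2.2 Install the flannel network plugin

2.2.1 Method 1

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

2.2.2 Method 2

Create a file named flannel.yaml, paste the following into it, then apply it. In this manifest the DaemonSet is named kube-flannel-cloud-ds and its node affinity excludes nodes labeled node-role.kubernetes.io/agent, so flannel is scheduled onto the cloud nodes but not onto the KubeEdge edge nodes.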

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-cloud-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
              - key: node-role.kubernetes.io/agent # mind the indentation
                operator: DoesNotExist
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.19.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

kubectl apply -f flannel.yaml
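Because flannel's Network must equal the --pod-network-cidr passed to kubeadm init in 2.1, it can be worth checking flannel.yaml before applying it with method 2. A sketch with hypothetical helper names that reads the first "Network" value from the manifest with sed:

```shell
# Hypothetical helper: flannel_network <manifest>
# Prints the first "Network" value found in the manifest (net-conf.json).
flannel_network() {
  sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Hypothetical helper: check_pod_cidr <manifest> <pod-network-cidr>
# Fails if flannel's Network differs from the CIDR given to kubeadm init.
check_pod_cidr() {
  local net
  net=$(flannel_network "$1")
  if [ "$net" != "$2" ]; then
    echo "flannel Network '${net:-none}' does not match pod CIDR '$2'" >&2
    return 1
  fi
  echo "flannel Network matches: $net"
}
```

For example: check_pod_cidr flannel.yaml 10.244.0.0/16 && kubectl apply -f flannel.yaml.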

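2.2.3 If it fails, remove flannel and configure it again

kubectl delete -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If you used method 2, run kubectl delete -f flannel.yaml instead.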

2.3 Check that Kubernetes is working

kubectl get pods -n kube-system

kubectl get nodes
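To check the node list mechanically rather than by eye, the STATUS column can be filtered with awk. A sketch; not_ready_nodes is a hypothetical helper that expects kubectl get nodes --no-headers output (columns NAME STATUS ROLES AGE VERSION):

```shell
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on stdin
# (NAME STATUS ROLES AGE VERSION), prints every node whose STATUS is not
# exactly "Ready", and exits non-zero if there is at least one.
not_ready_nodes() {
  awk 'NF && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

kubectl get nodes --no-headers | not_ready_nodes prints nothing when every node is Ready. To block until they are, kubectl wait --for=condition=Ready node --all --timeout=300s is the built-in alternative.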

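2.4 Set up KubeEdge

2.4.1 Copy the previously downloaded files into /etc/kubeedge/ and extract them

# create the directory
mkdir /etc/kubeedge/
# enter it
cd /etc/kubeedge/
# make it writable so the two files can be copied in (e.g. with Xftp)
chmod 777 /etc/kubeedge/
# extract keadm
tar -zxvf keadm-v1.10.0-linux-amd64.tar.gz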

2.4.2 Add keadm to the PATH

cd /etc/kubeedge/keadm-v1.10.0-linux-amd64/keadm
# copy keadm into a directory on the PATH so it can be run from anywhere
cp keadm /usr/sbin/

2.4.3 Initialize

cd /etc/kubeedge/keadm-v1.10.0-linux-amd64/keadm

sudo keadm init --advertise-address=192.168.120.99 --kubeedge-version=1.10.0

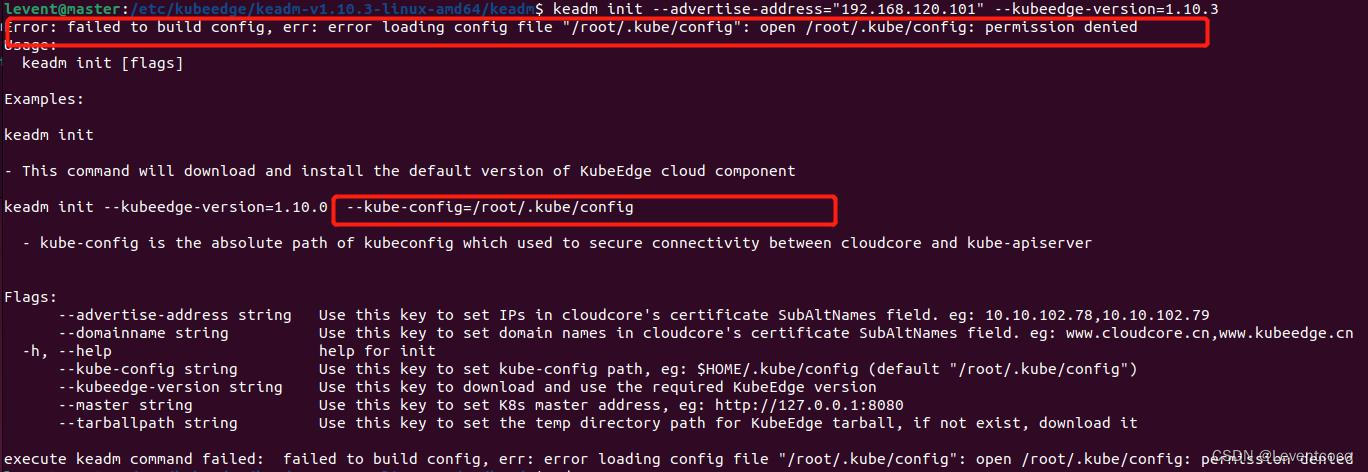

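If it reports an error, read the cause carefully. In my case keadm had to be pointed at the kubeconfig explicitly, so the correct initialization command was:

sudo keadm init --advertise-address="192.168.120.99" --kubeedge-version=1.10.0 --kube-config=$HOME/.kube/config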

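2.4.4 Check that cloudcore is running

ps -ef | grep cloudcore

2.4.5 View the cloudcore logs

journalctl -u cloudcore.service -xe
vim /var/log/kubeedge/cloudcore.log

2.4.6 Check the listening ports

netstat -tpnl

The output should include the two ports cloudcore listens on.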
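The port check can also be scripted. A sketch assuming the two ports are cloudcore's defaults, 10000 (the port edge nodes use in 3.2) and 10002; ports_listening is a hypothetical helper that reads netstat -tpnl output on stdin:

```shell
# Hypothetical helper: ports_listening <port>...
# Reads `netstat -tpnl` output on stdin and succeeds only if every given
# TCP port has a LISTEN entry (IPv4 or IPv6 local address).
ports_listening() {
  local listing port missing=0
  listing=$(cat)
  for port in "$@"; do
    # Column 4 is the local address, column 6 the state; the port is the
    # text after the last ":" (this also covers ":::10000" on tcp6).
    if ! printf '%s\n' "$listing" | awk -v p="$port" '
        $6 == "LISTEN" { n = split($4, a, ":"); if (a[n] == p) found = 1 }
        END { exit(found ? 0 : 1) }'; then
      echo "port $port is not listening" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: netstat -tpnl | ports_listening 10000 10002.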

2.4.7 Check the service status

systemctl status cloudcore

2.4.8 Enable start on boot

# start cloudcore
systemctl start cloudcore
# enable start on boot
systemctl enable cloudcore.service
# check whether start on boot is enabled (enabled: on, disabled: off)
systemctl is-enabled cloudcore.service

2.4.9 Get the token

The token is used in 3.2 when the edge node joins:

sudo keadm gettoken

3 On the edge node

3.1 Copy the files and extract them

sudo mkdir /etc/kubeedge/

Put kubeedge-v1.10.0-linux-amd64.tar.gz and keadm-v1.10.0-linux-amd64.tar.gz into this directory, then extract keadm:

cd /etc/kubeedge/
sudo tar -zxvf keadm-v1.10.0-linux-amd64.tar.gz

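3.2 Join the edge node to the master

1. Enter the directory

cd /etc/kubeedge/keadm-v1.10.0-linux-amd64/keadm/

2. Join

Keep the port in --cloudcore-ipport as it is and adjust the other values to your setup; --token takes the token generated in 2.4.9. On the edge node keadm was not copied onto the PATH, so run it from this directory:

sudo ./keadm join --cloudcore-ipport=192.168.120.99:10000 --edgenode-name=node --kubeedge-version=1.10.0 --token=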
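An empty --token, or a node name that is not a lowercase DNS-style name (which Kubernetes node names must be), are easy mistakes in the join command. A sketch of a hypothetical wrapper that checks both before running it; it assumes it is run from the keadm directory entered in step 1, and DRY_RUN=1 only prints the command:

```shell
# Hypothetical wrapper: edge_join <cloudcore-ip> <edge-node-name> <token>
# Runs the keadm join command from 3.2 after basic checks. It assumes the
# current directory is the keadm directory from step 1 (hence ./keadm).
# With DRY_RUN=1 it only prints the command.
edge_join() {
  local ip="$1" name="$2" token="$3"
  if [ -z "$token" ]; then
    echo "empty token: run 'sudo keadm gettoken' on the master (2.4.9)" >&2
    return 1
  fi
  # Lowercase letters, digits, '.' and '-', not starting or ending with '.'/'-'.
  case "$name" in
    ""|*[!abcdefghijklmnopqrstuvwxyz0123456789.-]*|[.-]*|*[.-])
      echo "invalid edge node name: '$name'" >&2
      return 1 ;;
  esac
  set -- ./keadm join "--cloudcore-ipport=${ip}:10000" \
    "--edgenode-name=${name}" --kubeedge-version=1.10.0 "--token=${token}"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    sudo "$@"
  fi
}
```

For example, set TOKEN to the output of keadm gettoken on the master, review the command with DRY_RUN=1 edge_join 192.168.120.99 node "$TOKEN", then repeat the call without DRY_RUN=1.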

Debug: for flannel to reach http://127.0.0.1:10550, you may need to enable the metaServer feature of EdgeCore in its configuration file. If no error is reported, skip this.

sudo gedit /etc/kubeedge/config/edgecore.yaml
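Before editing, the current setting can be read from the file. A sketch assuming the enable key sits directly under a metaServer: key (inside metaManager in edgecore.yaml); metaserver_enable_value is a hypothetical helper that follows the YAML indentation with awk rather than using a real YAML parser:

```shell
# Hypothetical helper: metaserver_enable_value <edgecore.yaml>
# Prints the value of the "enable" key directly under "metaServer:"
# (nothing if there is no metaServer block).
metaserver_enable_value() {
  awk '
    /^[ \t]*metaServer:[ \t]*$/ {
      match($0, /^[ \t]*/); base = RLENGTH; inblk = 1; next
    }
    inblk {
      if ($0 ~ /^[ \t]*$/) next                # skip blank lines
      match($0, /^[ \t]*/); ind = RLENGTH
      if (ind <= base) exit                    # left the metaServer block
      if (child == 0) child = ind              # indentation of its keys
      if (ind == child && $1 == "enable:") { print $2; exit }
    }
  ' "$1"
}
```

metaserver_enable_value /etc/kubeedge/config/edgecore.yaml prints false or true. If it prints false and flannel cannot reach 127.0.0.1:10550, set enable: true under metaServer and restart edgecore with sudo systemctl restart edgecore.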

3.3 Check

# start edgecore
systemctl start edgecore
# enable start on boot
systemctl enable edgecore.service
# check whether start on boot is enabled (enabled: on, disabled: off)
systemctl is-enabled edgecore
# view the status
systemctl status edgecore
# view the logs
journalctl -u edgecore.service -b

3.4 Check from the master

Every node should be Ready and every pod Running.

kubectl get nodes

kubectl get pod -n kube-system
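Pods can be checked the same way. A sketch; unhealthy_pods is a hypothetical awk filter over kubectl get pods --no-headers output (columns NAME READY STATUS RESTARTS AGE, so without -A) that lists pods which are neither Running with all containers ready nor Completed:

```shell
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# (NAME READY STATUS RESTARTS AGE, i.e. without -A) and prints each pod that
# is neither Running with all containers ready nor Completed.
# Exits non-zero if any such pod is found.
unhealthy_pods() {
  awk 'NF {
    split($2, r, "/")                      # READY column, e.g. "1/1"
    if (($3 == "Running" && r[1] == r[2]) || $3 == "Completed") next
    print $1, $2, $3
    bad = 1
  } END { exit bad }'
}
```

Usage: kubectl get pod -n kube-system --no-headers | unhealthy_pods. The same filter works for the sedna namespace in section III.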

4 KubeEdge installation complete

4.1 A high-quality blog post on KubeEdge

https://blog.youkuaiyun.com/qq_43475285/article/details/126760865

4.2 Debug

1. The connection to the server…:6443 was refused - did you specify the right host or port?

II. Install EdgeMesh

Follow the official guide:

https://edgemesh.netlify.app/zh/guide

I recommend the manual installation rather than the Helm installation.

III. Install Sedna

1 About Sedna

https://github.com/kubeedge/sedna

2 Install Sedna

2.1 Install following the official guide

Normally, following the official guide installs Sedna without problems.

(1). With a good network connection, this single command completes the installation:

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=create bash -

(2). With a poor network connection, run the following instead, which sets the Sedna directory to /opt/sedna, and see 2.2:

# SEDNA_ROOT is the sedna git source directory or cached directory
export SEDNA_ROOT=/opt/sedna
curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=create bash -

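2.2 Fixing network timeouts

See this solution:

https://zhuanlan.zhihu.com/p/516314772

2.3 Uninstall and reinstall

Remove Sedna with the command below, then run the create command from 2.1 again:

curl https://raw.githubusercontent.com/kubeedge/sedna/main/scripts/installation/install.sh | SEDNA_ACTION=delete bash -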

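3 Check

kubectl get deploy -n sedna gm
kubectl get ds lc -n sedna
kubectl get pod -n sedna

My cluster currently has one master and one edge node: gm (global manager) runs only on the cloud node, while lc (local controller), a DaemonSet, runs on both the cloud node and the edge node. Larger clusters follow the same pattern.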

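IV. Sedna lifelong learning: the thermal comfort example

1 Example and documentation

(1). Official guide to the thermal comfort example

(2). Official documentation of the thermal comfort example

(3). Source code of each release (for further development)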

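2 Prepare the dataset

Download and extract it on the node given as nodeName below, since the Dataset URL is a local path on that node:

mkdir -p /data
cd /data
wget https://kubeedge.obs.cn-north-1.myhuaweicloud.com/examples/atcii-classifier/dataset.tar.gz
tar -zxvf dataset.tar.gz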
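A quick sanity check that both files used later (/data/trainData.csv for the Dataset, /data/testData.csv for inference) were extracted. A sketch assuming each CSV starts with a header line; csv_rows is a hypothetical helper:

```shell
# Hypothetical helper: csv_rows <file>
# Prints the number of data rows (all lines except the assumed header line),
# or fails if the file is missing or empty.
csv_rows() {
  if [ ! -s "$1" ]; then
    echo "missing or empty: $1" >&2
    return 1
  fi
  awk 'END { print NR - 1 }' "$1"
}
```

For example: for f in /data/trainData.csv /data/testData.csv; do echo "$f: $(csv_rows "$f") rows"; done.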

3 Create Job

3.1 Create Dataset

kubectl create -f - <<EOF
apiVersion: sedna.io/v1alpha1
kind: Dataset
metadata:
  name: lifelong-dataset8
spec:
  url: "/data/trainData.csv"
  format: "csv"
  nodeName: "iai-master"
EOF

3.2 Start Lifelong Learning Job

Things to adjust:

1. nodeName: the node name, which kubectl get node shows

2. image version: 0.5.0 is used here

3. dnsPolicy: ClusterFirstWithHostNet

kubectl create -f - <<EOF
apiVersion: sedna.io/v1alpha1
kind: LifelongLearningJob
metadata:
  name: atcii-classifier-demo8
spec:
  dataset:
    name: "lifelong-dataset8"
    trainProb: 0.8
  trainSpec:
    template:
      spec:
        nodeName: "iai-master"
        dnsPolicy: ClusterFirstWithHostNet
        containers:
          - image: kubeedge/sedna-example-lifelong-learning-atcii-classifier:v0.5.0
            name: train-worker
            imagePullPolicy: IfNotPresent
            args: ["train.py"]  # training script
            env:  # Hyperparameters required for training
              - name: "early_stopping_rounds"
                value: "100"
              - name: "metric_name"
                value: "mlogloss"
    trigger:
      checkPeriodSeconds: 60
      timer:
        start: 00:01
        end: 24:00
      condition:
        operator: ">"
        threshold: 500
        metric: num_of_samples
  evalSpec:
    template:
      spec:
        nodeName: "iai-master"
        dnsPolicy: ClusterFirstWithHostNet
        containers:
          - image: kubeedge/sedna-example-lifelong-learning-atcii-classifier:v0.5.0
            name: eval-worker
            imagePullPolicy: IfNotPresent
            args: ["eval.py"]
            env:
              - name: "metrics"
                value: "precision_score"
              - name: "metric_param"
                value: "{'average': 'micro'}"
              - name: "model_threshold"  # Threshold for filtering deploy models
                value: "0.5"
  deploySpec:
    template:
      spec:
        nodeName: "iai-master"
        dnsPolicy: ClusterFirstWithHostNet
        containers:
          - image: kubeedge/sedna-example-lifelong-learning-atcii-classifier:v0.5.0
            name: infer-worker
            imagePullPolicy: IfNotPresent
            args: ["inference.py"]
            env:
              - name: "UT_SAVED_URL"  # unseen tasks save path
                value: "/ut_saved_url"
              - name: "infer_dataset_url"  # simulation of the inference samples
                value: "/data/testData.csv"
            volumeMounts:
              - name: utdir
                mountPath: /ut_saved_url
              - name: inferdata
                mountPath: /data/
            resources:  # user defined resources
              limits:
                memory: 2Gi
        volumes:  # user defined volumes
          - name: utdir
            hostPath:
              path: /lifelong/unseen_task/
              type: DirectoryOrCreate
          - name: inferdata
            hostPath:
              path: /data/
              type: DirectoryOrCreate
  outputDir: "/output"
EOF
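nodeName appears in all three specs above (and in the Dataset), so on a cluster whose node is not called iai-master it can be easier to save the YAML between the EOF markers to a file and substitute the name once. A sketch; set_node_name and the file name lifelong-job.yaml used below are hypothetical:

```shell
# Hypothetical helper: set_node_name <manifest> <node-name>
# Prints the manifest with the value of every "nodeName:" line replaced,
# keeping the original indentation.
set_node_name() {
  local manifest="$1" node="$2"
  # Reject names that are not lowercase DNS-style (this also keeps sed safe).
  case "$node" in
    ""|*[!abcdefghijklmnopqrstuvwxyz0123456789.-]*|[.-]*|*[.-])
      echo "invalid node name: '$node'" >&2
      return 1 ;;
  esac
  sed -E "s/^([[:space:]]*nodeName:[[:space:]]*).*/\1\"${node}\"/" "$manifest"
}
```

For example: set_node_name lifelong-job.yaml master | kubectl create -f -.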

4 Check

4.1 Check the status

(1). Job status

kubectl get lifelonglearningjob atcii-classifier-demo8
kubectl get ll

(2). Pod status

All pods:

kubectl get pods -o wide -A

The three pods of this job, for train, eval, and infer respectively:

kubectl get pod

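4.2 Check the results

(1). The inference results are in the /data directory.

(2). The multi-task model files, training set, validation set, and so on are in the /output directory.

(3). This example also implements unseen-sample detection; the deploy spec above mounts the host directory /lifelong/unseen_task/ for the unseen tasks. Locate the saved samples with:

find / -name unseen_sample.csv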

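V. More on Sedna lifelong learning

1. My other article, "Sedna Lifelong Learning and a KubeEdge Overview", summarizes KubeEdge, Sedna, and Sedna's lifelong learning.

2. I contributed a tutorial for the thermal comfort prediction lifelong learning example to the Sedna repository; it explains the configuration files in detail and shows how to customize the lifelong learning code for your own needs.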

VI. Summary of common commands

1. Inspect a pod and view its logs

kubectl describe pod <your-pod-name> -n <namespace>
kubectl logs -f <your-pod-name> -n <namespace>

2. Force-delete pods

kubectl delete po <your-pod-name> -n <namespace> --force --grace-period=0
kubectl delete --all pods --namespace=<namespace>

3. Restart a pod

kubectl get pod <your-pod-name> -n <namespace> -o yaml | kubectl replace --force -f -

4. Images and package mirrors

List images:

docker images

Force-remove an image:

docker rmi -f <image_id>

Use the Tsinghua PyPI mirror with pip:

pip install scikit-learn==0.24.1 -i https://pypi.tuna.tsinghua.edu.cn/simple

Install the pycocotools library:

conda install -c conda-forge pycocotools

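This article walks through the installation of KubeEdge, including setting up the master and edge nodes, installing and configuring Docker, and installing the flannel network plugin. It then covers the installation of EdgeMesh and focuses on installing Sedna and running a lifelong learning thermal comfort example, including data preparation, job creation, and checking the results. Finally, it lists related resources and a summary of common commands.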
