目录

一、概述

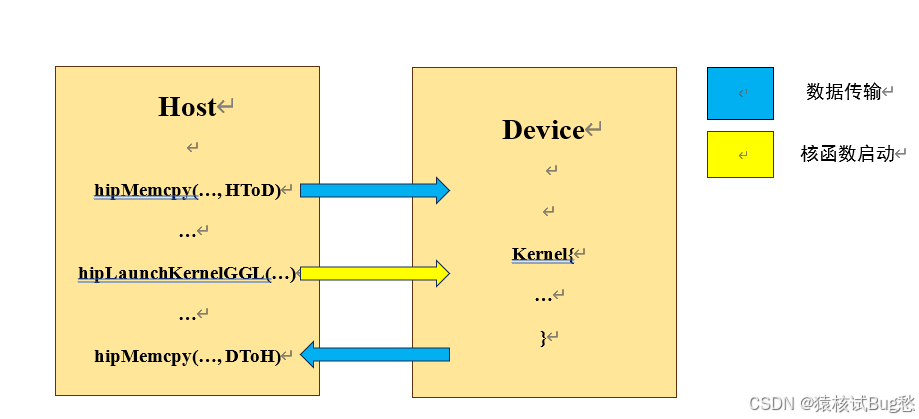

HIP属于显式编程模型,需要在程序中明确写出并行控制语句,包括数据传输、核函数启动等。核函数是运行在DCU上的函数,在CPU端运行的部分称为主机端(主要是执行管理和启动),DCU端运行的部分称为设备端(用于执行计算)。大概的流程如下图:

①主机端将需要并行计算的数据通过hipMemcpy()传递给DCU(将CPU存储的内容传递给DCU的显存);

②调用核函数启动函数hipLaunchKernelGGL()启动DCU,开始执行计算;

③设备端将计算好的结果数据通过hipMemcpy()从DCU复制回CPU。

hipMemcpy()是阻塞式的,数据复制完成后才可以执行后续的程序;hipLanuchKernelGGL()是非阻塞式的,执行完后程序继续向后执行,但是在Kernel没有计算完成之前,最后一个hipMemcpy()是不会开始的,这是由于HIP的Stream机制。

二、程序实现

下面是对归约的具体实现,reduce.cpp:

#include <stdio.h>

#include <stdlib.h>

#include <hip/hip_runtime.h>

#include <hip/hip_runtime_api.h>

int recursiveReduce(int *data, int const size)

{

if (size == 1) return data[0];

int const stride = size / 2;

for (int i = 0; i < stride; i++)

{

data[i] += data[i + stride];

}

return recursiveReduce(data, stride);

}

__global__ void reduceNeighbored (int *g_idata, int *g_odata, unsigned int n)

{

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

int *idata = g_idata + blockIdx.x * blockDim.x;

if (idx >= n) return;

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

if ((tid % (2 * stride)) == 0)

{

idata[tid] += idata[tid + stride];

}

__syncthreads();

}

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceNeighboredLess (int *g_idata, int *g_odata,

unsigned int n)

{

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

int *idata = g_idata + blockIdx.x * blockDim.x;

if(idx >= n) return;

for (int stride = 1; stride < blockDim.x; stride *= 2)

{

int index = 2 * stride * tid;

if (index < blockDim.x)

{

idata[index] += idata[index + stride];

}

__syncthreads();

}

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

__global__ void reduceInterleaved (int *g_idata, int *g_odata, unsigned int n)

{

unsigned int tid = threadIdx.x;

unsigned int idx = blockIdx.x * blockDim.x + threadIdx.x;

int *idata = g_idata + blockIdx.x * blockDim.x;

if(idx >= n) return;

for (int stride = blockDim.x / 2; stride > 0; stride >>= 1)

{

if (tid < stride)

{

idata[tid] += idata[tid + stride];

}

__syncthreads();

}

if (tid == 0) g_odata[blockIdx.x] = idata[0];

}

int main(int argc, char **argv)

{

int dev = 0;

bool bResult = false;

int size = 1 << 24;

printf(" with array size %d ", size);

int blocksize = 512;

dim3 block (blocksize, 1);

dim3 grid ((size + block.x - 1) / block.x, 1);

printf("grid %d block %d\n", grid.x, block.x);

hipEvent_t start_time,stop_time;

hipEventCreate(&start_time);

hipEventCreate(&stop_time);

size_t bytes = size * sizeof(int);

int *h_idata = (int *) malloc(bytes);

int *h_odata = (int *) malloc(grid.x * sizeof(int));

int *tmp = (int *) malloc(bytes);

float time_elapsed = 1.0f ;

for (int i = 0; i < size; i++)

{

h_idata[i] = (int)( rand() & 0xFF );

}

memcpy (tmp, h_idata, bytes);

int gpu_sum = 0;

int *d_idata = NULL;

int *d_odata = NULL;

hipMalloc((void **) &d_idata, bytes);

hipMalloc((void **) &d_odata, grid.x * sizeof(int));

hipEventRecord(start_time,NULL);

int cpu_sum = recursiveReduce (tmp, size);

hipEventRecord(stop_time,NULL);

hipEventElapsedTime(&time_elapsed, start_time,stop_time);

printf("cpu reduce elapsed %f sec cpu_sum: %d\n", time_elapsed, cpu_sum);

hipMemcpy(d_idata, h_idata, bytes, hipMemcpyHostToDevice);

hipDeviceSynchronize();

hipEventRecord(start_time,NULL);

hipLaunchKernelGGL(reduceNeighbored,grid,block,0,0,d_idata,d_odata,size);

hipDeviceSynchronize();

hipEventRecord(stop_time,NULL);

hipEventElapsedTime(&time_elapsed, start_time,stop_time);

hipMemcpy(h_odata, d_odata, grid.x * sizeof(int),hipMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++) gpu_sum += h_odata[i];

printf("gpu Neighbored elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", time_elapsed, gpu_sum, grid.x, block.x);

hipMemcpy(d_idata, h_idata, bytes, hipMemcpyHostToDevice);

hipDeviceSynchronize();

hipEventRecord(start_time,NULL);

hipLaunchKernelGGL(reduceNeighboredLess,grid,block,0,0,d_idata,d_odata,size);

hipDeviceSynchronize();

hipEventRecord(stop_time,NULL);

hipEventElapsedTime(&time_elapsed, start_time,stop_time);

hipMemcpy(h_odata, d_odata, grid.x * sizeof(int),hipMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++) gpu_sum += h_odata[i];

printf("gpu Neighbored2 elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", time_elapsed, gpu_sum, grid.x, block.x);

hipMemcpy(d_idata, h_idata, bytes, hipMemcpyHostToDevice);

hipDeviceSynchronize();

hipEventRecord(start_time,NULL);

hipLaunchKernelGGL(reduceInterleaved,grid,block,0,0,d_idata,d_odata,size);

hipDeviceSynchronize();

hipEventRecord(stop_time,NULL);

hipEventElapsedTime(&time_elapsed, start_time,stop_time);

hipMemcpy(h_odata, d_odata, grid.x * sizeof(int),hipMemcpyDeviceToHost);

gpu_sum = 0;

for (int i = 0; i < grid.x; i++) gpu_sum += h_odata[i];

printf("gpu Interleaved elapsed %f sec gpu_sum: %d <<<grid %d block "

"%d>>>\n", time_elapsed, gpu_sum, grid.x, block.x);

hipEventDestroy(start_time);

hipEventDestroy(stop_time);

free(h_idata);

free(h_odata);

hipFree(d_idata);

hipFree(d_odata);

hipDeviceReset();

bResult = (gpu_sum == cpu_sum);

if(!bResult)

{

printf("Test failed!\n");

}

else

{

printf("Test success!\n");

}

return 0;

}

三、编译运行

HIP程序采用hipcc编译。

![]()

运行结果:

689

689

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?