MLM:掩码语言模型,使用Mask LM预训练任务来预训练Bert模型。基于pytorch框架,训练关于垂直领域语料的预训练语言模型,目的是提升下游任务的表现。

思路:就是对bert模型在特定领域继续训练,本代码是在bert-base的基础上进行微调,首先下载bert-base模型,embedding维度768,其次,准备特定领域的数据作为训练集、验证集和测试集。

MaskLM

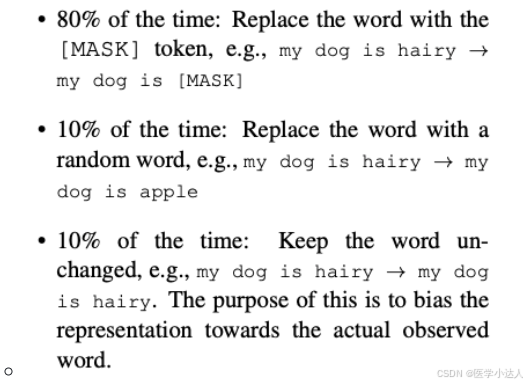

使用来自于Bert的mask机制,即对于每一个句子中的词(token):

- 85%的概率,保留原词不变

- 15%的概率,使用以下方式替换

- 80%的概率,使用字符

[MASK],替换当前token。- 10%的概率,使用词表随机抽取的token,替换当前token。

- 10%的概率,保留原词不变。

1.model框架

BertForMaskedLM.py

from dataclasses import dataclass

from typing import Optional, Tuple

import torch

import torch.utils.checkpoint

from torch import nn

from torch.nn import BCEWithLogitsLoss, CrossEntropyLoss, MSELoss

from transformers import BertPreTrainedModel, BertModel

from transformers.file_utils import add_code_sample_docstrings, add_start_docstrings, add_start_docstrings_to_model_forward

from transformers.modeling_outputs import MaskedLMOutput

from transformers.activations import ACT2FN

_CHECKPOINT_FOR_DOC = "bert-base-uncased"

_CONFIG_FOR_DOC = "BertConfig"

_TOKENIZER_FOR_DOC = "BertTokenizer"

BERT_START_DOCSTRING = r"""

This model inherits from :class:`~transformers.PreTrainedModel`. Check the superclass documentation for the generic

methods the library implements for all its model (such as downloading or saving, resizing the input embeddings,

pruning heads etc.)

This model is also a PyTorch `torch.nn.Module <https://pytorch.org/docs/stable/nn.html#torch.nn.Module>`__

subclass. Use it as a regular PyTorch Module and refer to the PyTorch documentation for all matter related to

general usage and behavior.

Parameters:

config (:class:`~transformers.BertConfig`): Model configuration class with all the parameters of the model.

Initializing with a config file does not load the weights associated with the model, only the

configuration. Check out the :meth:`~transformers.PreTrainedModel.from_pretrained` method to load the model

weights.

"""

BERT_INPUTS_DOCSTRING = r"""

Args:

input_ids (:obj:`torch.LongTensor` of shape :obj:`({0})`):

Indices of input sequence tokens in the vocabulary.

Indices can be obtained using :class:`~transformers.BertTokenizer`. See

:meth:`transformers.PreTrainedTokenizer.encode` and :meth:`transformers.PreTrainedTokenizer.__call__` for

details.

`What are input IDs? <../glossary.html#input-ids>`__

attention_mask (:obj:`torch.FloatTensor` of shape :obj:`({0})`, `optional`):

Mask to avoid performing attention on padding token indices. Mask values selected in ``[0, 1]``:

- 1 for tokens that are **not masked**,

- 0 for tokens that are **masked**.

`What are attention masks? <../glossary.html#attention-mask>`__

token_type_ids (:obj:`torch.LongTensor` of shape :obj:`({0})`, `optional`):

Segment token indices to indicate first and second portions of the inputs. Indices are selected in ``[0,

1]``:

- 0 corresponds to a `sentence A` token,

- 1 corresponds to a `sentence B` token.

`What are token type IDs? <../glossary.html#token-type-ids>`_

position_ids (:obj:`torch.LongTensor` of shape :obj:`({0})`, `optional`):

Indices of positions of each input sequence tokens in the position embeddings. Selected in the range ``[0,

config.max_position_embeddings - 1]``.

`What are position IDs? <../glossary.html#position-ids>`_

head_mask (:obj:`torch.FloatTensor` of shape :obj:`(num_heads,)` or :obj:`(num_layers, num_heads)`, `optional`):

Mask to nullify selected heads of the self-attention modules. Mask values selected in ``[0, 1]``:

- 1 indicates the head is **not masked**,

- 0 indicates the head is **masked**.

inputs_embeds (:obj:`torch.FloatTensor` of shape :obj:`({0}, hidden_size)`, `optional`):

Optionally, instead of passing :obj:`input_ids` you can choose to directly pass an embedded representation.

This is useful if you want more control over how to convert :obj:`input_ids` indices into associated

vectors than the model's internal embedding lookup matrix.

output_attentions (:obj:`bool`, `optional`):

Whether or not to return the attentions tensors of all attention layers. See ``attentions`` under returned

tensors for more detail.

output_hidden_states (:obj:`bool`, `optional`):

Whether or not to return the hidden states of all layers. See ``hidden_states`` under returned tensors for

more detail.

return_dict (:obj:`bool`, `optional`):

Whether or not to return a :class:`~transformers.file_utils.ModelOutput` instead of a plain tuple.

"""

@add_start_docstrings("""Bert Model with a `language modeling` head on top. """, BERT_START_DOCSTRING)

class BertForMaskedLM(BertPreTrainedModel):

_keys_to_ignore_on_load_unexpected = [r"pooler"]

_keys_to_ignore_on_load_missing = [r"position_ids", r"predictions.decoder.bias"]

def __init__(self, config):

super().__init__(config)

self.bert = BertModel(config, add_pooling_layer=False)

self.cls = BertOnlyMLMHead(config)

self.init_weights()

def get_output_embeddings(self):

return self.cls.predictions.decoder

def set_output_embeddings(self, new_embeddings):

self.cls.predictions.decoder = new_embeddings

@add_start_docstrings_to_model_forward(BERT_INPUTS_DOCSTRING.format("batch_size, sequence_length"))

# @add_code_sample_docstrings(

# tokenizer_class=_TOKENIZER_FOR_DOC,

# checkpoint=_CHECKPOINT_FOR_DOC,

# output_type=MaskedLMOutput,

# config_class=_CONFIG_FOR_DOC,

# )

def forward(

self,

input_ids=None,

attention_mask=None,

token_type_ids=None,

position_ids=None,

head_mask=None,

inputs_embeds=None,

encoder_hidden_states=None,

encoder_attention_mask=None,

labels=None,

output_attentions=None,

output_hidden_states=None,

return_dict=None,

):

r"""

labels (:obj:`torch.LongTensor` of shape :obj:`(batch_size, sequence_length)`, `optional`):

Labels for computing the masked language modeling loss. Indices should be in ``[-100, 0, ...,

config.vocab_size]`` (see ``input_ids`` docstring) Tokens with indices set to ``-100`` are ignored

(masked), the loss is only computed for the tokens with labels in ``[0, ..., config.vocab_size]``

"""

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

outputs = self.bert(

input_ids,

attention_mask=attention_mask,

token_type_ids=token_type_ids,

position_ids=position_ids,

head_mask=head_mask,

inputs_embeds=inputs_embeds,

encoder_hidden_states=encoder_hidden_states,

encoder_attention_mask=encoder_attention_mask,

output_attentions=output_attentions,

output_hidden_states=output_hidden_states,

return_dict=return_dict,

)

sequence_output = outputs[0]

prediction_scores = self.cls(sequence_output)

masked_lm_loss = None

if labels is not None:

loss_fct = CrossEntropyLoss() # -100 index = padding token

masked_lm_loss = loss_fct(prediction_scores.view(-1, self.config.vocab_size), labels.view(-1))

if not return_dict:

output = (prediction_scores,) + outputs[2:]

return ((masked_lm_loss,) + output) if masked_lm_loss is not None else output

return MaskedLMOutput(

loss=masked_lm_loss,

logits=prediction_scores,

hidden_states=outputs.hidden_states,

attentions=outputs.attentions,

)

def prepare_inputs_for_generation(self, input_ids, attention_mask=None, **model_kwargs):

input_shape = input_ids.shape

effective_batch_size = input_shape[0]

# add a dummy token

assert self.config.pad_token_id is not None, "The PAD token should be defined for generation"

attention_mask = torch.cat([attention_mask, attention_mask.new_zeros((attention_mask.shape[0], 1))], dim=-1)

dummy_token = torch.full(

(effective_batch_size, 1), self.config.pad_token_id, dtype=torch.long, device=input_ids.device

)

input_ids = torch.cat([input_ids, dummy_token], dim=1)

return {"input_ids": input_ids, "attention_mask": attention_mask}

class BertPredictionHeadTransform(nn.Module):

def __init__(self, config):

super().__init__()

self.dense = nn.Linear(config.hidden_size, config.hidden_size)

if isinstance(config.hidden_act, str):

self.transform_act_fn = ACT2FN[config.hidden_act]

else:

self.transform_act_fn = config.hidden_act

self.LayerNorm = nn.LayerNorm(config.hidden_size, eps=config.layer_norm_eps)

def forward(self, hidden_states):

hidden_states = self.dense(hidden_states)

hidden_states = self.transform_act_fn(hidden_states)

hidden_states = self.LayerNorm(hidden_states)

return hidden_states

class BertLMPredictionHead(nn.Module):

def __init__(self, config):

super().__init__()

self.transform = BertPredictionHeadTransform(config)

# The output weights are the same as the input embeddings, but there is

# an output-only bias for each token.

self.decoder = nn.Linear(config.hidden_size, config.vocab_size, bias=False)

self.bias = nn.Parameter(torch.zeros(config.vocab_size))

# Need a link between the two variables so that the bias is correctly resized with `resize_token_embeddings`

self.decoder.bias = self.bias

def forward(self, hidden_states):

hidden_states = self.transform(hidden_states)

hidden_states = self.decoder(hidden_states)

return hidden_states

class BertOnlyMLMHead(nn.Module):

def __init__(self, config):

super().__init__()

self.predictions = BertLMPredictionHead(config)

def forward(self, sequence_output):

prediction_scores = self.predictions(sequence_output)

return prediction_scores

2.执行代码文件 main.py

import os

import time

import numpy as np

import torch

import logging

from Config import Config

from DataManager import DataManager

from Trainer import Trainer

from Predictor import Predictor

if __name__ == '__main__':

config = Config()

os.environ["CUDA_VISIBLE_DEVICES"] = config.cuda_visible_devices

# 设置随机种子,保证结果每次结果一样

np.random.seed(1)

torch.manual_seed(1)

torch.cuda.manual_seed_all(1)

torch.backends.cudnn.deterministic = True

start_time = time.time()

# 数据处理

print('read data...')

dm = DataManager(config)

# 模式

if config.mode == 'train':

# 获取数据

print('data process...')

train_loader = dm.get_dataset(mode='train')

valid_loader = dm.get_dataset(mode='dev')

# 训练

trainer = Trainer(config)

trainer.train(train_loader, valid_loader)

elif config.mode == 'test':

# 测试

test_loader = dm.get_dataset(mode='test', sampler=False)

predictor = Predictor(config)

predictor.predict(test_loader)

else:

print("no task going on!")

print("you can use one of the following lists to replace the valible of Config.py. ['train', 'test', 'valid'] !")

3.模型的训练文件 Trainer.py

import os

import time

import random

import logging

import math

import numpy as np

import pandas as pd

import torch

from torch.nn import CrossEntropyLoss

from tqdm.auto import tqdm

from datasets import Dataset, load_dataset, load_metric

from torch.utils.data import DataLoader

from transformers import AdamW, AutoModelForSequenceClassification, get_scheduler

from transformers import BertModel, BertConfig

from model.BertForMaskedLM import BertForMaskedLM

from Config import Config

from transformers import DistilBertForMaskedLM

from transformers import AutoTokenizer, DataCollatorWithPadding, BertTokenizer, DistilBertTokenizer

class Trainer(object):

def __init__(self, config):

self.config = config

self.tokenizer = AutoTokenizer.from_pretrained(self.config.initial_pretrain_tokenizer)

def train(self, train_loader, valid_loader):

"""

预训练模型

"""

print('training start')

# 初始化配置

os.environ["CUDA_VISIBLE_DEVICES"] = self.config.cuda_visible_devices

device = torch.device(self.config.device)

# # 多卡通讯配置

# if torch.cuda.device_count() > 1:

# torch.distributed.init_process_group(backend='nccl',

# init_method=self.config.init_method,

# rank=0,

# world_size=self.config.world_size)

# torch.distributed.barrier()

# 初始化模型和优化器

print('model loading')

model = BertForMaskedLM.from_pretrained(self.config.initial_pretrain_model)

# model = DistilBertForMaskedLM.from_pretrained(self.config.initial_pretrain_model)

print(">>>>>>>> Model Structure >>>>>>>>")

for name,parameters in model.named_parameters():

print(name,':',parameters.size())

print(">>>>>>>> Model Structure >>>>>>>>\n")

optimizer = AdamW(model.parameters(), lr=self.config.learning_rate)

# 定义优化器配置

num_training_steps = self.config.num_epochs * len(train_loader)

lr_scheduler = get_scheduler(

"linear",

optimizer=optimizer,

num_warmup_steps=self.config.num_warmup_steps,

num_training_steps=num_training_steps

)

# 分布式训练

model.to(device)

if torch.cuda.device_count() > 1:

model = torch.nn.parallel.DistributedDataParallel(model,

find_unused_parameters=True)

#broadcast_buffers=True)

print('start to train')

model.train()

# loss_func = CrossEntropyLoss()

progress_bar = tqdm(range(num_training_steps))

loss_best = math.inf

for epoch in range(self.config.num_epochs):

for i, batch in enumerate(train_loader):

batch = {k:v.to(device) for k,v in batch.items()}

outputs = model(**batch)

# 计算loss

loss = outputs.loss

loss = loss.mean()

loss.backward()

optimizer.step()

lr_scheduler.step()

optimizer.zero_grad()

progress_bar.update(1)

if i % 500 == 0:

print('epoch:{0} iter:{1}/{2} loss:{3}'.format(epoch, i, len(train_loader), loss.item()))

# 模型保存

self.eval(valid_loader, model, epoch, device)

model_save = model.module if torch.cuda.device_count() > 1 else model

path = self.config.path_model_save + 'epoch_{}/'.format(epoch)

model_save.save_pretrained(path)

def eval(self, eval_dataloader, model, epoch, device):

losses = []

model.eval()

input = []

label = []

pred = []

for batch in eval_dataloader:

batch = {k:v.to(device) for k,v in batch.items()}

with torch.no_grad():

outputs = model(**batch)

loss = outputs.loss

loss = loss.unsqueeze(0)

losses.append(loss)

# 还原成token string

tmp_src = batch['input_ids'].cpu().numpy()

tmp_label = batch['labels'].cpu().numpy()

tmp_pred = torch.max(outputs.logits, -1)[1].cpu().numpy()

for i in range(len(tmp_label)):

line_l = tmp_label[i]

line_l_split = [ x for x in line_l if x not in [0]]

line_s = tmp_src[i]

line_s_split = line_s[:len(line_l_split)]

line_p = tmp_pred[i]

line_p_split = line_p[:len(line_l_split)]

tmp_s = self.tokenizer.convert_tokens_to_string(self.tokenizer.convert_ids_to_tokens(line_s_split))

tmp_lab = self.tokenizer.convert_tokens_to_string(self.tokenizer.convert_ids_to_tokens(line_l_split))

tmp_p = self.tokenizer.convert_tokens_to_string(self.tokenizer.convert_ids_to_tokens(line_p_split))

input.append(tmp_s)

label.append(tmp_lab)

pred.append(tmp_p)

# 计算困惑度

losses = torch.cat(losses)

losses_avg = torch.mean(losses)

perplexity = math.exp(losses_avg)

print('eval {0}: loss:{1} perplexity:{2}'.format(epoch, losses_avg.item(), perplexity))

for i in range(10):

print('-'*30)

print('input: {}'.format(input[i]))

print('label: {}'.format(label[i]))

print('pred : {}'.format(pred[i]))

return losses_avg

if __name__ == '__main__':

config = Config()

train(config)

# load_lm()

4. 数据预处理文件 DataManager.py

import os

import copy

import random

import math

import numpy as np

import pandas as pd

import torch

from tqdm.auto import tqdm

from datasets import Dataset, load_dataset, load_metric

from torch.utils.data import DataLoader

from transformers import AutoTokenizer, DataCollatorWithPadding, BertTokenizer, DistilBertTokenizer

from torch.utils.data import DataLoader, TensorDataset, RandomSampler

from torch.utils.data.distributed import DistributedSampler

class DataManager(object):

def __init__(self, config):

self.config = config

self.init_gpu_config()

def init_gpu_config(self):

"""

初始化GPU并行配置

"""

print('loading GPU config ...')

if self.config.mode == 'train' and torch.cuda.device_count() > 1:

torch.distributed.init_process_group(backend='nccl',

init_method=self.config.init_method,

rank=0,

world_size=self.config.world_size)

torch.distributed.barrier() # Make sure only the first process in distributed training process the dataset, and the others will use the cache

def get_dataset(self, mode='train', sampler=True):

"""

获取数据集

"""

# 读取tokenizer分词模型

tokenizer = AutoTokenizer.from_pretrained(self.config.initial_pretrain_tokenizer)

if mode=='train':

train_dataloader = self.data_process('train.txt', tokenizer)

return train_dataloader

elif mode=='dev':

eval_dataloader = self.data_process('dev.txt', tokenizer)

return eval_dataloader

else:

test_dataloader = self.data_process('test.txt', tokenizer, sampler=sampler)

return test_dataloader

def data_process(self, file_name, tokenizer, sampler=True):

"""

数据转换

"""

# 获取数据

text = self.open_file(self.config.path_datasets + file_name)#[:2000]

dataset = pd.DataFrame({'src':text, 'labels':text})

# dataframe to datasets

raw_datasets = Dataset.from_pandas(dataset)

# tokenizer.

tokenized_datasets = raw_datasets.map(lambda x: self.tokenize_function(x, tokenizer), batched=True) # 对于样本中每条数据进行数据转换

data_collator = DataCollatorWithPadding(tokenizer=tokenizer) # 对数据进行padding

tokenized_datasets = tokenized_datasets.remove_columns(["src"]) # 移除不需要的字段

tokenized_datasets.set_format("torch") # 格式转换

# 转换成DataLoader类

# train_dataloader = DataLoader(tokenized_datasets, shuffle=True, batch_size=config.batch_size, collate_fn=data_collator)

# eval_dataloader = DataLoader(tokenized_datasets_test, batch_size=config.batch_size, collate_fn=data_collator)

# sampler = RandomSampler(tokenized_datasets) if not torch.cuda.device_count() > 1 else DistributedSampler(tokenized_datasets)

# dataloader = DataLoader(tokenized_datasets, sampler=sampler, batch_size=self.config.batch_size, collate_fn=data_collator)

if sampler:

sampler = RandomSampler(tokenized_datasets) if not torch.cuda.device_count() > 1 else DistributedSampler(tokenized_datasets)

else:

sampler = None

dataloader = DataLoader(tokenized_datasets, sampler=sampler, batch_size=self.config.batch_size) #, collate_fn=data_collator , num_workers=2, drop_last=True

return dataloader

def tokenize_function(self, example, tokenizer):

"""

数据转换

"""

# 分词

token = tokenizer(example["src"], truncation=True, max_length=self.config.sen_max_length, padding='max_length') #config.padding)

label=copy.deepcopy(token.data['input_ids'])

token.data['labels'] = label

# 获取特殊字符ids

token_mask = tokenizer.mask_token

token_pad = tokenizer.pad_token

token_cls = tokenizer.cls_token

token_sep = tokenizer.sep_token

ids_mask = tokenizer.convert_tokens_to_ids(token_mask)

token_ex = [token_mask, token_pad, token_cls, token_sep]

ids_ex = [tokenizer.convert_tokens_to_ids(x) for x in token_ex]

# 获取vocab dict

vocab = tokenizer.vocab

vocab_int2str = { v:k for k, v in vocab.items()}

# mask机制

if self.config.whole_words_mask:

# whole words masking

mask_token = [ self.op_mask_wwm(line, ids_mask, ids_ex, vocab_int2str) for line in token.data['input_ids']]

else:

mask_token = [[self.op_mask(x, ids_mask, ids_ex, vocab) for i,x in enumerate(line)] for line in token.data['input_ids']]

# 验证mask后的字符长度

mask_token_len = len(set([len(x) for x in mask_token]))

assert mask_token_len==1, 'length of mask_token not equal.'

flag_input_label = [1 if len(x)==len(y) else 0 for x,y in zip(mask_token, label)]

assert sum(flag_input_label)==len(mask_token), 'the length between input and label not equal.'

token.data['input_ids'] = mask_token

return token

# def op_mask_wwm(self, tokens, ids_mask, ids_ex, vocab_int2str):

# """

# 基于全词mask

# """

# if len(tokens) <= 5:

# return tokens

# # string = [tokenizer.convert_ids_to_tokens(x) for x in tokens]

# line = tokens

# for i, token in enumerate(tokens):

# # 若在额外字符里,则跳过

# if token in ids_ex:

# line[i] = token

# continue

# # 采样替换

# if random.random()<=0.15:

# x = random.random()

# if x <= 0.80:

# # 获取词string

# token_str = vocab_int2str[token]

# if '##' not in token_str:

# # 若不含有子词标志

# line[i] = ids_mask

# # 后向寻找

# curr_i = i + 1

# flag = True

# while flag:

# # 判断当前词是否包含 ##

# token_index = tokens[curr_i]

# token_index_str = vocab_int2str[token_index]

# if '##' not in token_index_str:

# flag = False

# else:

# line[curr_i] = ids_mask

# curr_i += 1

# if x> 0.80 and x <= 0.9:

# # 随机生成整数

# while True:

# token = random.randint(0, len(vocab_int2str)-1)

# # 不再特殊字符index里,则跳出

# if token not in ids_ex:

# break

# return line

def op_mask(self, token, ids_mask, ids_ex, vocab):

"""

Bert的原始mask机制。

(1)85%的概率,保留原词不变

(2)15%的概率,使用以下方式替换

80%的概率,使用字符'[MASK]',替换当前token。

10%的概率,使用词表随机抽取的token,替换当前token。

10%的概率,保留原词不变。

"""

# 若在额外字符里,则跳过

if token in ids_ex:

return token

# 采样替换

if random.random()<=0.15:

x = random.random()

if x <= 0.80:

token = ids_mask

if x> 0.80 and x <= 0.9:

# 随机生成整数

while True:

token = random.randint(0, len(vocab)-1)

# 不再特殊字符index里,则跳出

if token not in ids_ex:

break

# token = random.randint(0, len(vocab)-1)

return token

def op_mask_wwm(self, tokens, ids_mask, ids_ex, vocab_int2str):

"""

基于全词mask

"""

if len(tokens) <= 5:

return tokens

# string = [tokenizer.convert_ids_to_tokens(x) for x in tokens]

# line = tokens

line = copy.deepcopy(tokens)

for i, token in enumerate(tokens):

# 若在额外字符里,则跳过

if token in ids_ex:

line[i] = token

continue

# 采样替换

if random.random()<=0.10:

x = random.random()

if x <= 0.80:

# 获取词string

token_str = vocab_int2str[token]

# 若含有子词标志

if '##' in token_str:

line[i] = ids_mask

# 前向寻找

curr_i = i - 1

flag = True

while flag:

# 判断当前词是否包含 ##

token_index = tokens[curr_i]

token_index_str = vocab_int2str[token_index]

if '##' not in token_index_str:

flag = False

line[curr_i] = ids_mask

curr_i -= 1

# 后向寻找

curr_i = i + 1

flag = True

while flag:

# 判断当前词是否包含 ##

token_index = tokens[curr_i]

token_index_str = vocab_int2str[token_index]

if '##' not in token_index_str:

flag = False

else:

line[curr_i] = ids_mask

curr_i += 1

else:

# 若不含有子词标志

line[i] = ids_mask

# 后向寻找

curr_i = i + 1

flag = True

while flag:

# 判断当前词是否包含 ##

token_index = tokens[curr_i]

token_index_str = vocab_int2str[token_index]

if '##' not in token_index_str:

flag = False

else:

line[curr_i] = ids_mask

curr_i += 1

if x> 0.80 and x <= 0.9:

# 随机生成整数

while True:

token = random.randint(0, len(vocab_int2str)-1)

# 不再特殊字符index里,则跳出

if token not in ids_ex:

break

# # 查看mask效果:int转换成string

# test = [vocab_int2str[x] for x in line]

# test_gt = [vocab_int2str[x] for x in tokens]

return line

def open_file(self, path):

"""读文件"""

text = []

with open(path, 'r', encoding='utf8') as f:

for line in f.readlines():#[:1000]:

line = line.strip()

text.append(line)

return text

5.模型的预测结果文件 Predictor.py

import os

from posixpath import sep

import time

import random

import logging

import math

import numpy as np

import pandas as pd

import torch

import torch.nn.functional as F

from apex import amp

from tqdm.auto import tqdm

from datasets import Dataset, load_dataset, load_metric

from torch.utils.data import DataLoader

from transformers import AdamW, AutoModelForSequenceClassification, get_scheduler, get_linear_schedule_with_warmup,AutoTokenizer

# from transformers import BertTokenizer, BertConfig, AutoConfig, BertForMaskedLM, DistilBertForMaskedLM, DistilBertTokenizer, AutoTokenizer

from model.BertForMaskedLM import BertForMaskedLM

from sklearn import metrics

from Config import Config

from utils.progressbar import ProgressBar

class Predictor(object):

def __init__(self, config):

self.config = config

# self.test_loader = test_loader

self.device = torch.device(self.config.device)

# 加载模型

self.load_tokenizer()

self.load_model()

def load_tokenizer(self):

"""

读取分词器

"""

print('loading tokenizer config ...')

self.tokenizer = AutoTokenizer.from_pretrained(self.config.initial_pretrain_tokenizer)

def load_model(self):

"""

加载模型及初始化模型参数

"""

print('loading model...%s' %self.config.path_model_predict)

self.model = BertForMaskedLM.from_pretrained(self.config.path_model_predict)

# 将模型加载到CPU/GPU

self.model.to(self.device)

self.model.eval()

def predict(self, test_loader):

"""

预测

"""

print('predict start')

# 初始化指标计算

progress_bar = ProgressBar(n_total=len(test_loader), desc='Predict')

src = []

label = []

pred = []

input = []

for i, batch in enumerate(test_loader):

# 推断

batch = {k:v.to(self.config.device) for k,v in batch.items()}

with torch.no_grad():

outputs = self.model(**batch)

outputs_pred = outputs.logits

# 还原成token string

tmp_src = batch['input_ids'].cpu().numpy()

tmp_label = batch['labels'].cpu().numpy()

tmp_pred = torch.max(outputs_pred, -1)[1].cpu().numpy()

for i in range(len(tmp_label)):

line_s = tmp_src[i]

line_l = tmp_label[i]

line_l_split = [ x for x in line_l if x not in [0]]

line_p = tmp_pred[i]

line_p_split = line_p[:len(line_l_split)]

tmp_s = self.tokenizer.convert_tokens_to_string(self.tokenizer.convert_ids_to_tokens(line_s))

tmp_lab = self.tokenizer.convert_tokens_to_string(self.tokenizer.convert_ids_to_tokens(line_l_split))

tmp_p = self.tokenizer.convert_tokens_to_string(self.tokenizer.convert_ids_to_tokens(line_p_split))

input.append(tmp_s)

label.append(tmp_lab)

pred.append(tmp_p)

progress_bar(i, {})

# 计算指标

total = 0

count = 0

for i,(s,t) in enumerate(zip(label, pred)):

if '[MASK]' in input[i]:

total += 1

if s==t:

count += 1

acc = count/max(1, total)

print('\nTask: acc=',acc)

# 保存

# Task 1

data = {'src':label, 'pred':pred, 'mask':input}

data = pd.DataFrame(data)

path = os.path.join(self.config.path_datasets, 'output')

if not os.path.exists(path):

os.mkdir(path)

path_output = os.path.join(path, 'pred_data.csv')

data.to_csv(path_output, sep='\t', index=False)

print('Task 1: predict result save: {}'.format(path_output))

6.损失计算文件 LossManager.py

from torch.nn import CrossEntropyLoss

class LossManager(object):

def __init__(self, loss_type='ce'):

self.loss_func = CrossEntropyLoss()

def compute(self,

input_x,

target,

hidden_emb_x=None,

hidden_emb_y=None,

alpha=0.5):

"""

计算loss

Args:

input: [N, C]

target: [N, ]

"""

loss = self.loss_func(input_x, target)

return loss

7.模型配置文件 Config.py

import os

import random

class Config(object):

def __init__(self):

self.mode = 'train'

# GPU配置

self.cuda_visible_devices = '0' # 可见的GPU

self.device = 'cuda:0' # master GPU

self.port = str(random.randint(10000,60000)) # 多卡训练进程间通讯端口

self.init_method = 'tcp://localhost:' + self.port # 多卡训练的通讯地址

self.world_size = 1 # 线程数,默认为1

# 训练配置

self.whole_words_mask = True # 使用是否whole words masking机制

self.num_epochs = 10 # 迭代次数

self.batch_size = 128 # 每个批次的大小

self.learning_rate = 3e-5 # 学习率

self.num_warmup_steps = 0.1 # warm up步数

self.sen_max_length = 96 # 句子最长长度

self.padding = True # 是否对输入进行padding

# 模型及路径配置

self.initial_pretrain_model = 'bert-base-uncased' # 加载的预训练分词器checkpoint,默认为英文。若要选择中文,替换成 bert-base-chinese

self.initial_pretrain_tokenizer = 'bert-base-uncased' # 加载的预训练模型checkpoint,默认为英文。若要选择中文,替换成 bert-base-chinese

self.path_model_save = './checkpoint/bert/' # 模型保存路径

self.path_datasets = './datasets/' # 数据集

self.path_log = './logs/'

self.path_model_predict = os.path.join(self.path_model_save, 'epoch_4')

8.进度条管理文件 progressbar.py

import time

class ProgressBar(object):

'''

custom progress bar(进度条)

Example:

>>> pbar = ProgressBar(n_total=30,desc='training')

>>> step = 2

>>> pbar(step=step)

'''

def __init__(self, n_total,width=30,desc = 'Training'):

self.width = width

self.n_total = n_total

self.start_time = time.time()

self.desc = desc

def __call__(self, step, info={}):

now = time.time()

current = step + 1

recv_per = current / self.n_total

bar = f'[{self.desc}] {current}/{self.n_total} ['

if recv_per >= 1:

recv_per = 1

prog_width = int(self.width * recv_per)

if prog_width > 0:

bar += '=' * (prog_width - 1)

if current< self.n_total:

bar += ">"

else:

bar += '='

bar += '.' * (self.width - prog_width)

bar += ']'

show_bar = f"\r{bar}"

time_per_unit = (now - self.start_time) / current

if current < self.n_total:

eta = time_per_unit * (self.n_total - current)

if eta > 3600:

eta_format = ('%d:%02d:%02d' %

(eta // 3600, (eta % 3600) // 60, eta % 60))

elif eta > 60:

eta_format = '%d:%02d' % (eta // 60, eta % 60)

else:

eta_format = '%ds' % eta

time_info = f' - ETA: {eta_format}'

else:

if time_per_unit >= 1:

time_info = f' {time_per_unit:.1f}s/step'

elif time_per_unit >= 1e-3:

time_info = f' {time_per_unit * 1e3:.1f}ms/step'

else:

time_info = f' {time_per_unit * 1e6:.1f}us/step'

show_bar += time_info

if len(info) != 0:

show_info = f'{show_bar} ' + \

"-".join([f' {key}: {value:.4f} ' for key, value in info.items()])

print(show_info, end='')

else:

print(show_bar, end='')

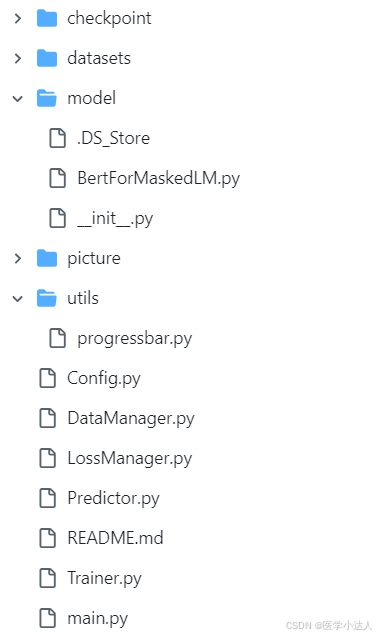

文件树:

环境配置:

numpy==1.24.1

pandas==1.5.2

scikit_learn==1.2.1

torch==1.8.0

tqdm==4.64.1

transformers==4.26.1

520

520