import torch
import torch.nn as nn
import torch.optim as optim
import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
from torch.utils.data import Dataset, DataLoader
from model import FullyConnectedNet  # import the model defined earlier

# Read the MNIST training data from a CSV file
train_data = pd.read_csv('D:/MNIST/data/train.csv', header=None)

# Use the GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Extract features and labels
# Features: every column from the second one on (index 1), cast to float32 and normalized to [0, 1] by dividing by 255.0
X = train_data.iloc[:, 1:].values.astype(np.float32) / 255.0
# Labels: the first column (index 0), cast to int64
y = train_data.iloc[:, 0].values.astype(np.int64)

# Convert the labels to one-hot encoding:
# np.eye builds an identity matrix, and indexing it with y selects the matching row for each label
num_classes = 10
y_one_hot = np.eye(num_classes)[y]

# Convert the data to PyTorch tensors and move them to the selected device (GPU or CPU)
X_train_tensor = torch.from_numpy(X).to(device)
y_train_tensor = torch.from_numpy(y_one_hot).to(device)
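The script one-hot encodes the labels and later recovers the class index with `torch.argmax(labels, dim=1)` before calling the loss. `nn.CrossEntropyLoss` already accepts integer class indices directly, so the one-hot step is optional. PyTorch 1.10 and later also accept class-probability targets with the same shape as the logits. The check below compares both target forms on random logits. The labels and sizes are illustrative assumptions, not MNIST data.

```python
import numpy as np
import torch
import torch.nn as nn

torch.manual_seed(0)

num_classes = 10
y = np.array([3, 0, 7, 7, 1], dtype=np.int64)  # illustrative labels

# One-hot encoding as in the script: row i of the identity matrix is the one-hot vector for class i
y_one_hot = np.eye(num_classes)[y]

# argmax along dim 1 recovers the original class indices
recovered = torch.argmax(torch.from_numpy(y_one_hot), dim=1)

logits = torch.randn(len(y), num_classes)
criterion = nn.CrossEntropyLoss()

# Loss with integer class-index targets (no one-hot needed)
loss_idx = criterion(logits, torch.from_numpy(y))
# Loss with one-hot float targets (class-probability form, PyTorch >= 1.10)
loss_prob = criterion(logits, torch.from_numpy(y_one_hot).float())

print(recovered.tolist())
print(torch.allclose(loss_idx, loss_prob))
```

With one-hot targets, the probability form reduces to the same negative log-likelihood as the index form. Passing `y` directly would therefore avoid both the `np.eye` table and the `argmax` calls in the training loop.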

# Create a PyTorch dataset
class TrainDataset(Dataset):
    def __init__(self, X, y):
        self.X = X  # feature data
        self.y = y  # label data

    def __len__(self):
        # The dataset length is the number of feature rows
        return len(self.X)

    def __getitem__(self, idx):
        # Return the features and label of the sample at index idx
        return self.X[idx], self.y[idx]

# Build the dataset object from the feature and label tensors
train_dataset = TrainDataset(X_train_tensor, y_train_tensor)
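`TrainDataset` pairs each feature row with its label. PyTorch's built-in `torch.utils.data.TensorDataset` does the same for tensors that share a first dimension. The sketch below uses small random tensors in place of the MNIST arrays, assuming 784 features per image and 10 classes.

```python
import torch
from torch.utils.data import TensorDataset, DataLoader

torch.manual_seed(0)

# Stand-in data: 100 "images" of 784 pixels in [0, 1) and integer labels 0-9
X = torch.rand(100, 784)
y = torch.randint(0, 10, (100,))

# TensorDataset indexes every tensor along dim 0, like TrainDataset.__getitem__
dataset = TensorDataset(X, y)
loader = DataLoader(dataset, batch_size=64, shuffle=True)

# Record the shape of each batch: 64 samples, then the remaining 36
batches = [(xb.shape, yb.shape) for xb, yb in loader]
print(len(dataset))  # 100
print(batches)
```

Because the last batch is not dropped by default (`drop_last=False`), an epoch over 100 samples yields one batch of 64 and one of 36.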

# Create the data loader: batches of 64 samples, reshuffled every epoch
batch_size = 64
train_dataloader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

# Instantiate the custom fully connected network and move it to the selected device
model = FullyConnectedNet().to(device)

# Define the loss function and optimizer
criterion = nn.CrossEntropyLoss().to(device)  # cross-entropy loss, moved to the selected device
optimizer = optim.Adam(model.parameters(), lr=0.001)  # Adam over the model's parameters, learning rate 0.001

# Train the model
model.train()
num_epochs = 30
losses = []
accuracies = []

for epoch in range(num_epochs):
    running_loss = 0.0
    correct = 0
    total = 0
    for inputs, labels in train_dataloader:
        # Convert the one-hot labels back to class indices
        targets = torch.argmax(labels, dim=1)

        optimizer.zero_grad()               # reset the gradients
        outputs = model(inputs)             # forward pass
        loss = criterion(outputs, targets)  # loss against the class indices
        loss.backward()                     # backpropagate to compute gradients
        optimizer.step()                    # update the parameters

        running_loss += loss.item()         # accumulate the batch loss
        predicted = outputs.argmax(dim=1)   # predicted class = index of the largest output
        total += labels.size(0)             # accumulate the number of samples
        correct += (predicted == targets).sum().item()  # accumulate correct predictions

    epoch_loss = running_loss / len(train_dataloader)  # mean batch loss for this epoch
    epoch_accuracy = correct / total                   # accuracy for this epoch
    losses.append(epoch_loss)
    accuracies.append(epoch_accuracy)
    # Print per-epoch statistics
    print(f'Epoch {epoch + 1}, Loss: {epoch_loss:.4f}, Accuracy: {epoch_accuracy:.4f}')
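For each batch, the loop counts a prediction as correct when the index of the largest output matches the label's class index. The sketch below applies the same bookkeeping to two tiny hand-made batches of 3-class logits. The values are arbitrary and chosen only for illustration.

```python
import torch

# Two hypothetical batches: (logits for 3 classes, integer class labels)
batches = [
    (torch.tensor([[2.0, 0.1, -1.0], [0.0, 3.0, 1.0]]), torch.tensor([0, 2])),
    (torch.tensor([[0.5, 0.2, 0.9]]), torch.tensor([2])),
]

correct = 0
total = 0
for outputs, labels in batches:
    predicted = outputs.argmax(dim=1)              # index of the largest logit per row
    total += labels.size(0)                        # samples in this batch
    correct += (predicted == labels).sum().item()  # matching predictions in this batch

accuracy = correct / total
print(correct, total, accuracy)
```

The first batch predicts classes 0 and 1, so only one of its two samples is correct. The second batch predicts class 2 correctly, giving 2 correct out of 3.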

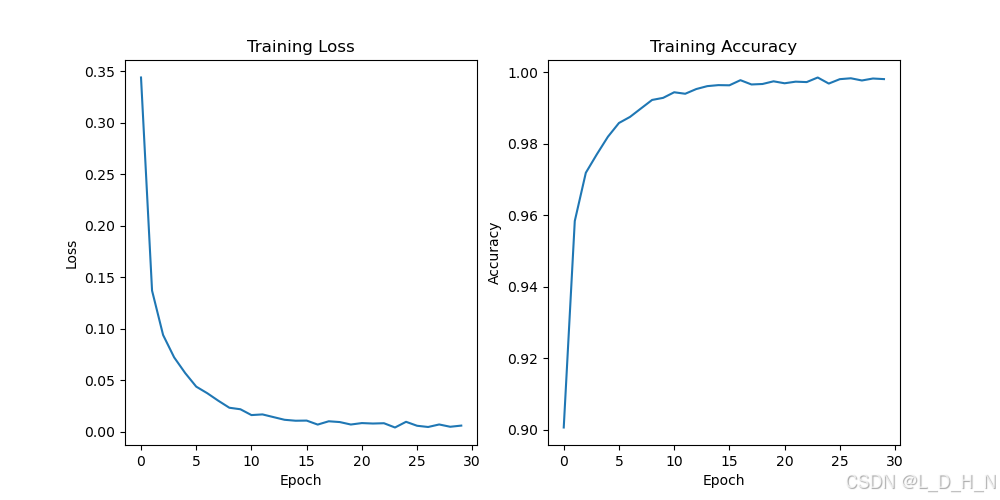

# Visualize the training loss and accuracy
plt.figure(figsize=(10, 5))

plt.subplot(1, 2, 1)
plt.plot(losses)
plt.title('Training Loss')
plt.xlabel('Epoch')
plt.ylabel('Loss')

plt.subplot(1, 2, 2)
plt.plot(accuracies)
plt.title('Training Accuracy')
plt.xlabel('Epoch')
plt.ylabel('Accuracy')

plt.show()

# Save the model parameters
torch.save(model.state_dict(), 'mnist_model.pth')
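`torch.save(model.state_dict(), ...)` stores only the parameters, so reloading them requires building the same architecture first. `FullyConnectedNet` is defined in an earlier part of the article, so this sketch uses a hypothetical stand-in network (784 → 128 → 10) and a temporary file. In practice you would substitute the real class and `'mnist_model.pth'`.

```python
import os
import tempfile

import torch
import torch.nn as nn

def make_net():
    # Hypothetical stand-in for FullyConnectedNet: 784 inputs -> 10 class scores
    return nn.Sequential(nn.Linear(784, 128), nn.ReLU(), nn.Linear(128, 10))

torch.manual_seed(0)
trained = make_net()
restored = make_net()  # same architecture, different random initial weights

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "mnist_model.pth")
    torch.save(trained.state_dict(), path)  # save the parameters only
    # Load the saved parameters into the freshly built network
    restored.load_state_dict(torch.load(path, map_location="cpu"))

restored.eval()  # disable training-only behavior such as dropout

x = torch.rand(4, 784)  # four fake flattened images
with torch.no_grad():   # no gradient tracking is needed for inference
    same = torch.equal(trained(x), restored(x))
    predictions = restored(x).argmax(dim=1)
print(same, predictions.shape)
```

`map_location` lets a checkpoint saved on a GPU be loaded on a CPU-only machine.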

Training results:

Epoch 1, Loss: 0.3438, Accuracy: 0.9006

Epoch 2, Loss: 0.1371, Accuracy: 0.9584

Epoch 3, Loss: 0.0940, Accuracy: 0.9719

Epoch 4, Loss: 0.0723, Accuracy: 0.9771

Epoch 5, Loss: 0.0571, Accuracy: 0.9820

Epoch 6, Loss: 0.0439, Accuracy: 0.9858

Epoch 7, Loss: 0.0375, Accuracy: 0.9875

Epoch 8, Loss: 0.0302, Accuracy: 0.9899

Epoch 9, Loss: 0.0234, Accuracy: 0.9923

Epoch 10, Loss: 0.0220, Accuracy: 0.9929

Epoch 11, Loss: 0.0163, Accuracy: 0.9944

Epoch 12, Loss: 0.0170, Accuracy: 0.9940

Epoch 13, Loss: 0.0143, Accuracy: 0.9953

Epoch 14, Loss: 0.0118, Accuracy: 0.9961

Epoch 15, Loss: 0.0108, Accuracy: 0.9964

Epoch 16, Loss: 0.0110, Accuracy: 0.9964

Epoch 17, Loss: 0.0071, Accuracy: 0.9978

Epoch 18, Loss: 0.0103, Accuracy: 0.9966

Epoch 19, Loss: 0.0095, Accuracy: 0.9967

Epoch 20, Loss: 0.0072, Accuracy: 0.9975

Epoch 21, Loss: 0.0086, Accuracy: 0.9969

Epoch 22, Loss: 0.0081, Accuracy: 0.9974

Epoch 23, Loss: 0.0084, Accuracy: 0.9973

Epoch 24, Loss: 0.0043, Accuracy: 0.9986

Epoch 25, Loss: 0.0098, Accuracy: 0.9969

Epoch 26, Loss: 0.0060, Accuracy: 0.9981

Epoch 27, Loss: 0.0048, Accuracy: 0.9983

Epoch 28, Loss: 0.0072, Accuracy: 0.9977

Epoch 29, Loss: 0.0050, Accuracy: 0.9983

Epoch 30, Loss: 0.0061, Accuracy: 0.9981
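The accuracy in this log is measured on the same batches the model is being fitted on, with the model in training mode, so it is likely an optimistic estimate. A common complement is to hold out part of the data with `torch.utils.data.random_split` and evaluate it under `model.eval()` and `torch.no_grad()`. The sketch below is a minimal version of that idea. It uses random stand-in data and a hypothetical small network in place of the article's MNIST arrays and `FullyConnectedNet`; the 800/200 split is also an assumption.

```python
import torch
import torch.nn as nn
from torch.utils.data import TensorDataset, DataLoader, random_split

def evaluate(model, loader, criterion, device):
    """Return (mean loss per sample, accuracy) over a loader without updating the model."""
    model.eval()
    total_loss, correct, total = 0.0, 0, 0
    with torch.no_grad():
        for inputs, labels in loader:
            inputs, labels = inputs.to(device), labels.to(device)
            outputs = model(inputs)
            # criterion returns the batch mean; multiply by batch size to get a per-sample sum
            total_loss += criterion(outputs, labels).item() * labels.size(0)
            correct += (outputs.argmax(dim=1) == labels).sum().item()
            total += labels.size(0)
    model.train()  # restore training mode for later epochs
    return total_loss / total, correct / total

torch.manual_seed(0)
device = torch.device("cpu")
# Stand-in data: 1000 random "images" with random labels (not real MNIST)
dataset = TensorDataset(torch.rand(1000, 784), torch.randint(0, 10, (1000,)))
# Hold out 20% for validation; the seeded generator makes the split reproducible
train_set, val_set = random_split(dataset, [800, 200], generator=torch.Generator().manual_seed(42))
val_loader = DataLoader(val_set, batch_size=64)

# Hypothetical stand-in for FullyConnectedNet
model = nn.Sequential(nn.Linear(784, 128), nn.ReLU(), nn.Linear(128, 10)).to(device)
val_loss, val_acc = evaluate(model, val_loader, nn.CrossEntropyLoss(), device)
print(f"Val Loss: {val_loss:.4f}, Val Accuracy: {val_acc:.4f}")
```

Weighting each batch loss by its size makes the reported loss a true per-sample mean. The training script instead averages batch means, which gives the smaller final batch the same weight as a full one.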

Visualized training results:
